Abstract

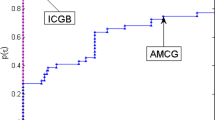

Gradient methods are popular due to the fact that only gradient of the objective function is required. On the other hand, the methods can be very slow if the objective function is very ill-conditioned. One possible reason for the inefficiency of the gradient methods is that a constant criterion, which aims only at reducing the function value, has been used in choosing the steplength, and this leads to a stable dynamic system giving slow convergence. To overcome this, we propose a new gradient method with multiple damping, which works on the objective function and the norm of the gradient vector simultaneously. That is, the proposed method is constructed by combining damping with line search strategies, in which an individual adaptive parameter is proposed to damp the gradient vector while line searches are used to reduce the function value. Global convergence of the proposed method is established under both backtracking and nonmonotone line search. Finally, numerical results show that the proposed algorithm performs better than some well-known CG-based methods.

Similar content being viewed by others

References

Akaike, H.: On a successive transformation of probability distribution and its applications to analysis of the optimum gradient method. Ann. Inst. Stat. Math. Tokyo 11, 1–17 (1959)

Andrei, N.: An unconstrained optimization test functions collection. J. Adv. Model Optim. 10, 147–161 (2008)

Antonelli, L., De Simone, V., di Serafino, D.: On the application of the spectral projected gradient method in image segmentation. J. Math. Imaging Vis. 54, 106–116 (2016)

Bonettini, S., Zanella, R., Zanni, L.: A scaled gradient projection method for constrained image deblurring. Inverse Probl. 25, 015002 (2009)

Bongartz, I., Conn, A.R., Gould, N.I.M., Toint, PhL: CUTE: constrained and unconstrained testing environment. ACM Trans. Math. Softw. 21, 123–160 (1995)

Byrd, R.H., Nocedal, J.: A tool for the analysis of quasi-Newton methods with application to unconstrained minimization. SIAM J. Numer. Anal. 26, 727–739 (1989)

Cauchy, A.: Méthode générale pour la résolution des systèms d’ équations simultanées. Comp. Rend. Sci. Paris 25, 46–89 (1847)

Dai, Y., Yuan, Y.: Analysis of monotone gradient methods. J. Ind. Manag. Optim. 1, 181–192 (2005)

De Asmundis, R., di Serafino, D., Riccio, F., Toraldo, G.: On spectral properties of steepest descent methods. IMA J. Numer. Anal. 33, 1416–1435 (2013)

De Asmundis, R., di Serafino, D., Hager, W., Toraldo, G., Zhang, H.: An efficient gradient method using the Yuan steplength. Comput. Optim. Appl. 59, 541–563 (2014)

De Asmundis, R., di Serafino, D., Landi, G.: On the regularizing behavior of the SDA and SDC gradient methods in the solution of linear ill-posed problems. J. Comput. Appl. Math. 302, 81–93 (2016)

di Serafino, D., Ruggiero, V., Toraldo, G., Zanni, L.: On the steplength selection in gradient methods for unconstrained optimization. Appl. Math. Comput. 318, 176–195 (2018)

Dolan, E., Moré, J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Fletcher, R.: Practical Methods of Optimization Vol 1: Unconstrained Optimization. Wiley, New York (1987)

Fletcher, R.: A limited memory steepest descent method. Math. Program. 135, 413–436 (2012)

Fletcher, R., Reeves, C.: Function minimization by conjugate gradients. Comput. J. 7, 149–154 (1964)

Glunt, W., Hayden, T.L., Raydan, M.: Molecular conformations from distance matrices. J. Comput. Chem. 14, 114–120 (1993)

Gonzaga, C., Schneider, R.M.: On the steepest descent algorithm for quadratic functions. Comput. Optim. Appl. 63, 523–542 (2016)

Grippo, L., Lampariello, F., Lucidi, S.: A nonmonotone line search techniques for Newton’s method. SIAM J. Numer. Anal. 23(19), 707–716 (1986)

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16(1), 170–192 (2005)

Kelley, C.T.: Solving Nonlinear Equations with Newton’s Method Number 1 in Fundamental Algorithms for Numerical Calculations. SIAM, Philadelphia (2003)

Liu, Y., Storey, C.: Efficient generalized conjugate gradient algorithms, part 1: theory. J. Optim. Theory Appl. 69, 129–137 (1991)

Luenberger, D.G.: Linear and Nonlinear Programming, 2nd edn. Addison-Wesley, Reading (1984)

Luengo, F., Raydan, M., Glunt, W., HaydenPreconditioned, T.L.: Spectral gradient method. Numer. Algorithms 30, 241–258 (2002)

Polak, E., Ribiere, G.: Note sur la convergence de directions conjugees, Rev. Francaise Informat. Recherche Opertionelle 3e Annee 16, 35–43 (1969)

Polyak, B.T.: The conjugate gradient method in extreme problems. USSR Comput. Math. Math. Phys. 9, 94–112 (1969)

Raydan, M.: The Barzilai and Borwein gradient method for the large scale unconstrained optimization. SIAM J. Optim. 7, 26–33 (1997)

Acknowledgements

The work was supported by the Malaysia Fundamental Research Grant Scheme (FRGS/2/2013/ST06/UPM/02/1) and the first author would also like to acknowledge Yayasan Sultan Iskandar Johor Malaysia for the financial support to attend The 10th International Conference on Optimization: Techniques and Applications (ICOTA 10) where part of this material was presented orally.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Sim, H.S., Leong, W.J. & Chen, C.Y. Gradient method with multiple damping for large-scale unconstrained optimization. Optim Lett 13, 617–632 (2019). https://doi.org/10.1007/s11590-018-1247-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-018-1247-9