Abstract

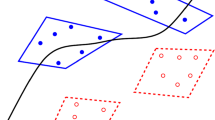

Kernelized support vector machines (SVMs) are among the most widely used classification methods. However, in contrast to linear SVMs, the computation time required to train such a machine becomes a bottleneck on large data sets. To mitigate this shortcoming of kernel SVMs, many approximate training algorithms have been developed. While most of these methods claim to be much faster than the state-of-the-art solver LIBSVM, a thorough comparative study is missing. We aim to fill this gap. We choose several well-known approximate SVM solvers and compare their performance on a number of large benchmark data sets. Our focus is to analyze the trade-off between prediction error and runtime for different learning and accuracy parameter settings. This includes simple subsampling of the data, the poor man's approach to handling large-scale problems. We employ model-based multi-objective optimization, which allows us to tune the parameters of the learning machine and the solver over the full range of accuracy/runtime trade-offs. We analyze the solvers, and the differences between them, by studying and comparing the Pareto fronts formed by the two objectives, classification error and training time. Unsurprisingly, given more runtime most solvers are able to find more accurate solutions, i.e., achieve a higher prediction accuracy. It turns out that LIBSVM with subsampling of the data is a strong baseline. Some solvers systematically outperform others, which allows us to give concrete recommendations of when to use which solver.
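To make the notion of a Pareto front over the two objectives concrete, the following is a minimal sketch, not the study's own tooling. It assumes each solver run is summarized as a pair of training time and classification error, both to be minimized. The function name `pareto_front` and the numbers in `runs` are hypothetical and chosen only for illustration.

```python
from typing import List, Tuple

# One solver run: (training_time_seconds, classification_error).
# Both objectives are minimized.
Point = Tuple[float, float]


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points, sorted by increasing training time."""
    front: List[Point] = []
    best_error = float("inf")
    # After sorting by time (ties broken by error), a point is non-dominated
    # exactly when its error is strictly lower than every error seen so far.
    for time, error in sorted(set(points)):
        if error < best_error:
            front.append((time, error))
            best_error = error
    return front


# Hypothetical results, not taken from the study.
runs = [(1.0, 0.30), (2.0, 0.25), (2.5, 0.27), (5.0, 0.10), (6.0, 0.10), (8.0, 0.08)]
front = pareto_front(runs)
print(front)
```

In this toy data, the run at 2.5 s is dominated by the faster and more accurate run at 2.0 s, and the run at 6.0 s is dominated by the equally accurate but faster run at 5.0 s, so neither appears on the front.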

Notes

In our actual experiments we will focus on the case of two objectives, namely SVM prediction error and training time.

As an SVM has to be fitted on a large data set

Note that we do not normalize to zero mean, as this might destroy sparsity.

Refer to http://largescalesvm.de/htmlplots/ for the extensive results and plots of all solvers on all data sets.
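The note on normalization above can be illustrated with a small sketch. It assumes a max-abs scaling as the non-centering normalization, which the notes do not specify, and compares it with mean-centering on a hypothetical sparse feature column. The helper names `scale_by_max_abs` and `center` are illustrative only.

```python
from typing import List


def scale_by_max_abs(column: List[float]) -> List[float]:
    """Scale a feature column into [-1, 1] without shifting it (zeros stay zero)."""
    max_abs = max(abs(v) for v in column)
    if max_abs == 0.0:
        return list(column)
    return [v / max_abs for v in column]


def center(column: List[float]) -> List[float]:
    """Subtract the mean, which turns zero entries into nonzero ones."""
    mean = sum(column) / len(column)
    return [v - mean for v in column]


# Hypothetical sparse feature column: 6 of 8 entries are zero.
feature = [0.0, 0.0, 4.0, 0.0, 2.0, 0.0, 0.0, 0.0]

scaled = scale_by_max_abs(feature)
centered = center(feature)

zeros_scaled = sum(v == 0.0 for v in scaled)
zeros_centered = sum(v == 0.0 for v in centered)
print(zeros_scaled, zeros_centered)
```

Scaling alone keeps every zero entry at zero, so a sparse representation stays sparse, whereas centering shifts all entries by the mean and leaves no zeros in this example.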

Acknowledgments

We acknowledge support by the Mercator Research Center Ruhr under Grant Pr-2013-0015, "Support-Vektor-Maschinen für extrem große Datenmengen", and partial support by the German Research Foundation (DFG) within the Collaborative Research Center SFB 823, "Statistical modelling of nonlinear dynamic processes", Project C2.

Cite this article

Horn, D., Demircioğlu, A., Bischl, B. et al. A comparative study on large scale kernelized support vector machines. Adv Data Anal Classif 12, 867–883 (2018). https://doi.org/10.1007/s11634-016-0265-7

DOI: https://doi.org/10.1007/s11634-016-0265-7

Keywords

- Support vector machine

- Multi-objective optimization

- Supervised learning

- Machine learning

- Large scale

- Nonlinear SVM

- Parameter tuning