Abstract

A new contrast enhancement algorithm is proposed, based on the fact that, for conventional histogram equalization, a uniform input histogram produces an equalized output histogram. Hence, before applying histogram equalization, we modify the input histogram so that it is close to a uniform histogram while remaining close to the original one. Thus, the proposed method can improve the contrast while preserving original image features. The main steps of the new algorithm are adaptive gamma transform, exposure-based histogram splitting, and histogram addition. The gamma transform restrains histogram spikes to avoid over-enhancement and noise artifacts. Histogram splitting preserves mean brightness, and histogram addition controls histogram pits. Extensive experiments are conducted on 300 test images. The results are evaluated subjectively as well as by the DE, PSNR, EBCM, GMSD, and MCSD metrics. On all of these except PSNR, the proposed algorithm improves on the second-best algorithm, ESIHE, by 2.89, 9.83, 28.32, and 26.38%, respectively. That is to say, the overall image quality is better.

1 Introduction

Image contrast enhancement is a fundamental pre-processing technique for many applications, such as surveillance systems [1], medical image processing [2], and satellite image analysis [3, 4]. Conventional histogram equalization (HE) can globally enhance the target image. However, HE tends to over-enhance images with a large proportion of similar regions, which results in intensity saturation or noise artifacts. Therefore, approaches that partition the input histogram into several sub-histograms and enhance them separately [5,6,7,8,9,10,11,12] have long been used to overcome these shortcomings and to enhance the input image both locally and globally. Brightness-preserving bi-histogram equalization (BBHE) [5] is the earliest work to preserve mean brightness while improving the contrast. Based on the mean brightness, BBHE divides the input histogram into two sub-histograms and applies HE to each sub-histogram independently. Dualistic sub-image histogram equalization (DSIHE) [6], proposed by Wang et al., divides the input histogram into two sub-histograms based on the median value instead of the mean value. Other improvements of BBHE include minimum mean brightness error bi-HE (MMBEBHE) [7], recursive mean-separate HE (RMSHE) [8], and brightness-preserving dynamic HE (BPDHE) [9].

HE performs contrast enhancement based on the cumulative distribution function (CDF). Let \(I_1\) represent an input image of size \(M\times N\) with gray levels in \([0,L-1]\). The CDF of the image is defined by

\[C(k)=\frac{1}{MN}\sum_{i=0}^{k}h(i),\quad k=0,1,\ldots ,L-1, \qquad (1)\]

where h(k) is the input pixel intensity frequency at gray-level k. Based on the CDF defined by (1), HE maps the input gray-level k to the output gray-level T(k) by the following transform function

\[T(k)=\lfloor (L-1)\,C(k)+0.5 \rfloor . \qquad (2)\]

The operator \(\lfloor * \rfloor \) means rounding down. From (1) and (2), ignoring the rounding, we can infer the increment in the output gray-level T(k) as

\[\bigtriangleup T(k)=T(k)-T(k-1)=(L-1)\frac{h(k)}{MN}. \qquad (3)\]
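As an illustration of the CDF-based mapping described above, the following is a minimal NumPy sketch of conventional HE. The function name `equalize_histogram`, the `levels` parameter, and the `+ 0.5` rounding offset inside the floor are assumptions made for this sketch; they are not taken from the authors' implementation.

```python
import numpy as np

def equalize_histogram(image, levels=256):
    """Conventional HE for a non-negative integer image with values in [0, levels-1]."""
    # h(k): number of pixels at each gray level k
    hist = np.bincount(image.ravel(), minlength=levels)
    # C(k): cumulative distribution function, which ends at 1.0
    cdf = np.cumsum(hist) / image.size
    # T(k): floor((L-1) * C(k) + 0.5); the 0.5 offset is an assumed rounding choice
    transform = np.floor((levels - 1) * cdf + 0.5).astype(np.int64)
    # Apply the lookup table to every pixel
    return transform[image], transform

# Tiny example with L = 4 gray levels and a perfectly uniform histogram
image = np.array([[0, 0, 1, 1], [2, 2, 3, 3]])
equalized, transform = equalize_histogram(image, levels=4)
```

Because the step between consecutive values of `transform` follows the bin height h(k), a tall bin claims a wide band of output levels, which is the behavior the next section sets out to control.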

To overcome the shortcomings of HE, splitting the input histogram into sub-histograms and applying HE to each of them is a rational choice [5,6,7,8,9]. Some methods apply a nonlinear transform such as gamma correction [13, 14]; some clip histogram spikes, as in exposure-based sub-image histogram equalization (ESIHE) [10] and median-mean-based sub-image-clipped histogram equalization (MMSICHE) [12], both by Singh and Kapoor; and some equalize multiple sub-histograms, as in adaptive image enhancement based on bi-histogram equalization (AIEBHE) by Tang and Isa [11]. Other researchers use a 2D histogram, as in two-dimensional histogram equalization (TDHE) [15] and 2D histogram equalization (2DHE) by Kim et al. [16]. Each of these methods avoids some of the shortcomings.

However, the above methods do not solve the problems caused by histogram spikes and pits, and they cannot balance global and local enhancement of the image. By absorbing and integrating previous research results, we propose a new image contrast enhancement algorithm combining adaptive gamma transform, proportional histogram splitting, and standard deviation-based histogram addition. The gamma transform adaptively restrains histogram spikes, histogram addition fills histogram pits, and proportional histogram splitting preserves mean brightness. The new algorithm balances local and global contrast enhancement, feature preservation, and overall quality. The rest of this paper is organized as follows: Sect. 2 presents the new algorithm, Sect. 3 provides the experimental results and discussion, and Sect. 4 concludes the paper.

2 The proposed algorithm

Equation (3) implies that the gray-level increment \(\bigtriangleup T(k)\) is proportional to the pixel intensity frequency h(k). Therefore, if the input histogram contains large spikes, the corresponding output gray levels occupy broad grayscale bands and squeeze the gray bins that fall in histogram pits. This causes the intrinsic shortcomings of HE: over-enhancement in some regions (those with high-frequency bins) and contrast loss in others. On the other hand, if the input histogram h is close to a uniformly distributed histogram [that is, h(k) is almost the same for all k], \(\bigtriangleup T(k)\) will be almost the same for all k too; that is, the output histogram will be almost uniform. Therefore, before applying HE, we can modify the input histogram to be as close to a uniformly distributed histogram as possible to fully exploit the dynamic range [14, 17].

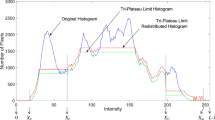

Based on the above considerations, the proposed algorithm consists of three steps, that is, (i) adaptive gamma transform of the input histogram, (ii) histogram splitting and histogram bins redistribution between sub-histograms, and (iii) histogram addition and equalization. Adaptive gamma transform is employed to smooth histogram spikes, histogram addition is applied to fill the histogram pits, and histogram splitting is introduced to preserve the histogram mean. The gamma transform is defined as

where c and \(\gamma \) are positive constants, h represents the original input histogram, and \(\tilde{h}\) is the corresponding output. Applying the gamma transform to the input histogram smooths histogram spikes and restrains noise artifacts. Varying the value of \(\gamma \) yields different levels of smoothing and restraint. Since different images require different levels of smoothing and restraint, the proposed algorithm calculates the parameter \(\gamma \) adaptively according to the image intensity exposure. Thus, before the gamma transform, the intensity exposure threshold is obtained by [12]

For the proposed algorithm, gamma is defined by

The first column of Fig. 1 shows the original image and its histogram. The second column shows the results of conventional HE, and the third column presents the results of HE with the adaptive gamma transform. Comparing the original histogram with that of adaptive gamma transform-based HE and that of conventional HE, we observe that the original histogram features are well preserved in the output histogram and that the over-enhancement no longer occurs. We can also observe that HE with the adaptive gamma transform reduces the noise artifacts, as shown in the upper right corner of the processed images.
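The effect of the gamma transform on histogram spikes can be sketched as follows. This is only an illustration under stated assumptions: `gamma` is passed in as a plain parameter because the adaptive rule that derives it from the exposure is not reproduced here, `c` defaults to 1, and `spike_ratio` is a hypothetical helper introduced to quantify how dominant the tallest bin is. For bin counts above 1, an exponent between 0 and 1 compresses tall bins more than short ones.

```python
import numpy as np

def gamma_transform_histogram(hist, gamma, c=1.0):
    """Return c * hist**gamma applied bin by bin."""
    hist = np.asarray(hist, dtype=np.float64)
    return c * np.power(hist, gamma)

def spike_ratio(hist):
    """Hypothetical helper: tallest bin divided by the mean bin height."""
    hist = np.asarray(hist, dtype=np.float64)
    return hist.max() / hist.mean()

# One dominant spike next to three small bins
hist = np.array([10000, 100, 100, 100])
smoothed = gamma_transform_histogram(hist, gamma=0.5)

# The spike is less dominant after the transform
before, after = spike_ratio(hist), spike_ratio(smoothed)
```

With `gamma=1.0` and `c=1.0` the histogram is returned unchanged, so the exponent directly controls how strongly the spikes are restrained.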

Based on the definition of exposure threshold, the splitting threshold is defined as

where \(T_s\) is the threshold for splitting \(\tilde{h}\) into the under-exposed sub-histogram \(h_u\) and the over-exposed sub-histogram \(h_o\).

Unlike the aforementioned contrast enhancement methods [5,6,7,8,9,10,11,12], which enhance each sub-histogram within the splitting thresholds, we redistribute the under-exposed input sub-histogram from the range \([0,T_s]\) to the output range [0, U], and the over-exposed sub-histogram from \([T_s+1,L-1]\) to the output range \([U+1,L-1]\), to fully exploit the dynamic range. Here U is the output threshold between the under-exposed and over-exposed sub-histograms and is calculated as

The fourth column of Fig. 1 presents the results of splitting the input histogram and enhancing the sub-histograms independently. We can observe that histogram splitting alone can preserve the histogram shape, but the noise artifacts are still obvious.
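The redistribution of the two sub-histograms onto separate output ranges can be sketched as below. The split point `t_s` and the output threshold `u` are supplied by the caller, since their defining formulas are not reproduced here; the function names and the `+ 0.5` rounding offset are likewise assumptions of this sketch rather than the authors' code. It requires `0 <= t_s < L-1` and `0 <= u < L-1`.

```python
import numpy as np

def equalize_to_range(sub_hist, out_lo, out_hi):
    """Equalize one sub-histogram onto the output levels [out_lo, out_hi]."""
    sub_hist = np.asarray(sub_hist, dtype=np.float64)
    total = sub_hist.sum()
    # An empty sub-histogram maps everything to out_lo
    cdf = np.cumsum(sub_hist) / total if total > 0 else np.zeros(len(sub_hist))
    return np.floor(out_lo + (out_hi - out_lo) * cdf + 0.5).astype(np.int64)

def bi_histogram_transform(hist, t_s, u):
    """Build T(k) for all k: [0, t_s] -> [0, u] and [t_s+1, L-1] -> [u+1, L-1]."""
    hist = np.asarray(hist, dtype=np.float64)
    levels = len(hist)
    lower = equalize_to_range(hist[: t_s + 1], 0, u)
    upper = equalize_to_range(hist[t_s + 1:], u + 1, levels - 1)
    return np.concatenate([lower, upper])

# L = 8 levels; the two darkest levels hold half of the pixels
hist = [4, 4, 1, 1, 1, 1, 2, 2]
transform = bi_histogram_transform(hist, t_s=1, u=3)
```

In this toy case the input split at level 1 is moved to output level 3, so the densely populated dark sub-histogram receives half of the output range instead of a quarter of it.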

To deal with the detail loss caused by histogram pits, we add a term to each of \(h_u\) and \(h_o\) to further smooth the histogram \(\tilde{h}\). The two added terms are the standard deviations of \(h_u\) and \(h_o\), respectively.

where m and n are the numbers of gray levels in sub-histograms \(h_u\) and \(h_o\), respectively, and \(\psi \) and \(\nu \) are the corresponding sub-histogram means. Finally, the sub-histograms for applying HE are defined by

Note that the output histograms for \(\tilde{h_u}\) and \(\tilde{h_o}\) lie in the ranges \([0,T_s]\) and \([T_s+1,L-1]\), respectively.

The fifth column of Fig. 1 presents the results of adding a term (here, the standard deviation of the input histogram) to the original histogram and applying HE to the modified histogram. We can observe that histogram addition can also preserve the histogram shape. By combining adaptive gamma transform, histogram splitting, and histogram addition, the output histogram will be close to a uniformly distributed histogram while remaining as close as possible to the input histogram. Thus, the output image will be a visually pleasing enhanced image.
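The histogram addition step can be illustrated with a short sketch. It assumes that the standard deviation of the bin counts is added as the same constant to every bin of a sub-histogram and that the population form of the standard deviation (dividing by the number of bins) is used; both are assumptions of this example, as is the function name `add_std_term`.

```python
import numpy as np

def add_std_term(sub_hist):
    """Add the standard deviation of the bin counts to every bin."""
    sub_hist = np.asarray(sub_hist, dtype=np.float64)
    sigma = sub_hist.std()  # population form: divides by the number of bins
    return sub_hist + sigma

# A sub-histogram with one occupied bin and three empty bins (pits)
sub_hist = np.array([0, 0, 8, 0])
filled = add_std_term(sub_hist)

# Share of the total mass held by the tallest bin, before and after
share_before = sub_hist.max() / sub_hist.sum()
share_after = filled.max() / filled.sum()
```

After the addition no bin is empty, so every input gray level receives a nonzero increment in the equalization mapping, and the tallest bin holds a smaller share of the total.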

3 Performance evaluation and discussion

To extensively evaluate the performance of the proposed algorithm, comparative experiments are conducted on 300 test images: (i) 25 reference images from TID2013 [18]; (ii) 75 images from the miscellaneous and aerials series of the USC-SIPI Image Database [19]; and (iii) 200 training images from the Berkeley Image Data Set [20]. The proposed algorithm is compared with HE, the weighted histogram approximation method (WAHE) [17], MMSICHE [12], AGCWD [13], 2DHE [16], and ESIHE [10]. The authors of ESIHE compared their method against BBHE, DSIHE, MMBEBHE, and RMSHE [5,6,7,8] and showed its superiority.

We assess the performance of these methods subjectively and objectively. Subjective assessment focuses on evaluating the visual quality of the enhanced images. Objective assessment covers image details, contrast, noise level, natural appearance, etc., and is evaluated in terms of discrete entropy (DE) [1, 4, 10,11,12, 14, 15, 17], peak signal-to-noise ratio (PSNR) [3, 11, 21], edge-based contrast measurement (EBCM) [4], gradient magnitude similarity deviation (GMSD) [16, 21, 22], and multiscale contrast similarity deviation (MCSD) [22].

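DE characterizes the information contained in an image. Therefore, no result of any enhancement method can outperform the original image on DE, which is defined (in bits) by

\[\mathrm{DE}=-\sum_{k=0}^{L-1}p(k)\log _2 p(k),\]

where \(p(k)\) is the probability of gray-level k in the image, and terms with \(p(k)=0\) are taken as zero.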
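Discrete entropy in bits is the Shannon entropy of the normalized gray-level histogram. A minimal sketch follows; the function name `discrete_entropy` and the `levels` parameter are illustrative choices, and empty bins are skipped so that they contribute zero.

```python
import numpy as np

def discrete_entropy(image, levels=256):
    """Shannon entropy (bits) of the gray-level distribution of an integer image."""
    hist = np.bincount(image.ravel(), minlength=levels)
    # Keep only occupied bins so that log2 is never evaluated at zero
    p = hist[hist > 0] / image.size
    return float(-np.sum(p * np.log2(p)))

# Four equally likely gray levels carry 2 bits
image = np.array([[0, 1], [2, 3]])
entropy_original = discrete_entropy(image, levels=4)

# A mapping that merges levels (0,1 -> 0 and 2,3 -> 1) lowers the entropy
merged = np.array([0, 0, 1, 1])[image]
entropy_merged = discrete_entropy(merged, levels=4)
```

The second half of the example shows why a gray-level mapping cannot raise DE: a lookup table can merge occupied levels but never split one.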

PSNR measures the noise level of the result, and a good enhancement method should not amplify the noise level of the original. For an input image \(I_1\) and its enhanced image \(I_2\), both of size \(M\times N\), PSNR is defined by

\[\mathrm{PSNR}=10\log _{10}\left( \frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right) ,\]

where MAX is the maximum intensity value, e.g., 255 for 8-bit grayscale images, and MSE refers to the mean square error defined by

\[\mathrm{MSE}=\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left( I_1(i,j)-I_2(i,j)\right) ^2.\]
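The PSNR computation can be sketched in a few lines. The function name `psnr` and the choice to return infinity for identical images are conventions of this sketch only.

```python
import numpy as np

def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal size."""
    ref = np.asarray(reference, dtype=np.float64)
    enh = np.asarray(enhanced, dtype=np.float64)
    # Mean square error over all pixels
    mse = np.mean((ref - enh) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

reference = np.zeros((2, 2))
off_by_one = reference + 1.0    # MSE = 1
worst_case = reference + 255.0  # MSE = MAX^2, so PSNR = 0 dB
values = psnr(reference, off_by_one), psnr(reference, worst_case)
```

Casting both inputs to floating point before subtracting avoids the wrap-around that unsigned 8-bit arithmetic would otherwise introduce.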

GMSD is a perceptual image quality index with high prediction accuracy [21]. It is an efficient distortion assessment metric that measures the perceptual quality of a distorted image against its reference [21, 22]. It predicts image quality by combining the pixel-wise gradient magnitude similarity (GMS) with the standard deviation of the GMS map. The gradient magnitude images of \(I_{1}\) and \(I_{2}\), computed from their horizontal and vertical gradients, are defined as [21, 23]

\[m_{1}(i)=\sqrt{(I_{1}\otimes h_{x})^{2}(i)+(I_{1}\otimes h_{y})^{2}(i)},\qquad m_{2}(i)=\sqrt{(I_{2}\otimes h_{x})^{2}(i)+(I_{2}\otimes h_{y})^{2}(i)},\]

where the symbol \(\otimes \) denotes convolution, \(h_{x}\) and \(h_{y}\) are the Prewitt filters in the horizontal (x) and vertical (y) directions, respectively, and i indexes the pixel location. The gradient magnitude similarity (GMS) map is defined as

\[\mathrm{GMS}(i)=\frac{2m_{1}(i)m_{2}(i)+\delta }{m_{1}^{2}(i)+m_{2}^{2}(i)+\delta },\]

where \(\delta \) is a positive constant for numerical stability. Then, we compute the gradient magnitude similarity mean (GMSM) as

\[\mathrm{GMSM}=\frac{1}{\chi }\sum_{i=1}^{\chi }\mathrm{GMS}(i),\]

where \(\chi \) is the total number of pixels in the image. Finally, the gradient magnitude similarity deviation of the GMS map is defined as

\[\mathrm{GMSD}=\sqrt{\frac{1}{\chi }\sum_{i=1}^{\chi }\left( \mathrm{GMS}(i)-\mathrm{GMSM}\right) ^{2}}.\]

A lower GMSD score denotes better perceptual image quality.
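A simplified GMSD computation is sketched below using NumPy only. Several details are assumptions of this sketch and not the reference implementation: the Prewitt kernels are scaled by 1/3, the filtering is a 3x3 correlation without padding (for these antisymmetric kernels the gradient magnitude is the same as with convolution), no downsampling is applied beforehand, and the default `delta=170.0` is an assumed stability constant for 8-bit intensity ranges.

```python
import numpy as np

PREWITT_X = np.array([[1, 0, -1], [1, 0, -1], [1, 0, -1]], dtype=np.float64) / 3.0
PREWITT_Y = PREWITT_X.T

def filter3x3(image, kernel):
    """3x3 correlation over the 'valid' region, built from shifted views."""
    rows, cols = image.shape
    out = np.zeros((rows - 2, cols - 2), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * image[dy:dy + rows - 2, dx:dx + cols - 2]
    return out

def gradient_magnitude(image):
    """Prewitt gradient magnitude map of a 2D image."""
    image = np.asarray(image, dtype=np.float64)
    gx = filter3x3(image, PREWITT_X)
    gy = filter3x3(image, PREWITT_Y)
    return np.sqrt(gx ** 2 + gy ** 2)

def gmsd(reference, distorted, delta=170.0):
    """Standard deviation of the gradient magnitude similarity map."""
    m_r = gradient_magnitude(reference)
    m_d = gradient_magnitude(distorted)
    gms = (2.0 * m_r * m_d + delta) / (m_r ** 2 + m_d ** 2 + delta)
    return float(gms.std())  # population standard deviation over all pixels

rng = np.random.default_rng(0)
reference = rng.integers(0, 256, size=(16, 16))
quantized = np.floor(reference / 64.0) * 64.0  # coarse 4-level version
score_same = gmsd(reference, reference)
score_quantized = gmsd(reference, quantized)
```

Comparing an image with itself gives a GMS map of all ones and therefore a score of zero, while the quantized version yields a positive score.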

Fig. 2 From left to right and top to bottom: the original image and the results of HE, AGCWD, ESIHE, 2DHE, MMSICHE, WAHE, and the proposed method, respectively. The corresponding contrast measurement values are listed in Table 1

(iv) Edge-based contrast measurement (EBCM) [4]

The EBCM is based on the observation that human perception mechanisms are very sensitive to contours (or edges). An enhancement method should yield a larger EBCM value than that of the input image. The EBCM for image \(I_1\) of size \(M\times N\) is defined as

where C(i, j) represents contrast of pixel at location (i, j) with intensity \(I_1(i,j)\) and is defined as follows

where f(i, j) is the mean edge gray level defined by

where \(\alpha (i,j)\) is the set of neighboring pixels of \(I_1(i,j)\), and g(y, z) is the edge value at location (y, z), which is the magnitude of the image gradient computed by Sobel operators [4]. A higher EBCM value means more edge information. Therefore, we consider the contrast of the target image \(I_2\) to be enhanced when \(\mathrm{EBCM}(I_2)>\mathrm{EBCM}(I_1)\). It must be pointed out, however, that a higher EBCM value does not necessarily mean better visual quality.

(v) Multiscale contrast similarity deviation (MCSD) [22]

MCSD is another perceptual image quality index that correlates highly with human judgments [22]. It measures contrast features using a multiscale representation of the images. First, the contrast similarity deviations (CSD) for the reference image \(I_1\) and its distorted version \(I_2\) are computed at three reduced resolutions. Then, the final MCSD score is defined by

where \(n\mathrm{Scales}\) represents the total number of scales, \(\beta _{j}\) is the weight of the \(j\mathrm{th}\) scale, and \(\sum \nolimits _{j=1}^{n\mathrm{Scales}}\beta _j=1\). The contrast similarity deviation is defined by

where \(\mathrm{MCS}\) (mean contrast similarity) is the mean pooling of contrast similarity map

And the contrast similarity map (CSM) between the original image and its enhanced one is

where ‘\(.\times \)’ refers to element-wise multiplication of two matrices, ‘\(.^2\)’ indicates the element-wise square, and a is a positive constant that avoids division by zero. \(\mathrm{CM}_r\) and \(\mathrm{CM}_d\) are the contrast maps for the original image and its enhanced one, respectively, and are defined as

where \(w_{i,j}\) is the local window centered at (i, j), and \(\mu _I\) is the local mean of I.

Fig. 3 From left to right and top to bottom: the original image and the results of HE, AGCWD, ESIHE, 2DHE, MMSICHE, WAHE, and the proposed method, respectively. The corresponding contrast measurement values are listed in Table 2

Fig. 4 From left to right and top to bottom: the original image and the results of HE, AGCWD, ESIHE, 2DHE, MMSICHE, WAHE, and the proposed method, respectively. The corresponding contrast measurement values are listed in Table 3

3.1 Subjective assessment

On most of the test images, MMSICHE, WAHE, 2DHE, ESIHE, and the proposed method present similar visual quality, the AGCWD method presents darker results, and HE produces over-enhanced, bright images. On some of the test images, ESIHE and the proposed method clearly outperform the other four reference methods visually. On quite a few images, the results of the proposed method are superior to those of all six reference methods. Due to limited space, only three images, shown in Figs. 2, 3, and 4, are selected from the images on which the methods differ in visual quality.

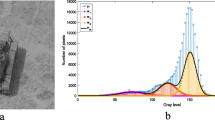

Figure 2 presents a “TANK” on grassland. The results of HE, 2DHE, and WAHE are over-enhanced, making the grassland look like a snowfield. The result of the AGCWD method, on the contrary, is too dark to be discernible. All methods except the proposed one lose details on the turret of the “TANK”, as shown in the bottom right corner of the processed images.

Figure 3 shows that the HE, 2DHE, and MMSICHE methods over-enhance the moon, scattering its margin in the results. The WAHE method enhances the sky too much, so that the background sky and the moon merge into a uniformly bright sky. The AGCWD method extends the gray levels toward both ends, making the bright sky brighter and the dark background darker. The ESIHE method does enhance the background, but it introduces a halo effect that blurs the moon. The HE, ESIHE, 2DHE, MMSICHE, and WAHE methods amplify the noise to different degrees. Only the proposed method provides a visually enhanced image: its output is brighter than the input, and the moon is not blurred.

In Fig. 4, the HE, ESIHE, 2DHE, MMSICHE, and WAHE methods over-enhance some of the bricks to different degrees. The result of AGCWD is too dark and makes the building less clear. Only the proposed method produces a visually pleasing result.

3.2 Objective assessment

Tables 1, 2, and 3 list the DE, PSNR, EBCM, GMSD, and MCSD values for the images shown in Figs. 2, 3, and 4, respectively. Table 4 presents the average values of these five performance metrics on the 300 test images.

Table 1 shows that, on all five metrics, the proposed method outperforms the other six reference methods. On the PSNR, GMSD, and MCSD metrics, the proposed method is much better than all the other reference methods. For GMSD and MCSD in particular, the proposed method is better by almost one order of magnitude, even compared with the second-best methods.

Table 2 demonstrates that the proposed method outperforms the other six reference methods on DE, GMSD, and MCSD. The proposed method ranks third on PSNR, behind the MMSICHE and AGCWD methods. However, these two methods perform poorly on EBCM, scoring about one order of magnitude lower than all other methods. Since EBCM measures the enhancement level, neither MMSICHE nor AGCWD actually enhances the target image. The proposed method also ranks third on EBCM, behind WAHE and 2DHE. But these two attain high EBCM by amplifying the noise level, as indicated by their worst PSNR scores and by the visual quality shown in Fig. 3.

As for Table 3, the proposed method produces the best image quality as measured by DE, PSNR, GMSD, and MCSD. Note that the proposed method attains the same second-best EBCM score as the 2DHE method, but provides much better image quality as measured by the other four metrics.

Table 4 presents the average experimental results on the 300 test images. ESIHE and MMSICHE attain better PSNR values than the proposed method. But on average, both methods barely enhance the edge information, as indicated by their EBCM values of 101.850 and 102.004, respectively, which are almost the same as the original average EBCM value of 101.386. The best two average EBCM values are 117.041 and 116.094, achieved by WAHE and HE, respectively. However, both methods suffer from noise artifacts, as indicated by their low PSNR values. The proposed method performs the best on DE, GMSD, and MCSD, and the third best on PSNR.

4 Conclusion

We proposed a contrast enhancement method that performs well on many image quality metrics. On average, the proposed algorithm improves on the second-best algorithm, ESIHE, by 2.89, 9.83, 28.32, and 26.38% on DE, EBCM, GMSD, and MCSD, respectively. Although the proposed method ranks third on PSNR, the two methods with the highest PSNR values (ESIHE and MMSICHE) barely improve the contrast, as shown by their EBCM values, which are almost the same as those of the input. The proposed method ranks third on EBCM too. The best two are WAHE and HE, but they perform much worse on the other four metrics. In a word, the overall performance of the proposed method is superior to that of the state-of-the-art reference methods.

References

Soumya, T., Thampi, S.M.: Self-organized night video enhancement for surveillance systems. Signal Image & Video Processing 1, 1–8 (2016)

Rao, H., Zhang, P., Sun, C.: Contrast enhancement for the infrared vein image of leg based on the optical angular spectrum theory. Signal Image & Video Processing 11, 1–7 (2016)

Sulochana, S., Vidhya, R.: Satellite image contrast enhancement using multiwavelets and singular value decomposition (svd). Int. J. Comput. Appl. 35(7), 1–5 (2009)

Celik, T., Tjahjadi, T.: Automatic image equalization and contrast enhancement using gaussian mixture modeling. IEEE Trans. Image Process. 21(1), 145–156 (2012)

Kim, Y.T.: Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans. Consum. Electron. 43(1), 1–8 (1997)

Wang, Y., Zhang, B.M.: Image enhancement based on equal area dualistic sub image histogram equalization method. IEEE Trans. Consum. Electron. 45(1), 68–75 (1999)

Chen, S.D., Ramli, R.: Minimum mean brightness error bi-histogram equalization in contrast enhancement. IEEE Trans. Consum. Electron. 49(4), 1310–1319 (2003)

Chen, S.D., Ramli, R.S.: Contrast enhancement using recursive mean-separate histogram equalization for scalable brightness preservation. IEEE Trans. Consum. Electron. 49(4), 1301–1309 (2003)

Ibrahim, H., Kong, N.S.: Brightness preserving dynamic histogram equalization for image contrast enhancement. IEEE Trans. Consum. Electron. 53(4), 1752–1758 (2007)

Singh, Kuldeep, Kapoor, Rajiv: Image enhancement using exposure based sub image histogram equalization. Pattern Recognit. Lett. 36(1), 10–14 (2014)

Tang, Jing Rui, Isa, Nor Ashidi Mat: Adaptive image enhancement based on bi-histogram equalization with a clipping limit. Comput. Electron. Eng. 40(8), 86–103 (2014)

Singh, Kuldeep, Kapoor, Rajiv: Image enhancement via median-mean based sub-image-clipped histogram equalization. Opt.–Int. J. Light Electron. Opt. 125(17), 4646–4651 (2014)

Huang, S.C., Cheng, F.C., Chiu, Y.S.: Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans. Image Process. 22(3), 1032–1041 (2013)

Wang, Xuewen, Chen, Lixia: An effective histogram modification scheme for image contrast enhancement. Signal Process. Image Commun. 58, 187–198 (2017)

Lee, C., Kim, C.S.: Contrast enhancement based on layered difference representation of 2d histogram. IEEE Trans. Image Process. 22(12), 5372–5384 (2013)

Kim, S.W., Choi, B.D., Park, W.J., Ko, S.J.: 2d histogram equalisation based on the human visual system. Electron. Lett. 52(6), 443–445 (2016)

Arici, T., Dikbas, S., Altunbasak, Y.: A histogram modification framework and its application for image contrast enhancement. IEEE Trans. Image Process. 18(9), 1921–1934 (2009)

Ponomarenko, Nikolay, Jin, Lina, Ieremeiev, Oleg, Lukin, Vladimir, Egiazarian, Karen, Astola, Jaakko, Vozel, Benoit, Chehdi, Kacem, Carli, Marco, Battisti, Federica: Image database tid2013: peculiarities, results and perspectives. Signal Process. Image Commun. 30, 57–77 (2015)

Signal and Image Processing Institute of USC University of Southern California. The usc-sipi image database. http://sipi.usc.edu/database/

Martin, D., Fowlkes, C., Tal, D., Malik, J.: A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In: Proceeding of the 8th International Conference on Computer Vision, vol. 2, pp. 416–423, July (2001)

Xue, Wufeng, Zhang, Lei, Mou, Xuanqin, Bovik, Alan C: Gradient magnitude similarity deviation: a highly efficient perceptual image quality index. IEEE Trans. Image Process. 23(2), 684–695 (2014)

Wang, Tonghan, Zhang, Lu, Jia, Huizhen, Li, Baosheng, Shu, Huazhong: Multiscale contrast similarity deviation: An effective and efficient index for perceptual image quality assessment. Signal Process. Image Commun. 45, 1–9 (2016)

Xue, Wufeng, Mou, Xuanqin, Zhang, Lei, Bovik, Alan C., Feng, Xiangchu: Blind image quality assessment using joint statistics of gradient magnitude and laplacian features. IEEE Trans. Image Process. 23(11), 4850–4862 (2014)

Acknowledgements

This project is partially supported by the National Natural Science Foundation of China (61362021, 61272216, 61572147), Guangxi Natural Science Foundation (2013GXNSFDA019030, 2014GXNSFAA118003, 2016GXNSFAA380043), Guangxi Colleges and Universities Key Laboratory of Intelligent Processing of Computer Images and Graphics (GIIP201408, GIIP201503, GIIP201501, GIIP201401), Basic Capabilities Promotion Project for Youth and Middle-aged Teachers in Colleges and Universities of Guangxi (ky2016YB162), and Program for Innovative Research Team of Guilin University of Electronic Technology.

Cite this article

Wang, X., Chen, L. Contrast enhancement using feature-preserving bi-histogram equalization. SIViP 12, 685–692 (2018). https://doi.org/10.1007/s11760-017-1208-2

DOI: https://doi.org/10.1007/s11760-017-1208-2