Abstract

In recent years, image compression methods based on deep learning have received extensive attention and research. Most methods focus on minimizing the mean squared error (MSE) to obtain reconstructed images with higher peak signal-to-noise ratio (PSNR). However, the ability of pixel-wise distortion to capture the perceptual differences between images is fairly limited, which may suffer from undesirable visual perception quality of the reconstructed images. To address this problem, we propose a novel rate-distortion loss based on perception metric in learned image compression. In this work, we introduce the perception metric into the rate-distortion loss, which can enhance the capacity of compression model to capture perceptual differences and semantic information in images. By performing that, the rate-distortion performance of our proposed model on multi-scale structural similarity (MS-SSIM) and the classification accuracy of reconstructed images have been improved. Comprehensive experimental results demonstrate that the proposed method has comparable performance in terms of PSNR, and the performance on MS-SSIM outperforms traditional image codecs, such as JPEG and BPG, as well as other previous end-to-end compression methods. More significantly, the visual quality of the reconstructed images is dramatically improved.

Similar content being viewed by others

1 Introduction

Image compression is a very significant research topic in the field of multimedia signal processing. Its goal is to remove the redundancy in image data, so as to store and transmit data with a more efficient format. Some traditional image compression methods, such as JPEG [31], JPEG2000 [23], BPG [7], have achieved great success. Typically they rely on hand-crafted encoder/decoder, using transform, intra-prediction, quantization, entropy coding and other modules to reduce the spatial redundancy in images to improve the coding efficiency. However, these modules are independent and need to be optimized separately, which cause the whole compression framework cannot be optimized jointly. Moreover, it becomes more and more difficult to further improve the coding efficiency on the classical image compression framework, and these methods are not expected to be a general solution for diverse coding requirements.

With the development of deep learning, deep neural network (DNN) has been widely used in various tasks of image processing, such as image classification, image inpainting, super-resolution and so on. Inspired by this, more and more researchers begin to apply DNN for image compression, and some end-to-end image compression methods [3, 4, 24, 27, 28] have been proposed. These methods still adopt hybrid coding framework, including transform, quantization, entropy coding and other modules, which are designed by convolution neural network (CNN), so all modules can be optimized by minimizing the given objective function. Rate-distortion loss \(L=R+\lambda D\) is a common objective function in image compression methods [26]. Here, R represents the entropy rate of latent features. D is the distortion between the original image and the reconstructed image, which is typically measured by MSE. However, the ability of pixel-wise distortion like MSE to capture perceptual differences (such as high-frequency texture details) between the original image and the reconstructed image is very limited [12, 32, 33]. Therefore, the model trained by MSE can get higher PSNR, while the reconstructed images are usually lacking high-frequency details and overly-smooth, which causes undesirable visual quality [8, 11, 13, 17].

To address this problem, we propose a novel rate-distortion loss based on perception metric, which is calculated by high-level feature maps of the VGG network [8, 13, 17, 25]. We use the proposed loss as the optimization objective of the compression model and propose an end-to-end image compression method base on perception metric. Incorporating the perception metric into the rate-distortion loss can better capture the perceptual differences between the original image and the reconstructed image, thus the decoded image with higher visual perception quality is obtained. In addition, the perception metric can improve the learning ability of the model for semantic information, which makes the reconstructed images contain more abundant semantic information. The experimental results show that the proposed method achieves quite acceptable compression performance both on PSNR and MS-SSIM.

2 Related works

2.1 Traditional image compression

Classical image compression methods use hand-crafted modules to remove the redundancy in the image. For example, JPEG [31] first divides the image into \(8\times 8\) blocks, then the discrete cosine transform (DCT) is used to transform the image blocks to the frequency domain, and finally, the quantized DCT blocks are encoded by Huffman coding technology. In JPEG2000 [23], wavelet transform is adopted instead of DCT to improve coding efficiency. On the other hand, BPG [7] exploits the intra-prediction technique for image compression, which further reduces the spatial redundancy in image data. Therefore, the coding efficiency of BPG is better than that of JPEG and JPEG2000.

2.2 End-to-end image compression

The existing end-to-end image compression methods can be divided into two categories. The first type is based on recurrent neural network (RNN). Toderici et al. [28] first proposed the image compression method based on RNN, which employs RNN iteratively to compress the image residuals and realize variable bitrate coding. After that, Toderici et al. [29] adopted a neural network similar to PixelRNN [30] and GRU unit to propose an improved version. Johnston et al. [14] used SSIM weighted loss and hidden state initialization to improve the coding efficiency.

The other type is based on CNN. Most of them adopt the hybrid coding framework based on autoencoder (AE) network. Considering that the quantization procedure is not differentiable, some works have been proposed to deal with this problem to make end-to-end training possible, such as [3, 4]. In addition, some works focus on the design of network structure. For instance, Li et al. [19] proposed an image compression method based on a content-weighted strategy, which learned the importance map to guide the bitrate allocation. In [9, 10], the convolution layers are replaced by residual blocks to enhance the network architecture. Moreover, there are several studies investigate the entropy module to improve the coding efficiency. For example, Ballé et al. [4] used the piecewise linear function to fit the probability distribution of latent codes. After that, they introduced hyperprior model [5] and autoregressive context model [20] to improve the entropy coding efficiency. On the other hand, Lee et al. [18] realized the accurate estimation of the probability distribution of latent features by exploiting two types of contexts. Cheng et al. [10] used discretized Gaussian mixture likelihoods to parameterize the distributions of latent codes, which achieved a more accurate and flexible entropy model.

2.3 Distortion metric

Pixel-wise distortion (such as MSE) has poor ability to deal with the inherent uncertainty in recovering high-frequency signal: minimizing MSE will make the pixel values tend to average. Therefore, the reconstructed images are usually overly-smooth and have poor perceptual quality. In order to solve this problem, Dosovitskiy et al. [11] introduce a distortion calculated in feature space and combine the adversarial loss to train a generative model. Furthermore, Johnson et al. [13], Bruna et al. [8], and Ledig et al. [17] directly employ the differences of high-level features maps extracted from the pretrained VGG19 network [25] as the distortion metric, so that the generated images contain more texture details and have higher visual perception quality. The superiority of these methods in visual quality proves the effectiveness of distortion metric based on feature space.

3 Proposed method

3.1 Overall framework

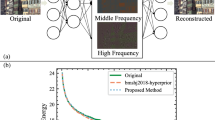

Based on the model proposed by Ballé et al. [5], we propose an end-to-end compression method based on perception metric. The architecture of our model is shown in Fig. 1. The compression process is as follow: First, an uncompressed image \({\varvec{x}}\) is given, the encoder transforms it into a latent space to obtain its latent features \({\varvec{y}}\). Then, the quantization module is used to round \({\varvec{y}}\) to get the discrete representation \(\hat{{\varvec{y}}}\). In [4, 5], the additive uniform noise is introduced to approximate the rounding quantization during training. The specific formula is shown as:

where U is a uniform distribution centered on \({\hat{y}}_{i}\)

After obtaining the quantized latent features \(\hat{{\varvec{y}}}\), entropy coding methods, such as arithmetic coding, can be used to encode \(\hat{{\varvec{y}}}\) to get bit stream for transmission. In the training stage, the entropy of \({\varvec{y}}\) is used to estimate the actual bitrate, where the entropy is determined by the Shannon cross entropy between the probability distribution of \(\hat{{\varvec{y}}}\) and the actual marginal distribution. Aim to further improve the modeling ability of entropy coding module, Ballé et al. [5] exploited hyperprior module to improve the coding efficiency. The hyperprior module captures the spatial dependence of \(\hat{{\varvec{y}}}\) by the hyperencoder and obtains the side information \({\varvec{z}}\) for estimating the distribution of \(\hat{{\varvec{y}}}\). In this case, the probability distribution is modeled as a Gaussian distribution, and the distribution of each element is characterized by its corresponding mean \(\mu _{i}\) and standard deviation \(\sigma _{i}\). This can be formulated as:

where the means are set to 0, the standard deviations are deduced from the hyperprior \(\hat{{\varvec{z}}}\), \(\hat{{\varvec{z}}}\) is the quantized side information, \(U\left( -\frac{1}{2}, \frac{1}{2}\right) \) represents the range of uniform distribution.

However, there is no prior information that can be used to estimate the probability density function of \(\hat{{\varvec{z}}}\). Therefore, a fully factorized density model is used to fit the probability density function. The corresponding formula is as follows:

where \({\hat{z}}_{i}\) denotes each element in \({\hat{z}}\), \(\psi _{i}\) represent the parameter of \(p_{{\hat{z}}_{i} \mid \psi _{i}}\)

When the bitrate estimation of \(\hat{{\varvec{z}}}\) and \(\hat{{\varvec{y}}}\) is completed, the decoder is used to transform \(\hat{{\varvec{y}}}\) to obtain reconstructed image \(\hat{{\varvec{x}}}\). And the whole framework is optimized by considering the rate-distortion trade-off, which is defined as follows:

where \(d(\hat{{\varvec{x}}}, {\varvec{x}})\) represents the distortion between reconstructed image \(\hat{{\varvec{x}}}\) and original image \({\varvec{x}}\), R is the bitrate, \(\lambda \) is a Lagrange multiplier used to realize the trade-off between distortion and bitrate.

3.2 Rate-distortion loss

In this paper, we define the rate-distortion loss as follows:

where \(d_\mathrm{MSE}\) means employing MSE as distortion metric, H and W represent the height and width of the image, respectively. \(R=R_{\hat{{\varvec{y}}}}+R_{\hat{{\varvec{z}}}}\) represents the total bitrate of \(\hat{{\varvec{y}}}\) and \(\hat{{\varvec{z}}}\). In this work, the bitrate is estimated by calculating entropy of \(\hat{{\varvec{y}}}\) and \(\hat{{\varvec{z}}}\), which can be formulated as:

3.3 Rate-distortion loss based on perception metric

The MSE loss is the most widely used distortion metric for image reconstruction in compression methods. However, directly using MSE as a measure of image distortion will make the reconstructed image lack high-frequency details. Dosovitskiy et al. [11], Johnson et al. [13], Bruna et al. [8], and Ledig et al. [17] have proved that non-pixel-wise content loss can capture the perceptual differences between the original image and the generated image, so that the generated image contains more texture details. Inspired by this, we employ the pretrained VGG19 network [25] to extract the features of original image \({\varvec{x}}\) and reconstructed image \(\hat{{\varvec{x}}}\), then adopt Euclidean distance (L2-norm) to calculate the differences between them in high-dimensional space. This non-pixel-wise content loss is defined as a perception metric, which is formulated as follows:

where \(\phi _{m, n}\) represents the parameters from the input layer in the VGG19 network to the n-th convolution layer(after activation) before the m-th maxpooling layer, \(\phi _{m, n}({\hat{x}})\) and \(\phi _{m, n}({x})\) represent the extracted high-level feature maps, h and w represent dimensions of high dimensional features.

Different from the previous work [8, 11, 13, 17], the proposed method not only needs to focus on the visual quality of the reconstructed image, but also needs to consider the compression rate. In this work, the perception metric is introduced into the optimization of the end-to-end image compression framework, and the rate-distortion loss based on perception metric is proposed. The formula is as follows:

where w is a parameter to weighted the perception metric.

Two distortion metrics mentioned above are used to constrain the image compression model. On the one hand, using MSE to train model can ensure the compression performance of the model. On the other hand, introducing perception metric with a certain weight into rate-distortion optimization can improve the feature extraction ability of the model, so as to enhance the visual perception quality of the reconstructed images.

4 Experiments

4.1 Implementation details

We use CVPR workshop CLIC training datasets [2], and randomly crop images into \(256\times 256\times 3\) patches to train our model. In order to evaluate the performance of different methods, Kodak dataset [16] is utilized for testing. To validate the generalization ability of our proposed method, we also tested our proposed method using CVPR workshop CLIC Professional Test dataset [2] with 250 high-resolution images.

In addition, our model is optimized by Adam [15], and the learning rate is maintained at a fixed value of \(1 \times 10^{-4}\), batch size is set to 16. We empirically set the value of parameter w to \(5 \times 10^{-5}\), \(\phi _{m, n}=\phi _{5,4}\). The number of channels N and M are set as 128 and 192, respectively, for lower-rate models, while for higher rate models, N and M are set to 192 and 320, respectively. For comparison, we select 8 different values of \(\lambda \) to obtain 8 models with different compression rates. Our model framework is implemented on the PyTorch [22] and CompressAI [6] platform. All the experiments are conducted on a single GPU.

4.2 Evaluation metrics

The performance of the compression model is typically related to the bitrate and the quality of the reconstructed image. The bitrate is represented by the average bit per pixel (bpp), and the quality is generally measured by PSNR or MS-SSIM. PSNR represents the pixel-wise distortion, while MS-SSIM is more related to human visual perception [33]. In order to distinguish different image quality accurately, MS-SSIM values are converted into decibels by (-10\(\log _{10}\)(1-MS-SSIM)).

Rate-distortion performance of different methods on Kodak dataset [16]

Rate-distortion performance of different methods on CLIC professional test dataset [2]

4.3 Experimental results

Since our approach is based on the framework in [5], first, we re-implement this methodology. The results from our implementation of [5] are labeled as baseline in this paper. Then, we compare the performance of the proposed method with baseline [5] and other five methods, which include some traditional compression methods, such as JPEG [31], JPEG2000 [23], BPG [7], the intra-coding of VVC/VTM [1, 21], and a learning-based method [4] (marked as Ballé2017). We evaluate these methods on Kodak dataset [16] and obtain the rate-distortion curves, which are shown in Fig. 2. Regrading PSNR, our method yields comparable results with baseline [5] and achieves better performance than JPEG, JPEG2000 and Ballé2017 [4]. Regarding MS-SSIM, our method outperforms JPEG, JPEG200, BPG, even achieves competitive results with VVC at high bitrates. Meanwhile, there is a clear gap (about 0.1 to 0.4dB) between baseline [5] and our method. Moreover, we also investigated the proposed loss based on Cheng2020 [10] (marked as Cheng2020[Proposed loss]) to demonstrate the superiority of our method. As shown in Fig. 2, by optimizing the proposed loss, we realize a significant improvement over Cheng2020 [10] in terms of MS-SSIM, while the performance on PSNR does not dropped. Obviously, introducing the perception metric into the rate-distortion loss can improve the performance of the compression models on MS-SSIM quality metric.

Figure 3 shows the performance of these methods mentioned above on CLIC Professional Test dataset [2]. Regarding PSNR, our approach is slightly improved at high bitrates when compared with baseline. Regarding MS-SSIM, the performance of the proposed method is significantly better than baseline, and the similar improvement is also reflected in Cheng2020[Proposed loss] when compared with Cheng2020 [10]. It shows our method also works for high-resolution images.

To further demonstrate the effectiveness of the proposed method, we visualize some reconstructed images obtained by the proposed method and baseline when testing on Kodak dataset [16], and the visualization result is shown in Fig. 4. It can be found from the reconstructed images generated by baseline [5] that the texture in the yellow rectangle region of the first image is blurred. In the second image, the wall in the green rectangle is fuzzy. What’s more, in the third image, the texture of the parrot’s eye area in the blue rectangle is smooth and the edge is not clear. In contrast, the proposed method captures the texture details of the image by introducing the perception metric based on high-level feature maps, which improves the perceptual quality of the reconstructed image, so as to reflect a more delicate and more satisfactory visual quality.

4.4 Investigation of distortion metric

In order to investigate the effect of different distortion loss choices in the perception metric, we select another set of parameters \(\phi _{2,2}\) obtained from the pretrained VGG19 network to build a perception metric loss \(d_{\mathrm{VGG} / 2,2}\) based on low-level feature maps. In addition, we also consider the impact of using different distance to calculate the perception metric on our model performance. In this paper, Manhattan distance (L1) and Euclidean distance (L2) are used to construct the perception metrics, respectively, and thus, the models optimized by different distortion metrics are evaluated on Kodak dataset [16]. Since the performance gap between different distortion metrics is relatively slight, we zoom in the RD curves with bpp around 0.7 to clearly show the gap, as shown in Fig. 5.

Performance of models with different distortion metrics on Kodak dataset [16]

When the perception metric is based on \(\phi _{5,4}\), the corresponding models have achieved better performance than baseline both on PSNR and MS-SSIM quality metrics. However, when we adopt L1 distance to calculate the distortion in high-level feature space, the performance on MS-SSIM has dropped when compared with L2 distance. So the ability of L1 distance to capture perceptual differences is weak. And we observe that using the high-level VGG feature maps \(\phi _{5,4}\) shows better performance when compared with \(\phi _{2,2}\), which indicates that the perception metric based on high-level feature maps has stronger ability to capture the perceptual differences of images, and the performance is improved more obviously.

4.5 Parameter sensitivity analysis

In order to explore the relationship between \(d_\mathrm{MSE }\) and \(d_{\mathrm{VGG} / 5,4}\), we analyze the sensitivity of the parameter w in this section. The range of w is set from \(\{\)0, \(2 \times 10^{-5}\), \(5 \times 10^{-5}\), \(8 \times 10^{-5}\), \(1 \times 10^{-4}\) \(\}\), and other parameters remain unchanged. We evaluate our models which trained with different values of parameter w and obtain corresponding MS-SSIM values at the same compression rate are shown in Fig. 6. It is easy to find that when \(w =5 \times 10^{-5}\), the performance of the model on MS-SSIM quality metric reaches the best. This means that the perception metric improves the model performance most obviously under the appropriate value of parameter w.

4.6 Comparison of semantic information

In order to verify whether the proposed method retains more semantic information, we compress all images in Kodak dataset [16] by our method and baseline [5] to obtain the reconstructed images under 8 compression rates. The pretrained VGG19 network is used to classify the original images in Kodak dataset, and the corresponding prediction labels are used as the ground truth. Then, we use VGG19 to classify the reconstructed images obtained by two methods, the cross entropy of the ground truth and the prediction values of the reconstructed images is calculated to represent the classification accuracy. The cross entropy curves are shown in Fig. 7. It can be seen that under 8 different compression rates, the cross entropy obtained by our method is lower than baseline. So the proposed method can contain more semantic information in the reconstructed images, which is more suitable for classification.

5 Conclusion

In this work, we propose a novel rate-distortion loss based on perception metric in learned image compression, which can improve the ability of the model to capture the perceptual differences and semantic information, so as to improve the visual perception quality (MS-SSIM) of the reconstructed images and make them contain more semantic information. The rate-distortion performance of different methods is compared on both Kodak and high-resolution datasets, and the images with different reconstructed quality are classified by the pretrained VGG19 network. Experimental results show that the proposed method achieves the same performance as baseline [5] in terms of PSNR. Regrading MS-SSIM, the performance of our method outperforms several traditional codecs, except for VVC. Compared with the end-to-end image compression methods [4, 5], our method also has better visual perception quality. Meanwhile, the performance of our method in classification task is better than baseline.

References

Versatile video coding reference software version 9.1 (vtm-9.1) (2020). https://vcgit.hhi.fraunhofer.de/jvet/VVCSoftware_VTM/-/tags/VTM-9.1

Workshop and challenge on learned image compression (2020). http://www.compression.cc/challenge/

Agustsson, E., Mentzer, F., Tschannen, M., Cavigelli, L., Timofte, R., Benini, L., Van Gool, L.: Soft-to-hard vector quantization for end-to-end learning compressible representations. In: Advances in Neural Information Processing Systems (NIPS), pp. 1141–1151 (2017)

Ballé, J., Laparra, V., Simoncelli, E.P.: End-to-end optimized image compression. In: International Conference on Learning Representations (ICLR), pp. 1–27 (2017)

Ballé, J., Minnen, D., Singh, S., Hwang, S.J., Johnston, N.: Variational image compression with a scale hyperprior. In: International Conference on Learning Representations (ICLR), pp. 1–23 (2018)

Bégaint, J., Racapé, F., Feltman, S., Pushparaja, A.: Compressai: a pytorch library and evaluation platform for end-to-end compression research. arXiv preprint arXiv:2011.03029 (2020)

Bellard, F.: BPG image format (2014). https://bellard.org/bpg/

Bruna, J., Sprechmann, P., LeCun, Y.: Super-resolution with deep convolutional sufficient statistics. arXiv preprint arXiv:1511.05666 (2015)

Cheng, Z., Sun, H., Takeuchi, M., Katto, J.: Deep residual learning for image compression. In: CVPR Workshops, pp. 1–4 (2019)

Cheng, Z., Sun, H., Takeuchi, M., Katto, J.: Learned image compression with discretized gaussian mixture likelihoods and attention modules. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 7939–7948 (2020)

Dosovitskiy, A., Brox, T.: Generating images with perceptual similarity metrics based on deep networks. arXiv preprint arXiv:1602.02644 (2016)

Gupta, P., Srivastava, P., Bhardwaj, S., Bhateja, V.: A modified PSNR metric based on HVS for quality assessment of color images. In: 2011 International Conference on Communication and Industrial Application (ICCIA), pp. 1–4. IEEE (2011)

Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: European Conference on Computer Vision (ECCV), pp. 694–711. Springer (2016)

Johnston, N., Vincent, D., Minnen, D., Covell, M., Singh, S., Chinen, T., Hwang, S.J., Shor, J., Toderici, G.: Improved lossy image compression with priming and spatially adaptive bit rates for recurrent networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4385–4393 (2018)

Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Kodak, E.: Kodak lossless true color image suite (photocd pcd0992) (1993). http://r0k.us/graphics/kodak/

Ledig, C., Theis, L., Huszár, F., Caballero, J., Cunningham, A., Acosta, A., Aitken, A., Tejani, A., Totz, J., Wang, Z., et al.: Photo-realistic single image super-resolution using a generative adversarial network. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4681–4690 (2017)

Lee, J., Cho, S., Beack, S.K.: Context-adaptive entropy model for end-to-end optimized image compression. arXiv preprint arXiv:1809.10452 (2018)

Li, M., Zuo, W., Gu, S., Zhao, D., Zhang, D.: Learning convolutional networks for content-weighted image compression. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3214–3223 (2018)

Minnen, D., Ballé, J., Toderici, G.: Joint autoregressive and hierarchical priors for learned image compression. In: Advances in Neural Information Processing Systems (NIPS), pp. 10771–10780 (2018)

Ohm, J.R., Sullivan, G.J.: Versatile video coding—towards the next generation of video compression. In: Picture Coding Symposium (2018)

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., et al.: Pytorch: An imperative style, high-performance deep learning library. In: Advances in Neural Information Processing Systems (NIPS), pp. 8026–8037 (2019)

Rabbani, M., Joshi, R.: An overview of the jpeg2000 still image compression standard. Signal Proc. Image Commun. 17(1), 3–48 (2002)

Rippel, O., Bourdev, L.: Real-time adaptive image compression. In: International Conference on Machine Learning (ICML), pp. 2922–2930. PMLR (2017)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

Sullivan, G.J., Wiegand, T.: Rate-distortion optimization for video compression. IEEE Signal Process. Mag. 15(6), 74–90 (1998)

Theis, L., Shi, W., Cunningham, A., Huszár, F.: Lossy image compression with compressive autoencoders. arXiv preprint arXiv:1703.00395 (2017)

Toderici, G., O’Malley, S.M., Hwang, S.J., Vincent, D., Minnen, D., Baluja, S., Covell, M., Sukthankar, R.: Variable rate image compression with recurrent neural networks. arXiv preprint arXiv:1511.06085 (2015)

Toderici, G., Vincent, D., Johnston, N., Jin Hwang, S., Minnen, D., Shor, J., Covell, M.: Full resolution image compression with recurrent neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 5306–5314 (2017)

Van Oord, A., Kalchbrenner, N., Kavukcuoglu, K.: Pixel recurrent neural networks. In: International Conference on Machine Learning (ICML), pp. 1747–1756. PMLR (2016)

Wallace, G.K.: The jpeg still picture compression standard. IEEE Trans. Consum. Electron. 38(1), 43–59 (1992)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Wang, Z., Simoncelli, E.P., Bovik, A.C.: Multiscale structural similarity for image quality assessment. In: The Thrity-Seventh Asilomar Conference on Signals, Systems and Computers, pp. 1398–1402. IEEE (2003)

Acknowledgements

This research was supported by the National Natural Science Foundation of China (Grant No. 61871154, No. 62031013), by the Youth Program of National Natural Science Foundation of China (61906103, 61906124), by the Basic and applied basic research fund of Guangdong Province (2019A1515011307).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Liu, S., Huang, Y., Yang, H. et al. End-to-end image compression method based on perception metric. SIViP 16, 1803–1810 (2022). https://doi.org/10.1007/s11760-022-02137-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-022-02137-y