Abstract

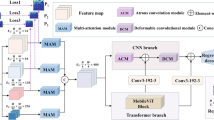

In practical applications, the range and scale of some crowd scenes change little, and the number of available images is relatively small. The goal is to use a few unlabeled images from the target scene so that the crowd counting network adapts well to the new scene. This paper proposes a cross-scene adaptive crowd counting model based on supervised adaptive network parameters. The whole network consists of a counting network and a supervision network. First, the adaptive parameters of the dynamic adaptive standardization module in the counting network are spatially invariant and can intuitively capture the global information of the target scene. These parameters are generated from the unlabeled data by the supervision network, while the other parameters of the counting network are learned in the training stage and shared across scenes. To fully integrate the shallow and deep features of the crowd, we use both adaptively adjustable convolution parameters and channel attention to extract more feature details. Because the unlabeled data influence parameter generation, the model becomes more adaptive and can follow appearance changes of the global scene. Finally, comprehensive experiments on four existing datasets show the effectiveness of the proposed method.
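The idea of spatially invariant adaptive parameters generated from unlabeled target-scene images can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `generate_adaptive_params` and `adaptive_normalize`, the toy linear map from pooled statistics to a scale and shift, and the plain-list feature layout are all assumptions made for illustration. In the paper, a learned supervision network would play the role of the first function.

```python
import math
from statistics import fmean, pvariance


def generate_adaptive_params(unlabeled_features, weight=0.5, bias=1.0):
    """Hypothetical stand-in for the supervision network.

    unlabeled_features: list of feature maps from unlabeled target-scene
    images; each feature map is a list of channels, each channel a flat
    list of floats. Returns one (gamma, beta) pair per channel.
    """
    num_channels = len(unlabeled_features[0])
    params = []
    for c in range(num_channels):
        # Global average pooling over every unlabeled image of the scene.
        pooled = fmean(fmean(fmap[c]) for fmap in unlabeled_features)
        # Toy linear map (an assumption); a real model would learn this.
        gamma = bias + weight * pooled
        beta = weight * pooled
        params.append((gamma, beta))
    return params


def adaptive_normalize(feature_map, params, eps=1e-5):
    """Normalize each channel, then apply the scene-specific scale and shift.

    gamma and beta are scalars per channel, so the same values are used at
    every spatial position (spatially invariant).
    """
    out = []
    for channel, (gamma, beta) in zip(feature_map, params):
        mean = fmean(channel)
        var = pvariance(channel)
        scale = math.sqrt(var + eps)
        out.append([gamma * (x - mean) / scale + beta for x in channel])
    return out


# Two unlabeled images from the target scene, two channels each.
scene_features = [
    [[1.0, 3.0], [2.0, 2.0]],
    [[3.0, 5.0], [2.0, 2.0]],
]
scene_params = generate_adaptive_params(scene_features)

# A new image from the same scene is normalized with the generated parameters.
adapted = adaptive_normalize([[0.0, 2.0], [4.0, 4.0]], scene_params)
```

Under these assumptions, only `scene_params` changes from scene to scene; everything else in the counting network would stay fixed after training, which mirrors the split between generated and shared parameters described in the abstract.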

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant Nos. 61771420 and 62001413, the National Natural Science Foundation of Hebei Province under Grant No. F2020203064, as well as the Doctoral Foundation in Yanshan University under Grant No. BL18033 and Technology Youth Foundation in Hebei University of Environmental Engineering under Grant No. 2020ZRQN01.

About this article

Cite this article

Li, S., Hu, Z., Zhao, M. et al. Cross-scene crowd counting based on supervised adaptive network parameters. SIViP 16, 2113–2120 (2022). https://doi.org/10.1007/s11760-022-02173-8

DOI: https://doi.org/10.1007/s11760-022-02173-8