Abstract

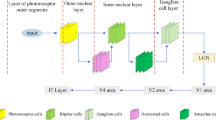

Biologically inspired edge detection is an active research topic in computer vision. Previous biologically inspired edge detection models are mainly based on X-type ganglion cells. However, physiological studies show that Y-type and W-type ganglion cells also exist in the visual system and play essential roles in visual information processing. The three types of ganglion cells form independent visual channels, and the three channels, working in parallel, exhibit a depth-guided stereoscopic mechanism. To model the biological visual mechanism more comprehensively, we propose DXYW, a biologically inspired edge detection method based on the visual mechanisms of the X-, Y-, and W-channels. In the proposed model, three separate sub-models simulating the X-, Y-, and W-channels process grayscale images and disparity maps. Furthermore, a depth-guided multi-channel fusion method is introduced to integrate the visual responses of the different channels. Experimental results show that the multi-channel mechanism modeled in DXYW is consistent with the relevant physiological mechanisms. DXYW also achieves competitive edge detection performance, better maintaining the integrity of object contours and suppressing background texture.


Acknowledgements

The authors thank the anonymous reviewers for their helpful and constructive comments on an earlier draft of this paper. This work was supported by the National Natural Science Foundation of China (Grant No. 61866002), the Guangxi Natural Science Foundation (Grant Nos. 2020GXNSFDA297006, 2018GXNSFAA138122, and 2015GXNSFAA139293), and the Innovation Project of Guangxi University of Science and Technology Graduate Education (Grant No. GKYC202101).

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

About this article

Cite this article

Lin, C., Wang, Q. & Wan, S. DXYW: a depth-guided multi-channel edge detection model. SIViP 17, 481–489 (2023). https://doi.org/10.1007/s11760-022-02253-9

DOI: https://doi.org/10.1007/s11760-022-02253-9