Abstract

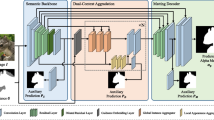

Image outpainting aims to extend the field of view of an existing image. A current challenge in image outpainting is that background noise can interfere with the extension of the subject, producing visual distortions and artifacts. To address this issue, this study proposes a subject-aware image outpainting (SAIO) method that reduces background pixel interference and emphasizes the subject. A Matting model is first trained as a pre-extractor for the subject, and two networks are then trained in series: the subject outpainting network (SO-Net), which extends the subject, and the background completion network (BC-Net), which extends the background. First, the Matting model simultaneously extracts the subject of the input image and separates the background, and the subject is passed to SO-Net to generate the predicted subject. Second, the predicted subject is fused with the background separated in the previous step to form the input of the second network. Finally, BC-Net outputs the complete image. To improve the training of the networks and the quality of the output image, both networks adopt a conditional training strategy. Qualitative and quantitative results show that the method performs well, achieving a peak signal-to-noise ratio (PSNR) of 28.99 on the test dataset. The proposed method can be widely applied in intelligent image processing systems.
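The abstract describes the SAIO data flow only in words. The sketch below restates it in NumPy to make the order of operations concrete; it is not the authors' implementation. Several details are assumptions not taken from the paper: the Matting model is represented by a given alpha matte, outpainting widens the image by `pad` columns on each side, the fusion step simply sums the premultiplied subject and background layers, and `identity_so_net` and `mean_fill_bc_net` are hypothetical placeholders for the trained SO-Net and BC-Net. The block also includes the standard PSNR formula, 10 · log10(MAX² / MSE), in which the reported score is expressed.

```python
import numpy as np


def split_layers(image, alpha):
    """Split an (H, W, 3) image into premultiplied subject and background
    layers using an (H, W) alpha matte in [0, 1] (stand-in for the matting model)."""
    a = alpha[..., None]                    # broadcast the matte over RGB
    return a * image, (1.0 - a) * image     # subject + background == image


def extend_canvas(x, pad):
    """Zero-pad `pad` columns on both sides (assumed horizontal outpainting)
    and return the padded array plus a mask that is 1 on known pixels."""
    widths = [(0, 0), (pad, pad)] + [(0, 0)] * (x.ndim - 2)
    mask = np.pad(np.ones(x.shape[:2]), [(0, 0), (pad, pad)])
    return np.pad(x, widths), mask


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, max_value]."""
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(max_value ** 2 / mse)


def saio_forward(image, alpha, so_net, bc_net, pad):
    """Two-stage flow: matting split -> SO-Net -> fusion -> BC-Net."""
    subject, background = split_layers(image, alpha)
    subject_canvas, mask = extend_canvas(subject, pad)
    background_canvas, _ = extend_canvas(background, pad)
    pred_subject = so_net(subject_canvas, mask)     # stage 1: extend the subject
    fused = pred_subject + background_canvas        # assumed fusion: layer sum
    return bc_net(fused, mask)                      # stage 2: complete the image


def identity_so_net(canvas, mask):
    """Hypothetical placeholder for SO-Net: returns the padded subject unchanged."""
    return canvas


def mean_fill_bc_net(canvas, mask):
    """Hypothetical placeholder for BC-Net: fills unknown pixels with the
    mean colour of the known pixels."""
    fill = canvas[mask == 1].mean(axis=0)
    return np.where(mask[..., None] == 1, canvas, fill)


rng = np.random.default_rng(0)
img = rng.random((4, 6, 3))
alpha = (rng.random((4, 6)) > 0.5).astype(float)
out = saio_forward(img, alpha, identity_so_net, mean_fill_bc_net, pad=2)
```

With the placeholder networks, the known region of `out` reproduces the input exactly, which checks that splitting and fusing the layers is lossless; in the real method the two trained networks would instead synthesize content in the padded columns.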


Availability of data and materials

All accompanying data are provided in the manuscript.


Funding

This research received no external funding.

Author information


Contributions

Yongzhen Ke contributed to conceptualization, methodology, supervision, and project administration. Nan Sheng contributed to methodology, software, writing—original draft, and writing—review and editing. Gang Wei contributed to methodology, software, and writing—original draft. Kai Wang contributed to resources, validation, and data curation. Fan Qin contributed to validation and writing—review and editing. Jing Guo contributed to writing—review and editing, formal analysis, and visualization.


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Ethical approval

Not applicable.

Consent for publication

The work described has not been published before. It is not under consideration for publication elsewhere. Its publication has been approved by all co-authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ke, Y., Sheng, N., Wei, G. et al. Subject-aware image outpainting. SIViP 17, 2661–2669 (2023). https://doi.org/10.1007/s11760-022-02444-4


DOI: https://doi.org/10.1007/s11760-022-02444-4