Abstract

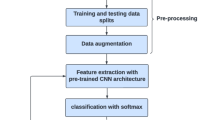

Hand gestures are a natural means of interaction, and hand gesture recognition has become increasingly popular in human–computer interaction. However, the complexity and variability of hand gestures, arising from varying illumination, viewpoints, and the self-structural characteristics of the hand, keep recognition challenging. The core problems are how to design an appropriate feature representation and an appropriate classifier. To this end, this paper develops an expressive deep hybrid hand gesture recognition architecture called CNN-MVRBM-NN. The framework consists of three submodels. The CNN submodel automatically extracts frame-level spatial features. The MVRBM submodel fuses this spatial information over time to learn the higher-level semantics inherent in hand gestures. The NN submodel classifies the gesture: it is initialized by the MVRBM, which handles second-order data representations, and the NN pre-trained in this way is then fine-tuned by back-propagation to become more discriminative. Experimental results on the Cambridge Hand Gesture data set show that the proposed hybrid CNN-MVRBM-NN achieves state-of-the-art recognition performance.
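
The data flow through the three submodels can be illustrated with a minimal sketch. It is not the authors' implementation: the average-pooling `frame_features` function is only a stand-in for the CNN, the hidden layer assumes the bilinear form sigmoid(U X Vᵀ + C) commonly used for matrix-variate RBMs, the weights are random rather than pre-trained, and every name and dimension below is hypothetical.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def frame_features(frame, dim):
    """Stand-in for the CNN submodel: average-pool a flat frame into `dim` values."""
    step = len(frame) // dim
    return [sum(frame[i * step:(i + 1) * step]) / step for i in range(dim)]


def mvrbm_hidden(x, u, v, c):
    """Hidden activation probabilities sigmoid(U X V^T + C) for a matrix input X (assumed form)."""
    pre = matmul(matmul(u, x), transpose(v))
    return [[1.0 / (1.0 + math.exp(-(pre[i][j] + c[i][j])))
             for j in range(len(pre[0]))] for i in range(len(pre))]


def softmax(z):
    m = max(z)
    e = [math.exp(t - m) for t in z]
    s = sum(e)
    return [t / s for t in e]


def classify(video, u, v, c, w_out):
    """Frame features -> matrix-variate hidden layer -> softmax over gesture classes."""
    feats = [frame_features(f, len(u[0])) for f in video]  # T x D, one row per frame
    x = transpose(feats)                                    # D x T, time axis kept explicit
    h = mvrbm_hidden(x, u, v, c)                            # K x L hidden matrix
    flat = [val for row in h for val in row]
    logits = [sum(w * a for w, a in zip(row, flat)) for row in w_out]
    return softmax(logits)


# Illustrative sizes only: T frames, D features per frame, K x L hidden units.
rng = random.Random(0)
T, D, K, L, n_classes = 8, 6, 4, 3, 9
video = [[rng.random() for _ in range(48)] for _ in range(T)]
u = rand_matrix(K, D, rng)              # mixes feature dimensions
v = rand_matrix(L, T, rng)              # mixes time steps
c = [[0.0] * L for _ in range(K)]       # hidden bias matrix
w_out = rand_matrix(n_classes, K * L, rng)
probs = classify(video, u, v, c, w_out)
```

Keeping the per-frame features as the columns of a matrix, rather than flattening them into one long vector, is what lets the two small weight matrices act separately on the feature axis and the time axis. In the architecture described above, `u`, `v`, and `c` would come from MVRBM pre-training and all weights would then be fine-tuned by back-propagation, a step this sketch omits.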

Acknowledgements

This research is supported by the Natural Science Foundation of China (Nos. 61402024, 61772049, 61632006, 61876012), the Beijing Natural Science Foundation (No. 4162009), the Beijing Educational Committee (No. KM201710005022), and the Beijing Key Laboratory of Computational Intelligence and Intelligent System. We would also like to thank PhD candidate Wentong Wang for his helpful suggestions on CNN program design.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Li, J., Huai, H., Gao, J. et al. Spatial-temporal dynamic hand gesture recognition via hybrid deep learning model. J Multimodal User Interfaces 13, 363–371 (2019). https://doi.org/10.1007/s12193-019-00304-z

DOI: https://doi.org/10.1007/s12193-019-00304-z