Abstract

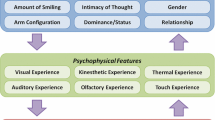

In this work, we discuss a set of feature representations for analyzing human spatial behavior (proxemics) motivated by metrics used in the social sciences. Specifically, we consider individual, physical, and psychophysical factors that contribute to social spacing. We demonstrate the feasibility of autonomous real-time annotation of these proxemic features during a social interaction between two people and a humanoid robot in the presence of a visual obstruction (a physical barrier). We then use two different feature representations—physical and psychophysical—to train Hidden Markov Models (HMMs) to recognize spatiotemporal behaviors that signify transitions into (initiation) and out of (termination) a social interaction. We demonstrate that the HMMs trained on psychophysical features, which encode the sensory experience of each interacting agent, outperform those trained on physical features, which only encode spatial relationships. These results suggest a more powerful representation of proxemic behavior with particular implications in autonomous socially interactive and socially assistive robotics.

Notes

These proxemic distances pertain to Western American culture—they are not cross-cultural.

In this implementation, head pose was used to estimate the visual code; however, as the size of each person’s face in the recorded image frames was rather small, the results from the head tracker were quite noisy [37]. If the head pose estimation confidence was below some threshold, the system would instead rely on the shoulder pose for eye gaze estimates.
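The fallback described in this note can be sketched as follows. This is a minimal illustration, not the authors' implementation: the names `PoseEstimate` and `estimate_gaze_yaw`, the single-angle (yaw) representation, and the default threshold of 0.5 are all assumptions, since the note only says "some threshold".

```python
from dataclasses import dataclass


@dataclass
class PoseEstimate:
    """Hypothetical container for one orientation estimate."""
    yaw_deg: float     # horizontal orientation in degrees
    confidence: float  # tracker confidence, assumed to lie in [0, 1]


def estimate_gaze_yaw(head: PoseEstimate,
                      shoulder_yaw_deg: float,
                      min_confidence: float = 0.5) -> float:
    """Return the head yaw when the head tracker is confident enough,
    otherwise fall back to the shoulder yaw.

    The 0.5 default is an illustrative assumption, not a value from the paper.
    """
    if head.confidence >= min_confidence:
        return head.yaw_deg
    return shoulder_yaw_deg


# A confident head estimate is used directly; a noisy one is replaced
# by the shoulder orientation.
confident = estimate_gaze_yaw(PoseEstimate(yaw_deg=10.0, confidence=0.9), 30.0)
noisy = estimate_gaze_yaw(PoseEstimate(yaw_deg=10.0, confidence=0.2), 30.0)
```

A hard threshold keeps the switch simple to reason about; a deployed system might instead smooth between the two sources to avoid abrupt jumps in the gaze estimate.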

In this implementation, we utilized the 3-dimensional point cloud provided by our motion capture system for improved accuracy (see Sect. 4.1); however, we assume nothing about the implementations of others, so total distance can be used for approximations in the general case.

More formally, Hall’s touch code distinguishes between caressing and holding, feeling or caressing, extended or prolonged holding, holding, spot touching (hand peck), and accidental touching (brushing); however, automatic extraction of such forms of touching goes beyond the scope of this work.

For example, we do not measure the radiant heat or odor transmitted by one individual and the intensity at the corresponding sensory organ of the receiving individual.

This occurs for the SFP axis estimates, as well as at the public distance interval, the loud and very loud voice loudness intervals, and the outside reach kinesthetic interval.

References

Adams L, Zuckerman D (1991) The effect of lighting conditions on personal space requirements. J Gen Psychol 118(4):335–340

Aiello J (1987) Human spatial behavior. In: Handbook of environmental psychology, Chap 12. Wiley, New York

Aiello J, Aiello T (1974) The development of personal space: proxemic behavior of children 6 through 16. Hum Ecol 2(3):177–189

Aiello J, Thompson D, Brodzinsky D (1981) How funny is crowding anyway? Effects of group size, room size, and the introduction of humor. Basic Appl Soc Psychol 4(2):192–207

Argyle M, Dean J (1965) Eye-contact, distance, and affiliation. Sociometry 28:289–304

Bailenson J, Blascovich J, Beall A, Loomis J (2001) Equilibrium theory revisited: mutual gaze and personal space in virtual environments. Presence 10(6):583–598

Burgoon J, Stern L, Dillman L (1995) Interpersonal adaptation: dyadic interaction patterns. Cambridge University Press, New York

Cassell J, Sullivan J, Prevost S (2000) Embodied conversational agents. MIT Press, Cambridge

Dempster A, Laird N, Rubin D (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc 39:1–38

Deutsch RD (1977) Spatial structurings in everyday face-to-face behavior: a neurocybernetic model. The Association for the Study of Man-Environment Relations, Orangeburg

Evans G, Wener R (2007) Crowding and personal space invasion on the train: please don’t make me sit in the middle. J Environ Psychol 27:90–94

Feil-Seifer D, Matarić M (2011) Automated detection and classification of positive vs negative robot interactions with children with autism using distance-based features. In: HRI, Lausanne, pp 323–330

Geden E, Begeman A (1981) Personal space preferences of hospitalized adults. Res Nurs Health 4:237–241

Hall ET (1959) The silent language. Doubleday Co, New York

Hall E (1963) A system for notation of proxemic behavior. Am Anthropol 65:1003–1026

Hall ET (1966) The hidden dimension. Doubleday Co, Chicago

Hall ET (1974) Handbook for proxemic research. American Anthropology Assn, Washington

Hayduk L, Mainprize S (1980) Personal space of the blind. Soc Psychol Q 43(2):216–223

Hediger H (1955) Studies of the psychology and behaviour of captive animals in zoos and circuses. Butterworths Scientific Publications, Stoneham

Huettenrauch H, Eklundh K, Green A, Topp E (2006) Investigating spatial relationships in human-robot interaction. In: IROS, Beijing

Jan D, Traum DR (2007) Dynamic movement and positioning of embodied agents in multiparty conversations. In: Proceedings of the 6th international joint conference on autonomous agents and multiagent systems, AAMAS ’07, pp 14:1–14:3

Jan D, Herrera D, Martinovski B, Novick D, Traum D (2007) A computational model of culture-specific conversational behavior. In: Proceedings of the 7th international conference on intelligent virtual agents, IVA ’07, pp 45–56

Jones S, Aiello J (1973) Proxemic behavior of black and white first-, third-, and fifth-grade children. J Pers Soc Psychol 25(1):21–27

Jones S, Aiello J (1979) A test of the validity of projective and quasi-projective measures of interpersonal distance. West J Speech Commun 43:143–152

Jung J, Kanda T, Kim MS (2013) Guidelines for contextual motion design of a humanoid robot

Kendon A (1990) Conducting interaction—patterns of behavior in focused encounters. Cambridge University Press, New York

Kennedy D, Gläscher J, Tyszka J, Adolphs R (2009) Personal space regulation by the human amygdala. Nat Neurosci 12:1226–1227

Kristoffersson A, Severinson Eklundh K, Loutfi A (2013) Measuring the quality of interaction in mobile robotic telepresence: a pilot’s perspective. Int J Soc Robot 5(1):89–101

Kuzuoka H, Suzuki Y, Yamashita J, Yamazaki K (2010) Reconfiguring spatial formation arrangement by robot body orientation. In: HRI, Osaka

Lawson B (2001) Sociofugal and sociopetal space, the language of space. Architectural Press, Oxford

Llobera J, Spanlang B, Ruffini G, Slater M (2010) Proxemics with multiple dynamic characters in an immersive virtual environment. ACM Trans Appl Percept 8(1):3:1–3:12

Low S, Lawrence-Zúñiga D (2003) The anthropology of space and place: locating culture. Blackwell Publishing, Oxford

Mead R, Matarić M (2011) An experimental design for studying proxemic behavior in human-robot interaction. Tech Rep CRES-11-001, USC Interaction Lab, Los Angeles

Mead R, Matarić MJ (2012) Space, speech, and gesture in human-robot interaction. In: Proceedings of the 14th ACM international conference on multimodal interaction, ICMI ’12, Santa Monica, CA, pp 333–336

Mead R, Atrash A, Matarić MJ (2011) Recognition of spatial dynamics for predicting social interaction. In: HRI, Lausanne, Switzerland, pp 201–202

Mehrabian A (1972) Nonverbal communication. Aldine Transaction, Piscataway

Morency L, Whitehill J, Movellan J (2008) Generalized adaptive view-based appearance model: integrated framework for monocular head pose estimation. In: 8th IEEE international conference on automatic face gesture recognition (FG 2008), pp 1–8

Mumm J, Mutlu B (2011) Human-robot proxemics: physical and psychological distancing in human-robot interaction. In: HRI, Lausanne, pp 331–338

Oosterhout T, Visser A (2008) A visual method for robot proxemics measurements. In: HRI workshop on metrics for human-robot interaction, Amsterdam

Pelachaud C, Poggi I (2002) Multimodal embodied agents. Knowl Eng Rev 17(2):181–196

Price G, Dabbs J Jr. (1974) Sex, setting, and personal space: changes as children grow older. Pers Soc Psychol Bull 1:362–363

Rabiner LR (1990) A tutorial on hidden Markov models and selected applications in speech recognition. In: Readings in speech recognition, pp 267–296

Satake S, Kanda T, Glas DF, Imai M, Ishiguro H, Hagita N (2009) How to approach humans?: Strategies for social robots to initiate interaction. In: HRI, pp 109–116

Schegloff E (1998) Body torque. Soc Res 65(3):535–596

Schöne H (1984) Spatial orientation: the spatial control of behavior in animals and man. Princeton University Press, Princeton

Shotton J, Fitzgibbon A, Cook M, Sharp T, Finocchio M, Moore R, Kipman A, Blake A (2011) Real-time human pose recognition in parts from single depth images. In: CVPR

Sommer R (1967) Sociofugal space. Am J Sociol 72(6):654–660

Takayama L, Pantofaru C (2009) Influences on proxemic behaviors in human-robot interaction. In: IROS, St. Louis

Torta E, Cuijpers RH, Juola JF, van der Pol D (2011) Design of robust robotic proxemic behaviour. In: Proceedings of the third international conference on social robotics, ICSR’11, pp 21–30

Trautman P, Krause A (2010) Unfreezing the robot: navigation in dense, interacting crowds. In: IROS, Taipei

Vasquez D, Stein P, Rios-Martinez J, Escobedo A, Spalanzani A, Laugier C (2012) Human aware navigation for assistive robotics. In: Proceedings of the thirteenth international symposium on experimental robotics, ISER’12, Québec City, Canada

Walters M, Dautenhahn K, Boekhorst R, Koay K, Syrdal D, Nehaniv C (2009) An empirical framework for human-robot proxemics. In: New frontiers in human-robot interaction, Edinburgh

Acknowledgements

This work is supported in part by an NSF Graduate Research Fellowship, as well as ONR MURI N00014-09-1-1031 and NSF IIS-1208500, CNS-0709296, IIS-1117279, and IIS-0803565 grants. We thank Louis-Philippe Morency for his insights in integrating his head pose estimation system [37] and in the experimental design process; Mark Bolas and Evan Suma for their assistance in using the PrimeSensor; and Edward Kaszubski for his help in integrating the proxemic feature extraction and behavior recognition systems into the Social Behavior Library.

Cite this article

Mead, R., Atrash, A. & Matarić, M.J. Automated Proxemic Feature Extraction and Behavior Recognition: Applications in Human-Robot Interaction. Int J of Soc Robotics 5, 367–378 (2013). https://doi.org/10.1007/s12369-013-0189-8
