Abstract

The relationship between humans and robots is increasingly becoming a focus of interest for many fields of research. To date, studies investigating the dynamics underpinning human–robot interaction have mainly analysed adults’ behaviour when interacting with artificial agents. In this study, we present results on human–robot interaction involving children aged 5 to 6 years playing the Ultimatum Game (UG) with either another child or a humanoid robot. Assessment of children’s attribution of mental and physical properties to the interactive agents showed that children recognized the robot as a distinct entity compared to the human. Nevertheless, consistent with previous studies on adults, the results on the UG revealed very similar behavioural responses and reasoning when the children played with the other child and with the robot. Finally, by analysing children’s justifications for their behaviour in the UG, we found that children tended to consider “fair” only the divisions that were exactly equal (5–5 divisions), and to justify them either in quantitative terms (outcome) or in terms of equity. These results are discussed in terms of theory of mind, as well as in light of developmental theories underpinning children’s behaviour in the Ultimatum Game.

1 Introduction

The introduction of social robots—defined as “autonomous or semi-autonomous robots that interact and communicate with humans by following the behavioural norms expected by the people with whom the robot is intended to interact” [5, p. 1]—is deeply affecting our society in different fields of human life. The robots used in industrial processes, for instance, are modifying job roles and rules ([1, 50, 58]; see also [26]). Prospectively, the introduction of robots in other contexts, such as households, education, care assistance, manufacturing, etc., will also presumably have an increasingly significant effect on human activities and roles. In this perspective, several researchers are working towards the implementation of robots in the attempt to make them behaviourally and physically similar to the human being [2, 25, 38, 84]. The humanoid robots—the ultimate realization of these attempts—are characterized by human-like physical features, behaviours, and cognitive processes, which call to mind the idea of “they are more like-me” [68].

The implementation of human-like artificial agents is also aimed at supporting scientific research in different fields, such as anthropology, psychology, ethics, sociology, engineering, informatics, mathematics, physics, etc. With reference to psychology, Scassellati [87, 88], for example, suggests that the use of human-like robots is beneficial to the study of typical and atypical human social development, allowing researchers to test cognitive, behavioural and developmental psychological models. In fact, human-like robots may serve both as “stimuli” to study human cognition by evoking social cognitive processes [101] and as “agents” that humans can observe and interact with [103]. In this respect, Wykowska and colleagues [103] showed that, by combining experimental control and ecological validity, the manipulation of various behavioural and physical parameters in humanoid robots during a human–robot interaction may provide insightful information concerning the social cognitive processes in the human brain. It was shown, for example, that, while low-level perceptual mechanisms are similarly activated when both artificial and natural agents are observed, activation of higher-order social cognitive mechanisms requires the artificial agents to be endowed with human-like features, which allow the emulation, in the human, of human-like behaviours.

By contributing to the understanding and identification of the precursors underlying human sociality and their ontogenesis, knowledge from developmental psychology is also crucial to the implementation of psychological models to be used in human–robot interaction. Growing recognition of the role of developmental psychology for the understanding of human–robot interaction is evidenced by the substantial increase, in the last decades, of research focusing on child–robot interaction (e.g., [7, 11, 12, 47, 48, 61, 71, 72, 77]). The results of these studies consistently show that children tend to attribute mental states to humanoid robots, treating the robots as human agents (for a review, see [64]). Connecting developmental psychology to the study of human–robot interaction from a multidisciplinary perspective, Itakura [42] proposed a new research domain named Developmental Cybernetics (DC; [42]; see also [45, 46, 76, 77]). Developmental Cybernetics explores child–robot interaction through the construction of theoretical frameworks that characterize the design of the robot with the aim of facilitating these interactions [42, 46]. It focuses on three abilities that are critical for making a robot a social agent: theory of communication (ToC; [43, 44, 76]), theory of body (ToB; [70, 72]), and theory of mind (ToM; [42, 45, 46]). With particular focus on theory of mind, several contributions have now demonstrated its significance for the development of social competences: through this psychological ability it is possible to understand one’s own and other people’s mental states (intentions, emotions, desires, beliefs), and thus to predict and interpret one’s own and others’ behaviours on the basis of such meta-representations ([21, 59, 80, 82, 102]; for a review, see [98]).
There are different behaviours and cognitive processes that are linked to ToM: imitation [4, 8, 9, 83]; joint attention, pointing, gaze-following [13, 14, 23, 67, 68, 78]; and intentionality understanding [4, 20, 30, 36]. Several studies have investigated the effect of these precursors on the interaction between humans and robots [22, 29, 40, 41, 49, 51, 69, 71, 72, 73, 78, 79, 90, 99]. For example, by examining the influence of online eye contact with a humanoid robot on humans’ reception of the robot, as indicated by self-reports, Kompatsiari and colleagues [53] showed that eye contact facilitates the attribution of human-like characteristics to the robot. People, in fact, were sensitive to the mutual gaze of the artificial agent, feeling more engaged with the robot when a mutual gaze was established. Also, Okumura and colleagues [77] investigated the effect of the referential nature of gaze on the acquisition of information, comparing, through eye-tracking, human gaze with nonhuman (robot) gaze in 10- and 12-month-old infants. The findings showed that infants followed both human and robot gaze, although only human gaze had an effect on the acquisition of information. In a more recent review of the literature, Wiese and colleagues [101] further argued that designing artificial agents so that they are perceived as intentional agents activates the areas of the human brain involved in social-cognitive processing, ultimately increasing the possibility that robots are treated by humans as social partners. Creating artificial agents as intentional partners may indeed promote feelings of social connection, empathy, and prosociality [101]. The effect of intentionality on the human tendency to anthropomorphise things was also shown in very young children. When young children interact with artefacts, these acquire the status of relational artefacts, and are thought of by children as “alive” and as having an “intention” [93, 94].
Piaget [81] had already suggested that children younger than 6 years tend to attribute consciousness (namely, the capability to feel and perceive) to objects, and that children also consider “alive” things that are inanimate for the adult, if these objects serve a function or are used to reach a goal: a phenomenon called animism. In this respect, Katayama et al. [52] showed that, although children are generally able to discriminate between the human and the robot, 5- and 6-year-old children tend to attribute biological and psychological properties to robots.

Following from these findings and with the aim of specifically exploring young children’s behavioural responses and mental states attribution to an artificial agent during an interactive setting, in the present study we investigated preschool children’s behaviour when interacting with a robot as compared with a human agent. Relational skills in social contexts are typically studied through the use of interactive games derived from Game Theory [15, 96]. One such game is the Ultimatum Game [31, 32]. Used with children, the Ultimatum Game (UG) serves to delineate development in terms of equity and negotiation ability in decision-making, thus focusing on sensitivity to fairness and aversion to inequality [27, 28]. The UG involves interacting with at least one other agent, and it is related to the development of ToM in that it activates the ability to comprehend, detect and anticipate the other’s behaviour in mental terms [16, 17, 18, 19, 37, 60, 62, 63, 66, 89, 91, 95]. In the UG, the subject can play as either the proposer or the receiver. The general rule underlying the UG is that the proposer makes an offer, proposing a certain division of goods between him or her and the other player, i.e., the receiver. If the receiver refuses the offer, neither player gets anything, whereas if the offer is accepted, both players obtain the proposed division. Using the UG, it is then possible to study interactive behaviour in reciprocal situations from a developmental perspective, comparing behaviour at different ages. Some studies suggest that infants are already responsive to an unequal resource distribution at 19 months of age (e.g., [89]). Fehr and colleagues [28] showed that sensitivity to fairness increases from 3 to 8 years of age, and that selfish behaviour decreases from 3–4 to 5–6 years, although most 5- to 6-year-olds still tend not to share (78% of the sample).
Additionally, school age children (> 7 years) generally behave more fairly when distributing resources and tend to share more than younger children [28, 39].

Only a few studies have employed the UG paradigm with robots and, even so, exclusively in interaction with adults. Research on adults generally shows that participants tend to behave in a similar fashion when playing the UG with another human or with a robotic agent, and that this similarity increases the more the robotic agent presents human-like features. Terada and Takeuchi [92], for example, reported that rejection scores in the UG were higher in the case of a computer opponent than in the case of a human or robotic opponent, suggesting that people might treat a robot as a reciprocal partner, as they do when playing with another human. Also, Nishio and colleagues [74] tested university students while playing the UG with four distinct agents, which differed in their degree of similarity to humans. In their study, there were four conditions: computer terminal (Computer condition), humanoid robot (Humanoid condition), android robot (Android condition), and human (Human condition). They included a mentalizing stimulus for each agent, which consisted of four short interactional sentences pronounced by each agent when meeting the subject (e.g., “how are things going?”). Furthermore, the authors administered a post-experimental questionnaire in order to test how participants perceived the agents (i.e., human likeness or machine likeness). The authors examined the number of rejections of the agent’s proposals and the number of fair and unfair proposals made to the agents, both measures being used as indicators of recognition of the agents as social entities. The results showed that, when the agent looked like a human, fairness of the proposals and refusal rate were similar to those observed when interacting with a human, particularly when the artificial agent was introduced after the interactional sentences.
Additionally, Nitsch and Glassen [75] analysed a group of young adults, who played the UG with a human agent and with a humanoid robot behaving so as to be perceived as either animated or apathetic. The results showed that the participants who played in the animated robot condition perceived the interactions as more positive and human-like than those in the apathetic condition. Robins and colleagues [84] also studied reciprocity in human–robot interaction in a sample of young adults through the UG and the Prisoner’s Dilemma. They showed that participants were equally reciprocal with the human and the robot, despite the fact that the robot collaborated more with humans in the Prisoner’s Dilemma task, indicating that human–human interactions are nevertheless privileged with respect to human–robot interactions. Finally, Takagishi and colleagues [91] examined the effect of different robots’ facial expressions in the UG, and showed that emotional expressions had an effect on the offers made by the participant to the robot compared to offers made to a computer displaying a simple line-drawing face.

Altogether, the results of these studies suggest that adults show a proclivity to interact with robotic agents in much the same way as with other humans. With respect to children’s behaviour, there are no studies so far addressing children’s decision-making during an interactive and reciprocal social situation involving a robot. In the present study, we attempted to fill this developmental gap by exploring interactive behaviour during the UG when children played with either another child or a robot. The children in our sample were aged 5 to 6 years. At this age, children have typically acquired at least a first-order ToM capability [98]. First-order ToM entails recursive thinking, which implies a meta-representation (that is, the representation of a mental representation) of a low complexity level, of the kind “I think that you think…”. In this respect, we hypothesized that children aged 5–6 years would be able to discriminate the interactive partners as a function of agency (human vs. robot) on the basis of mental states attribution. In the UG, the children played both as proposers and receivers. Their performance on the UG was evaluated against several socio-demographic as well as cognitive factors. Finally, an analysis of children’s justifications for their behaviour when playing both as proposers and receivers was carried out, outlining, for the first time, response patterns that characterize the rationale underlying these children’s game strategy.

2 Method

2.1 Participants

Thirty-three (33) Italian kindergarten children participated in the experiment. Two children were excluded from the study: one failed the First-Order ToM task and the other failed the Inhibition task (see below). Data analysis was therefore carried out on 31 children (males = 18, females = 13; mean age = 70.8 months, SD = 2.99 months). The children attended three different schools located in northern Italy. The children’s parents received a written explanation of the procedure of the study, the measurement items, and the materials used, and they gave written consent. None of the children had been reported by teachers or parents for learning and/or socio-relational difficulties. The study was approved by the Local Ethics Committee (Università Cattolica del Sacro Cuore, Milan).

2.2 General Procedure and Measures

The children were tested individually in a quiet room in the kindergartens. Administrations were carried out by a single researcher both in the morning and in the afternoon during normal activity. The children were assessed in two experimental sessions on different days within 2 weeks. The tasks were administered in two independent sessions to avoid tiring the children with an excessively long procedure and to avoid disrupting their normal school activity.

In the first session, children were administered the following tests: a First-Order False-Belief task and two First-Order False-Belief videos, assessing first-order theory of mind; two Strange Stories, assessing advanced theory of mind; and the Attribution of Mental and Physical States (AMPS) scale. In the second session, children were administered the following tests: the Family Affluence Scale (FAS), assessing socioeconomic status; two executive functions tests, assessing the ability to inhibit behaviour and working memory; two further First-Order False-Belief videos; two additional Strange Stories; and the Ultimatum Game, assessing fairness.

The tests were distributed across sessions so as to balance the total time required to complete the whole battery, which was approximately 45–50 min, with each session lasting about 25 min. Within each session, the order of administration of the tests was randomized across children.

2.3 Socioeconomic Status

To assess children’s socio-economic status, we used the Italian translation of the Family Affluence Scale (FAS; [10, 97]). This test is composed of four items measuring family wealth, and more specifically: (1) family car ownership (score range 0–2); (2) own bedroom (range 0–1); (3) number of computers at home (range 0–3); (4) number of times spent on vacation during the past year (range 0–3). The FAS total score was obtained by adding up the four item scores (total range 0–9).
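As a minimal sketch of the scoring rule just described, the FAS total can be computed as a range-checked sum of the four item scores. The item names and the function `fas_total` are illustrative assumptions and are not part of the original instrument; only the item ranges and the summation are taken from the text.

```python
from typing import Dict

# Item ranges as reported in the text; the key names are hypothetical labels.
FAS_ITEM_RANGES = {
    "car_ownership": (0, 2),
    "own_bedroom": (0, 1),
    "computers": (0, 3),
    "vacations": (0, 3),
}


def fas_total(items: Dict[str, int]) -> int:
    """Return the FAS total (0-9) as the sum of the four item scores."""
    if set(items) != set(FAS_ITEM_RANGES):
        raise ValueError("expected exactly the four FAS items")
    for name, (low, high) in FAS_ITEM_RANGES.items():
        if not low <= items[name] <= high:
            raise ValueError(f"{name} must lie between {low} and {high}")
    return sum(items.values())
```

The range check simply guards against data-entry errors before the item scores are summed.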

2.4 Executive Functions (Inhibition and Working Memory)

We tested the children’s executive functions using the Inhibition “A Developmental NEuroPSYchological Assessment” subtest (NEPSY II; [54]) testing the ability to inhibit automatic responses in favour of novel responses and the ability to switch between response types. The subject looks at a series of black and white shapes or arrows and names either the shape or direction or an alternate response, depending on the colour of the shape or arrow. In the present study, we used the combined scores of the Inhibition NEPSY-II subtest, which associates accuracy and speed of response.

Furthermore, we used the Backward Digit Span to test working memory. The experimenter verbally presented the child with sequences of numbers that the child had to repeat in reverse order. The test started with three sequences of two digits, and gradually introduced sets of three sequences of increasing length, up to a maximum possible length of eight digits. The test stopped when the child failed all three sequences of the same length. The score was calculated as follows: children received 1 point when they reversed at least two out of three sequences of a given length and, if successful, passed to the next longer series; they received 0.33 points when they reversed only one out of three sequences. The decimal scores were then summed to obtain the main score (e.g., 1 + 0.33 = 1.33), with three partial scores adding up to a full point (e.g., 1 + 0.33 + 0.33 + 0.33 = 2), if necessary. For a detailed description of the scoring criteria, see Korkman et al. [54].

2.5 First-Order False Belief Task

To assess first-order ToM, we used the classical Unexpected Transfer task story [102]. The experimenter told the story using two dolls (one male and one female), a ball, a box, and a basket. The classical story is about two siblings playing with a ball in a room. One of the children puts the ball in a box and leaves the room. Meanwhile, the other child takes the ball out of the box, puts it in the basket and goes away. Finally, the first character comes back into the room and wants to play with the ball. At the end of the story the experimenter asks the child the following questions: “What is the first place where he will look for the ball?”, referring to the first character (First-Order False Belief question); “Where did the child put the ball before going upstairs?” (control memory question); “Where is the ball really?” (Reality control question).

The answers to the two control questions (memory and reality) were used to filter children’s performance: children who did not pass them scored 0 and their performance on the First-Order False Belief question was not evaluated. If children passed the control questions, the test question about the false belief scored 1 if correct and 0 if incorrect.
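The gating rule described above can be sketched as a small function: the false-belief answer is only credited when both control questions are passed. The function and parameter names are illustrative assumptions.

```python
def false_belief_score(memory_ok: bool, reality_ok: bool, belief_ok: bool) -> int:
    """Score the First-Order False Belief task as 0 or 1.

    A failed control question (memory or reality) yields 0, and the
    false-belief answer is then not evaluated at all.
    """
    if not (memory_ok and reality_ok):
        return 0
    return 1 if belief_ok else 0
```

Under this rule a score of 0 can mean either a failed control question or an incorrect false-belief answer, so the two cases would need to be recorded separately if they are to be distinguished later.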

2.6 First-Order False Belief: Video Stimuli

To assess children on the First-Order False Belief, we introduced, besides the commonly used task described above, a new tool, namely eight videos representing the classical story, this time introducing Robovie as a new character. The aim of the videos was twofold: on the one hand, we aimed at comparing the video version of the false belief task with the classical storyboard narration; on the other hand, by comparing children’s attribution of a first-order false belief to another child and to a robot, we aimed at assessing whether belief attribution to the robot substantially differs from belief attribution to the child, thus highlighting children’s recognition of different mental properties. For these purposes, we used a story similar to the classical Unexpected Transfer task [102] as the video script. The videos started by presenting two characters (two children, two robots, or a child and a robot) in a room with two boxes of different colours (blue and pink) placed in front of them. Subsequently, one of the characters put a teddy bear under one of the two boxes (the same for all videos) and then left the room. When the other character was alone in the room, he, she, or it moved the teddy bear under the other box and left the room. Finally, the first character returned to the room to take the teddy bear. A voiceover narration described the story in each video.

A frequency analysis showed that all children attributed a false belief to the child independent of whether the task was administered in the form of storyboard or video, suggesting that the storyboard narration is equivalent to the video format in terms of task effect. Additionally, all children attributed a false belief also to the robot, independent of the role played by the robot (i.e., making or undergoing the transfer with a human agent or with another robot), suggesting that the robot, like the human, is subject to informational access limitations. Details about video recordings are in Supplementary Material (S1). The videos can be found in Electronic Supplementary Material.

2.7 Strange Stories

We assessed children’s advanced ToM using the Strange Stories task [33, 100], whose Italian translation had already been used in other studies [21, 55]. This task evaluates the ability to make inferences about mental states by interpreting non-literal statements. We selected a subgroup of four mentalistic stories involving double bluffs, misunderstandings, white lies, and persuasion. After reading the stories, we asked the children to explain the characters’ behaviour without a time limit. The experimenter transcribed the children’s answers verbatim. Scoring was based on the general guidelines [100]: 0 for incorrect answers, 1 for partially correct answers, and 2 for full and explicit answers. Two judges independently coded 30% of the responses. The inter-rater agreement was substantial (Cronbach’s Alpha = 0.96). Disagreements were resolved through discussion between judges. Total scores could range from 0 to 8. The analysis of children’s performance on the Strange Stories showed a generally poor performance: children scored very low, ranging from 0 to 3 out of a total possible score of 8.

2.8 Attribution of Mental and Physical States

The Attribution of Mental and Physical States (AMPS) is a measure of states attribution that the child has to make looking at pictures depicting a character. The AMPS is an ad-hoc scale drawn from the questionnaire described in Martini and colleagues [65]. This scale was used to assess what features the subject (the child) ascribed to the interactive agent (another child or the robot) in mental and physical terms. The experimenter first showed the child two pictures depicting a child or a robot and then asked the child—as a control question—what was depicted in the picture (reality question): If the child correctly recognized the character, the test went on; if the child responded incorrectly, the experimenter corrected the child making sure that he or she was fully aware of the agent’s identity and then proceeded with the test. No children failed the reality question; that is, all of the children correctly recognized the child and the robot at first instance.

The presentation order of the two pictures was randomized across children. After presenting the picture and asking the reality question, the experimenter asked the child 25 questions about the agent’s mental states. The child had to respond “Yes” or “No” to each question. The 25 questions were grouped in five different states categories: Epistemic, Emotional, Desires and Intentions, Imaginative, and Perceptive. The total score was the sum of the “Yes” answers (score range 0–25); the five partial scores were the sum of the “Yes” answer for each category (score range 0–5).
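The AMPS scoring described above (five categories of five Yes/No questions each) can be sketched as follows. The category keys and the function `score_amps` are illustrative assumptions; the equal split of five questions per category is inferred from the reported partial score range of 0–5.

```python
from typing import Dict, List

# Category labels follow the text; the identifiers themselves are hypothetical.
AMPS_CATEGORIES = (
    "epistemic",
    "emotional",
    "desires_intentions",
    "imaginative",
    "perceptive",
)
QUESTIONS_PER_CATEGORY = 5  # inferred from 25 questions and partial range 0-5


def score_amps(answers: Dict[str, List[bool]]) -> Dict[str, int]:
    """Count the "Yes" answers (True) per category and overall."""
    scores: Dict[str, int] = {}
    for category in AMPS_CATEGORIES:
        responses = answers[category]
        if len(responses) != QUESTIONS_PER_CATEGORY:
            raise ValueError(f"{category}: expected {QUESTIONS_PER_CATEGORY} answers")
        scores[category] = sum(1 for response in responses if response)
    scores["total"] = sum(scores[category] for category in AMPS_CATEGORIES)
    return scores
```

The function would be called once per agent (child picture and robot picture), giving the two sets of partial scores compared in the analysis.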

2.9 Ultimatum Game

A standard version of the Ultimatum Game (UG; [32]) was used to evaluate fairness. During the game, the child (playing as proposer) could decide how to distribute 10 stickers between him or her and a passive player represented by a graphic representation of either a child (human agent) or a robot. The graphic representation of the other child corresponded to a male figure when the subject was a boy, and to a female figure when the subject was a girl. A graphic representation of an interactive partner has already been used with children during a UG in previous studies (e.g., [16, 60]).

The stickers depicted animals familiar to the children (e.g., dogs, cats, tigers, lions, birds, fish, etc.). The number of stickers that could be offered during the game ranged between 1 and 9. After playing the UG, the child was actually given a final amount of stickers. Children played one round as proposers and five rounds as responders, for a total of six rounds. Playing as proposer, the child could decide how to divide the stickers with the other child or the robot (graphic representation) and, playing as responder, the child could decide whether to accept or refuse the proposed division. In case of acceptance, both players received the respective proposed amounts; in case of refusal, neither player gained anything.

When playing as responder, each round (5 in total) corresponded to a specific type of proposal, ranging from a division of 5–5 (fair) to a division of 1–9 (highly unfair). The order of the rounds was randomized across children. For each round, the child could accept or refuse the offered amount, receiving a score of 1 in case of acceptance and 0 in case of refusal. The total amount accepted, corresponding to the total gain, was also calculated (range 0–15) when the child played as receiver. Similarly, when playing as proposer, the total amount proposed was calculated for each child (range 0–9).
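The responder scoring can be illustrated with a short sketch. It assumes that the five proposals offered the child 5, 4, 3, 2 and 1 stickers respectively; the text does not list the intermediate divisions, but this set is consistent with the stated endpoints (5–5 and 1–9) and with the reported total-gain range of 0–15. The names `RESPONDER_OFFERS` and `score_responder` are hypothetical.

```python
from typing import Dict, Tuple

# Assumed amounts offered to the child in the five responder rounds.
RESPONDER_OFFERS = (5, 4, 3, 2, 1)


def score_responder(accepted: Dict[int, bool]) -> Tuple[int, int]:
    """Return (number of acceptances, total gain in stickers).

    `accepted` maps each offered amount to True (accepted) or False (refused).
    A refused offer contributes nothing, as in the UG rules.
    """
    if set(accepted) != set(RESPONDER_OFFERS):
        raise ValueError("expected one decision per offered amount")
    n_accepted = sum(1 for offer in RESPONDER_OFFERS if accepted[offer])
    total_gain = sum(offer for offer in RESPONDER_OFFERS if accepted[offer])
    return n_accepted, total_gain
```

For instance, a child who refuses only the two most unfair offers (2 and 1 stickers) would obtain 3 acceptances and a total gain of 12 stickers under these assumptions.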

2.9.1 UG Procedure

Before starting the game, the experimenter explained the game rules presenting four different examples in a graphic format: (1) an adult playing as proposer with a child, who accepted the offer; (2) an adult playing as proposer with a child, who refused the offer; (3) a robot playing as proposer with a child, who accepted the offer; (4) a robot playing as proposer with a child, who refused the offer.

During the game, the researcher played “the other child’s/robot’s” role, proposing, accepting, or refusing offers. The children’s performance at the Ultimatum Game was evaluated in a single session lasting approximately 10 min.

At the end of the game, children were asked why they had made a certain offer (proposer) or accepted/refused a certain proposal (receiver). The children’s answers were classified according to three criteria: (1) outcome: the justification refers solely to the amount offered or received without commenting on or referring to the other player in mental terms (e.g., “because I like that he does not have anything and I have them all”; “because I wanted to win”); (2) equity: the justification includes terms that refer explicitly to the construct of equity, independent of whether the amount offered or received was equal or unequal (e.g., “because in this way we are even”; “because it was nicer to give him three and I like to share them”); (3) mentalistic: the justification includes terms that refer to the other player in mental terms (e.g., “because he would like me to receive more”; “he believed that I would win”) (Footnote 1).

3 Results and Discussion

All of the continuous variables were normally distributed with skewness between − 1 and 1.

3.1 AMPS

With this analysis, we compared the attribution of states to the human agent (HA; the other child) and to the robot (RB). Inspection of the boxplots revealed a tendency towards maximum scores (5) for states attribution to the human agent, particularly with respect to the perceptive state, where we observed a ceiling effect, and a normal distribution for states attribution to the robot. A repeated measures general linear model (GLM) was carried out with five levels of state (epistemic, emotional, desires and intentions, imaginative, perceptive) and two levels of agency (human vs. robot) as the within-subject factors. The results showed a main effect of state (F(4, 120) = 5.94, p < 0.0001, partial η² = 0.17, δ = 0.98), a main effect of agency (HA > RB; F(1, 30) = 53.82, p < 0.0001, partial η² = 0.64, δ = 0.1), and a significant state × agency interaction (F(4, 120) = 7.90, p < 0.0001, partial η² = 0.21, δ = 0.1). Post hoc analyses (Bonferroni corrected) showed that desires and intentions scored significantly higher than both epistemic (Mdiff = 0.69, p < 0.01) and imaginative (Mdiff = 0.52, p < 0.05) states; the perceptive state scored significantly higher than the imaginative state (Mdiff = 0.61, p < 0.01). Additionally, the analyses showed that the interaction effect stemmed primarily from differences between states within each agency (HA, RB). More specifically, with respect to states attribution to HA, the epistemic state scored higher than the imaginative state (Mdiff = 0.58, p < 0.01), and the perceptive state scored higher than all the other states (p < 0.05) except for the epistemic state, for which the difference just failed to reach significance (Mdiff = 0.36, p = 0.055). With respect to states attribution to RB, the results showed that desires and intentions received higher scores compared to epistemic and imaginative states (Mdiff = 1.23, Mdiff = 1.00, respectively; p < 0.001). These results are summarized in Fig. 1.
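As a quick consistency check on the effect sizes reported above, partial η² can be recovered from an F ratio and its degrees of freedom using the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies this identity to the three reported F tests; the function name is illustrative, and the calculation is not taken from the original analysis pipeline.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    if df_effect <= 0 or df_error <= 0 or f_value < 0:
        raise ValueError("F must be non-negative and both df positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Reported tests: main effect of state, main effect of agency, interaction.
for label, f_value, df1, df2 in [
    ("state", 5.94, 4, 120),
    ("agency", 53.82, 1, 30),
    ("state x agency", 7.90, 4, 120),
]:
    print(label, round(partial_eta_squared(f_value, df1, df2), 2))
# prints: state 0.17, agency 0.64, state x agency 0.21
```

The recomputed values match the partial η² values reported for the three effects.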

The results on the attribution of states to HA and RB clearly showed that children perceived the human agent as having greater epistemic and emotional states, intentions and desires, imagination, and perceptive properties than the robot, therefore considering the latter as a distinct entity (see [24]). Children tended to ascribe poor imagination to both the child and the robot, in line with evidence showing that young children’s imagination abilities mature gradually [34, 35, 36, 56, 57]. Interestingly, children tended to ascribe desires and intentions also to the robot, although to a significantly lesser extent than to the human agent. This finding is congruent with evidence showing young children’s tendency to ascribe intention also to non-living things [81]. This tendency could have been enhanced in this study because, during the ToM videos (see Sect. 2), children observed the robot performing real actions. In this respect, also from a neurophysiological perspective, movement and intentions go hand in hand ([40, 83]; see also [22, 86]), as also suggested by psychophysiological evidence with children [20]. All the same, while attributing intentions to the robot, children ascribed to the robot a low ability to think, understand, and decide (epistemic attribution). Although apparently contradictory, these results are in line with the idea of animism introduced above, namely with young children’s tendency to attribute a living soul to plants, inanimate objects, and natural phenomena, while being aware that these entities are non-living (see [52, 77, 92]).

3.2 Ultimatum Game

The main aim of the present study was to assess whether preschool children behave differently when interacting with a human agent or with a robot. We therefore had children play the UG with either another child or the robot, as both proposers and receivers.

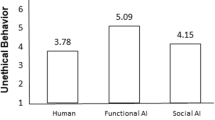

3.2.1 Proposer

A t-test comparing the total amount offered to the other child and to the robot when the participant played as proposer showed no significant difference (HA: M = 3.71, SE = 0.31; RB: M = 3.55, SE = 0.35; p > 0.05). Reinforcing this result, we found high internal consistency (Cronbach’s α = 0.85) between each child’s offers to the human agent and to the robot. Table 1 reports children’s proposed divisions to HA and RB.

These results are in line with the literature (e.g., [28]) showing that pre-schoolers tend to make offers that favour their own gain, although the proportion of children who made offers close to fairness (i.e., ranging between 4–6 and 6–4) was quite substantial (68% towards HA; 55% towards RB). Additionally, these results extend previous findings by showing that 5-year-old children’s behaviour and game strategy are consistent regardless of whether they play with another child or with a robot, in line with the large body of existing literature on adults introduced above.
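
The internal consistency index reported for the proposer role, Cronbach’s α, follows the formula α = k / (k − 1) × (1 − Σ item variances / variance of the summed scores), where k is the number of items (here, the two partners). The Python sketch below illustrates the formula only. The offers are made-up values, not the study’s data, and the function and variable names are hypothetical.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items, each a list of per-child scores."""
    k = len(items)
    # Sum of the sample variances of the individual items.
    item_variances = sum(variance(scores) for scores in items)
    # Total score of each child across all items.
    totals = [sum(per_child) for per_child in zip(*items)]
    return (k / (k - 1)) * (1 - item_variances / variance(totals))


# Hypothetical offers (out of 10) made by six children to each partner.
offers_to_child = [5, 4, 3, 5, 2, 4]
offers_to_robot = [5, 4, 2, 5, 3, 4]

alpha = cronbach_alpha([offers_to_child, offers_to_robot])
print(f"Cronbach's alpha = {alpha:.2f}")
```

With only two items, α depends directly on how closely each child’s two offers track one another, which is why it can serve as an index of consistency across partners.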

3.2.2 Receiver

For this analysis, we summed all acceptances made when children played as receivers, thereby calculating the total amount gained by each child. A t-test comparing the total acceptances from the other child and from the robot showed no difference in the total amount accepted as a function of agency (t = 0.75, p > 0.05), indicating that children accepted similar amounts when playing with HA (M = 10.97, SE = 0.77) and with RB (M = 10.45, SE = 0.95). This result is congruent with the data described above, showing children’s tendency to adopt a similar game strategy when playing with the other child or with the robot.

A comparison of children’s acceptances of each proposed division from HA and RB confirmed no differences in the frequency of acceptances as a function of agency within division (for statistics, see Table 2). Additionally, when comparing the frequency of acceptances between successive divisions (i.e., 5–5 vs. 6–4; 6–4 vs. 7–3; etc.) for both HA and RB, the Wilcoxon test showed no significant differences for the child (p > 0.05), whereas we observed significant differences for the robot. More precisely, acceptances of the 6–4 division were significantly lower than acceptances of the 5–5 division (71% vs. 90%, p < 0.01), and acceptances of the 7–3 division were significantly lower than those of the 6–4 division (52% vs. 71%, p < 0.01). This latter result shows a significant, although minor, tendency of children to accept slightly unfair offers more often from another child than from the robot.

3.3 Correlations

Pearson’s correlations were computed between the total UG scores obtained when children played with the other child and with the robot, as both proposer and receiver. The results showed, as expected, a substantial correlation between the total amounts proposed to the other child and to the robot (r² = 0.56, p < 0.01), as well as a strong correlation between the total amounts accepted from the other child and from the robot (r² = 0.49, p < 0.01). Additionally, UG scores were correlated with demographic, cognitive, and socio-economic variables, including age (months), the child’s gender, and scores on working memory, the inhibition task, the intentionality test, and the Family Affluence Scale. These correlations are summarized in Table 3. In general, no significant correlations were observed between the UG scores and any of these variables (p > 0.05).
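
Because the coefficients above are reported as squared values, the corresponding correlations follow by taking the square root, giving roughly 0.75 and 0.70 if the correlations are positive, as the text implies. The Python sketch below shows how Pearson’s r and its square are obtained from paired scores. The paired totals are made-up values, not the study’s data, and the names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products of deviations and sums of squared deviations.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical total offers made by six children to each partner.
offers_to_child = [5, 4, 3, 5, 2, 4]
offers_to_robot = [5, 4, 2, 5, 3, 4]

r = pearson_r(offers_to_child, offers_to_robot)
print(f"r = {r:.2f}, r squared = {r * r:.2f}")

# Converting the reported squared coefficients back to r (positive sign assumed).
for r_squared in (0.56, 0.49):
    print(f"r squared = {r_squared:.2f} -> r = {math.sqrt(r_squared):.2f}")
```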

3.4 Analysis of Children’s Justifications at the UG

After the child played the UG as both proposer and receiver, the experimenter asked him or her to explain the reasons for his or her division. The children’s answers were classified as “outcome”, “equity”, or “mentalistic” (for details, see Sect. 2). Two independent judges evaluated the children’s justifications.

3.4.1 Proposer

The inter-rater reliability scores were substantial both when assessing children’s justifications when playing with the other child (Cronbach’s Alpha = 0.88), and when playing with the robot (Cronbach’s Alpha = 0.91).

An analysis of the frequencies of children’s justifications when making an offer (proposer) showed an even distribution of justification types (amount, equity) when children offered an exactly fair amount (5–5 division), whereas the distribution was skewed toward “amount” when children began making offers that privileged their own gain. This pattern was independent of whether children played with HA or RB. A summary of the frequency distribution of justification types for each proposed division is presented in Table 4(A).

3.4.2 Receiver

The inter-rater reliability scores were calculated for each proposed division (5–5; 6–4; 7–3; 8–2; 9–1) when children played as receivers. The results showed substantial agreement on children’s justifications of acceptances/refusals when interacting with the other child (mean Cronbach’s Alpha = 0.83; min = 0.82, max = 0.84) and with the robot (mean Cronbach’s Alpha = 0.83; min = 0.80, max = 0.84).

By comparing the frequency of justification types when children accepted or refused the proposed divisions, we found that, when receiving a 5–5 division, justifications were either based on “outcome” (HA: N = 13; RB: N = 14) or on “equity” (HA: N = 18; RB: N = 16); only one child presented a mentalization-based justification when interacting with the robot. When faced with a 6–4 division, the majority of children (HA: N = 26; RB: N = 28) presented a justification based on “outcome”, independent of acceptance or refusal; five children presented a justification based on “equity” and none based on “mentalization” when interacting with the other child; only one child presented a justification based on “equity” and two children a justification based on “mentalization” when interacting with the robot. A summary of the frequency distribution and statistics of acceptances and refusals of each proposed division and justification type is presented in Table 4(B). In general, these results suggest that, when children were offered a division skewed towards unfairness, the equity- and mentalization-based justifications decreased substantially (χ²(4) = 16.47, p < 0.001), in favour of the outcome-based justification.

The analysis of children’s justifications for their behaviour, when playing both as proposers and as receivers, indicates that children tend to justify fair (and hyperfair) divisions either in terms of outcome or in terms of equity-based reasoning, with an approximately equal split. When the division starts skewing towards unfairness, even slightly (i.e., 6–4 division), the justifications shift markedly towards outcome-based reasoning. It is noteworthy that mentalistic justifications were very rare, if not entirely absent, in all proposed scenarios. This general pattern was observed both when children played with the other child and with the robot, indicating no differences in the type of reasoning underlying specific choices as a function of agency.

On the whole, the UG results suggest that children aged 5 years behave quite fairly, while showing a tendency to favour their own gain by making and accepting unfair (and even very unfair) divisions, in line with the current literature describing pre-schoolers’ behaviour during the UG (see, for example, [60]). The lack of behavioural differences as a function of agency (HA vs. RB) further indicates that children at this age tend to disregard the other’s mind when making choices, following a very consistent strategy throughout the game on the basis of other criteria (e.g., tendency to maximise gains) or personal traits (e.g., disposition to fairness). This is particularly notable given that the robot was indeed considered a different entity from the human agent, as shown by the analysis of state attribution described above. This idea of self-centredness is further supported by the analysis of children’s justifications for their offers (proposer) and acceptances/refusals (receiver). In fact, we found an even distribution of justification types (amount, equity) when children offered or accepted a fair amount both to/from another child and to/from the robot, whereas justifications were markedly skewed towards outcome when children offered or accepted/refused unfair divisions. These latter results are further discussed below.

4 General Discussion

This study investigated 5-year-olds’ behaviour at the Ultimatum Game. The aim was to compare young children’s game strategy when playing with a human or robotic agent. The results showed that children’s game strategy was very similar when they played with another child and with a robot, independent of the role played (proposer or receiver). Children were quite conservative both when offering and accepting proposals, that is, they generally tended to offer and accept fair divisions (5–5), although almost half of the children also proposed and accepted unfair divisions, ranging from 6–4 to 9–1. These results are in line with the developmental literature on the UG. For example, Benenson and colleagues [6] observed that children aged 4 years donated less than 6- and 9-year-olds, thus showing that sensitivity to fairness increases with age. Furthermore, a study by Lombardi and colleagues [60] showed that, at 6 years, children were more selfish than children aged 8 and 10 years, further highlighting that younger children tend to maximize their gain, also accepting unfair offers.

Importantly for the present purpose, no significant differences were observed between offers made to or received from the other child or the robot. In this respect, children’s behaviour was comparable to adults’ behaviour as reported in several studies. Terada and Takeuchi [92], for example, showed that, when playing the UG, adult participants took approximately the same time to decide how to distribute goods between a human and a robot. In the study by Kahn and colleagues [47], the frequency of acceptances and refusals became closer to that toward a human when the robot had more human-like features. Similar results were also found by Rosenthal-von der Pütten and Krämer [85], on the whole suggesting that, when bargaining, strategic thinking does not substantially change if playing with a human or a robotic agent.

Although behaviour in the present study was also comparable when children played with another child or with the robot, it is worth noting the significant, although minor, tendency of children to accept slightly unfair offers more often from another child than from the robot when they played as receivers. In this respect, one may suggest that children expect a robot to be a totally fair player. Accordingly, divergence from this initial expectation could be less acceptable than in situations in which children played with a human peer, whose behaviour could plausibly be expected to be unfair at times. Alternatively, though not mutually exclusively, one may suggest that children believe they have a distinctive status compared to robots, which, as assessed through the AMPS, are perceived as different entities with respect to children at all levels: epistemic, emotional, imaginative, and perceptual. In this light too, unfair offers would be less acceptable when made by a robot than by a human peer.

Finally, with respect to the analysis of children’s justifications at the UG, it is interesting to observe that the reasons provided by children for their behaviour, both when playing as proposers and as receivers, suggest that children aged 5 years tend to consider fair only a division that is exactly equal (5–5). A division skewed even slightly towards unfairness (i.e., 6–4) already falls within the category that characterizes very unfair proposals, regardless of whether the proposals are made or received. Additionally, it is noteworthy that fair or slightly hyperfair divisions were typically justified in either outcome or equity terms, whereas unfair offers were mostly justified in terms of quantity. Mentalization-based justifications were rare for all divisions, although it was interesting to observe that, when children played as proposers, mentalization-based justifications were confined to the fairest divisions (4–6; 5–5; 6–4). In general, these considerations may suggest that children, particularly in the case of unfair offers, tend not to use mentalization-based explanations in order to reduce the distress stemming from the discrepancy between a “socially-expected” equal behaviour and the actual “selfish” behaviour. This interpretation, which highlights a moral conflict, can be further extended to the outcome-based justifications predominantly used to explain unfair offers. In fact, using a “cold” justification for morally unacceptable behaviour may, in a certain sense, serve to resolve a social cognitive conflict (e.g., [3]), ultimately reducing the distress caused by the experimenter’s explicit request to provide a justification.

5 Concluding Remarks and Limitations of the Study

This study highlights the use of artificial agents as “stimuli” to evoke and analyse human social abilities by investigating the development of psychological processes. In particular, we showed for the first time that young children’s fairness is not affected by the partner’s agency since, as it appears and in line with previous results, 5-year-old children’s decisions during social interactions are self-centred and poorly oriented towards the other’s mind. Future studies could address the same issue at different ages to highlight developmental changes in behaviour in older children, when higher mentalistic abilities have matured. In this respect, it is worth noting the importance of considering age in human–robot interaction, since the quality of the interaction with a robotic agent may change significantly depending on whether the partner is a child or an adult. Additionally, we showed that the analysis of children’s justifications for their behaviour may be enlightening with respect to children’s actual psychological understanding of constructs such as fairness.

It is important to stress that the present results need to be confirmed with a larger sample, which would increase statistical power. Also, we cannot be sure whether using different living or non-living entities (e.g., an animal, a chair with drawn eyes, etc.) would produce results different from those presented here for the robot. Future studies could therefore use additional agents and eventually address online, in vivo interactions with the robot in order to support the present findings. On the whole, and as also remarked by Kompatsiari et al. [53], findings of this kind may be relevant to human–robot interaction research aimed at developing the social behaviour of artificial agents, as well as to research studying the mechanisms of social cognition.

Notes

Ad verbatim translation from Italian.

References

Argote L, Goodman PS, Schkade D (1983) The human side of robotics: how workers react to a robot. In: Husband TH (ed) International trends in manufacturing technology. Springer, New York, pp 19–32

Asada M, MacDorman KF, Ishiguro H, Kuniyoshi Y (2001) Cognitive developmental robotics as a new paradigm for the design of humanoid robots. Rob Auton Syst 37(2):185–193. https://doi.org/10.1016/S0921-8890(01)00157-9

Bandura A (1991) Social cognitive theory of moral thought and action. In: Kurtines WM, Gewirtz J, Lamb JL (eds) Handbook of moral behavior and development, vol 1. Psychology Press, London, pp 45–103

Baron-Cohen S (1991) Precursors to a theory of mind: understanding attention in others. In: Whiten A (ed) Natural theories of mind: evolution, development and simulation of everyday mindreading. Blackwell, Oxford, pp 233–251

Bartneck C, Forlizzi J (2004) A design-centred framework for social human–robot interaction. In: 13th IEEE international workshop on robot and human interactive communication. IEEE, pp 591–594. https://doi.org/10.1109/roman.2004.1374827

Benenson JF, Pascoe J, Radmore N (2007) Children’s altruistic behavior in the dictator game. Evol Hum Behav 28(3):168–175. https://doi.org/10.1016/j.evolhumbehav.2006.10.003

Beran TN, Ramirez-Serrano A, Kuzyk R, Fior M, Nugent S (2011) Understanding how children understand robots: perceived animism in child–robot interaction. Int J Hum Comput Stud 69(7):539–550. https://doi.org/10.1016/j.ijhcs.2011.04.003

Boucenna S, Anzalone S, Tilmont E, Cohen D, Chetouani M (2014) Learning of social signatures through imitation game between a robot and a human partner. IEEE Trans Auton Ment Dev 6(3):213–225. https://doi.org/10.1109/TAMD.2014.2319861

Boucenna S, Cohen D, Meltzoff AN, Gaussier P, Chetouani M (2016) Robots learn to recognize individuals from imitative encounters with people and avatars. Sci Rep 6:19908. https://doi.org/10.1038/srep19908

Boyce W, Torsheim T, Currie C, Zambon A (2006) The family affluence scale as a measure of national wealth: validation of an adolescent self-report measure. Soc Indic Res 78(3):473–487. https://doi.org/10.1007/s11205-005-1607-6

Breazeal C (2003) Toward sociable robots. Rob Auton Syst 42(3):167–175. https://doi.org/10.1016/S0921-8890(02)00373-1

Breazeal C, Harris PL, DeSteno D, Westlund K, Jacqueline M, Dickens L, Jeong S (2016) Young children treat robots as informants. Top Cogn Sci 8(2):481–491. https://doi.org/10.1111/tops.12192

Brooks R, Meltzoff AN (2002) The importance of eyes: how infants interpret adult looking behavior. Dev Psychol 38(6):958–966. https://doi.org/10.1037//0012-1649.38.6.958

Camaioni L, Perucchini P, Bellagamba F, Colonnesi C (2004) The role of declarative pointing in developing a theory of mind. Infancy 5(3):291–308. https://doi.org/10.1207/s15327078in0503_3

Camerer CF (2011) Behavioral game theory: experiments in strategic interaction. Princeton University Press, Princeton

Castelli I, Massaro D, Sanfey AG, Marchetti A (2010) Fairness and intentionality in children’s decision-making. Int Rev Econ 57(3):269–288. https://doi.org/10.1007/s12232-010-0101-x

Castelli I, Massaro D, Sanfey AG, Marchetti A (2014) “What is fair for you?” Judgments and decisions about fairness and theory of mind. Eur J Dev Psychol 11(1):49–62. https://doi.org/10.1080/17405629.2013.806264

Castelli I, Massaro D, Bicchieri C, Chavez A, Marchetti A (2014) Fairness norms and theory of mind in an Ultimatum Game: judgments, offers, and decisions in school-aged children. PLoS ONE 9(8):e105024. https://doi.org/10.1371/journal.pone.0105024

Castelli I, Massaro D, Sanfey AG, Marchetti A (2017) The more I can choose, the more I am disappointed: the “illusion of control” in children’s decision-making. Open Psychol J 10(1):55–60. https://doi.org/10.2174/1874350101710010055

Cattaneo L, Fabbri-Destro M, Boria S, Pieraccini C, Monti A, Cossu G, Rizzolatti G (2007) Impairment of actions chains in autism and its possible role in intention understanding. Proc Natl Acad Sci USA 104(45):17825–17830. https://doi.org/10.1073/pnas.0706273104

Cavallini E, Lecce S, Bottiroli S, Palladino P, Pagnin A (2013) Beyond false belief: theory of mind in young, young-old, and old-old adults. Int J Aging Hum Dev 76:181–198. https://doi.org/10.2190/AG.76.3.a

Chaminade T, Zecca M, Blakemore SJ, Takanishi A, Frith CD, Micera S, Dario P, Rizzolatti G, Gallese V, Umiltà MA (2010) Brain response to a humanoid robot in areas implicated in the perception of human emotional gestures. PLoS ONE 5(7):e11577. https://doi.org/10.1371/journal.pone.0011577

Charman T, Baron-Cohen S, Swettenham J, Baird G, Cox A, Drew A (2000) Testing joint attention, imitation, and play as infancy precursors to language and theory of mind. Cogn Dev 15(4):481–498. https://doi.org/10.1016/S0885-2014(01)00037-5

Di Dio C, Isernia S, Ceolaro C, Marchetti A, Massaro D (2018) Growing up thinking of God’s beliefs: theory of mind and ontological knowledge. Sage Open 1:1–14. https://doi.org/10.1177/2158244018809874

Di Salvo CF, Gemperle F, Forlizzi J, Kiesler S (2002) All robots are not created equal: the design and perception of humanoid robot heads. In: Proceedings of the 4th conference on designing interactive systems: processes, practices, methods, and techniques, ACM, pp 321–326. https://doi.org/10.1145/778712.778756

European Union: European Parliament: European Parliament resolution of 16 February 2017 with recommendations to the Commission on Civil Law Rules on Robotics [2015/2103(INL)]

Fehr E, Schmidt KM (1999) A theory of fairness, competition, and cooperation. Q J Econ 114(3):817–868. https://doi.org/10.1162/003355399556151

Fehr E, Bernhard H, Rockenbach B (2008) Egalitarianism in young children. Nature 454(7208):1079–1083. https://doi.org/10.1038/nature07155

Fiore SM, Wiltshire TJ, Lobato EJ, Jentsch FG, Huang WH, Axelrod B (2013) Toward understanding social cues and signals in human–robot interaction: effects of robot gaze and proxemic behavior. Front Psychol 4:859. https://doi.org/10.3389/fpsyg.2013.00859

Fogassi L, Ferrari PF, Gesierich B, Rozzi S, Chersi F, Rizzolatti G (2005) Parietal lobe: from action organization to intention understanding. Science 308(5722):662–667. https://doi.org/10.1126/science.1106138

Güth W, Kocher MG (2014) More than thirty years of ultimatum bargaining experiments: motives, variations, and a survey of the recent literature. J Econ Behav Organ 108:396–409. https://doi.org/10.1016/j.jebo.2014.06.006

Güth W, Schmittberger R, Schwarze B (1982) An experimental analysis of ultimatum bargaining. J Econ Behav Organ 3(4):367–388. https://doi.org/10.1016/0167-2681(82)90011-7

Happé FG (1994) An advanced test of theory of mind: understanding of story characters’ thoughts and feelings by able autistic, mentally handicapped, and normal children and adults. J Autism Dev Disord 24(2):129–154. https://doi.org/10.1007/BF02172093

Harris PL (2000) The work of the imagination. Blackwell Publishing, Oxford

Harris PL, Leevers H (2000) Pretending, imagery and self-awareness in autism. In: Baron-Cohen S, Tager-Flusberg H, Cohen DJ (eds) Understanding other minds: perspectives from developmental cognitive neuroscience. Oxford University Press, New York, pp 182–202

Harris PL, Kavanaugh RD, Meredith MC (1994) Young children’s comprehension of pretend episodes: the integration of successive actions. Child Dev 65(1):16–30. https://doi.org/10.1111/j.1467-8624.1994.tb00731.x

Hoffman E, McCabe K, Smith V (2000) The impact of exchange context on the activation of equity in Ultimatum Games. Exp Econ 3(1):5–9. https://doi.org/10.1007/BF01669204

Hoffman G, Ju W (2014) Designing robots with movement in mind. J Hum Robot Interact 3(1):89–122. https://doi.org/10.5898/JHRI.3.1.Hoffman

House BR, Silk JB, Henrich J, Barrett HC, Scelza BA, Boyette AH, Hewlett BS, McElreath R, Laurence S (2013) Ontogeny of prosocial behavior across diverse societies. Proc Natl Acad Sci USA 110(36):14586–14591. https://doi.org/10.1073/pnas.1221217110

Ishiguro H, Ono T, Imai M, Kanda T (2003) Development of an interactive humanoid robot “Robovie”—an interdisciplinary approach. In: Jarvis RA, Zelinsky A (eds) Robotics research. Springer tracts in advanced robotics, vol 6. Springer, Berlin, pp 179–191. https://doi.org/10.1007/3-540-36460-9_12

Ishiguro H, Ono T, Imai M, Maeda T, Kanda T, Nakatsu R (2001) Robovie: an interactive humanoid robot. Ind Robot Int J 28(6):498–504. https://doi.org/10.1108/01439910110410051

Itakura S (2008) Development of mentalizing and communication: from viewpoint of developmental cybernetics and developmental cognitive neuroscience. IEICE Trans Commun E91-B(7):2109–2117. https://doi.org/10.1093/ietcom/e91-b.7.2109

Itakura S (2012) Understanding infants’ mind through a robot: challenge of developmental cybernetics. In: 22nd Biennial meeting of international society for the study of behavioural development, Edmonton, Canada

Itakura S (2013) Mind in non-human agents: challenge of developmental cybernetics. In: 18th Biennial conference of Australian human development, Gold Coast, Australia

Itakura S, Ishida H, Kanda T, Shimada Y, Ishiguro H, Lee K (2008) How to build an intentional android: infants’ imitation of a robot’s goal-directed actions. Infancy 13(5):519–532. https://doi.org/10.1080/15250000802329503

Itakura S, Okanda M, Moriguchi Y (2008) Discovering mind: development of mentalizing in human children. In: Itakura S, Fujita K (eds) Origins of the social mind: evolutionary and developmental views. Springer, Tokyo, pp 179–198. https://doi.org/10.1007/978-4-431-75179-3_9

Kahn PH Jr, Kanda T, Ishiguro H, Freier NG, Severson RL, Gill BT, Ruckert JH, Shen S (2012) “Robovie, you’ll have to go into the closet now”: children’s social and moral relationships with a humanoid robot. Dev Psychol 48(2):303–314. https://doi.org/10.1037/a0027033

Kanda T, Hirano T, Eaton D, Ishiguro H (2004) Interactive robots as social partners and peer tutors for children: a field trial. Int J Hum Comput Interact 19(1):61–84. https://doi.org/10.1207/s15327051hci1901&2_4

Kanda T, Shimada M, Koizumi S (2012) Children learning with a social robot. In: HRI ‘12 proceedings of the seventh annual ACM/IEEE international conference on human–robot interaction. IEEE, pp 351–358. https://doi.org/10.1145/2157689.2157809

Kang JW, Hong HS, Kim BS, Chung MJ (2008) Assistive mobile robot systems helping the disabled workers in a factory environment. Int J Assist Robot Mechatron 9:42–52. https://doi.org/10.5772/5155

Kanngiesser P, Itakura S, Zhou Y, Kanda T, Ishiguro H, Hood B (2015) The role of social eye-gaze in children’s and adults’ ownership attributions to robotic agents in three cultures. Interact Stud 16(1):1–28. https://doi.org/10.1075/is.16.1.01kan

Katayama N, Katayama JI, Kitazaki M, Itakura S (2010) Young children’s folk knowledge of robots. Asian Cult Hist 2(2):111. https://doi.org/10.5539/ach.v2n2p111

Kompatsiari K, Tikhanoff V, Ciardo F, Metta G, Wykowska A (2017) The importance of mutual gaze in human–robot interaction. In: International conference on social robotics 2017. Springer, Cham, pp 443–452. https://doi.org/10.1007/978-3-319-70022-9_44

Korkman M, Kirk U, Kemp S (2007) NEPSY-II: a developmental neuropsychological assessment. The Psychological Corporation, San Antonio

Lecce S, Zocchi S, Pagnin A, Palladino P, Taumoepeau M (2010) Reading minds: the relation between children’s mental state knowledge and their metaknowledge about reading. Child Dev 81:1876–1893. https://doi.org/10.1111/j.1467-8624.2010.01516.x

Lillard AS (1994) Making sense of pretence. In: Lewis C, Mitchell P (eds) Children’s early understanding of mind: origins and development. Lawrence Erlbaum, Hillsdale, pp 211–234

Lillard AS (2013) Fictional world, the neuroscience of the imagination, and childhood education. In: Taylor M (ed) The Oxford handbook of the development of imagination. Oxford University Press, New York, pp 137–160. https://doi.org/10.1093/oxfordhb/9780195395761.013.0010

Lin P, Abney K, Bekey GA (2011) Robot ethics: the ethical and social implications of robotics. MIT Press, Cambridge

Lombardi E, Greco S, Massaro D, Schär R, Manzi F, Iannaccone A, Perret-Clermont AN, Marchetti A (2018) Does a good argument make a good answer? Argumentative reconstruction of children’s justifications in a second order false belief task. Learn Cult Soc Interact 18:13–27. https://doi.org/10.1016/j.lcsi.2018.02.001

Lombardi E, Di Dio C, Castelli I, Massaro D, Marchetti A (2017) Prospective thinking and decision making in primary school age children. Heliyon 3(6):e00323. https://doi.org/10.1016/j.heliyon.2017.e00323

Manzi F, Massaro D, Kanda T, Tomita K, Itakura S, Marchetti A (2017) Teoria della Mente, bambini e robot: l’attribuzione di stati mentali. In: XXX Congresso AIP Sezione di Psicologia dello Sviluppo e dell’Educazione, Messina, Italy

Marchetti A, Castelli I, Harlè K, Sanfey A (2011) Expectations and outcome: the role of proposer features in the Ultimatum Game. J Econ Psychol 32:446–449. https://doi.org/10.1016/j.joep.2011.03.009

Marchetti A, Castelli I, Sanfey A (2008) Teoria della Mente e decisione in ambito economico: un contributo empirico. In: Antonietti A, Balconi M (eds) Mente ed economia Come psicologia e neuroscienze spiegano il comportamento economico. Il Mulino, Bologna, pp 191–207

Marchetti A, Manzi F, Itakura S, Massaro D (2018) Theory of mind and humanoid robots from a lifespan perspective. Z Psychol 226:98–109. https://doi.org/10.1027/2151-2604/a000326

Martini MC, Gonzalez CA, Wiese E (2016) Seeing minds in others-can agents with robotic appearance have human-like preferences? PLoS ONE 11(1):e0146310. https://doi.org/10.1371/journal.pone.0146310

Massaro D, Castelli I, Manzi F, Lombardi E, Marchetti A (2017) Decision making as a complex psychological process. Bildung und Erziehung 70(1):17–32. https://doi.org/10.7788/bue-2017-0104

Meltzoff AN, Gopnik A (1993) The role of imitation in understanding persons and developing a theory of mind. In: Baron-Cohen S, Tager-Flusberg H, Cohen DJ (eds) Understanding other minds: perspectives from autism. Oxford University Press, New York, pp 335–366

Meltzoff AN, Brooks R (2001) “Like me” as a building block for understanding other minds: bodily acts, attention, and intention. In: Malle BF, Moses LJ, Baldwin DA (eds) Intentions and intentionality: foundations of social cognition. MIT Press, Cambridge, pp 171–192

Meltzoff AN, Brooks R, Shon AP, Rao RP (2010) “Social” robots are psychological agents for infants: a test of gaze following. Neural Netw 23(8):966–972. https://doi.org/10.1016/j.neunet.2010.09.005

Minato T, Shimada M, Itakura S, Lee K, Ishiguro H (2006) Evaluating the human likeness of an android by comparing gaze behaviors elicited by the android and a person. Adv Robot 20(10):1147–1163. https://doi.org/10.1163/156855306778522505

Moriguchi Y, Matsunaka R, Itakura S, Hiraki K (2012) Observed human actions, and not mechanical actions, induce searching errors in infants. Child Dev Res 2012:1–5. https://doi.org/10.1155/2012/465458

Morita TP, Slaughter V, Katayama N, Kitazaki M, Kakigi R, Itakura S (2012) Infant and adult perceptions of possible and impossible body movements: an eye-tracking study. J Exp Child Psychol 113:401–414. https://doi.org/10.1016/j.jecp.2012.07.003

Mutlu B, Forlizzi J, Hodgins J (2006) A storytelling robot: modeling and evaluation of human-like gaze behavior. In: 6th IEEE-RAS international conference on humanoid robots. IEEE, pp 518–523. https://doi.org/10.1109/ichr.2006.321322

Nishio S, Ogawa K, Kanakogi Y, Itakura S, Ishiguro H (2012) Do robot appearance and speech affect people’s attitude? Evaluation through the Ultimatum Game. In: RO-MAN, 2012 IEEE. IEEE, pp 809–814. https://doi.org/10.1109/roman.2012.6343851

Nitsch V, Glassen T (2015) Investigating the effects of robot behavior and attitude towards technology on social human-robot interactions. In: 24th IEEE international symposium on robot and human interactive communication. IEEE, pp 535–540. https://doi.org/10.1109/roman.2015.7333560

Okanda M, Kanda T, Ishiguro H, Itakura S (2013) Three-and 4-year-old children’s response tendencies to various interviewers. J Exp Child Psychol 116(1):68–77. https://doi.org/10.1016/j.jecp.2013.03.012

Okumura Y, Kanakogi Y, Kanda T, Ishiguro H, Itakura S (2013) Infants understand the referential nature of human gaze but not robot gaze. J Exp Child Psychol 116(1):86–95. https://doi.org/10.1016/j.jecp.2013.02.007

Okumura Y, Kanakogi Y, Kobayashi T, Itakura S (2017) Individual differences in object-processing explain the relationship between early gaze-following and later language development. Cognition 166:418–424. https://doi.org/10.1016/j.cognition.2017.06.005

Park HW, Gelsomini M, Lee JJ, Breazeal C (2017) Telling stories to robots: the effect of backchanneling on a child’s storytelling. In: Proceedings of the 2017 ACM/IEEE international conference on human–robot interaction. ACM, pp 100–108. https://doi.org/10.1145/2909824.3020245

Perner J, Wimmer H (1985) “John thinks that Mary thinks that…” attribution of second-order beliefs by 5- to 10-year-old children. J Exp Child Psychol 39(3):437–471. https://doi.org/10.1016/0022-0965(85)90051-7

Piaget J (1929) The child’s conception of the world. Routledge, London

Premack D, Woodruff G (1978) Does the chimpanzee have a theory of mind? Behav Brain Sci 1(4):515–526. https://doi.org/10.1017/S0140525X00076512

Rizzolatti G, Fogassi L, Gallese V (2001) Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci 2(9):661–670. https://doi.org/10.1038/35090060

Robins B, Dautenhahn K, Boekhorst RT, Billard A (2005) Robotic assistants in therapy and education of children with autism: can a small humanoid robot help encourage social interaction skills? Univ Access Inf Soc 4(2):105–120. https://doi.org/10.1007/s10209-005-0116-3

Rosenthal-von der Pütten AM, Krämer NC (2014) How design characteristics of robots determine evaluation and uncanny valley related responses. Comput Human Behav 36:422–439. https://doi.org/10.1016/j.chb.2014.03.066

Sandoval EB, Brandstetter J, Obaid M, Bartneck C (2016) Reciprocity in human-robot interaction: a quantitative approach through the prisoner’s dilemma and the Ultimatum Game. Int J Soc Robot 8(2):303–317. https://doi.org/10.1007/s12369-015-0323-x

Scassellati B (2003) Investigating models of social development using a humanoid robot. In: Proceedings of the international joint conference on neural networks. IEEE, pp 2704–2709. https://doi.org/10.1109/ijcnn.2003.1223995

Scassellati B (2005) Using social robots to study abnormal social development. In: Berthouze L, Kaplan F, Kozima H, Yano H, Konczak J, Metta G, Nadel J, Sandini G, Stojanov G, Balkenius C (eds) Proceedings of the fifth international workshop on epigenetic robotics: modeling cognitive development in robotic systems. LUCS, Lund, pp 11–14

Sloane S, Baillargeon R, Premack D (2012) Do infants have a sense of fairness? Psychol Sci 23(2):196–204. https://doi.org/10.1177/0956797611422072

Srinivasan SM, Lynch KA, Bubela DJ, Gifford TD, Bhat AN (2013) Effect of interactions between a child and a robot on the imitation and praxis performance of typically developing children and a child with autism: a preliminary study. Percept Mot Skills 116(3):885–904. https://doi.org/10.2466/15.10.PMS.116.3.885-904

Takagishi H, Kameshima S, Schug J, Koizumi M, Yamagishi T (2010) Theory of mind enhances preference for fairness. J Exp Child Psychol 105(1):130–137. https://doi.org/10.1016/j.jecp.2009.09.005

Terada K, Takeuchi C (2017) Emotional expression in simple line drawings of a robot’s face leads to higher offers in the Ultimatum Game. Front Psychol 8:724. https://doi.org/10.3389/fpsyg.2017.00724

Torta E, van Dijk E, Ruijten PAM, Cuijpers RH (2013) The Ultimatum Game as measurement tool for anthropomorphism in human–robot interaction. In: Herrmann G, Pearson MJ, Lenz A, Bremner P, Spiers A, Leonards U (eds) Social robotics. ICSR 2013. Lecture notes in computer science, vol 8239. Springer, Cham. https://doi.org/10.1007/978-3-319-02675-6_21

Turkle S (2004) Whither psychoanalysis in computer culture? Psychoanal Psychol 21(1):16–30. https://doi.org/10.1037/0736-9735.21.1.16

Villani D, Massaro D, Castelli I, Marchetti A (2013) Where are you watching? Patterns of visual exploration in the Ultimatum Game. Open Psychol J 6(1):76–80. https://doi.org/10.2174/1874350101306010076

Von Neumann J, Morgenstern O (1945) Theory of games and economic behavior. Princeton University Press, Princeton

Wardle J, Robb K, Johnson F (2002) Assessing socioeconomic status in adolescents: the validity of a home affluence scale. J Epidemiol Community Health 56(8):595–599. https://doi.org/10.1136/jech.56.8.595

Wellman HM, Cross D, Watson J (2001) Meta-analysis of theory-of-mind development: the truth about false belief. Child Dev 72(3):655–684. https://doi.org/10.1111/1467-8624.00304

Westlund JMK, Dickens L, Jeong S, Harris PL, DeSteno D, Breazeal CL (2017) Children use non-verbal cues to learn new words from robots as well as people. Int J Child Comput Interact 13:1–9. https://doi.org/10.1016/j.ijcci.2017.04.001

White S, Hill E, Happé F, Frith U (2009) Revisiting the strange stories: revealing mentalizing impairments in autism. Child Dev 80(4):1097–1117. https://doi.org/10.1111/j.1467-8624.2009.01319.x

Wiese E, Metta G, Wykowska A (2017) Robots as intentional agents: using neuroscientific methods to make robots appear more social. Front Psychol 8:1663. https://doi.org/10.3389/fpsyg.2017.01663

Wimmer H, Perner J (1983) Beliefs about beliefs: representation and constraining function of wrong beliefs in young children’s understanding of deception. Cognition 13(1):103–128. https://doi.org/10.1016/0010-0277(83)90004-5

Wykowska A, Chaminade T, Cheng G (2016) Embodied artificial agents for understanding human social cognition. Phil Trans R Soc B 371(1693):20150375. https://doi.org/10.1098/rstb.2015.0375

Funding

Università Cattolica del Sacro Cuore contributed to the publication of this research.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Supplementary material 2 (M4V 30207 kb)

Supplementary material 3 (MP4 24173 kb)

Supplementary material 4 (MP4 32775 kb)

Supplementary material 5 (MP4 34190 kb)

About this article

Cite this article

Di Dio, C., Manzi, F., Itakura, S. et al. It Does Not Matter Who You Are: Fairness in Pre-schoolers Interacting with Human and Robotic Partners. Int J of Soc Robotics 12, 1045–1059 (2020). https://doi.org/10.1007/s12369-019-00528-9

DOI: https://doi.org/10.1007/s12369-019-00528-9