Abstract

Decentralized Markov decision processes (DEC-MDPs) provide powerful modeling tools for cooperative multi-agent decision making under uncertainty. In this paper, we tackle particular subclasses of decision-theoretic models that operate under time pressure with uncertain action durations. In particular, we extend a solution method called opportunity cost decentralized Markov decision process (OC-DEC-MDP) to handle more complex precedence constraints, where the actions of each agent are represented by a partial plan. Because local partial plans are linked by precedence constraints between agents, mis-coordination situations may occur. To address them, we introduce communication decisions between agents. Since offline planning for communication increases the state space size, we aim to restrict the use of communication. To this end, we propose to exploit problem structure in order to limit communication decisions. Moreover, we study two separate cases regarding the reliability of communication. In the first case, we assume that communication is always successful (i.e., all messages are received). In the second case, we enhance our policy computation algorithm to deal with possibly missed messages. Experimental results show that, even when communication is costly, it improves the degree of coordination between agents and increases team performance with respect to the constraints.

Notes

In the explanation of how the transition function is computed, we consider only the case without a message.

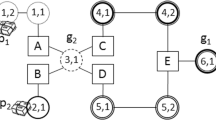

In Fig. 2, we show only some of the success states (without messages) for simplicity.

The case where this agent is both a constrained agent and a predecessor agent can also be taken into account in our model. We distinguish between the two roles only to simplify the explanation.

Cite this article

Abdelmoumène, H., Belleili, H. An extended version of opportunity cost algorithm for communication decisions. Evolving Systems 7, 41–60 (2016). https://doi.org/10.1007/s12530-015-9138-0

DOI: https://doi.org/10.1007/s12530-015-9138-0