Abstract

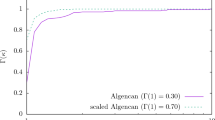

This paper discusses the use of a stopping criterion based on scaling the Karush–Kuhn–Tucker (KKT) conditions by the norm of the approximate Lagrange multiplier in the ALGENCAN implementation of a safeguarded augmented Lagrangian method. Such a stopping criterion is already used in several nonlinear programming solvers, but it has not yet been considered in ALGENCAN because of its firm commitment to finding a true KKT point even when the multiplier set is not bounded. In contrast with this view, we present a strong global convergence theory under the quasi-normality constraint qualification, which allows for unbounded multiplier sets, accompanied by extensive numerical tests showing that the scaled stopping criterion detects convergence sooner. In particular, with scaling, ALGENCAN is able to recover a solution in some difficult problems where the original implementation fails, while the behavior of the algorithm on the easier instances is maintained. Furthermore, we show that, in some cases, considerable computational effort is saved, which demonstrates the practical usefulness of the proposed strategy.
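To make the idea of scaling by the multiplier norm concrete, here is a minimal sketch in Python. It is not the ALGENCAN code, and the exact test used in the paper is not reproduced in this abstract. The sketch assumes equality constraints only, the infinity norm, and a scale factor of `max(1, ||lam||_inf)` applied to the tolerance on the Lagrangian gradient. All function and variable names are hypothetical.

```python
def lagrangian_gradient(grad_f, jac_h, lam):
    """Return grad f(x) + J_h(x)^T lam as a list (equality constraints only)."""
    return [
        g + sum(row[j] * l for row, l in zip(jac_h, lam))
        for j, g in enumerate(grad_f)
    ]


def inf_norm(v):
    """Infinity norm of a list of floats (0.0 for an empty list)."""
    return max((abs(t) for t in v), default=0.0)


def gradient_test(grad_f, jac_h, lam, tol, scaled):
    """Check the Lagrangian-gradient part of an approximate KKT test.

    Assumed form (illustrative only):
      unscaled: ||grad L||_inf <= tol
      scaled:   ||grad L||_inf <= tol * max(1, ||lam||_inf)
    """
    residual = inf_norm(lagrangian_gradient(grad_f, jac_h, lam))
    scale = max(1.0, inf_norm(lam)) if scaled else 1.0
    return residual <= tol * scale


# Made-up data: one constraint whose approximate multiplier is very large.
grad_f = [2.0, 1e-3]
jac_h = [[-2e-6, 0.0]]   # one row per constraint
lam = [1e6]
tol = 1e-8

passes_unscaled = gradient_test(grad_f, jac_h, lam, tol, scaled=False)
passes_scaled = gradient_test(grad_f, jac_h, lam, tol, scaled=True)
```

With this made-up data the residual is about `1e-3`, so the unscaled test rejects the point while the scaled test accepts it, because the tolerance is relaxed in proportion to the multiplier norm. Feasibility and complementarity checks are left out of the sketch.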

Data Availability Statement

In the tests we use “the constrained nonlinear programming problems from CUTEst (available at github.com/ralna/CUTEst), including all from the Netlib (ftp://ftp.numerical.rl.ac.uk/pub/cutest/netlib) and the Maros & Meszaros (bitbucket.org/optrove/maros-meszaros) libraries. Mathematical programs with complementarity constraints from MacMPEC (available at wiki.mcs.anl.gov/leyffer/index.php/MacMPEC)”. The quotation appears in the manuscript, p. 12, 2nd paragraph.

References

Andreani, R., Birgin, E.G., Martínez, J.M., Schuverdt, M.L.: On augmented Lagrangian methods with general lower-level constraints. SIAM J. Optim. 18(4), 1286–1309 (2007). https://doi.org/10.1137/060654797

Andreani, R., Fazzio, N., Schuverdt, M., Secchin, L.: A sequential optimality condition related to the quasi-normality constraint qualification and its algorithmic consequences. SIAM J. Optim. 29(1), 743–766 (2019). https://doi.org/10.1137/17M1147330

Andreani, R., Haeser, G., Martínez, J.M.: On sequential optimality conditions for smooth constrained optimization. Optimization 60(5), 627–641 (2011). https://doi.org/10.1080/02331930903578700

Andreani, R., Haeser, G., Mito, L.M., Ramos, A., Secchin, L.D.: On the best achievable quality of limit points of augmented Lagrangian schemes. Tech. rep., Optimization Online (2020). http://www.optimization-online.org/DB_HTML/2020/07/7929.html

Andreani, R., Haeser, G., Schuverdt, M.L., Silva, P.J.S.: A relaxed constant positive linear dependence constraint qualification and applications. Math. Program. 135(1), 255–273 (2012). https://doi.org/10.1007/s10107-011-0456-0

Andreani, R., Martínez, J.M., Santos, L.T.: Newton’s method may fail to recognize proximity to optimal points in constrained optimization. Math. Program. 160(1), 547–555 (2016). https://doi.org/10.1007/s10107-016-0994-6

Andreani, R., Martínez, J.M., Schuverdt, M.L.: On the relation between constant positive linear dependence condition and quasinormality constraint qualification. J. Optim. Theory Appl. 125(2), 473–483 (2005). https://doi.org/10.1007/s10957-004-1861-9

Andreani, R., Martínez, J.M., Ramos, A., Silva, P.J.S.: A cone-continuity constraint qualification and algorithmic consequences. SIAM J. Optim. 26(1), 96–110 (2016). https://doi.org/10.1137/15M1008488

Andreani, R., Martínez, J.M., Ramos, A., Silva, P.J.S.: Strict constraint qualifications and sequential optimality conditions for constrained optimization. Math. Oper. Res. 43(3), 693–717 (2018). https://doi.org/10.1287/moor.2017.0879

Andreani, R., Martínez, J.M., Svaiter, B.F.: A new sequential optimality condition for constrained optimization and algorithmic consequences. SIAM J. Optim. 20(6), 3533–3554 (2010). https://doi.org/10.1137/090777189

Andreani, R., Secchin, L.D., Silva, P.J.S.: Convergence properties of a second order augmented Lagrangian method for mathematical programs with complementarity constraints. SIAM J. Optim. 28(3), 2574–2600 (2018). https://doi.org/10.1137/17M1125698

Bartholomew-Biggs, M.: Nonlinear Optimization with Financial Applications. Springer US (2005). https://doi.org/10.1007/b102601

Bartholomew-Biggs, M.: Nonlinear Optimization with Engineering Applications, Springer Optimization and Its Applications, vol. 19. Springer US (2008). https://doi.org/10.1007/978-0-387-78723-7

Bertsekas, D.P.: Nonlinear Programming, 2nd edn. Athena Scientific (1999)

Bertsekas, D.P.: Convex Analysis and Optimization. Athena Scientific (2003)

Bertsekas, D.P., Ozdaglar, A.E.: Pseudonormality and a Lagrange multiplier theory for constrained optimization. J. Optim. Theory Appl. 114(2), 287–343 (2002). https://doi.org/10.1023/A:1016083601322

Birgin, E.G., Martínez, J.M.: Large-scale active-set box-constrained optimization method with spectral projected gradients. Comput. Optim. Appl. 23(1), 101–125 (2002). https://doi.org/10.1023/A:1019928808826

Birgin, E.G., Martínez, J.M.: Improving ultimate convergence of an augmented Lagrangian method. Optim. Methods Softw. 23(2), 177–195 (2008). https://doi.org/10.1080/10556780701577730

Birgin, E.G., Martínez, J.M.: Practical Augmented Lagrangian Methods for Constrained Optimization. Society for Industrial and Applied Mathematics, Philadelphia, PA (2014). https://doi.org/10.1137/1.9781611973365

Birgin, E.G., Martínez, J.M.: Complexity and performance of an augmented Lagrangian algorithm. Optim. Methods Softw., pp. 1–36 (2020). https://doi.org/10.1080/10556788.2020.1746962

Bueno, L., Haeser, G., Rojas, F.: Optimality conditions and constraint qualifications for generalized Nash equilibrium problems and their practical implications. SIAM J. Optim. 29(1), 31–54 (2019). https://doi.org/10.1137/17M1162524

Büskens, C., Wassel, D.: The ESA NLP Solver WORHP, pp. 85–110. Springer New York, New York, NY (2013). https://doi.org/10.1007/978-1-4614-4469-5_4

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002). https://doi.org/10.1007/s101070100263

Fletcher, R., Leyffer, S.: User manual for filterSQP. Tech. Rep. NA/181, University of Dundee (1998). https://wiki.mcs.anl.gov/leyffer/images/5/58/SQP_manual.pdf

Gill, P.E., Kungurtsev, V., Robinson, D.P.: A stabilized SQP method: global convergence. IMA J. Numer. Anal. 37(1), 407–443 (2016). https://doi.org/10.1093/imanum/drw004

Gondzio, J.: Interior point methods 25 years later. Eur. J. Oper. Res. 218(3), 587–601 (2012). https://doi.org/10.1016/j.ejor.2011.09.017

Guignard, M.: Generalized Kuhn–Tucker conditions for mathematical programming problems in a Banach space. SIAM J. Control 7(2), 232–241 (1969). https://doi.org/10.1137/0307016

Janin, R.: Directional derivative of the marginal function in nonlinear programming. In: Fiacco, A. V. (ed.) Sensitivity, Stability and Parametric Analysis, Mathematical Programming Studies, vol. 21, pp. 110–126. Springer Berlin (1984). https://doi.org/10.1007/BFb0121214

Mangasarian, O., Fromovitz, S.: The Fritz John necessary optimality conditions in the presence of equality and inequality constraints. J. Math. Anal. Appl. 17(1), 37–47 (1967). https://doi.org/10.1016/0022-247X(67)90163-1

Murtagh, B.A., Saunders, M.A.: MINOS 5.51 user’s guide. Tech. Rep. SOL 83-20R, Stanford University (2003). https://web.stanford.edu/group/SOL/guides/minos551.pdf

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer, Berlin (2006)

Ozdaglar, A.E., Bertsekas, D.P.: The relation between pseudonormality and quasiregularity in constrained optimization. Optim. Methods Softw. 19(5), 493–506 (2004). https://doi.org/10.1080/10556780410001709420

Qi, L., Wei, Z.: On the constant positive linear dependence condition and its application to SQP methods. SIAM J. Optim. 10(4), 963–981 (2000). https://doi.org/10.1137/S1052623497326629

Secchin, L.D.: Scaled-algencan v1.0.0 (2021). https://doi.org/10.5281/zenodo.5138345

Sra, S., Nowozin, S., Wright, S.J. (eds.): Optimization for Machine Learning. MIT Press, Neural Information Processing Series (2012)

Terlaky, T., Anjos, M.F., Ahmed, S.: Advances and Trends in Optimization with Engineering Applications. SIAM (2017)

Wächter, A., Biegler, L.T.: On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Math. Program. 106(1), 25–57 (2006). https://doi.org/10.1007/s10107-004-0559-y

Ye, J.J., Zhang, J.: Enhanced Karush–Kuhn–Tucker condition and weaker constraint qualifications. Math. Program. 139(1), 353–381 (2013). https://doi.org/10.1007/s10107-013-0667-7

Ethics declarations

Funding

This work has been partially supported by CEPID-CeMEAI (FAPESP 2013/07375-0), FAPES (Grant 116/2019), FAPESP (Grants 2017/18308-2 and 2018/24293-0), CNPq (Grants 301888/2017-5, 304301/2019-1 and 302915/2016-8) and PRONEX - CNPq/FAPERJ (Grant E-26/010.001247/2016).

Conflict of interest

The authors declare that they have no conflict of interest.

Availability of data and materials

All data analyzed during this study are publicly available. URLs are included in this published article.

Code availability

ALGENCAN 3.1.1 and the proposed scaled version [34] are both available under the GNU General Public License. We emphasize that the HSL packages used in this study are available for academic use. URLs are included in this published article.

About this article

Cite this article

Andreani, R., Haeser, G., Schuverdt, M.L. et al. On scaled stopping criteria for a safeguarded augmented Lagrangian method with theoretical guarantees. Math. Prog. Comp. 14, 121–146 (2022). https://doi.org/10.1007/s12532-021-00207-9

DOI: https://doi.org/10.1007/s12532-021-00207-9