Abstract

Over the last decade, we have encountered various complex optimization problems in the engineering and research domains. Some of them are so hard that we had to turn to heuristic algorithms to obtain approximate optimal solutions. In this paper, we present a novel metaheuristic algorithm called mussels wandering optimization (MWO). MWO is inspired by mussels’ leisurely locomotion behavior when they form bed patterns in their habitat. It is an ecologically inspired optimization algorithm that mathematically formulates a landscape-level evolutionary mechanism of the distribution pattern of mussels through a stochastic decision and Lévy walk. We obtain the optimal shape parameter μ of the movement strategy and demonstrate its convergence performance via eight benchmark functions. The MWO algorithm has competitive performance compared with four existing metaheuristics, providing a new approach for solving complex optimization problems.

Similar content being viewed by others

Introduction

One of the most fundamental problems worldwide is the search for an optimal state. Optimization is about finding the best possible solutions for given problems [1]. Formally, the goal of optimization is to find the best possible elements x * from a feasible set X according to a set of criteria \(F=\{f_1,f_2,\ldots,f_n\},\) the so-called objective functions. For a single-objective minimization problem, the goal is to find the minimum solution \(x^*\in X\) where f(x *) ≤ f(x) for all x.

Over the past few decades, many optimization algorithms have been proposed to solve complex optimization problems. These algorithms are usually divided into deterministic algorithms, probabilistic algorithms, and metaheuristics [2]. Deterministic algorithms are mainly used if a clear relation between the characteristics of the possible solutions and their utility for a given problem exists, i.e., branch and bound, and mathematical programming methods. However, it is always hard to solve a problem analytically in most cases, because the relation between a solution candidate and its “fitness” is always not obvious or too complicated, or the dimensionality of the search space is very high for many real-world problems. Probabilistic algorithms model a problem or search a problem space using a probabilistic model of candidate solutions [3]. Monte Carlo-based approaches belong to the family of probabilistic algorithms, which trade off guaranteed correctness of the solution for shorter runtime. Metaheuristics are designed to tackle complex optimization problems where other optimization methods have failed to be either effective or efficient. These methods have come to be recognized as some of the most practical approaches for solving many complex problems, particularly for the many real-world problems that are combinatorial in nature.

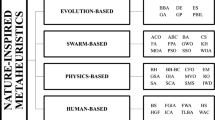

In particular, nature-inspired metaheuristics have attracted increasing attention, becoming powerful methods to solve modern global optimization problems [4]. Among these, the most successful are evolutionary algorithms (EAs), which draw their inspiration from evolution by natural selection [5]. There are several different types of EAs, including genetic algorithms [6], genetic programming [7], evolutionary programming [8], the estimation of distribution algorithm [9], and differential evolution [10]. Later, swarm intelligence offered more effective approaches for complex optimization problems, being inspired from collective animal behavior [11], i.e., particle swarm optimization [12], ant colony optimization [13], artificial bee colony [14], group search optimizer [15], and bacterial foraging optimization [16]. Such successful algorithms also include simulated annealing [17], artificial neural network [18], artificial immune algorithm [19], chemical reaction optimization [20], memetic algorithm [21], and harmony algorithm [22]. More recently, a number of ecological optimization algorithms have been proposed and successfully applied, i.e., biogeography-based optimization [23], cuckoo search [24], shuffled frog-leaping algorithm [25], and the self-organizing migrating algorithm [26].

All these metaheuristics have unique computational models, mimicking specific behaviors for the performance of diversity. However, most of them satisfy the unifying principle of being population based with randomization being involved in the solution process. Notably, these forms of population behavior patterns exhibit both deterministic and stochastic characteristics in order to guarantee some inheritance as well as to promote healthy evolution. This stochastic feature plays a significant role in such algorithms [27]. However, many existing algorithms just borrow some random parameters to maintain diversity and promote its evolution. More comprehensive stochastic behavior-oriented optimizers are seldom reported. It will be exciting and challenging to deeply understand the integral stochastic behaviors or mechanisms of an ecological system in order to develop new, efficient stochastic optimization models for complex problems.

Recently, Daniel [28] reported that mussels in intertidal beds use random movement behaviors to optimize their formation of patches to balance feeding and mortality. This species of mussel, despite exhibiting leisurely locomotion, exhibits economy of movement and savvy behavioral strategies that approach a theoretical ideal as well as, or better than, more visibly athletic species. Inspired by this important discovery, this paper formulates a metaheuristic named mussels wandering optimization (MWO) for the first time, using the mussels’ stochastic walking strategy. In particular, mussels use a Lévy walk during the formation of a spatially patterned bed. Mussels move according to others’ behaviors and environmental complexity. The interaction between individuals and the complexity of the habitat shapes the mussels’ movement in ecological systems.

In the rest of the paper, we first introduce the bed formatting behavior of natural mussels in section “Bed Formatting Behavior of Mussels for Optimization.” Then, the mussels wandering optimization (MWO) algorithm is formulated in section “Mussels Wandering Optimization.” We demonstrate its performance via a set of standard benchmarks and compare it with some existing metaheuristic algorithms in section “Simulation and Results.” The paper is concluded in section “Conclusions.”

Bed Formatting Behavior of Mussels for Optimization

Nature is full of biological landscapes that seem static to the casual observer but that actually contain highly dynamic spatial features. These features are shaped dramatically, if slowly, by subtle interplays between large-scale population-level patterns and small-scale movements of individuals [28]. Mussel is a species of mollusk, unusually thriving on rocky-shore and soft-bottom habitats, and in the intake mouths of coastal power stations [29]. Mussels depend on not only physical processes, such as water flow rate, temperature, salinity, and desiccation, but also biological processes, such as amount of food resources for survival, growth, and reproduction [30].

Mussels form extensive beds of high or varying density at complex soft or hard surfaces so as to provide themselves with habitat structure. Mussel bed patterns of distribution and abundance result from a combination of constraints that occur from regional processes at the macrohabitat scale [24] to local interactions at the microhabitat scale [25] over periods of various decades, in the range of their lifespan [30, 31]. When mussels move, they usually use a random strategy with respect to local mussel density, predators, and habitat quality parameters such as the amount of biomass and the water flow rate [32].

In particular, pattern formation in mussel beds depends on two opposing biological mechanisms: cooperation and competition. Mussels can aggregate not only to provide physical protection to the underlying bed, thus enabling the bed to withstand erosion by high tidal currents and wave action, but also to resist predators and high wave stress. Within a cluster, mussels move less when surrounded by conspecifics, possibly to minimize predation, wave stress or dislodgement losses. By moving into cooperative aggregations, mussels increase their local density. However, mussel behavior prevents the formation of such large clusters, and possibly mussels decide to move when the cluster size becomes too large, because of competition for algae. This interaction between local cooperation and long-range competition results in simultaneous risk reduction and minimization of competition for algae [32].

Experiments show that the probability of a mussel moving decreases with the short-range density and increases with long-range density [28]. When a mussel decides to move, it adopts a Lévy walk during the formation of spatially patterned beds, and models reveal that this Lévy movement accelerates bed pattern formation. The emergent patterning in mussel beds, in turn, improves individual fitness. Moreover, much research has demonstrated that, in fish, insect, or bird foraging, and human travel, the step length is also a stochastic process which approaches a Lévy walk [33–35].

The step lengths for mussel are not constant but rather are chosen from a probability distribution with a power-law tail [34], which is characterized by rare but extremely long step lengths, and the same sites are revisited much less frequently than in a normal diffusion process, represented as [32],

where C μ is a normalization constant. The shape parameter 1 < μ < 3 is known as the Lévy exponent or scaling exponent and determines the movement strategy. When μ is close to 1, the resulting movement strategy resembles ballistic, straight-line motion, as the probability to move a very large distance is equal to the chance of making a small displacement.

Behaviorally, power-law distributions require cognitive memory of the duration of the current move. It seems likely that organisms that perform Lévy walks have specific adaptations for doing so. Ecologically, Lévy walks are important because they yield a “scale-free” blend of long and short movements that can be more efficient in locating and exploiting resources that are patchy at multiple scales. Notably, in mussels, this efficiency extends beyond exploitation to the fundamental mechanisms underlying the formation of favorably patchy mussel beds.

Notably, this bed pattern formatting behavior with a random walk is just an optimization process to search for the most suitable place for each mussel in the habitat. Therefore, it is promising to design an optimization algorithm by modeling the ecological population behavior of mussels for complex global optimization problems.

Mussels Wandering Optimization

A population of mussels includes N individuals in a certain spatial region of marine “bed” called the habitat. The habitat is mapped to a d-dimensional space S d of the problem to be optimized. The objective function value f(s) at each point \(s \in S^d\) represents the nutrition provided by the habitat. Each mussel has a position \({\it x}_i := (x_{i1},\ldots,x_{id}), i \in {\bf N}_N=\{1,2,\ldots,N\}\) in S d, forming a specified spatial bed pattern, accordingly.

For simplicity in describing MWO, the following idealized rules are given: (1) Mussel size is negligible; (2) When mussels are wandering for food and avoiding negative circumstance factors, there is no breeding and mortality; and (3) Mussels can walk across any other’s body.

The spatial distance D ij between m i and m j in S d can be calculated by

In MWO, all mussels are uniformly located in the habitat at the beginning. At each iteration, a mussel moves or remains still with a certain probability in the search space. The moving probability of a mussel depends on its short-range density and long-range density [28].

For mussel m i , given a short-range reference r s (the radius of the inner circle in 2-D space shown in Fig. 2), the short-range density ξ si is defined as the ratio of the number of mussels that are located away from m i but within distance r s to the population size N in unit distance, i.e.,

where \(\sharp(A<b)\) is used to compute the count in set A satisfying \(a < b, a\in A; D_i\) is the distance matrix from m i to other mussels in the population.

Given the long-range reference r l (the radius of the outer circle in Fig. 2), the long-range density ξ li of mussel m i is defined as

The short-range reference r s and long-range reference r l change through the iterations. In iteration t, given the distance data, the dynamic short-range reference r s (t) and long-range reference r l (t) are defined and calculated by

where α and β are positive constant coefficients with \(\alpha<\beta; \max_{i,j\in{\bf N}_N}\{D_{ij}(t)\}\) is the maximum distance among all mussels at iteration t, and δ is a scale factor of space which depends on the problem to be solved.

In the MWO algorithm, mussels are likely to move when surrounded by species at high long-range density, but likely to stay at their home position for high short-range density. Therefore, for mussel m i , given short-range density ξ si and long-range density ξ li , its moving probability is calculated by

where a, b, and c are positive constant coefficients and z is a value randomly sampled from the uniform distribution [0, 1]. If P i = 0, mussel m i stays still; and if P i = 1, mussel m i moves.

Once mussel m i decides to move, the step length is subject to a Lévy distribution. Its new position x′ i is calculated by

where the step length ℓ i := γ [1 − rand()]−1/(μ−1), 1.0 < μ < 3.0, and γ denotes the walk scale factor, which is a positive real number; \(\Updelta_g:=x_g-x_i\) is defined as the distance from m i to the best position x g found by all mussels with the optimum nutrition value.

The main steps of the MWO algorithm are presented as follows:

Step 1: Initialize a population of mussels and the algorithm parameters

At the beginning, N mussels are generated and uniformly placed in space S d. Set the maximum generation G, coefficients of range references α and β, space scale factor δ, moving coefficients a, b, and c, and walk scale factor γ.

Evaluate the initial fitness of mussel m i by computing the objective function f(x i ). Find the best mussel and record its position as x g .

Step 2: Calculate the short-range density ξ s and long-range density ξ l for each mussel

Using all mussels’ coordinate positions, compute the distances \( D_{ij}, i, j\in {\bf N}_N\) between any two mussels by using Eq. (2), and then compute the short-range reference r s and long-range reference r l by (5). For all mussels, calculate their ξ si and \(\xi_{li}, i\in {\bf N}_N\) by using (3) and (4), respectively.

Step 3: Determine the movement strategy for each mussel

Calculate the moving probability P i of mussel m i according to the short-range density ξ si and long-range density ξ li by Eq. (6); If P i = 1, calculate its step length by ℓ i = γ[1 − rand()]−1/(μ−1).

Step 4: Update the position for all mussels

Compute the new position coordinate x′ i of mussel m i in S d by using Eq. (7).

Step 5: Evaluate the fitness of mussel m i after position updating. Calculate the objective function f(x′) for the new positions. Rank the solutions so as to find the global best mussel m g , and update the best record [best position x g and optimal fitness f *(x g )].

Step 6: Examine if the termination criteria is satisfied? If it is true, stop the algorithm and output the optimized results; otherwise, go to step 2 to start the next iteration.

The pseudocode for the MWO algorithm is listed as follows.

Simulation and Results

In this section, we test the convergence performance of MWO and compare it with some existing population-based metaheuristic algorithms.

Benchmarks

To evaluate the performance of the MWO algorithm for some chosen problems, we employ a set of eight standard benchmark functions, which are given in Table 1.

These benchmarks have been widely used in the literature for comparison of optimization methods. Some are multimodal, which means that they have multiple local minima. Some are nonseparable, which means that they cannot be written as a sum of functions of individual variables. Some are regular, which means they are analytical (differentiable) at each point of their domain [23, 36]. Each of the functions has a global minimum f(x *) = 0.

Experimental Setting

In this work, we investigate the effect of the Lévy walk in the MWO algorithm. Different values of the shape parameter μ of the Lévy distribution are tested, i.e., μ = 1.5, μ = 1.8, μ = 1.9, μ = 2.0, μ = 2.1, μ = 2.2, and μ = 2.5.

To explore the benefits of MWO, we compare its performance on various benchmark functions with four well-known metaheuristic algorithm: (1) genetic algorithm (GA), (2) biogeography-based optimization (BBO), (3) particle swarm optimization (PSO), and (4) group search optimizer (GSO). We executed GA, BBO, PSO, and GSO algorithms with their standard versions by adopting the empirical parameters setting.

The parameter setting of the MWO algorithm is summarized as follows. The population size N = 50 is used in the paper. The initial population is generated uniformly at random in the search space S d. The short-range reference coefficient α = 1.1, the long-range reference coefficient β = 7.5, the moving coefficients a = 0.63, b = 1.26, and c = 1.05, the walk scale factor γ = 0.1, and the scale factor of space δ is set as δ = 25 for f 1 and f 2, δ = 0.3 for f 3, δ = 1.2 for f 4, δ = 150 for f 5, δ = 10 for f 6, δ = 2.5 for f 7, and δ = 15 for f 8.

In this work, we ran 50 Monte Carlo simulations for each case with different μ values on each benchmark to obtain representative performance. The simulations were executed on 20-D and 30-D functions, respectively. We carried out the simulations with two different termination criteria: (1) the maximum number of iterations G = 1,000 is reached, and (2) the algorithm converges to a predefined precision called the error goal \(\epsilon,\) that is \(f(x^*)\leq\epsilon,\) e.g., \(\epsilon= 10^{-10}\) for \(f_1, \epsilon=15\) for \(f_2, \epsilon=10^{-6}\) for \(f_3, \epsilon=10\) for \(f_4, \epsilon= 10^{-3}\) for f 5–f 7, and \(\epsilon=0.1\) for f 8 defined in this work.

The experiments were carried out on a PC with a 2.40-GHz Intel processor and 4.0 GB RAM. All programs were written and executed in MATLAB 7.1. The operating system was Microsoft Windows 7.

Results and Discussion

The experiments included an average test on all cases for each benchmark function. Tables 2 and 3 list the best and mean values of the 20-D and 30-D functions obtained by MWO in 1,000 iterations, respectively. Numbers in parenthesis indicate the ranking in seven simulations of MWO with different settings of μ.

Table 2 shows that the best and mean function values are all not good when μ is set as a larger (μ = 2.5) or smaller value (μ = 1.5) in its valid range. When μ = 2.0, MWO performs well for all eight functions, e.g., the best function results rank first or second for f 1, f 3, and f 5–f 8, and third for f 2 and f 4; the mean function results rank first or second for all benchmarks except f 4. For f 2 and f 4, the MWO with μ = 1.9 performs the best, but its minimum function result ranks second after μ = 1.8 for f 4. For f 3, the best result obtained with μ = 2.1 ranks first and its mean function result is inferior to that of μ = 2.0. Generally, μ = 2.0 is an optimal setting for MWO on average, and [1.9, 2.1] presents a good range of μ of MWO for solving 20-D functions. From Table 3, we can obtain a similar conclusion for 30-D functions. μ = 2.0 is the optimal setting on average, and a recommended range of μ is [1.9, 2.0].

Figures 2 and 3 show typical optimal dynamics of MWO with different μ values for f 1–f 8. It is obvious that MWO with μ = 2.0 converges fastest and achieves the best solution for most functions, except f 4 and f 5. MWO with μ = 1.8 converges fast for f 4. For f 5, MWO with μ = 1.9 performs well, but all algorithms encounter a saturation state.

Furthermore, we present the evolving fitness dynamics of the 20-D benchmarks for MWO with the optimal setting of μ = 2.0 in Fig. 4. In Fig. 4, each subfigure represents a typical evolving dynamics of the global minimal value (red line) and the average value of all mussels (black dotted line), in logarithmic coordinates. These curves of minimum values are in accordance with the results in Table 2. From these figures, we find that MWO keeps converging to its optimum solution for the benchmarks except f 4 and f 5. We also find that, when the best global value decreases in the iterative process, the average fitness appears to oscillate for all benchmarks, especially f 2, f 3, f 5, and f 8. These results prove that the mussels preferred Lévy walk can maintain the diversity of the population, because the Lévy walk adopts long steps with low probabilities and revisits the same sites far less often, which helps MWO to escape from local optima and find optimal results more efficiently.

We compared the optimal results of MWO with μ = 2.0 with GA, BBO, PSO, and GSO for the 20-D and 30-D functions. The best and mean results are presented in Tables 4 and 5. Table 4 shows that MWO generates significantly better results than GA and GSO on all the functions with d = 20. From the comparisons between MWO and BBO, we can see that MWO has obviously better performance than BBO on f 1, f 3, and f 5–f 8. However, MWO yields statistically inferior results on f 4 compared with BBO. From the comparisons between MWO and PSO, we can see that MWO has better average performance than the PSO algorithm on all the functions except f 1. For f 7, PSO presents better optimal results than MWO does.

From Table 5, we see that MWO performs best on average for the majority of the 30-D benchmarks. BBO exceeds MWO on f 4. PSO is the second most effective, followed by BBO. PSO runs in first place in terms of the best value for functions f 1, f 5, and f 6, but its mean function values are no match for MWO.

Table 6 presents the average CPU time in seconds for MWO (μ = 2.0) and the other four algorithms. From this table, we see that the average CPU time required by BBO is less than those of the other algorithms for all eight functions except f 1. Among the other four algorithms, the average CPU time of GSO is the longest; GA, MWO, and PSO have similar CPU time cost, or rather MWO requires slightly more time than GA and PSO.

In this section, we also describe the test of the convergence performance of MWO based on a predefined precision called the error goal \(\epsilon,\) compared with BBO and PSO, which are the best two among the four metaheuristics. The error goal is the criterion selected to stop the algorithm, which means that the termination condition is \(f(x^*)\leq\epsilon.\) Table 7 presents the simulation results of BBO, PSO, and MWO for 20-D functions, in which a successful run is defined as the algorithm converging to the error goal \(\epsilon\) in 10,000 iterations. The average convergence time only takes successful runs into account.

From the results in Table 7, we see that MWO needs fewer runs in terms of number of iterations and CPU time in seconds on average than PSO to reach the precision \(\epsilon\) defined in the table, for most functions except f 1. It performs better than BBO except for f 4. For 50 Monte Carlo simulations, the average success rate of MWO (100 % for almost all the functions) is significantly higher than that of PSO for f 2, f 4, and f 5–f 8. It also exceeds BBO for f 1, f 2, and f 5–f 7. Therefore, MWO has competitive performance on the whole to other metaheuristics in terms of accuracy and convergence speed.

Conclusions

This paper presents a novel metaheuristic algorithm—mussels wandering optimization (MWO)—for the first time, through mathematically modeling mussels’ leisurely locomotion behavior when they format their bed pattern in a habitat. MWO emphasizes competition and cooperation among mussels via stochastic decisions based on the mussel density in the habitat, and random walks. In the computational model, besides the Lévy distribution of step length adopted by each mussel, its moving decision behavior is also a stochastic variable.

MWO is conceptually simple and easy to implement. The performance of MWO is demonstrated via a set of eight benchmarks and compared with four well-known metaheuristics, i.e., GA, BBO, PSO, and GSO. The primary conclusion is that MWO beats the four algorithms on average. MWO provides a new approach for solving a variety of large-scale optimization problems, which makes it particularly attractive for real-world applications.

One of the most significant merits of MWO is that it provides an open framework to tackle hard optimization problems, by utilizing research in spatial bed formatting, landscape-level pattern evolution, and the leisurely locomotion behavior of mussels in their habitat. Our future planned work includes: (1) presenting the mathematical models of distribution patterns of mussels based on some typical random walks, (2) proving the convergence of the proposed models for complex optimization problems with and without noise, and (3) exploring its promising real-world applications.

References

Weise T. Global optimization algorithms theory and application, Germany. 2009. it-weise.de.

Michalewicz Z, Fogel DB. How to solve it: modern heuristics, 2nd ed. Berlin: Springer; 2004.

Nobakhti A. On natural based optimization. Cognit Comput. 2010;2:97–119.

Shadbolt Nigel Nature-inspired computing. IEEE Intell Syst. 2004;1/2:1–3.

Zhang J, Zhan Z, et al. Enhancing evolutionary computation algorithms via machine learning techniques: a survey. IEEE Comput Intell Mag. 2011;68–75.

Zhang J, Chung H, Lo W Clustering-based adaptive crossover and mutation probabilities for genetic algorithms. IEEE Trans Evol Comput. 2007;11(3):326–35.

Chen Shu-Heng, et al. Genetic programming: an emerging engineering tool. Int J Knowl Based Intell Eng Syst. 2008;12(1):1–2.

Fogel LJ. Intelligence through simulated evolution : forty years of evolutionary programming. New York: Wiley; 1999.

Price K, Storn R, Lampinen J. Differential evolution: a practical approach to global optimization. Berlin: Springer; 2005.

Gao Y, Culberson J. Space complexity of estimation of distribution algorithms. Evol Comput. 2005;13(1):125–43.

Kennedy J, Eberhart RC. Swarm intelligence. San Francisco: Morgan Kaufmann; 2001.

Kennedy J, Eberhart R. Particle swarm optimization. In: Proceedings of the international conference on neural networks. Australia: Perth; 1995. pp. 1942–48.

Dorigo M, Gambardella L. Ant colony system: a cooperative learning approach to the traveling salesman problem. IEEE Trans Evol Comput. 1997;1(1):53–66.

Karaboga D, Akay B. A comparative Study of artificial bee colony algorithm. Appl Math Comput. 2009;214:108–32.

He S, Wu Q, Saunders J. Group search optimizer: an optimization algorithm inspired by animal searching behavior. IEEE Trans Evol Comput. 2009;13(5):973–90.

Passino K. Biomimicry of bacterial foraging for distributed optimization and control. IEEE Control Syst Mag. 2002;22:52–67.

Kirkpatrick S, Gelatt C, Vecchi M. Optimization by simulated annealing. Science, 1983;220(4598):671–80.

Haykin S. Neural networks: a comprehensive foundation. Englewood: Prentice Hall; 1999.

Bagheria A, Zandiehb M, Mahdavia Iraj, Yazdani M. An artificialimmunealgorithm for the flexible job-shop scheduling problem. Futur Gener Comput Syst. 2010;26(4):533–41.

Lam A, Li V. Chemical-reaction-inspired metaheuristic for optimization. IEEE Trans Evol Comput. 2010;14(3):381–99.

Chen X, Ong Y, Lim M. Research frontier: memetic computation—past, present and future. IEEE Comput Intell Mag. 2010;5(2):24–36.

Geem Z, Kim J, Loganathan G. A new heuristic optimization algorithm: harmony search. Simulation, 2001;76(2):60–68.

Simon D. Biogeography-based optimization. IEEE Trans Evol Comput. 2008;12(6):702–13.

Yang X-S. Cuckoo search via Lévy flights. World Congr Nat Biol Inspired Comput, 2009.

Eusuffa M, Lanseyb K, Pashab F. Shuffled frog-leaping algorithm: a memetic meta-heuristic for discrete optimization. Eng Optim. 2006;38(2):129–54.

Zelinka I. SOMA: self-organizing migrating algorithm. Berlin: Springer; 2004. pp. 167–217.

Kang Q, An J, Wang L, Wu Q. Unification and diversity of computation models for generalized swarm intelligence. Int J Artif Intell Tools. 2012;21(3):1240012.

Daniel G. Why did you Lévy?. Sci Technol Human Values 2011;332:1514.

Alfaro Andrea C. Population dynamics of the green-lipped mussel, Perna canaliculus, at various spatial and temporal scales in northern New Zealand. J Exp Mar Biol Ecol. 2006;334:294–315.

Haag WR, Warren ML. Role of ecological factors and reproductive strategies in structuring freshwater mussel communities. Can J Fish Aquat Sci. 1998;55:297–306.

Strayer D, Downing J, Haag W Changing perspectives on pearly mussels-North America’s most imperiled animals. BioScience, 2004;54(5):429–39.

de Jager M. Lvy walks evolve through interaction between movement and environmental complexity. Science, 2011;332:1551–53.

Viswanathan G. Fish in Lévy-flight foraging. Nat Environ Pollut Technol. 2010;465:1018–19.

Viswanathan G. Lévy flight search patterns of wandering albatrosses, Nature. 1996;381:413–15.

Brockmann D, Hufnagel L, Geisel T. The scaling laws of human travel. Nature 2006;439:462–65.

Cai Z, Wang Y. A multiobjective optimization-based evolutionary algorithm for constrained optimization. IEEE Trans Evol Comput. 2006;10:658–75.

Acknowledgments

The authors thank Prof. M. Zhou and Prof. R. Kozma for helpful discussions and constructive comments. The authors also thank the reviewers for their instrumental comments in improving this paper from its original version. This work was supported in part by the National Science Foundation of China (grants no. 61005090, 61034004, 61272271, and 91024023), the Natural Science Foundation Program of Shanghai (grant no. 12ZR1434000), the Program for New Century Excellent Talents in University of MOE of China (grant no. NECT-10-0633), and the Ph.D. Programs Foundation of MOE of China (grant no. 20100072110038).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

An, J., Kang, Q., Wang, L. et al. Mussels Wandering Optimization: An Ecologically Inspired Algorithm for Global Optimization. Cogn Comput 5, 188–199 (2013). https://doi.org/10.1007/s12559-012-9189-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12559-012-9189-5