Abstract

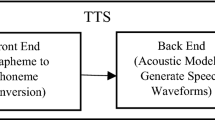

In developing voice conversion and emotional speech style transformation for text-to-speech systems, it is important to obtain feedback on how users perceive the quality of the resulting synthetic speech. For this reason, the quality of the produced synthetic speech must often be evaluated and compared. The main aim of the experiments described in this paper was to find out whether a classifier based on Gaussian mixture models (GMMs) could be applied to the evaluation of male and female resynthesized speech that had been transformed from the neutral state to four emotional states (joy, surprise, sadness, and anger) in the Czech and Slovak languages. We suppose that this GMM-based statistical evaluation can be combined with the classical evaluation in the form of listening tests, or can replace it. To verify this working hypothesis, a simple GMM emotional speech classifier with a one-level structure was implemented. A further task was to investigate the influence of different types of speech features (spectral and/or supra-segmental) and of their statistical values (mean, median, standard deviation, relative maximum, etc.) on the GMM classification accuracy. The obtained GMM evaluation scores are compared with the results of conventional listening tests based on mean opinion scores. In addition, the correctness of the GMM classification is analyzed with respect to the parameter settings used during GMM training: the number of mixture components and the types of speech features. The paper also describes a comparison experiment with a reference speech corpus taken from the Berlin database of emotional speech in German, which serves as a benchmark for the performance of our one-level GMM classifier. The obtained results confirm the practical usability of the developed GMM classifier, so we will continue this research with the aim of increasing the classification accuracy and comparing it with other approaches such as support vector machines.
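As an illustration of the one-level scheme summarized above, the following minimal sketch trains one GMM per emotion and assigns an utterance to the emotion whose model gives the highest average log-likelihood per frame. It is not the implementation used in the experiments: the maximum-likelihood decision rule, the pure-Python EM routine, the diagonal covariances, the function names, and the synthetic two-dimensional feature vectors are all assumptions made for illustration.

```python
import math
import random


def log_gauss_diag(x, mean, var):
    """Log density of a diagonal-covariance Gaussian at feature vector x."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of log values."""
    top = max(values)
    return top + math.log(sum(math.exp(v - top) for v in values))


def fit_gmm(data, n_mix, n_iter=30, seed=0, var_floor=1e-3):
    """Fit a diagonal GMM with EM; returns a list of (weight, mean, var)."""
    rng = random.Random(seed)
    n, dim = len(data), len(data[0])
    # Initialise means from random frames and variances from the global spread.
    gmean = [sum(x[d] for x in data) / n for d in range(dim)]
    gvar = [max(sum((x[d] - gmean[d]) ** 2 for x in data) / n, var_floor)
            for d in range(dim)]
    comps = [(1.0 / n_mix, list(x), list(gvar)) for x in rng.sample(data, n_mix)]
    for _ in range(n_iter):
        # E-step: responsibility of each component for each frame.
        resp = []
        for x in data:
            logs = [math.log(w) + log_gauss_diag(x, m, v) for w, m, v in comps]
            norm = logsumexp(logs)
            resp.append([math.exp(l - norm) for l in logs])
        # M-step: re-estimate weights, means, and floored variances.
        new_comps = []
        for k in range(n_mix):
            nk = max(sum(r[k] for r in resp), 1e-12)
            mean = [sum(r[k] * x[d] for r, x in zip(resp, data)) / nk
                    for d in range(dim)]
            var = [max(sum(r[k] * (x[d] - mean[d]) ** 2
                           for r, x in zip(resp, data)) / nk, var_floor)
                   for d in range(dim)]
            new_comps.append((nk / n, mean, var))
        comps = new_comps
    return comps


def score(gmm, frames):
    """Average per-frame log-likelihood of an utterance under one GMM."""
    return sum(logsumexp([math.log(w) + log_gauss_diag(x, m, v)
                          for w, m, v in gmm]) for x in frames) / len(frames)


def classify(models, frames):
    """One-level decision: pick the emotion whose GMM scores highest."""
    return max(models, key=lambda label: score(models[label], frames))


def synthetic_frames(center, n, rng):
    """Hypothetical stand-in for real feature vectors (unit-variance cloud)."""
    return [[rng.gauss(c, 1.0) for c in center] for _ in range(n)]


# Synthetic demo: two emotions with well-separated, made-up feature clusters.
rng = random.Random(1)
centers = {"joy": (5.0, 5.0), "sadness": (0.0, 0.0)}
models = {label: fit_gmm(synthetic_frames(c, 100, rng), n_mix=2)
          for label, c in centers.items()}
test_utterance = synthetic_frames(centers["joy"], 20, rng)
predicted = classify(models, test_utterance)
```

In this sketch, `n_mix` corresponds to the number of mixture components, one of the training parameters whose influence on classification accuracy is analyzed in the paper. A real evaluation would replace `synthetic_frames` with feature vectors extracted from the resynthesized speech.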

Acknowledgments

This work was supported by the Grant Agency of the Slovak Academy of Sciences (VEGA 2/0013/14) and the Ministry of Education of the Slovak Republic (VEGA 1/0987/12, KEGA 022STU-4/2014).

About this article

Cite this article

Přibil, J., Přibilová, A. GMM-Based Evaluation of Emotional Style Transformation in Czech and Slovak. Cogn Comput 6, 928–939 (2014). https://doi.org/10.1007/s12559-014-9283-y

DOI: https://doi.org/10.1007/s12559-014-9283-y