Abstract

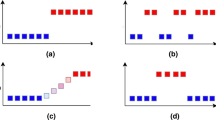

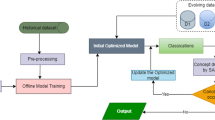

Detecting changes in data streams has attracted considerable attention in cognitive computing systems. The challenge is to monitor and detect these changes so that model performance is preserved during complex drifts. By complex drift, we mean a drift that exhibits several characteristics at the same time. The most challenging complex drifts are gradual continuous drifts, where changes become noticeable only over a long period of time. Moreover, these gradual drifts may also be local, in the sense that they affect only a small amount of data, which makes drift detection even more difficult. To address these complex drifts, a new drift detection mechanism, EDIST2, is proposed. EDIST2 monitors the learner's performance through a self-adaptive window that is adjusted autonomously by a statistical hypothesis test. This test provides theoretical guarantees on the false alarm rate, which were confirmed experimentally. EDIST2 was evaluated on six synthetic datasets presenting different kinds of complex drift and on five real-world datasets. Compared with similar approaches, EDIST2 achieved encouraging results: good accuracy on both synthetic and real-world datasets, together with the lowest detection delay and false alarm rate.
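The abstract describes the mechanism only at a high level and does not give EDIST2's test statistic or its window-adjustment rule. As a rough illustration of the general idea (a window of recent prediction errors that is cut back when a hypothesis test signals a change), the sketch below uses a one-sided two-proportion z-test on the learner's 0/1 errors. This is an assumed stand-in, not EDIST2's actual procedure; the class name `SelfAdaptiveWindow` and the parameters `recent`, `z_crit` and `max_size` are hypothetical.

```python
import math
from collections import deque


class SelfAdaptiveWindow:
    """Hypothetical sketch (not EDIST2): a window of recent 0/1 prediction
    errors that is shrunk whenever a one-sided two-proportion z-test says the
    newest `recent` errors are significantly more frequent than the older ones."""

    def __init__(self, recent=30, z_crit=2.576, max_size=2000):
        self.recent = recent        # size of the "current" sub-window (assumed)
        self.z_crit = z_crit        # 2.576 ~ one-sided significance level 0.005
        self.window = deque(maxlen=max_size)

    def update(self, error):
        """Add one prediction outcome; return True if a drift is signalled."""
        self.window.append(1 if error else 0)
        n = len(self.window)
        if n < 2 * self.recent:     # wait until both sub-windows are filled
            return False
        items = list(self.window)
        old, new = items[:-self.recent], items[-self.recent:]
        p_old = sum(old) / len(old)
        p_new = sum(new) / len(new)
        pooled = (sum(old) + sum(new)) / n
        se = math.sqrt(pooled * (1 - pooled) * (1 / len(old) + 1 / len(new)))
        if se == 0:                 # all errors or no errors: nothing to test
            return False
        z = (p_new - p_old) / se
        if z > self.z_crit:
            # Drift: forget the outdated part, keep only the recent errors.
            self.window = deque(new, maxlen=self.window.maxlen)
            return True
        return False


if __name__ == "__main__":
    detector = SelfAdaptiveWindow()
    # Deterministic toy stream: error rate 0.1 for 500 steps, then 0.5.
    stream = [(i % 10 == 0) if i < 500 else (i % 2 == 0) for i in range(1000)]
    alarms = [i for i, err in enumerate(stream) if detector.update(err)]
    print("drift signalled at steps:", alarms)
```

On this toy stream the alarm fires shortly after step 500, once enough post-change errors have entered the recent sub-window. Because the test is repeated at every step, the overall false alarm rate is higher than the per-test level implied by `z_crit`; the sketch makes no attempt to provide the kind of guarantee the abstract claims for EDIST2.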

Notes

Probably Approximately Correct learning model.

Appendix: EDIST2 Algorithm

Cite this article

Khamassi, I., Sayed-Mouchaweh, M., Hammami, M. et al. Self-Adaptive Windowing Approach for Handling Complex Concept Drift. Cogn Comput 7, 772–790 (2015). https://doi.org/10.1007/s12559-015-9341-0

DOI: https://doi.org/10.1007/s12559-015-9341-0