Abstract

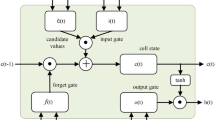

Event sequences with marker and timing information are available in a wide range of domains, from machine logs in automatic train supervision systems to information cascades in social networks. Given historical event sequences, predicting what event will happen next and when it will happen can benefit many applications, such as scheduling maintenance services for mass rapid transit trains and product advertising in social networks. The temporal point process (TPP) is an effective approach to the next-event prediction problem because it can capture the temporal dependence among events. Recent recurrent temporal point process (RTPP) methods use a recurrent neural network (RNN) to remove the parametric-form assumption on the density function of the TPP. However, most existing RTPP methods focus only on the temporal dependence among events. In this work, we design a novel multi-relation structure RNN model with a hierarchical attention mechanism that captures not only the conventional temporal dependencies but also explicit multi-relation topology dependencies. We then propose an RTPP algorithm whose density function is conditioned on the event sequence embedding learned by our RNN model, in order to cognitively predict the next event marker and time. Experiments on three real-world datasets show that our proposed MRS-RMTPP outperforms state-of-the-art baselines in both event marker prediction and event time prediction. These results indicate that capturing both the ontology relation structure and the temporal structure of event sequences is of great importance for predicting the next event marker and time.
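
To make "a density function conditioned on the event sequence embedding" concrete, the sketch below follows the RMTPP formulation of Du et al. (KDD 2016), on which RTPP methods of this kind build. It is an assumption that MRS-RMTPP uses exactly this form; the abstract does not give the formula. Here the conditional intensity is λ*(t) = exp(vᵀh_j + w(t − t_j) + b), where h_j is the embedding of the history up to event j (in the paper, produced by the multi-relation structure RNN with hierarchical attention). The hypothetical inputs `vh` (the scalar vᵀh_j), `w`, `b` and `marker_logits` stand in for learned quantities. The next marker is taken as the argmax of a softmax, and the expected time gap is obtained by numerically integrating t · f*(t).

```python
import math
from typing import List, Tuple


def intensity(vh: float, w: float, b: float, dt: float) -> float:
    """RMTPP-style intensity lambda*(t) = exp(v^T h_j + w * dt + b), with dt = t - t_j."""
    return math.exp(vh + w * dt + b)


def density(vh: float, w: float, b: float, dt: float) -> float:
    """f*(t) = lambda*(t) * exp(-integral of lambda* from t_j to t).

    Uses the closed-form integral of the exponential intensity; assumes w > 0.
    """
    if w <= 0.0:
        raise ValueError("this sketch assumes w > 0")
    base = vh + b
    exponent = base + w * dt
    if exponent > 700.0:
        # math.exp overflows past ~709; for typical w the integrated intensity
        # is then so large that f*(t) is effectively 0.
        return 0.0
    compensator = (math.exp(exponent) - math.exp(base)) / w
    return math.exp(exponent - compensator)


def expected_next_gap(vh: float, w: float, b: float,
                      upper: float = 50.0, steps: int = 20000) -> float:
    """E[t - t_j] = integral over [0, inf) of dt * f*(dt), approximated
    with the composite trapezoidal rule on the truncated range [0, upper]."""
    step = upper / steps
    total = 0.0
    for i in range(steps + 1):
        dt = i * step
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * dt * density(vh, w, b, dt)
    return total * step


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over candidate event markers."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def predict_next(marker_logits: List[float], vh: float, w: float,
                 b: float) -> Tuple[int, float]:
    """Return (most likely next marker index, expected time until the next event)."""
    probs = softmax(marker_logits)
    marker = max(range(len(probs)), key=probs.__getitem__)
    return marker, expected_next_gap(vh, w, b)


if __name__ == "__main__":
    marker, gap = predict_next([0.1, 2.0, -1.0], vh=0.0, w=1.0, b=0.0)
    print(marker, round(gap, 3))  # prints: 1 0.596
```

With vh = 0, w = 1 and b = 0, the gap satisfies e^(t − t_j) − 1 ~ Exp(1), so its exact mean is e·E₁(1) ≈ 0.5963, which the numerical estimate reproduces. The trapezoidal rule and the w > 0 restriction are simplifications chosen for this sketch; an adaptive quadrature routine could replace the fixed grid.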

Funding

This study was financially supported in part by the National Research Foundation (NRF), Prime Minister’s Office, Singapore, under its National Cybersecurity R&D Programme (Award No. NRF2014NCR-NCR001-31) and administered by the National Cybersecurity R&D Directorate. This research is also financially supported in part by the National Research Foundation, Prime Minister’s Office, Singapore, under its Campus for Research Excellence and Technological Enterprise (CREATE) programme.

Ethics declarations

Conflict of Interest

This research was mainly conducted while Hongyun Cai and Vincent W. Zheng worked at the Advanced Digital Sciences Center. This research was supported in part by the National Research Foundation (NRF), Prime Minister’s Office, Singapore, under its National Cybersecurity R&D Programme (Award No. NRF2014NCR-NCR001-31) and administered by the National Cybersecurity R&D Directorate. It is also supported in part by the National Research Foundation, Prime Minister’s Office, Singapore, under its Campus for Research Excellence and Technological Enterprise (CREATE) programme.

Additional information

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

About this article

Cite this article

Cai, H., Nguyen, T.T., Li, Y. et al. Modeling Marked Temporal Point Process Using Multi-relation Structure RNN. Cogn Comput 12, 499–512 (2020). https://doi.org/10.1007/s12559-019-09690-8

DOI: https://doi.org/10.1007/s12559-019-09690-8