Abstract

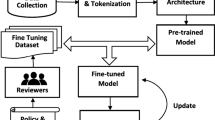

Creating a task-oriented dialogue agent or virtual agent (VA) that can manage complex, domain-specific user queries spanning multiple intents is difficult, because the agent must handle several subtasks simultaneously. Most end-to-end dialogue systems, however, feed only the user semantics extracted from text into the learning process and neglect other useful user behaviour and information from other modalities, such as images. This highlights the benefit of incorporating multi-modal inputs to elicit user preferences in the task. The user's sentiment also plays a significant role in achieving maximum user/customer satisfaction during the conversation, so it is important to incorporate users' sentiments during policy learning, especially when serving composite user goals. To build a multi-modal, sentiment-aided VA for conversations encompassing multiple intents, this paper introduces a new dataset, Vis-SentiVA (Visual and Sentiment aided VA), created from an open-access conversational dataset. We present an options-based hierarchical reinforcement learning (HRL) VA that learns policies for serving multi-intent dialogues. Multi-modal information (texts and images) is extracted to identify the user's preferences and is incorporated into the learning framework. A combination of task-based and sentiment-based rewards is integrated into the hierarchical value functions so that the VA adapts to the user. Empirically, we show that inducing all these aspects together in the learning framework plays a vital role in achieving higher dialogue task success and greater user contentment when building composite-natured VAs. This is the first effort to integrate sentiment-aware rewards into a multi-modal HRL framework. The paper highlights that it is essential to include other modes of information, such as images, and behavioural cues of the user, such as sentiment, to secure greater user contentment. This also helps improve the success of composite-natured VAs serving task-oriented dialogues.
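The abstract states that task-based and sentiment-based rewards are combined inside the hierarchical value functions of an options-based HRL agent, but it does not give the formulation. The following is a minimal sketch under stated assumptions, not the authors' implementation: it assumes a linear combination r = r_task + λ·r_sent with a sentiment score in [-1, 1], a tabular setting, a meta-controller over options (assumed to be one option per intent) updated with SMDP Q-learning as in the options framework, and a controller that learns primitive dialogue actions within the active option. The names `combined_reward` and `OptionsQLearner`, the weight 0.5, and all state, action, and option labels are hypothetical.

```python
from collections import defaultdict


def combined_reward(task_reward, sentiment_score, weight=0.5):
    """Hypothetical shaped reward: task reward plus a weighted sentiment term.

    Assumes sentiment_score lies in [-1, 1] (negative to positive user
    sentiment); the linear form and the default weight are illustrative only.
    """
    return task_reward + weight * sentiment_score


class OptionsQLearner:
    """Tabular two-level learner: a meta-controller picks an option (assumed to
    be one per intent) and a controller picks primitive dialogue actions."""

    def __init__(self, alpha=0.1, gamma=0.9):
        self.alpha = alpha                 # learning rate
        self.gamma = gamma                 # discount factor
        self.q_meta = defaultdict(float)   # (state, option) -> value
        self.q_ctrl = defaultdict(float)   # (state, option, action) -> value

    def update_controller(self, s, option, a, r, s_next, actions, done):
        """One-step Q-learning on primitive actions inside the active option."""
        best_next = 0.0 if done else max(self.q_ctrl[(s_next, option, b)] for b in actions)
        key = (s, option, a)
        self.q_ctrl[key] += self.alpha * (r + self.gamma * best_next - self.q_ctrl[key])

    def update_meta(self, s, option, option_return, k, s_next, options, done):
        """SMDP Q-learning: the option ran for k turns and option_return is the
        discounted sum of the combined rewards collected during those turns."""
        best_next = 0.0 if done else max(self.q_meta[(s_next, o)] for o in options)
        key = (s, option)
        target = option_return + (self.gamma ** k) * best_next
        self.q_meta[key] += self.alpha * (target - self.q_meta[key])


# Usage with hypothetical labels: two turns inside a "book_hotel" option.
learner = OptionsQLearner(alpha=0.5, gamma=0.9)
actions = ["request_slot", "inform", "show_image"]
options = ["book_hotel", "book_flight"]

r1 = combined_reward(task_reward=-1.0, sentiment_score=0.4)  # per-turn cost, mildly positive user
r2 = combined_reward(task_reward=5.0, sentiment_score=0.8)   # subtask completed, satisfied user
learner.update_controller("s0", "book_hotel", "request_slot", r1, "s1", actions, done=False)
learner.update_controller("s1", "book_hotel", "inform", r2, "s2", actions, done=True)

# The meta-controller sees the option's discounted return over k = 2 turns.
option_return = r1 + learner.gamma * r2
learner.update_meta("s0", "book_hotel", option_return, 2, "s2", options, done=True)
print(learner.q_meta[("s0", "book_hotel")])
```

In this sketch the same combined reward feeds both levels, so user sentiment influences which option (intent) is served next as well as which primitive action is taken inside it. A deep HRL agent would typically replace the tables with neural function approximators and obtain `sentiment_score` from a sentiment classifier.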

Acknowledgements

Dr. Sriparna Saha gratefully acknowledges the Young Faculty Research Fellowship (YFRF) Award, supported by Visvesvaraya PhD scheme for Electronics and IT, Ministry of Electronics and Information Technology (MeitY), Government of India, being implemented by Digital India Corporation (formerly Media Lab Asia) for carrying out this research.

Funding

This research has not been funded by any company or organization.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article belongs to the Topical Collection: A Decade of Sentic Computing

Guest Editors: Erik Cambria, Amir Hussain

About this article

Cite this article

Saha, T., Saha, S. & Bhattacharyya, P. Towards Sentiment-Aware Multi-Modal Dialogue Policy Learning. Cogn Comput 14, 246–260 (2022). https://doi.org/10.1007/s12559-020-09769-7

DOI: https://doi.org/10.1007/s12559-020-09769-7