Abstract

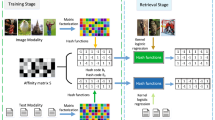

Unsupervised multi-modal hashing has received considerable attention in large-scale multimedia retrieval because of its low storage cost and high search speed. Existing unsupervised multi-modal hashing methods usually aim to mine the complementary and structural information of different modalities and preserve it in a low-dimensional discrete space. These methods have two main limitations: (1) The semantic properties shared across modalities and the modality-specific information are not explored simultaneously, which limits the improvement of retrieval accuracy. (2) Most multi-modal hashing methods rely on coarse fusion strategies that cause substantial information loss. In this paper, we present an unsupervised Joint and Individual Feature Fusion Hashing (JIFFH) method that jointly performs unified feature learning and individual feature learning. A two-layer fusion architecture with an adaptive weighting scheme is adopted to effectively fuse the common semantic properties and the modality-specific information. Experimental results on three public multi-modal datasets show that the proposed method outperforms state-of-the-art unsupervised multi-modal hashing methods. In conclusion, the proposed JIFFH method is effective at learning discriminative hash codes and can boost retrieval performance.
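
To make the general setting concrete, the following is a minimal sketch of a generic unsupervised multi-modal hashing baseline: weighted concatenation of modality features, a PCA projection, sign binarization, and Hamming-distance search. It is not the JIFFH algorithm, whose two-layer fusion and adaptive weighting are not specified in the abstract. The function names, the fixed modality weights, the PCA projection, and the random features are all assumptions made for illustration.

```python
import numpy as np

def hash_codes(modalities, weights, n_bits):
    """Generic baseline (an assumption, not JIFFH): weighted fusion -> PCA -> sign."""
    # Center each modality and scale it by its (here fixed, hypothetical) weight.
    blocks = [w * (X - X.mean(axis=0)) for X, w in zip(modalities, weights)]
    Z = np.hstack(blocks)  # fused real-valued representation
    # Rows of Vt are the principal directions of the fused features.
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    projected = Z @ Vt[:n_bits].T
    # Binarize to codes in {-1, +1}.
    return np.where(projected >= 0, 1, -1).astype(np.int8)

def hamming_distances(query, database):
    """Hamming distance between one {-1, +1} code and every row of database."""
    n_bits = database.shape[1]
    dots = database.astype(np.int32) @ query.astype(np.int32)
    # For {-1, +1} codes, distance = (n_bits - inner product) / 2.
    return (n_bits - dots) // 2

# Toy data standing in for image and text features (random, for illustration only).
rng = np.random.default_rng(0)
image_feats = rng.normal(size=(100, 32))
text_feats = rng.normal(size=(100, 16))

codes = hash_codes([image_feats, text_feats], weights=[0.6, 0.4], n_bits=8)
dists = hamming_distances(codes[0], codes)
ranking = np.argsort(dists, kind="stable")  # nearest codes first
```

Each item is stored as `n_bits` binary values and compared with integer arithmetic only, which is where the low storage cost and high search speed of hashing-based retrieval come from.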

Data Availability

Data is available through the corresponding authors on reasonable request.

Funding

The paper is supported by the startup foundation of Zhengzhou University of Light Industry (Grant No. 2021BSJJ025), the Henan Provincial Science and Technology Department (Grant Nos. 222102210064, 222102210010, 212102210095), and the Academic Degrees & Graduate Education Reform Project of Henan Province (No. 2021SJGLX115Y).

Ethics declarations

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Conflict of Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Section on Big Data Analytics

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yu, J., Zheng, Y., Wang, Y. et al. Joint and Individual Feature Fusion Hashing for Multi-modal Retrieval. Cogn Comput 15, 1053–1064 (2023). https://doi.org/10.1007/s12559-022-10086-4
