Abstract

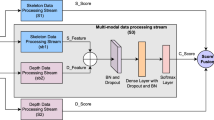

Fusion of multiple modalities from different sensors is an important area of research for multimodal human action recognition. In this paper, we conduct an in-depth study of how input preprocessing, data augmentation, network architecture and model fusion affect performance, with the aim of providing a practical guideline for multimodal action recognition using deep learning. First, for RGB videos, we propose a novel image-based descriptor called the stacked dense flow difference image (SDFDI), which captures the spatio-temporal information present in a video sequence. A variety of deep 2D convolutional neural networks (CNNs) are then trained to compare our SDFDI against state-of-the-art image-based representations. Second, for the skeleton stream, we propose a data augmentation technique based on 3D transformations to facilitate training a deep neural network on small datasets. We also propose a recurrent neural network (RNN) based on bidirectional gated recurrent units (BiGRU) to model skeleton data. Third, for inertial sensor data, we propose data augmentation based on jittering with white Gaussian noise, along with a deep 1D-CNN for action classification. The outputs of these three heterogeneous networks (1D-CNN, 2D-CNN and BiGRU) are combined using a variety of model fusion approaches based on score fusion and feature fusion. Finally, to illustrate the efficacy of the proposed framework, we test our model on the publicly available UTD-MHAD dataset and achieve an overall accuracy of 97.91%, which is about 4% higher than using each modality individually. We hope that the discussions and conclusions from this work will give researchers in related fields deeper insight and open avenues for further study of multi-sensor fusion architectures.
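To make the augmentation and score-fusion ideas in the abstract concrete, the following is a minimal NumPy sketch, not the authors' implementation. The function names (`jitter`, `rotate_skeleton`, `fuse_scores`), the noise level `sigma`, the choice of rotation as the 3D transformation, and the sum/product/max combination rules are all assumptions made for illustration; the abstract does not specify these details.

```python
import numpy as np


def jitter(signal, sigma=0.05, rng=None):
    """Add zero-mean white Gaussian noise to an inertial signal of shape (T, C).

    sigma is an assumed noise level, not a value taken from the paper.
    """
    rng = np.random.default_rng() if rng is None else rng
    return signal + rng.normal(0.0, sigma, size=signal.shape)


def rotate_skeleton(joints, angles_deg):
    """Rotate a skeleton sequence of shape (T, J, 3) about the x, y and z axes.

    Rotation is one possible 3D transformation; it is used here as an example.
    """
    ax, ay, az = np.deg2rad(angles_deg)
    rx = np.array([[1, 0, 0],
                   [0, np.cos(ax), -np.sin(ax)],
                   [0, np.sin(ax), np.cos(ax)]])
    ry = np.array([[np.cos(ay), 0, np.sin(ay)],
                   [0, 1, 0],
                   [-np.sin(ay), 0, np.cos(ay)]])
    rz = np.array([[np.cos(az), -np.sin(az), 0],
                   [np.sin(az), np.cos(az), 0],
                   [0, 0, 1]])
    rotation = rz @ ry @ rx
    # Each joint is stored as a row vector, so multiply by the transpose.
    return joints @ rotation.T


def fuse_scores(scores, rule="sum"):
    """Combine per-model class probabilities (each of shape (N, K)) into labels."""
    stacked = np.stack(scores, axis=0)  # shape (M, N, K) for M models
    if rule == "sum":
        fused = stacked.mean(axis=0)
    elif rule == "product":
        fused = stacked.prod(axis=0)
    elif rule == "max":
        fused = stacked.max(axis=0)
    else:
        raise ValueError(f"unknown rule: {rule}")
    return fused.argmax(axis=1)
```

In such a setup, `jitter` and `rotate_skeleton` would be applied to training samples only, and `fuse_scores` would receive the softmax outputs of the three networks for the same test samples. Feature fusion, the other family mentioned in the abstract, would instead combine intermediate feature vectors before a final classifier and is not sketched here.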

Notes

The UTD-MHAD dataset can be downloaded from http://www.utdallas.edu/~cxc123730/UTD-MHAD.html.

Funding

No funding source available

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Imran, J., Raman, B. Evaluating fusion of RGB-D and inertial sensors for multimodal human action recognition. J Ambient Intell Human Comput 11, 189–208 (2020). https://doi.org/10.1007/s12652-019-01239-9

DOI: https://doi.org/10.1007/s12652-019-01239-9