Abstract

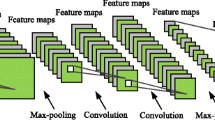

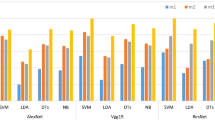

In image classification, many network architectures do not fully exploit both shallow and deep convolutional features. To address this, we propose a combinatorial convolutional network (CCNet) that integrates convolutional features from all levels. Following the network's own structure, convolutional features are extracted at shallow, medium, and deep levels. These features are combined by weighted concatenation and convolutional fusion, and the channels of the final combined feature are then reweighted to make the features more discriminative. CCNet thus goes beyond the common practice in which networks merely add or concatenate shallow and deep features, allowing it to achieve a lower classification error rate while generating low-dimensional features. Extensive experiments are performed on CIFAR-10 and CIFAR-100. The results show that the low-dimensional image feature vectors generated by CCNet effectively reduce the classification error rate when the network has no more than 100 convolutional layers.
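The three combination steps named in the abstract (weighted concatenation, convolutional fusion, channel reweighting) can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation. The function names, the scalar level weights, the use of a 1x1 convolution for fusion, and the squeeze-and-excitation-style gating for channel reweighting are all assumptions made for illustration, and the three feature levels are assumed to have already been brought to the same spatial size.

```python
import math
import random

# Feature maps are nested lists shaped [channels][height][width].
# All names, shapes, and weights below are hypothetical, chosen for illustration.

def weighted_concat(features, weights):
    """Scale each level by a scalar weight, then concatenate along channels."""
    out = []
    for fmap, w in zip(features, weights):
        for channel in fmap:
            out.append([[w * v for v in row] for row in channel])
    return out

def conv1x1(fmap, kernel):
    """1x1 convolution: every output channel is a linear mix of the
    input channels, computed independently at each pixel."""
    h, w = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(k * fmap[c][i][j] for c, k in enumerate(k_row))
          for j in range(w)] for i in range(h)]
        for k_row in kernel
    ]

def channel_reweight(fmap, w1, w2):
    """Assumed SE-style gating: global average pool, FC + ReLU,
    FC + sigmoid, then scale each channel by its gate."""
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    hidden = [max(0.0, sum(a * p for a, p in zip(r, pooled))) for r in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(a * x for a, x in zip(r, hidden))))
             for r in w2]
    scaled = [[[g * v for v in row] for row in ch] for ch, g in zip(fmap, gates)]
    return scaled, gates

def rand_map(channels, height=2, width=2):
    """Random stand-in for a convolutional feature map."""
    return [[[random.random() for _ in range(width)] for _ in range(height)]
            for _ in range(channels)]

random.seed(0)
shallow, medium, deep = rand_map(2), rand_map(3), rand_map(4)

# Step 1: weighted concatenation of the three levels (2 + 3 + 4 = 9 channels).
combined = weighted_concat([shallow, medium, deep], [0.2, 0.3, 0.5])

# Step 2: convolutional fusion down to 4 channels (low-dimensional feature).
kernel = [[random.uniform(-1, 1) for _ in range(9)] for _ in range(4)]
fused = conv1x1(combined, kernel)

# Step 3: reweight the channels of the fused feature.
w1 = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(2)]
w2 = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(4)]
reweighted, gates = channel_reweight(fused, w1, w2)
```

In this sketch the level weights and all kernels are fixed random numbers; in a trained network they would be learned parameters, and the fusion step is where the channel count (and hence the feature dimensionality) is reduced.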

Acknowledgements

This work is supported by the National Natural Science Foundation of China (no. 51641609) and the Natural Science Foundation of Hebei Province of China (no. F2015203212).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Wu, C., Li, Y., Zhao, Z. et al. Research on image classification method of features of combinatorial convolution. J Ambient Intell Human Comput 11, 2913–2923 (2020). https://doi.org/10.1007/s12652-019-01433-9

DOI: https://doi.org/10.1007/s12652-019-01433-9