Abstract

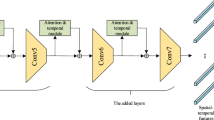

Recognizing human actions and interactions from a first-person viewpoint is an interesting area of research within human action recognition (HAR). This paper presents a data-driven spatio-temporal network that combines different modalities computed from first-person videos using a temporal attention mechanism. First, the proposed approach uses a three-stream inflated 3D ConvNet (I3D) to extract low-level features from RGB frame difference (FD), optical flow (OF) and magnitude-orientation (MO) streams. An I3D network has the advantage of learning spatio-temporal features directly over short video snippets (for example, 16 frames). Second, the extracted features are fused and fed to a bidirectional long short-term memory (BiLSTM) network to model high-level temporal feature sequences. Third, we incorporate an attention mechanism into the BiLSTM network to automatically select the most relevant temporal snippets in a given video sequence. Finally, we conduct extensive experiments and achieve state-of-the-art results on the JPL (98.5%), NUS (84.1%), UTK (91.5%) and DogCentric (83.3%) datasets. These results show that the features extracted from the three streams are complementary to each other, and that the attention mechanism further improves the results by a large margin over previous approaches based on handcrafted and deep features.
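The fusion and temporal attention steps described above can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not state the fusion operator or the attention scoring function, so concatenation and a dot-product score against a vector `w` are assumptions made here for illustration. The I3D and BiLSTM stages are omitted, and the small per-snippet vectors stand in for their outputs. The names `fuse_streams`, `temporal_attention`, and `w` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def fuse_streams(fd: Sequence[float], of: Sequence[float],
                 mo: Sequence[float]) -> List[float]:
    """Fuse one snippet's FD, OF and MO features.

    Concatenation is an assumption; the abstract only says the
    features are "fused".
    """
    return list(fd) + list(of) + list(mo)


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(snippets: Sequence[Sequence[float]],
                       w: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Weight snippets by relevance and pool them into one vector.

    Each snippet is scored by a dot product with w (an assumed
    scoring function), the scores are normalized with softmax, and
    the snippets are averaged using those weights.
    """
    scores = [sum(wi * hi for wi, hi in zip(w, h)) for h in snippets]
    weights = softmax(scores)
    dim = len(snippets[0])
    context = [sum(a * h[d] for a, h in zip(weights, snippets))
               for d in range(dim)]
    return weights, context


# Toy input: three snippets with one feature per stream (illustrative values).
snippets = [
    fuse_streams([0.1], [0.2], [0.0]),
    fuse_streams([0.9], [0.8], [0.7]),
    fuse_streams([0.3], [0.1], [0.2]),
]
w = [1.0, 1.0, 1.0]  # hypothetical scoring vector; learned in a real model
weights, context = temporal_attention(snippets, w)
```

In this toy input the second snippet has the highest score, so it receives the largest attention weight and dominates the pooled vector `context`, which is the behaviour the abstract describes as selecting the most relevant temporal snippets.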

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Funding

No funding source available.

About this article

Cite this article

Imran, J., Raman, B. Three-stream spatio-temporal attention network for first-person action and interaction recognition. J Ambient Intell Human Comput 13, 1137–1152 (2022). https://doi.org/10.1007/s12652-021-02940-4

DOI: https://doi.org/10.1007/s12652-021-02940-4