Abstract

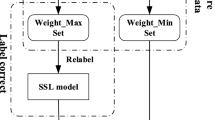

Machine learning techniques are vulnerable to adversarial attacks in which an attacker misleads the learning process by manipulating training samples. Data sanitization is one countermeasure against such poisoning attacks: it is a data pre-processing method that filters suspect samples before learning. A number of data sanitization methods have recently been devised for label flip attacks, but their flexibility is limited by the specific assumptions they make. We observe that the abrupt label flips caused by an attack change the complexity of the classification problem. This paper therefore proposes a data sanitization method based on data complexity, a measure of the difficulty of classifying a dataset. Our method measures the data complexity of a training set after removing a sample and its nearest samples. Contaminated samples are then distinguished from untainted samples according to their data complexity values. Experimental results support the idea that data complexity can be used to identify attack samples. The proposed method achieves better detection accuracy than the current sanitization method on well-known security application problems.
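
The removal-based scoring idea described in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation. The abstract does not say which complexity measure is used, so the leave-one-out 1-NN error rate (the N3 measure from the data complexity literature) is assumed here as a stand-in, and the function names, the neighbourhood size `k`, and the toy data are all hypothetical.

```python
import numpy as np


def pairwise_sq_dists(X):
    """Squared Euclidean distances between all rows of X."""
    X = np.asarray(X, dtype=float)
    diff = X[:, None, :] - X[None, :, :]
    return (diff ** 2).sum(axis=-1)


def n3_complexity(X, y):
    """Leave-one-out 1-NN error rate (assumed stand-in complexity measure)."""
    d = pairwise_sq_dists(X)
    np.fill_diagonal(d, np.inf)  # a sample may not be its own neighbour
    nearest = d.argmin(axis=1)
    return float(np.mean(y[nearest] != y))


def removal_scores(X, y, k=3):
    """Drop in complexity after removing sample i and its k nearest samples."""
    base = n3_complexity(X, y)
    d = pairwise_sq_dists(X)
    scores = np.empty(len(X))
    for i in range(len(X)):
        order = np.argsort(d[i], kind="stable")
        neighbours = order[order != i][:k]
        keep = np.ones(len(X), dtype=bool)
        keep[i] = False
        keep[neighbours] = False
        # A large drop suggests the removed neighbourhood held a flipped label.
        scores[i] = base - n3_complexity(X[keep], y[keep])
    return scores


# Toy data (hypothetical): two 5x5 grids far apart, one label flipped.
grid = np.array([(a, b) for a in range(5) for b in range(5)], dtype=float)
X = np.vstack([grid, grid + [20.0, 0.0]])
y = np.array([0] * 25 + [1] * 25)
flipped = 12  # centre of the first grid
y[flipped] = 1

scores = removal_scores(X, y, k=3)
```

On this toy set the flipped sample attains the highest score, while samples in the untouched grid score below zero. Clean samples whose removed neighbourhood happens to contain the flipped point can tie with it, so a practical detector would need an additional step to separate a contaminated sample from its neighbours.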

Acknowledgements

This work is supported by the Fundamental Research Funds for the Central Universities (2015ZZ092).

Cite this article

Chan, P.P.K., He, ZM., Li, H. et al. Data sanitization against adversarial label contamination based on data complexity. Int. J. Mach. Learn. & Cyber. 9, 1039–1052 (2018). https://doi.org/10.1007/s13042-016-0629-5

DOI: https://doi.org/10.1007/s13042-016-0629-5