Abstract

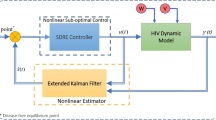

This paper presents a new method for reducing the number of infected cells and free virus particles (virions) in a nonlinear HIV model. Control performance is evaluated in three scenarios. In the first, the system and its initial conditions are assumed to be completely known. In the second, the initial conditions are chosen randomly. In the third, additive noise is taken into account in addition to the uncertainty in the initial conditions. An optimal control approach is used to design an effective drug schedule that reduces the number of infected cells and free virions, both with and without uncertainty. The drug delivery rate is obtained off-line using Q-learning, which is among the most widely applicable algorithms in reinforcement learning. Because Q-learning is a model-free algorithm, the control performance is expected not to change significantly in the presence of uncertainty. Simulation results confirm that the proposed control method performs well in controlling the free virions for both certain and uncertain HIV models.
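The idea of learning a drug schedule off-line with Q-learning can be illustrated with a minimal tabular sketch. Everything specific in it is an assumption made for illustration and is not taken from the paper: the generic three-state viral dynamics model (uninfected cells `x`, infected cells `y`, free virions `v`), the parameter values, the three drug efficacy levels in `ACTIONS`, the virus-load bins in `V_EDGES`, and the reward. Random initial conditions and an additive disturbance loosely mirror the second and third scenarios.

```python
import random

# Illustrative parameters for a basic three-state viral dynamics model.
# These values are assumptions chosen for numerical convenience, not the paper's.
LAM, D, BETA, A, K, C = 1.0, 0.1, 0.2, 0.5, 5.0, 3.0
DT, SUBSTEPS = 0.05, 20           # Euler step size and Euler steps per decision
ACTIONS = [0.0, 0.5, 1.0]         # hypothetical drug efficacy levels u
V_EDGES = [0.01, 0.1, 0.5, 1.0, 2.0, 4.0, 8.0]  # hypothetical virus-load bin edges


def step(state, u, rng=None, noise=0.0):
    """Advance (x, y, v) by one decision interval under drug efficacy u."""
    x, y, v = state
    for _ in range(SUBSTEPS):
        infection = (1.0 - u) * BETA * x * v
        dx = LAM - D * x - infection
        dy = infection - A * y
        dv = K * y - C * v
        x, y, v = x + DT * dx, y + DT * dy, v + DT * dv
        if rng is not None and noise > 0.0:
            v += noise * rng.gauss(0.0, 1.0)  # additive disturbance on the virus load
        x, y, v = max(x, 0.0), max(y, 0.0), max(v, 0.0)
    return (x, y, v)


def bin_of(v):
    """Map a virus load to a discrete state index (number of edges at or below v)."""
    return sum(v >= edge for edge in V_EDGES)


def train(episodes=300, horizon=30, alpha=0.1, gamma=0.95, eps=0.2,
          noise=0.0, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(len(V_EDGES) + 1)]
    for _ in range(episodes):
        # Random initial condition for each episode.
        state = (rng.uniform(5.0, 10.0), rng.uniform(0.1, 2.0), rng.uniform(0.1, 2.0))
        s = bin_of(state[2])
        for _ in range(horizon):
            if rng.random() < eps:
                a = rng.randrange(len(ACTIONS))          # explore
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[s][i])  # exploit
            state = step(state, ACTIONS[a], rng, noise)
            s_next = bin_of(state[2])
            reward = -(state[2] + 0.5 * ACTIONS[a])      # penalize virus load and drug use
            # Q-learning update: bootstrap from the best action in the next state.
            q[s][a] += alpha * (reward + gamma * max(q[s_next]) - q[s][a])
            s = s_next
    return q


q_table = train(noise=0.01)
# Greedy drug efficacy for each virus-load bin, read off the learned table.
policy = [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q_table]
print(policy)
```

The update uses only sampled transitions and rewards, never the model equations themselves, which is the sense in which Q-learning is model-free; the simulator here merely stands in for the system being controlled. A coarse one-dimensional state (virus load only) keeps the table small, at the cost of ignoring the cell populations.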

Cite this article

Gholizade-Narm, H., Noori, A. Control the population of free viruses in nonlinear uncertain HIV system using Q-learning. Int. J. Mach. Learn. & Cyber. 9, 1169–1179 (2018). https://doi.org/10.1007/s13042-017-0639-y

DOI: https://doi.org/10.1007/s13042-017-0639-y