Abstract

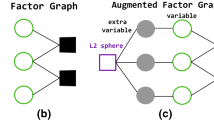

A key problem in variational inference is how to transform a family of simple distributions so that it approximates an intractable posterior distribution in a scalable manner. Recent work has produced flexible approximate posterior distributions by using flow-based models with long flow structures. However, as the dimension of the data increases, a long flow structure incurs high computational complexity and large variance. In this paper, we therefore propose variational inference with dynamic routing flow (DRF), which preserves the diversity of the flows while using a shorter flow structure. The proposed model consists of a series of iterative sub-module transformations, and each sub-module gains greater expressive power by using routing-by-agreement to form a weighted mixture of invertible transformations. The routing in these sub-modules can be computed in parallel, and the sub-modules share the same group of invertible functions, which makes inference more efficient. The experimental results show that the proposed DRF model performs well on posterior distribution estimation in terms of both accuracy and precision.
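As background on what a flow-based model computes, the following is a minimal sketch of a single planar-flow step and the change-of-variables bookkeeping for the resulting density. It is not the DRF model and does not include routing-by-agreement. The function names (`planar_flow`, `flow_log_density`), the choice of a standard normal base distribution, and the parameter values are assumptions made for illustration only.

```python
import math


def planar_flow(z, u, w, b):
    """Apply f(z) = z + u * tanh(w.z + b); return (f(z), log|det J|).

    Illustrative only. For tanh, the map is invertible when w.u >= -1.
    """
    a = sum(wi * zi for wi, zi in zip(w, z)) + b
    h = math.tanh(a)
    z_new = [zi + ui * h for zi, ui in zip(z, u)]
    # Matrix determinant lemma: det(I + u psi^T) = 1 + u.psi,
    # with psi = (1 - tanh(a)^2) * w.
    uw = sum(ui * wi for ui, wi in zip(u, w))
    log_det = math.log(abs(1.0 + (1.0 - h * h) * uw))
    return z_new, log_det


def log_standard_normal(z):
    """Log density of an isotropic standard normal (assumed base q_0)."""
    return -0.5 * sum(zi * zi for zi in z) - 0.5 * len(z) * math.log(2.0 * math.pi)


def flow_log_density(z0, layers):
    """Return (z_K, log q_K(z_K)) for a chain of planar flows.

    Uses log q_K(z_K) = log q_0(z_0) - sum_k log|det J_k|.
    `layers` is a list of hypothetical (u, w, b) parameter triples.
    """
    log_q = log_standard_normal(z0)
    z = list(z0)
    for u, w, b in layers:
        z, log_det = planar_flow(z, u, w, b)
        log_q -= log_det
    return z, log_q
```

Each additional layer adds one more log-determinant term to the sum, which is why long flow chains become more expensive as the number of layers and the dimension grow.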

Acknowledgements

We thank the anonymous reviewers for their insightful suggestions, which greatly improved this manuscript. This research is supported by the Natural Science Foundation of Hebei Province (F2018201115, F2018201096), the Key Science and Technology Foundation of the Education Department of Hebei Province, China (ZD2019021), and the Youth Scientific Research Foundation of the Education Department of Hebei Province (QN2017019).

Cite this article

Hua, Q., Wei, L., Dong, C. et al. Improved variational inference with dynamic routing flow. Int. J. Mach. Learn. & Cyber. 11, 301–312 (2020). https://doi.org/10.1007/s13042-019-00974-x

DOI: https://doi.org/10.1007/s13042-019-00974-x