Abstract

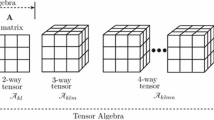

To exploit the information in two-dimensional structured data, two-dimensional principal component analysis (2-DPCA) has been widely used for dimensionality reduction and feature extraction. However, 2-DPCA is sensitive to outliers, which are common in real applications. Many robust 2-DPCA methods have therefore been proposed, but they have several weaknesses. First, they are still not robust enough to outliers. Second, centering a sample set contaminated by outliers with the L2-norm distance usually yields a biased mean. Third, most of them do not preserve rotational invariance, a desirable property of 2-DPCA that is important for learning algorithms. To alleviate these issues, we present a generalized robust 2-DPCA, named 2-DPCA with \(\ell _{2,p}\)-norm minimization (\(\ell _{2,p}\)-2-DPCA), for image representation and recognition. In \(\ell _{2,p}\)-2-DPCA, the \(\ell _{2,p}\)-norm is employed as the distance metric for measuring the reconstruction error, which alleviates the effect of outliers. As a result, the proposed method is robust to outliers and preserves the rotational invariance of 2-DPCA, so it characterizes the geometric structure of the samples well. Moreover, most existing robust PCA methods estimate the sample mean of a database containing outliers by simple averaging, which is usually biased. In \(\ell _{2,p}\)-2-DPCA, the sample mean is instead treated as an unknown variable, which remedies this bias. To solve \(\ell _{2,p}\)-2-DPCA, we propose an iterative algorithm that has a closed-form solution in each iteration. Experimental results on several benchmark databases demonstrate the effectiveness and advantages of our method.
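The abstract states the method only at a high level. The following is a minimal sketch, written with NumPy, of one generic way to combine its three ingredients: a robust reconstruction error raised to a power p, a sample mean learned as a variable, and alternating updates that are each closed-form. It assumes the objective sum_i ||(A_i - M)(I - W W^T)||_F^p over m x n image matrices A_i, with an n x k projection W satisfying W^T W = I and 0 < p <= 2, and it minimizes that objective by standard iteratively reweighted least squares. The objective, the weights d_i = ||E_i||_F^(p-2), and the function name `l2p_2dpca` are illustrative assumptions; this is not the authors' exact formulation or algorithm.

```python
import numpy as np


def l2p_2dpca(samples, k, p=1.0, n_iter=50, eps=1e-8):
    """Sketch of a robust 2-DPCA via iteratively reweighted least squares.

    ASSUMED objective (illustrative, not taken from the paper):
        sum_i ||(A_i - M)(I - W W^T)||_F^p,  with W^T W = I and 0 < p <= 2.
    samples: array of shape (N, m, n) holding N image matrices A_i.
    Returns the projection W (n x k) and the learned mean M (m x n).
    """
    A = np.asarray(samples, dtype=float)
    N, m, n = A.shape
    d = np.ones(N)  # per-sample weights; all ones makes the first pass plain 2-DPCA
    for _ in range(n_iter):
        # Closed-form mean update: with weights fixed, the weighted average
        # minimizes sum_i d_i ||(A_i - M)(I - W W^T)||_F^2 for any W.
        M = np.tensordot(d, A, axes=1) / d.sum()
        X = A - M  # centered samples, shape (N, m, n)
        # Weighted 2-DPCA image covariance G = sum_i d_i X_i^T X_i (n x n).
        G = np.einsum("i,iab,iac->bc", d, X, X)
        # Closed-form projection update: top-k eigenvectors of G
        # (eigh returns eigenvalues in ascending order, so reverse the columns).
        _, eigvecs = np.linalg.eigh(G)
        W = eigvecs[:, ::-1][:, :k]
        # Residuals E_i = X_i (I - W W^T) and their Frobenius norms.
        E = X - X @ W @ W.T
        r = np.linalg.norm(E.reshape(N, -1), axis=1)
        # Reweighting: samples with large residuals (likely outliers) get small weights.
        d = np.maximum(r, eps) ** (p - 2)
    return W, M


if __name__ == "__main__":
    # Synthetic data, for illustration only.
    rng = np.random.default_rng(0)
    A = rng.normal(size=(30, 8, 6))
    W, M = l2p_2dpca(A, k=3, p=0.5)
    features = (A - M) @ W  # one m x k feature matrix per sample
    print(features.shape)
```

With p = 2 every weight equals one, so the sketch reduces to ordinary 2-DPCA with the arithmetic mean; smaller p down-weights samples with large residuals more aggressively. Because each weight depends only on the Frobenius norm of a whole residual matrix, multiplying every sample on the left by the same orthogonal matrix leaves W and the objective unchanged, which is one concrete sense of rotational invariance in this sketch.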

Acknowledgements

This work was supported by the Natural Science Foundation of Chongqing (Grant Nos. cstc2018jcyjAX0532 and cstc2016jcyjA0407) and the National Natural Science Foundation of China (Grant No. 61906024).

About this article

Cite this article

Mi, JX., Zhang, YN., Li, Y. et al. Generalized two-dimensional PCA based on \(\ell _{2,p}\)-norm minimization. Int. J. Mach. Learn. & Cyber. 11, 2421–2438 (2020). https://doi.org/10.1007/s13042-020-01127-1
