Abstract

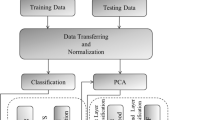

A causative attack, which manipulates training samples to mislead learning, is a common attack scenario. Current countermeasures reduce the influence of the attack on a classifier at the cost of generalization ability, so the collected samples should be analyzed carefully. Most countermeasures against causative attacks focus on data sanitization and robust classifier design. To the best of our knowledge, no existing work determines whether a given dataset has been contaminated by a causative attack. In this study, we formulate causative attack detection as a 2-class classification problem in which each sample represents a dataset quantified by data complexity measures, which describe the geometrical characteristics of the data. Because a causative attack changes the geometrical nature of a dataset, we believe data complexity measures provide useful information for causative attack detection. Furthermore, a two-step secure classification model is proposed to demonstrate how the proposed detection improves the robustness of learning: either a robust or a traditional learning method is used, depending on whether a causative attack is detected. Experimental results illustrate that data complexity measures clearly separate untainted datasets from attacked ones, and confirm the promising performance of the proposed methods in terms of accuracy and robustness. The results consistently suggest that data complexity measures provide crucial information for detecting causative attacks and are useful for increasing the robustness of learning.
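
The idea of describing a whole dataset by complexity measures and then classifying it as untainted or attacked can be illustrated with a minimal sketch. It is not the method evaluated in the paper. It assumes two measures only, the maximum Fisher's discriminant ratio (F1) and the leave-one-out 1-NN error rate (N3), both from the data complexity literature; synthetic Gaussian data; random label flips as the attack; and a nearest-centroid rule as the dataset-level detector. All function names are hypothetical.

```python
import random

def fisher_ratio(X, y):
    """Maximum Fisher's discriminant ratio over features (F1), two classes."""
    best = 0.0
    for j in range(len(X[0])):
        a = [x[j] for x, c in zip(X, y) if c == 0]
        b = [x[j] for x, c in zip(X, y) if c == 1]
        mu_a, mu_b = sum(a) / len(a), sum(b) / len(b)
        var_a = sum((v - mu_a) ** 2 for v in a) / len(a)
        var_b = sum((v - mu_b) ** 2 for v in b) / len(b)
        if var_a + var_b > 0:
            best = max(best, (mu_a - mu_b) ** 2 / (var_a + var_b))
    return best

def loo_1nn_error(X, y):
    """Leave-one-out error rate of the 1-nearest-neighbour rule (N3)."""
    errors = 0
    for i, xi in enumerate(X):
        nearest = min((k for k in range(len(X)) if k != i),
                      key=lambda k: sum((p - q) ** 2 for p, q in zip(xi, X[k])))
        errors += y[nearest] != y[i]
    return errors / len(X)

def complexity_vector(X, y):
    """One dataset becomes one sample for the detector."""
    return (fisher_ratio(X, y), loo_1nn_error(X, y))

def make_blobs(rng, n_per_class=40, gap=5.0):
    """Assumed toy data: two unit-variance Gaussian classes in 2-D."""
    X = [[rng.gauss(c * gap, 1.0), rng.gauss(c * gap, 1.0)]
         for c in (0, 1) for _ in range(n_per_class)]
    y = [c for c in (0, 1) for _ in range(n_per_class)]
    return X, y

def flip_labels(y, rate, rng):
    """Assumed attack: flip the labels of a random fraction of samples."""
    flipped = list(y)
    for i in rng.sample(range(len(y)), int(rate * len(y))):
        flipped[i] = 1 - flipped[i]
    return flipped

def centroid(vectors):
    return [sum(col) / len(col) for col in zip(*vectors)]

def detect(vector, clean_centroid, tainted_centroid):
    """Nearest-centroid detector: returns 1 if the dataset looks attacked."""
    d_clean = sum((a - b) ** 2 for a, b in zip(vector, clean_centroid))
    d_tainted = sum((a - b) ** 2 for a, b in zip(vector, tainted_centroid))
    return int(d_tainted < d_clean)

# Build the detector's training set: each row describes one whole dataset.
rng = random.Random(0)
clean_vecs, tainted_vecs = [], []
for _ in range(10):
    X, y = make_blobs(rng)
    clean_vecs.append(complexity_vector(X, y))
    tainted_vecs.append(complexity_vector(X, flip_labels(y, 0.2, rng)))
clean_c, tainted_c = centroid(clean_vecs), centroid(tainted_vecs)

# Two-step use: detect first, then pick the learner accordingly.
X_new, y_new = make_blobs(rng)
y_attacked = flip_labels(y_new, 0.2, rng)
for name, labels in (("clean", y_new), ("attacked", y_attacked)):
    attacked = detect(complexity_vector(X_new, labels), clean_c, tainted_c)
    learner = "robust" if attacked else "traditional"
    print(name, "dataset -> use", learner, "learner")
```

In this toy setting, label flips pull the class means together and place opposite-label neighbours next to each other, so F1 drops and N3 rises. This is the kind of geometric change the detector relies on. A real detector would likely use more measures, scale them, and be trained on many real datasets.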

References

Aha DW, Kibler D (1989) Noise-tolerant instance-based learning algorithms. In: Proceedings of the 11th international joint conference on artificial intelligence—Volume 1, Morgan Kaufmann Publishers Inc., San Francisco, CA, USA, IJCAI’89, pp 794–799

Alcalá-Fdez J, Fernández A, Luengo J, Derrac J, García S, Sánchez L, Herrera F (2011) Keel data-mining software tool: data set repository, integration of algorithms and experimental analysis framework. J Multiple-Valued Logic Soft Comput 17(2–3):255–287

Bache K, Lichman M (2013) UCI machine learning repository. http://archive.ics.uci.edu/ml. Accessed 6 Oct 2018

Barreno M, Nelson B, Sears R, Joseph AD, Tygar JD (2006) Can machine learning be secure? In: Proceedings of the 2006 ACM symposium on information, computer and communications security, ACM, ASIACCS ’06, pp 16–25

Barreno M, Nelson B, Joseph AD, Tygar JD (2010) The security of machine learning. Mach Learning 81(2):121–148

Bernado-Mansilla E, Ho TK (2005) Domain of competence of xcs classifier system in complexity measurement space. IEEE Trans Evolut Comput 9(1):82–104

Bhagoji AN, He W, Li B, Song D (2018) Practical black-box attacks on deep neural networks using efficient query mechanisms. In: European conference on computer vision, Springer, pp 158–174

Biggio B (2010) Adversarial pattern classification. PhD thesis, University of Cagliari, Cagliari (Italy)

Biggio B, Roli F (2018) Wild patterns: ten years after the rise of adversarial machine learning. Pattern Recognition 84:317–331

Biggio B, Fumera G, Roli F (2010) Multiple classifier systems for robust classifier design in adversarial environments. Int J Mach Learning Cybernet 1(1–4):27–41

Biggio B, Corona I, Fumera G, Giacinto G, Roli F (2011a) Bagging classifiers for fighting poisoning attacks in adversarial classification tasks. In: International workshop on multiple classifier systems. Springer, Berlin, pp 350–359

Biggio B, Nelson B, Laskov P (2011b) Support vector machines under adversarial label noise. In: Journal of machine learning research—proc. 3rd Asian conference on machine learning (ACML 2011), Taoyuan, Taiwan, vol 20, pp 97–112

Biggio B, Nelson B, Laskov P (2012) Poisoning attacks against support vector machines. In: 29th Int’l Conf. on Machine Learning (ICML), Omnipress

Biggio B, Corona I, Maiorca D, Nelson B, Srndic N, Laskov P, Giacinto G, Roli F (2013) Evasion attacks against machine learning at test time. In: European conference on machine learning and principles and practice of knowledge discovery in databases (ECML PKDD), Springer-Verlag Berlin Heidelberg, vol 8190, pp 387–402

Biggio B, Fumera G, Roli F (2014) Security evaluation of pattern classifiers under attack. IEEE Trans Knowl Data Eng 26:984–996

Biggio B, Corona I, He ZM, Chan PPK, Giacinto G, Yeung DS, Roli F (2015) One-and-a-half-class multiple classifier systems for secure learning against evasion attacks at test time. Int’l Workshop Multiple Classifier Syst (MCS) 9132:168–180

Britto AS, Sabourin R, Oliveira LE (2014) Dynamic selection of classifiers: a comprehensive review. Pattern Recognition 47(11):3665–3680

Callison-Burch C, Dredze M (2010) Creating speech and language data with amazon’s mechanical turk. In: Proceedings of the NAACL HLT 2010 workshop on creating speech and language data with Amazon’s Mechanical Turk, Association for Computational Linguistics, pp 1–12

Carlini N, Wagner D (2017) Towards evaluating the robustness of neural networks. In: 2017 IEEE symposium on security and privacy (SP), IEEE, pp 39–57

Chan PP, He ZM, Li H, Hsu CC (2018) Data sanitization against adversarial label contamination based on data complexity. Int J Mach Learn Cybernet 9(6):1039–1052

Chandola V, Banerjee A, Kumar V (2009) Anomaly detection: a survey. ACM Comput Surveys (CSUR) 41(3):15

Chang CC, Lin CJ (2011) Libsvm: a library for support vector machines. ACM Transactions on Intelligent Systems and Technology (TIST) 2(3):27

Cheng H, Yan X, Han J, Hsu CW (2007) Discriminative frequent pattern analysis for effective classification. In: IEEE 23rd international conference on data engineering, IEEE, pp 716–725

Chung SP, Mok AK (2006) Allergy attack against automatic signature generation. In: Proceedings of the 9th international conference on recent advances in intrusion detection, Springer-Verlag, RAID’06, pp 61–80

Cretu GF, Stavrou A, Locasto ME, Stolfo SJ, Keromytis AD (2008) Casting out demons: sanitizing training data for anomaly sensors. In: Security and privacy, 2008. SP 2008. IEEE symposium on, IEEE, pp 81–95

Dalvi N, Domingos P, Mausam, Sanghai S, Verma D (2004) Adversarial classification. In: Proceedings of the Tenth ACM SIGKDD International conference on knowledge discovery and data mining, ACM, KDD ’04, pp 99–108

Dekel O, Shamir O (2009) Good learners for evil teachers. In: Proceedings of the 26th annual international conference on machine learning, ACM, pp 233–240

Demontis A, Biggio B, Fumera G, Giacinto G, Roli F (2017) Infinity-norm support vector machines against adversarial label contamination. In: ITASEC, pp 106–115

Dries A, Rückert U (2009) Adaptive concept drift detection. Stat Anal Data Mining 2(5–6):311–327

Fefilatyev S, Shreve M, Kramer K, Hall L, Goldgof D, Kasturi R, Daly K, Remsen A, Bunke H (2012) Label-noise reduction with support vector machines. In: 21st international conference on pattern recognition (ICPR), IEEE, pp 3504–3508

Fierrez-Aguilar J, Ortega-Garcia J, Gonzalez-Rodriguez J, Bigun J (2005) Discriminative multimodal biometric authentication based on quality measures. Pattern Recognition 38(5):777–779

He ZM (2012) Cost-sensitive steganalysis with stochastic sensitivity and cost sensitive training error. Int Conf Mach Learn Cybernet 1:349–354

He ZM, Chan PPK, Yeung DS, Pedrycz W, Ng WWY (2015) Quantification of side-channel information leaks based on data complexity measures for web browsing. Int J Mach Learn Cybernet 6(4):607–619

Ho TK (2002) A data complexity analysis of comparative advantages of decision forest constructors. Pattern Anal Appl 5(2):102–112

Ho TK, Basu M (2002) Complexity measures of supervised classification problems. IEEE Trans Pattern Anal Mach Intell 24(3):289–300

Huang L, Joseph AD, Nelson B, Rubinstein BI, Tygar J (2011) Adversarial machine learning. In: Proceedings of the 4th ACM workshop on Security and artificial intelligence, ACM, pp 43–58

Kantchelian A, Tygar J, Joseph A (2016) Evasion and hardening of tree ensemble classifiers. In: International conference on machine learning, pp 2387–2396

Lakhina A, Crovella M, Diot C (2004) Diagnosing network-wide traffic anomalies. ACM SIGCOMM Comput Commun Rev ACM 34:219–230

Li B, Wang Y, Singh A, Vorobeychik Y (2016) Data poisoning attacks on factorization-based collaborative filtering. In: Advances in neural information processing systems, pp 1885–1893

Lowd D, Meek C (2005) Adversarial learning. In: Proceedings of the eleventh ACM SIGKDD international conference on knowledge discovery in data mining, ACM, New York, NY, USA, KDD ’05, pp 641–647

Luengo J, Herrera F (2012) Shared domains of competence of approximate learning models using measures of separability of classes. Inform Sci 185(1):43–65

Madani P, Vlajic N (2018) Robustness of deep autoencoder in intrusion detection under adversarial contamination. In: Proceedings of the 5th annual symposium and Bootcamp on hot topics in the science of security, ACM, p 1

Mao K (2002) Rbf neural network center selection based on fisher ratio class separability measure. IEEE Trans Neural Netw 13(5):1211–1217

Nelson B (2010) Behavior of machine learning algorithms in adversarial environments. PhD thesis, EECS Department, University of California, Berkeley

Nelson B, Barreno M, Chi FJ, Joseph AD, Rubinstein BI, Saini U, Sutton CA, Tygar JD, Xia K (2008) Exploiting machine learning to subvert your spam filter. LEET 8:1–9

Papernot N, McDaniel P, Jha S, Fredrikson M, Celik ZB, Swami A (2016) The limitations of deep learning in adversarial settings. In: IEEE European symposium on security and privacy, IEEE, pp 372–387

Papernot N, McDaniel P, Goodfellow I, Jha S, Celik ZB, Swami A (2017) Practical black-box attacks against machine learning. In: Proceedings of the 2017 ACM on Asia conference on computer and communications security, pp 506–519

Pekalska E, Paclik P, Duin RPW (2002) A generalized kernel approach to dissimilarity-based classification. J Mach Learn Res 2:175–211

Ramachandran A, Feamster N, Vempala S (2007) Filtering spam with behavioral blacklisting. In: Proceedings of the 14th ACM conference on computer and communications security, ACM, pp 342–351

Roli F, Biggio B, Fumera G (2013) Pattern recognition systems under attack. Progress in pattern recognition, image analysis, computer vision, and applications. Springer, Berlin, pp 1–8

Rubinstein BI, Nelson B, Huang L, Joseph AD, Lau Sh, Rao S, Taft N, Tygar J (2009) Antidote: understanding and defending against poisoning of anomaly detectors. In: Proceedings of the 9th ACM SIGCOMM conference on internet measurement conference, ACM, pp 1–14

Sáez JA, Luengo J, Herrera F (2013) Predicting noise filtering efficacy with data complexity measures for nearest neighbor classification. Pattern Recognition 46(1):355–364

Sahami M, Dumais S, Heckerman D, Horvitz E (1998) A bayesian approach to filtering junk e-mail. In: Learning for text categorization: papers from the 1998 workshop, vol 62, pp 98–105

Sánchez JS, Mollineda RA, Sotoca JM (2007) An analysis of how training data complexity affects the nearest neighbor classifiers. Pattern Anal Appl 10(3):189–201

Servedio RA (2003) Smooth boosting and learning with malicious noise. J Mach Learn Res 4:633–648

Smith FW (1968) Pattern classifier design by linear programming. IEEE Trans Comput 100(4):367–372

Smutz C, Stavrou A (2012) Malicious pdf detection using metadata and structural features. In: Proceedings of the 28th annual computer security applications conference, ACM, pp 239–248

Soule A, Salamatian K, Taft N (2005) Combining filtering and statistical methods for anomaly detection. In: Proceedings of the 5th ACM SIGCOMM conference on internet measurement, USENIX Association

Su J, Vargas DV, Sakurai K (2019) One pixel attack for fooling deep neural networks. IEEE Transactions on Evolutionary Computation

Tuv E, Borisov A, Runger G, Torkkola K (2009) Feature selection with ensembles, artificial variables, and redundancy elimination. J Mach Learn Res 10(Jul):1341–1366

Wang Y, Chaudhuri K (2018) Data poisoning attacks against online learning. arXiv preprint arXiv:1808.08994

Whitehill J, Wu Tf, Bergsma J, Movellan JR, Ruvolo PL (2009) Whose vote should count more: Optimal integration of labels from labelers of unknown expertise. In: Advances in neural information processing systems, pp 2035–2043

Xiao H, Xiao H, Eckert C (2012) Adversarial label flips attack on support vector machines. 20th European Conference on artificial intelligence (ECAI). Montpellier, France, pp 870–875

Xiao H, Biggio B, Brown G, Fumera G, Eckert C, Roli F (2015a) Is feature selection secure against training data poisoning? In: Proceedings of The 32nd international conference on machine learning (ICML’15), pp 1689–1698

Xiao H, Biggio B, Nelson B, Xiao H, Eckert C, Roli F (2015b) Support vector machines under adversarial label contamination. Neurocomputing 160:53–62

Zhang F, Chan PP, Tang TQ (2015) L-gem based robust learning against poisoning attack. In: 2015 International conference on wavelet analysis and pattern recognition (ICWAPR), IEEE, pp 175–178

Zhang F, Chan P, Biggio B, Yeung D, Roli F (2016) Adversarial feature selection against evasion attacks. IEEE Trans Cybernet 46:766–777

Zügner D, Akbarnejad A, Günnemann S (2018) Adversarial attacks on neural networks for graph data. In: Proceedings of the 24th ACM SIGKDD international conference on knowledge discovery & data mining, ACM, pp 2847–2856

Acknowledgements

This work is supported by the Natural Science Foundation of Guangdong Province, China (No. 2018A030313203), the Fundamental Research Funds for the Central Universities, SCUT (No. 2018ZD32), the National Natural Science Foundation of China (No. 61802061), and the Project of the Department of Education of Guangdong Province (No. 2017KQNCX216).

Dataset used in the experiments

A total of 70 2-class and multi-class datasets covering a wide variety of problems were selected from the UCI Machine Learning Repository [3] and the KEEL-dataset Repository [2]. They are as follows: ala, australian, banana shape, banana subset, card, circular, diabetes, fourclass, german numer, hepatitis, highleyman, ionosphere, krvskp, liver-disorders, pima, promoters, sonar, splice, tic-tac-toe, two spirals, vote, balance, dna, gene, horse, iris, post-op, wine, hypo, lymph, vehicle, anneal, page-blocks, autos, dermatology, glass, satimage, segment, zoo, ecoli, pendigits, poker, car, mushroom, spambase, bands, yeast, cleveland, contraceptive, wisconsin, dermatology, ecoli, wdbc, haberman, hayes-roth, hepatitis, housevotes, titanic, mammographic, marketing, monk-2, newthyroid, texture, optdigits, page-blocks, penbased, phoneme, tae, ring, and saheart.

Cite this article

Chan, P.P.K., He, Z., Hu, X. et al. Causative label flip attack detection with data complexity measures. Int. J. Mach. Learn. & Cyber. 12, 103–116 (2021). https://doi.org/10.1007/s13042-020-01159-7

DOI: https://doi.org/10.1007/s13042-020-01159-7