Abstract

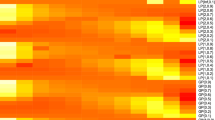

The theory of rough sets has two formulations: a conceptual formulation and a computational formulation. Class-specific and classification-based attribute reducts are two crucial notions in three-way probabilistic rough set models. In the conceptual formulation, the two types of attribute reducts are defined by preserving the probabilistic positive or negative region of a decision class and of a decision classification, respectively. However, because probabilistic positive and negative regions are non-monotonic with respect to the set inclusion of attributes, few studies have addressed the computational formulations of the two types of attribute reducts in three-way probabilistic rough set models. In this paper, we examine these computational formulations based on fuzzy entropies. We construct monotonic measures based on fuzzy entropies, from which the computational formulations of the two types of attribute reducts can be obtained. On this basis, we develop algorithms for finding the two types of attribute reducts based on the addition-deletion method or the deletion method. Finally, the experimental results verify the monotonicity of the proposed measures with respect to the set inclusion of attributes. They also show that, for a particular decision class, class-specific attribute reducts provide a more effective way of attribute reduction than classification-based attribute reducts.
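The region-preservation (conceptual) formulation can be made concrete with a minimal Python sketch. This is not the paper's algorithm, and it rests on several assumptions. A decision table is a list of attribute-value tuples with a parallel list of decision labels. The conditional probability is \(\mathrm{Pr}(D_j | [x]_B) = |[x]_B \cap D_j| / |[x]_B|\), with thresholds \(0 \le \beta_j < \alpha_j \le 1\). A class-specific reduct only has to preserve \(\mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|C)\). The helper names `partition`, `regions` and `deletion_reduct` are hypothetical. The proof of Theorem 6 in the appendix indicates that, for \(B \subseteq C\), this preservation holds exactly when \(\mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|C)\) is a union of \(B\)-equivalence classes. That condition is monotonic in \(B\), so a single deletion pass should already return a minimal subset.

```python
from collections import defaultdict
from fractions import Fraction


def partition(table, attrs):
    """Equivalence classes OB/B: object indices grouped by their values on attrs."""
    blocks = defaultdict(list)
    for i, row in enumerate(table):
        blocks[tuple(row[a] for a in attrs)].append(i)
    return list(blocks.values())


def regions(table, attrs, labels, cls, alpha, beta):
    """(POS, BND, NEG) of the decision class `cls` w.r.t. attrs, for 0 <= beta < alpha <= 1."""
    pos, bnd, neg = set(), set(), set()
    for block in partition(table, attrs):
        # Pr(D_j | [x]_B) as an exact fraction
        pr = Fraction(sum(labels[i] == cls for i in block), len(block))
        if pr >= alpha:
            pos.update(block)
        elif pr <= beta:
            neg.update(block)
        else:
            bnd.update(block)
    return pos, bnd, neg


def deletion_reduct(table, attrs, labels, cls, alpha, beta):
    """Deletion method: drop each attribute whose removal keeps POS(D_j | B) = POS(D_j | C).

    Assumption: the class-specific reduct only has to preserve the positive region.
    """
    target = regions(table, attrs, labels, cls, alpha, beta)[0]
    reduct = list(attrs)
    for a in attrs:
        candidate = [b for b in reduct if b != a]
        if regions(table, candidate, labels, cls, alpha, beta)[0] == target:
            reduct = candidate
    return reduct


table = [(0, 0, 0), (0, 0, 1), (0, 1, 0), (1, 1, 1), (1, 0, 1), (1, 0, 0)]
labels = [1, 1, 0, 0, 1, 1]  # toy data: objects whose attribute 1 equals 0 form D_1
print(deletion_reduct(table, [0, 1, 2], labels, 1, 0.7, 0.3))  # [1]
```

On this toy table, attribute 2 alone leaves every object in the boundary region (both of its classes have probability 2/3 < 0.7). Attribute 1 alone reproduces the full positive region.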

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant No. 61502419), the China Scholarship Council (No. 201608330146), the Key Industrial Technology Development Project of Chongqing Development and Reform Commission (Grant No. 2018148208), and the Innovation and Entrepreneurship Demonstration Team of Yingcai Program of Chongqing (Grant No. CQYC201903167). The author thanks the reviewers for their constructive comments.

Appendix A Proofs

Proof of Theorem 1

Since \(P \supseteq Q\), \(OB / P ~\preceq ~ OB / Q\). Hence OB/Q can be obtained from OB/P by finitely many steps, each of which merges two equivalence classes, and it suffices to consider a single such step. For simplicity and without loss of generality, suppose that \(OB / P = \{P_1, \ldots , P_i, \ldots , P_k, \ldots , P_t\}\) and \(OB / Q = \{P_1, \ldots , P_{i-1}, P_{i+1}, \ldots , P_{k-1}, P_{k+1}, \ldots , P_t, P_i \cup P_k\}\). That is, the partition OB/Q is obtained by merging the equivalence classes \(P_i\) and \(P_k\) of the partition OB/P into \(P_i \cup P_k\).

Let \(Q_i = P_i \cup P_k\). Suppose that \(Q_i \cap D_j \ne \emptyset\). We have
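The display that followed "We have" is missing from this copy. The reconstruction below is plausible but assumed, not the original. It takes \(\mu _{F_{\widetilde{{D_j}}}^R}(x) = |[x]_R \cap D_j| / |[x]_R|\) for \(R \in \{P, Q\}\), as the case analysis implies. Every object outside \(Q_i\) keeps the same membership under P and Q, while for \(x \in Q_i\), \(y \in P_i\), \(z \in P_k\):

```latex
\mu_{F_{\widetilde{D_j}}^Q}(x)
  = \frac{|Q_i \cap D_j|}{|Q_i|}
  = \frac{|P_i \cap D_j| + |P_k \cap D_j|}{|P_i| + |P_k|}
  = \frac{|P_i|}{|Q_i|}\,\mu_{F_{\widetilde{D_j}}^P}(y)
  + \frac{|P_k|}{|Q_i|}\,\mu_{F_{\widetilde{D_j}}^P}(z).
```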

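Let \(y \in P_i\) and \(z \in P_k\). The common value \(\mu _{F_{\widetilde{{D_j}}}^Q}(y) = \mu _{F_{\widetilde{{D_j}}}^Q}(z) = \frac{|Q_i \cap D_j|}{|Q_i|}\) is a weighted average of \(\mu _{F_{\widetilde{{D_j}}}^P}(y) = \frac{|P_i \cap D_j|}{|P_i|}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) = \frac{|P_k \cap D_j|}{|P_k|}\). It therefore exceeds \(\frac{1}{2}\) only if at least one of them does, and it is at most \(\frac{1}{2}\) only if at least one of them is. Hence there are exactly four cases.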

Case (1): \(\mu _{F_{\widetilde{{D_j}}}^Q}(y) = \mu _{F_{\widetilde{{D_j}}}^Q}(z) > \frac{1}{2}\), \(\mu _{F_{\widetilde{{D_j}}}^P}(y) > \frac{1}{2}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) > \frac{1}{2}\).

We have \(\mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(y) = 1\), \(\mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(z) = 1\), \(\mu _{{F_{\widetilde{{D_j}}}^P}_{near}}(y) = 1\) and \(\mu _{{F_{\widetilde{{D_j}}}^P}_{near}}(z) = 1\). Thus, \(|P_i \cap D_j| - \sum \limits _{y \in P_i} \mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(y) \le 0\) and \(|P_k \cap D_j| - \sum \limits _{z \in P_k} \mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(z) \le 0\). Hence, one has
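The comparison omitted here can be sketched under an assumption: \(e_{\mathrm{K}_1}\) is Kaufmann's linear index of fuzziness, a positive multiple of \(\sum_{x \in OB} |\mu_F(x) - \mu_{F_{near}}(x)|\). Under that assumption only the objects of \(Q_i\) need to be compared. Below, \(\mu^R\) and \(\mu^R_{near}\) abbreviate \(\mu _{F_{\widetilde{{D_j}}}^R}\) and \(\mu _{{F_{\widetilde{{D_j}}}^R}_{near}}\) for \(R \in \{P, Q\}\). The second equality uses the fact that both differences above are non-positive.

```latex
\begin{aligned}
\sum_{x \in Q_i} \bigl|\mu^Q(x) - \mu^Q_{near}(x)\bigr|
  &= \bigl|\,|Q_i \cap D_j| - |Q_i|\,\bigr| \\
  &= \bigl|\,|P_i \cap D_j| - |P_i|\,\bigr| + \bigl|\,|P_k \cap D_j| - |P_k|\,\bigr| \\
  &= \sum_{y \in P_i} \bigl|\mu^P(y) - \mu^P_{near}(y)\bigr|
   + \sum_{z \in P_k} \bigl|\mu^P(z) - \mu^P_{near}(z)\bigr|.
\end{aligned}
```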

As a result, \(e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^Q) = e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^P)\).

Case (2): \(\mu _{F_{\widetilde{{D_j}}}^Q}(y) = \mu _{F_{\widetilde{{D_j}}}^Q}(z) > \frac{1}{2}\), \(\mu _{F_{\widetilde{{D_j}}}^P}(y) > \frac{1}{2}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) \le \frac{1}{2}\) (or \(\mu _{F_{\widetilde{{D_j}}}^P}(y) \le \frac{1}{2}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) > \frac{1}{2}\)).

The other subcase follows by exchanging the roles of \(y\) and \(z\) (and of \(P_i\) and \(P_k\)), so in the following we only prove the case \(\mu _{F_{\widetilde{{D_j}}}^Q}(y) = \mu _{F_{\widetilde{{D_j}}}^Q}(z) > \frac{1}{2}\), \(\mu _{F_{\widetilde{{D_j}}}^P}(y) > \frac{1}{2}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) \le \frac{1}{2}\).

We have \(\mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(y) = 1\), \(\mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(z) = 1\), \(\mu _{{F_{\widetilde{{D_j}}}^P}_{near}}(y) = 1\) and \(\mu _{{F_{\widetilde{{D_j}}}^P}_{near}}(z) = 0\). Since \(\mu _{F_{\widetilde{{D_j}}}^P}(z) \le \frac{1}{2}\), we have \(\Big ||P_k \cap D_j| - |P_k|\Big | \ge \Big ||P_k \cap D_j|\Big |\). Hence, one has
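A sketch of the omitted display follows. It assumes \(e_{\mathrm{K}_1}\) is a positive multiple of \(\sum_{x \in OB}|\mu_F(x) - \mu_{F_{near}}(x)\) (Kaufmann's linear index), so that only the objects of \(Q_i\) matter. The symbols \(\mu^R\) and \(\mu^R_{near}\) abbreviate \(\mu _{F_{\widetilde{{D_j}}}^R}\) and \(\mu _{{F_{\widetilde{{D_j}}}^R}_{near}}\). The inequality is the one just derived for \(P_k\).

```latex
\begin{aligned}
\sum_{x \in Q_i} \bigl|\mu^Q(x) - \mu^Q_{near}(x)\bigr|
  &= \bigl(|P_i| - |P_i \cap D_j|\bigr) + \bigl(|P_k| - |P_k \cap D_j|\bigr) \\
  &\ge \bigl(|P_i| - |P_i \cap D_j|\bigr) + |P_k \cap D_j| \\
  &= \sum_{y \in P_i} \bigl|\mu^P(y) - \mu^P_{near}(y)\bigr|
   + \sum_{z \in P_k} \bigl|\mu^P(z) - \mu^P_{near}(z)\bigr|.
\end{aligned}
```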

As a result, \(e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^Q) \ge e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^P)\).

Case (3): \(\mu _{F_{\widetilde{{D_j}}}^Q}(y) = \mu _{F_{\widetilde{{D_j}}}^Q}(z) \le \frac{1}{2}\), \(\mu _{F_{\widetilde{{D_j}}}^P}(y) \le \frac{1}{2}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) \le \frac{1}{2}\).

We have \(\mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(y) = 0\), \(\mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(z) = 0\), \(\mu _{{F_{\widetilde{{D_j}}}^P}_{near}}(y) = 0\) and \(\mu _{{F_{\widetilde{{D_j}}}^P}_{near}}(z) = 0\). Thus,
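The omitted display can be sketched as follows. This assumes \(e_{\mathrm{K}_1}\) is a positive multiple of \(\sum_{x \in OB}|\mu_F(x) - \mu_{F_{near}}(x)|\) (Kaufmann's linear index). Here \(\mu^R\) and \(\mu^R_{near}\) abbreviate \(\mu _{F_{\widetilde{{D_j}}}^R}\) and \(\mu _{{F_{\widetilde{{D_j}}}^R}_{near}}\). With all nearest values equal to 0, every term reduces to a membership value:

```latex
\sum_{x \in Q_i} \bigl|\mu^Q(x) - \mu^Q_{near}(x)\bigr|
  = |Q_i \cap D_j|
  = |P_i \cap D_j| + |P_k \cap D_j|
  = \sum_{y \in P_i} \bigl|\mu^P(y) - \mu^P_{near}(y)\bigr|
  + \sum_{z \in P_k} \bigl|\mu^P(z) - \mu^P_{near}(z)\bigr|.
```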

As a result, \(e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^Q) = e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^P)\).

Case (4): \(\mu _{F_{\widetilde{{D_j}}}^Q}(y) = \mu _{F_{\widetilde{{D_j}}}^Q}(z) \le \frac{1}{2}\), \(\mu _{F_{\widetilde{{D_j}}}^P}(y) \le \frac{1}{2}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) > \frac{1}{2}\) (or \(\mu _{F_{\widetilde{{D_j}}}^P}(y) > \frac{1}{2}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) \le \frac{1}{2}\)).

The other subcase follows by exchanging the roles of \(y\) and \(z\) (and of \(P_i\) and \(P_k\)), so in what follows we only prove the case \(\mu _{F_{\widetilde{{D_j}}}^Q}(y) = \mu _{F_{\widetilde{{D_j}}}^Q}(z) \le \frac{1}{2}\), \(\mu _{F_{\widetilde{{D_j}}}^P}(y) \le \frac{1}{2}\) and \(\mu _{F_{\widetilde{{D_j}}}^P}(z) > \frac{1}{2}\).

We have \(\mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(y) = 0\), \(\mu _{{F_{\widetilde{{D_j}}}^Q}_{near}}(z) = 0\), \(\mu _{{F_{\widetilde{{D_j}}}^P}_{near}}(y) = 0\) and \(\mu _{{F_{\widetilde{{D_j}}}^P}_{near}}(z) = 1\). Since \(\mu _{F_{\widetilde{{D_j}}}^P}(z) > \frac{1}{2}\), \(\Big ||P_k \cap D_j|\Big | > \Big ||P_k \cap D_j| - |P_k|\Big |\). Thus,
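Under the same kind of assumption (\(e_{\mathrm{K}_1}\) a positive multiple of \(\sum_{x \in OB}|\mu_F(x) - \mu_{F_{near}}(x)|\), with \(\mu^R\), \(\mu^R_{near}\) abbreviating \(\mu _{F_{\widetilde{{D_j}}}^R}\), \(\mu _{{F_{\widetilde{{D_j}}}^R}_{near}}\)), the omitted display can be sketched as follows. The strict inequality is the one just derived for \(P_k\).

```latex
\begin{aligned}
\sum_{x \in Q_i} \bigl|\mu^Q(x) - \mu^Q_{near}(x)\bigr|
  &= |P_i \cap D_j| + |P_k \cap D_j| \\
  &> |P_i \cap D_j| + \bigl(|P_k| - |P_k \cap D_j|\bigr) \\
  &= \sum_{y \in P_i} \bigl|\mu^P(y) - \mu^P_{near}(y)\bigr|
   + \sum_{z \in P_k} \bigl|\mu^P(z) - \mu^P_{near}(z)\bigr|.
\end{aligned}
```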

As a result, \(e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^Q) > e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^P)\).

On the other hand, suppose that \(Q_i \cap D_j = \emptyset\). We have
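A sketch of the omitted display, assuming \(\mu _{F_{\widetilde{{D_j}}}^R}(x) = |[x]_R \cap D_j| / |[x]_R|\) and that \(e_{\mathrm{K}_1}\) is a positive multiple of \(\sum_{x \in OB}|\mu_F(x) - \mu_{F_{near}}(x)|\). When \(Q_i \cap D_j = \emptyset\), all memberships and nearest values on \(Q_i\) are 0, so for \(x \in Q_i\), \(y \in P_i\), \(z \in P_k\):

```latex
\begin{aligned}
&\mu_{F_{\widetilde{D_j}}^Q}(x) = \mu_{F_{\widetilde{D_j}}^P}(y) = \mu_{F_{\widetilde{D_j}}^P}(z) = 0,
\qquad
\mu_{{F_{\widetilde{D_j}}^Q}_{near}}(x) = \mu_{{F_{\widetilde{D_j}}^P}_{near}}(y) = \mu_{{F_{\widetilde{D_j}}^P}_{near}}(z) = 0, \\
&\sum_{x \in Q_i} \bigl|\mu_{F_{\widetilde{D_j}}^Q}(x) - \mu_{{F_{\widetilde{D_j}}^Q}_{near}}(x)\bigr|
  = 0
  = \sum_{y \in P_i} \bigl|\mu_{F_{\widetilde{D_j}}^P}(y) - \mu_{{F_{\widetilde{D_j}}^P}_{near}}(y)\bigr|
  + \sum_{z \in P_k} \bigl|\mu_{F_{\widetilde{D_j}}^P}(z) - \mu_{{F_{\widetilde{D_j}}^P}_{near}}(z)\bigr|.
\end{aligned}
```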

Hence, \(e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^Q) = e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^P)\).

In conclusion, combining two equivalence classes either increases or preserves the fuzzy entropy \(e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^P)\). Hence, we have \(P \supseteq Q \Rightarrow e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^P) \le e_{\mathrm{K}_1}(F_{\widetilde{{D_j}}}^Q)\). \(\square\)
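Theorem 1 can be spot-checked numerically with the Python sketch below. It assumes that \(e_{\mathrm{K}_1}\) is Kaufmann's linear index \(\frac{2}{|OB|}\sum_{x \in OB}|\mu(x) - \mu_{near}(x)|\) of the rough membership function \(\mu(x) = |[x]_P \cap D_j| / |[x]_P|\), with \(\mu_{near}(x) = 1\) iff \(\mu(x) > \frac{1}{2}\). The normalizing constant does not affect the comparison. The helper names are hypothetical. The check compares every pair of nested attribute subsets of a small table.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import combinations


def partition(table, attrs):
    """Equivalence classes OB/B: object indices grouped by their values on attrs."""
    blocks = defaultdict(list)
    for i, row in enumerate(table):
        blocks[tuple(row[a] for a in attrs)].append(i)
    return list(blocks.values())


def e_k1(blocks, target):
    """Assumed form of e_K1: (2/|OB|) * sum_x |mu(x) - mu_near(x)|, where
    mu(x) = |[x] ∩ D_j| / |[x]| and mu_near(x) = 1 iff mu(x) > 1/2."""
    n = sum(len(block) for block in blocks)
    total = Fraction(0)
    for block in blocks:
        mu = Fraction(sum(x in target for x in block), len(block))
        near = 1 if mu > Fraction(1, 2) else 0
        # every object of the block has the same membership, hence the same term
        total += len(block) * abs(mu - near)
    return 2 * total / n


def is_monotone(table, target):
    """Check P ⊇ Q  =>  e_K1 under OB/P <= e_K1 under OB/Q, over all attribute subsets."""
    m = len(table[0])
    subsets = [set(s) for r in range(m + 1) for s in combinations(range(m), r)]
    return all(
        e_k1(partition(table, p), target) <= e_k1(partition(table, q), target)
        for p in subsets
        for q in subsets
        if q <= p
    )


# Merging {0, 1} (mu = 1) with {2, 3, 4, 5} (mu = 1/4) for D_j = {0, 1, 2}
print(e_k1([[0, 1], [2, 3, 4, 5]], {0, 1, 2}), e_k1([[0, 1, 2, 3, 4, 5]], {0, 1, 2}))  # 1/3 1
```

Each block \(X\) contributes \(\min(|X \cap D_j|, |X| - |X \cap D_j|)\) to the sum. This quantity is superadditive under merging, which is the compact form of the four cases established above.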

Proof of Theorem 6

Since \(B \subseteq C\), \(\xi ({[x]_B}) = \{ {[y]_C}:{[y]_C} \subseteq {[x]_B}\}\) is a partition of \([x]_B\).

(1) “\(\Rightarrow\)” Since \({\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{\mathrm{| }}B) = {\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{\mathrm{| }}C)\), we have
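The display that should follow is missing from this copy, and the reconstruction below is a sketch under stated assumptions. It takes the membership \(|[x]_B \cap \mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|C)| / |[x]_B|\), as in the \(\Leftarrow\) part. It also assumes that \(e_{\varDelta}\) vanishes on crisp sets (the first axiom of De Luca and Termini). Because \(\mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|B)\) is a union of \(B\)-equivalence classes, for every \(x \in OB\):

```latex
\mu^B_{\widetilde{\mathrm{POS}}_{(\alpha_j,\beta_j)}(D_j|C)}(x)
  = \frac{|[x]_B \cap \mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|C)|}{|[x]_B|}
  = \frac{|[x]_B \cap \mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|B)|}{|[x]_B|}
  \in \{0, 1\},
\qquad\text{hence}\qquad
e_{\varDelta}\bigl(F^B_{\widetilde{\mathrm{POS}}_{(\alpha_j,\beta_j)}(D_j|C)}\bigr) = 0 .
```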

“\(\Leftarrow\)” Suppose that \(x \in {\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{\mathrm{| }}C)\). Since \(e_{\varDelta }(F_{\widetilde{{\mathrm{POS}}}_{(\alpha _j, \beta _j)}(D_j|C)}^B) = 0\), we have \(\mu _{\widetilde{{\mathrm{POS}}}_{(\alpha _j, \beta _j)}(D_j|C)}^B(x) = 0\) or \(\mu _{\widetilde{{\mathrm{POS}}}_{(\alpha _j, \beta _j)}(D_j|C)}^B(x) = 1\). That is, \({{ | {{[x]}_B} \cap {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C) | } \over { | {{[x]}_B} | }} = 0\) or \({{ | {{[x]}_B} \cap {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C) | } \over { | {{[x]}_B} | }} = 1\). Therefore, \([x]_B \cap {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C) = \emptyset\) or \([x]_B \subseteq {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C)\).

If \([x]_B \cap {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C) = \emptyset\), then \(x \notin {\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{\mathrm{| }}C)\), which contradicts the assumption \(x \in {\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{\mathrm{| }}C)\). Hence, \([x]_B \subseteq {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C)\). Since \({[x]_B} = \cup \{ {[y]_C}:{[y]_C} \in \xi ({[x]_B})\}\), we obtain \({[y]_C} \subseteq {[x]_B} \subseteq {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C)\) for all \({[y]_C} \in \xi ({[x]_B})\). That is, \({\mathrm{Pr}}({D_j} | {[y]_C}) \ge \alpha _j\) for all \({[y]_C} \in \xi ({[x]_B})\). Thus,
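The omitted step is the weighted-average identity over the partition \(\xi([x]_B)\). Here is a sketch, assuming \(\mathrm{Pr}(D_j | X) = |X \cap D_j| / |X|\):

```latex
\mathrm{Pr}(D_j \mid [x]_B)
  = \frac{|[x]_B \cap D_j|}{|[x]_B|}
  = \sum_{[y]_C \in \xi([x]_B)} \frac{|[y]_C|}{|[x]_B|}\,\mathrm{Pr}(D_j \mid [y]_C)
  \ge \alpha_j \sum_{[y]_C \in \xi([x]_B)} \frac{|[y]_C|}{|[x]_B|}
  = \alpha_j .
```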

As a result, \(x \in {\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{\mathrm{| }}B)\).

On the other hand, if \(x \in {\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{\mathrm{| }}B)\), then \({[x]_B} \subseteq {\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{\mathrm{| }}B)\).

Since \(e_{\varDelta }(F_{\widetilde{{\mathrm{POS}}}_{(\alpha _j, \beta _j)}(D_j|C)}^B) = 0\), either \([x]_B \cap {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C) = \emptyset\) or \([x]_B \subseteq {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C)\). If \([x]_B \cap {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C) = \emptyset\), then \({[y]_C} \cap {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C) = \emptyset\) for all \({[y]_C} \in \xi ({[x]_B})\). That is, \({\mathrm{Pr}}({D_j} | {[y]_C}) < \alpha _j\) for all \({[y]_C} \in \xi ({[x]_B})\).

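Since \(\mathrm{Pr}(D_j | [x]_B) = \sum _{[y]_C \in \xi ([x]_B)} \frac{|[y]_C|}{|[x]_B|} \mathrm{Pr}(D_j | [y]_C)\) is a weighted average of these probabilities, \(\mathrm{Pr}(D_j | [x]_B) < \alpha _j\). As a result, \([x]_B \nsubseteq {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|B)\), which contradicts \([x]_B \subseteq {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|B)\).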

Hence, \([x]_B \subseteq {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C)\), namely, \(x \in {\mathrm{POS}}_{(\alpha _j, \beta _j)}(D_j|C)\).

Therefore, we conclude that \({\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{{| }}B) = {\mathrm{PO}}{{\mathrm{S}}_{({\alpha _j},{\beta _j})}}({D_j}{{| }}C)\).

The proof of (2) is similar to that of (1). \(\square\)
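The equivalence proved in part (1) can also be checked by brute force. The Python sketch below is an illustration under assumptions, not the paper's algorithm. It uses a Kaufmann-style linear index as the fuzzy entropy \(e_{\varDelta}\); any fuzzy entropy that vanishes exactly on crisp sets would serve. It uses the membership \(|[x]_B \cap \mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|C)| / |[x]_B|\), and all helper names are hypothetical. For every \(B \subseteq C\), the sketch verifies that \(\mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|B) = \mathrm{POS}_{(\alpha_j,\beta_j)}(D_j|C)\) exactly when that entropy is zero.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import combinations


def partition(table, attrs):
    """Equivalence classes OB/B: object indices grouped by their values on attrs."""
    blocks = defaultdict(list)
    for i, row in enumerate(table):
        blocks[tuple(row[a] for a in attrs)].append(i)
    return list(blocks.values())


def pos_region(table, attrs, labels, cls, alpha):
    """POS_(alpha, beta)(D_j | attrs); beta does not affect the positive region."""
    return {
        x
        for block in partition(table, attrs)
        if Fraction(sum(labels[i] == cls for i in block), len(block)) >= alpha
        for x in block
    }


def region_entropy(table, attrs, region):
    """Kaufmann-style linear index of mu(x) = |[x]_B ∩ region| / |[x]_B|.

    A block with d objects in `region` contributes min(d, |block| - d), which equals
    |block| * |mu - mu_near|; the result is 0 iff region is a union of B-classes.
    """
    total = 0
    for block in partition(table, attrs):
        d = sum(x in region for x in block)
        total += min(d, len(block) - d)
    return Fraction(2 * total, len(table))


def theorem6_holds(table, labels, cls, alpha):
    """For every B ⊆ C: POS(D_j | B) = POS(D_j | C) iff the entropy above is 0."""
    full = list(range(len(table[0])))
    pos_c = pos_region(table, full, labels, cls, alpha)
    for r in range(len(full) + 1):
        for b in combinations(full, r):
            preserved = pos_region(table, b, labels, cls, alpha) == pos_c
            if preserved != (region_entropy(table, b, pos_c) == 0):
                return False
    return True


table = [(0, 0, 0), (0, 0, 1), (0, 1, 0), (1, 1, 1), (1, 0, 1), (1, 0, 0)]
labels = [1, 1, 0, 0, 1, 1]
print(theorem6_holds(table, labels, 1, Fraction(2, 3)))  # True
```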

Cite this article

Ma, XA. Fuzzy entropies for class-specific and classification-based attribute reducts in three-way probabilistic rough set models. Int. J. Mach. Learn. & Cyber. 12, 433–457 (2021). https://doi.org/10.1007/s13042-020-01179-3

DOI: https://doi.org/10.1007/s13042-020-01179-3