Abstract

In recent years, low-rank matrix recovery algorithms have become a research hotspot, and many optimization models based on the nuclear norm have been proposed. However, the nuclear norm is not a good approximation of the rank function. This paper proposes a matrix completion model and a low-rank sparse decomposition model based on the truncated Schatten p-norm, which combines the Schatten p-norm with the truncated nuclear norm and makes the models more flexible. To solve these models, a function expansion method is first used to transform the non-convex optimization models into convex ones. Then, a two-step iterative algorithm based on the alternating direction method of multipliers (ADMM) is employed to solve them. Further, the convergence of the proposed algorithm is proved mathematically. Comparisons with existing methods on synthetic data and real images verify the superiority of the proposed method.
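The two ingredients named above, the truncated Schatten p-norm and its linearization by function expansion, can be illustrated with a short NumPy sketch. It assumes the penalty is the sum of the p-th powers of all but the r largest singular values, and that the expansion is the first-order Taylor expansion of \(t \mapsto t^{p}\) at the current singular values, which turns the penalty into a weighted nuclear norm. The function names and the smoothing constant `eps` are hypothetical, not taken from the paper.

```python
import numpy as np


def truncated_schatten_p(X, r, p):
    """Sum of sigma_i^p over all singular values except the r largest."""
    sigma = np.linalg.svd(X, compute_uv=False)  # returned in descending order
    return float(np.sum(sigma[r:] ** p))


def linearized_weights(sigma, r, p, eps=0.0):
    """First-order (tangent) weights of t -> t^p at the current singular values.

    Around sigma_i, t^p ~ sigma_i^p + p * sigma_i^(p - 1) * (t - sigma_i), so the
    penalty becomes sum_i w_i * t_i plus a constant. eps > 0 avoids dividing by
    zero when some sigma_i = 0 and p < 1.
    """
    sigma = np.asarray(sigma, dtype=float)
    w = p * (sigma + eps) ** (p - 1.0)
    w[:r] = 0.0  # the r largest singular values are left unpenalized
    return w


# Example: X = diag(3, 2, 1), truncation r = 1, p = 0.5.
X = np.diag([3.0, 2.0, 1.0])
print(truncated_schatten_p(X, r=1, p=0.5))  # approximately sqrt(2) + 1
print(linearized_weights([3.0, 2.0, 1.0], r=1, p=0.5))
```

Because \(t \mapsto t^{p}\) is concave for \(0 < p \le 1\), its tangent line lies above it, so minimizing the weighted nuclear norm \(\sum_i w_i \sigma_i(X)\) minimizes an upper bound of the truncated Schatten p-penalty up to a constant. With \(p = 1\) the weights are 0 for the first r singular values and 1 otherwise, which recovers the truncated nuclear norm; with \(p < 1\), smaller singular values receive larger weights.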

References

Hu Y, Zhang D, Ye J, Li X, He X (2013) Fast and accurate matrix completion via truncated nuclear norm regularization. IEEE Trans Pattern Anal Mach Intell 35(9):2117–2130

Fan J, Chow TW (2017) Matrix completion by least-square, low-rank, and sparse self-representations. Pattern Recognit 71:290–305

Yu Y, Peng J, Yue S (2018) A new nonconvex approach to low-rank matrix completion with application to image inpainting. Multidimens Syst Signal Process 1:1–30

Qian W, Cao F (2019) Adaptive algorithms for low-rank and sparse matrix recovery with truncated nuclear norm. Int J Mach Learn Cybern 10(6):1341–1355

Ji H, Liu C, Shen Z, Xu Y (2010) Robust video denoising using low rank matrix completion. In: Proc IEEE Conf Comp Vis Pattern Recognit (CVPR), pp 1791–1798

Nie F, Wang H, Cai X, Huang H, Ding C (2012). Robust matrix completion via joint Schatten \(p\)-norm and \(l_{p}\)-norm minimization. In: Proc Int Comp Data Mining (ICDM), pp 566–574

Lu J, Liang G, Sun J, Bi J (2016) A sparse interactive model for matrix completion with side information. In: Proc Adv Neural Inf Proc Syst (NIPS), pp 4071–4079

Bennett J, Lanning S (2007) The netflix prize. Proc KDD Cup Workshop 2007:35–38

Otazo R, Candès E, Sodickson DK (2015) Low-rank plus sparse matrix decomposition for accelerated dynamic MRI with separation of background and dynamic components. Magn Res Med 73(3):1125–1136

Ravishankar S, Moore BE, Nadakuditi RR, Fessler JA (2017) Low-rank and adaptive sparse signal (LASSI) models for highly accelerated dynamic imaging. IEEE Trans Med Imaging 36(5):1116–1128

Javed S, Bouwmans T, Shah M, Jung SK (2017) Moving object detection on RGB-D videos using graph regularized spatiotemporal RPCA. In: Proc Int Conf Image Anal Proc (ICIAP), pp 230–241

Dang C, Radha H (2015) RPCA-KFE: key frame extraction for video using robust principal component analysis. IEEE Trans Image Process 24(11):3742–3753

Jin M, Li R, Jiang J, Qin B (2017) Extracting contrast-filled vessels in X-ray angiography by graduated RPCA with motion coherency constraint. Pattern Recognit 63:653–666

Werner R, Wilmsy M, Cheng B, Forkert ND (2016) Beyond cost function masking: RPCA-based non-linear registration in the context of VLSM. In: Proc Int Workshop Pattern Recognit Neuroimaging (PRNI), pp 1–4

Song Z, Cui K, Cheng G (2019) Image set face recognition based on extended low rank recovery and collaborative representation. Int J Mach Learn Cybern. https://doi.org/10.1007/s13042-019-00941-6

Fazel M (2002) Matrix rank minimization with applications. Doctoral dissertation, PhD thesis, Stanford University

Candès EJ, Recht B (2009) Exact matrix completion via convex optimization. Found Comput Math 9(6):717–772

Oymak S, Mohan K, Fazel M, Hassibi B (2011) A simplified approach to recovery conditions for low rank matrices. In: Proc Int Symp Inf Theory (ISIT), pp 2318–2322

Li G, Yan Z (2019) Reconstruction of sparse signals via neurodynamic optimization. Int J Mach Learn Cybern 10(1):15–26

Lin Z, Ganesh A, Wright J, Wu L, Chen M, Ma Y (2009) Fast convex optimization algorithms for exact recovery of a corrupted low-rank matrix. Comput Adv Multisens Adapt Process 61(6):1–18

Lin Z, Chen M, Ma Y (2010) The augmented lagrange multiplier method for exact recovery of corrupted low-rank matrices. arXiv preprint arXiv: 1009.5055

Toh KC, Yun S (2010) An accelerated proximal gradient algorithm for nuclear norm regularized linear least squares problems. Pac J Optim 6(3):615–640

Yang AY, Sastry SS, Ganesh A, Ma Y (2010) Fast \(\ell _{1}\)-minimization algorithms and an application in robust face recognition: a review. In: Proc 17th Int Conf Image Process, pp 1849–1852

Gu S, Zhang L, Zuo W, Feng X (2014) Weighted nuclear norm minimization with application to image denoising. In: Proc Conf Comp Vis Pattern Recognit, pp 2862–2869

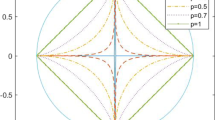

Feng L, Sun H, Sun Q, Xia G (2016) Image compressive sensing via Truncated Schatten-\(p\) Norm regularization. Signal Proc Image Commun 47:28–41

Chen B, Sun H, Xia G, Feng L, Li B (2018) Human motion recovery utilizing truncated Schatten \(p\)-norm and kinematic constraints. Inf Sci 450:89–108

Boyd S, Parikh N, Chu E, Peleato B, Eckstein J (2011) Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends Mach Learn 3(1):1–122

Candès EJ, Li X, Ma Y, Wright J (2011) Robust principal component analysis? J ACM 58(3):1–37

Gu S, Xie Q, Meng D, Zuo W, Feng X, Zhang L (2017) Weighted nuclear norm minimization and its applications to low level vision. Int J Comput Vis 121(2):183–208

Cao F, Chen J, Ye H, Zhao J, Zhou Z (2017) Recovering low-rank and sparse matrix based on the truncated nuclear norm. Neural Netw 85:10–20

Beck A, Teboulle M (2009) A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J Imaging Sci 2(1):183–202

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant 61933013.

Appendix

The proof of Theorem 2.1

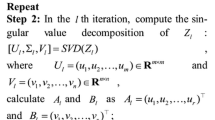

Let \(Q_{X}=M-N_{k+1}+\frac{1}{\mu _{k}}Y_{k}\), and let \([U_{k}, \Delta _{k}, V_{k}]=\mathrm{SVD}(Q_{X})\) be its singular value decomposition in the \((k+1)\)-th iteration. By Lemma 2.2, \(X_{k+1}=U_{k}\Lambda _{k}V_{k}^{\top }\), where \(\Lambda _{k}=(\Delta _{k}-\frac{1}{\mu _{k}}W)_{+}\). Hence, from (2.14), it follows that

which shows that \(\{Y_{k}\}\) is bounded. Recalling the definition of the augmented Lagrangian function (2.8), the solution \((X_{k+1}, N_{k+1})\) of the \((k+1)\)-th iteration satisfies

Noting that \(Y_{k+1}=Y_{k}+\mu _{k}(M-X_{k+1}-N_{k+1})\), we have

Therefore,

Since \(\{Y_{k}\}\) is bounded and \(\mu _{k+1}=\min (\rho \mu _{k}, \mu _{\text {max}})\), \(\Gamma (X_{k+1}, N_{k+1}, Y_{k}, \mu _{k})\) is bounded.
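The two bounds used in this part of the proof can be sketched in the standard inexact augmented Lagrange multiplier form. The sketch assumes that (2.8) reads \(\Gamma(X,N,Y,\mu)=\|X\|_{W}+\langle Y, M-X-N\rangle+\frac{\mu}{2}\|M-X-N\|_{F}^{2}\) with \(\|X\|_{W}=\sum_i w_i\sigma_i(X)\), \(W=\mathrm{diag}(w_1,w_2,\dots)\) and \(0\le w_1\le w_2\le\cdots\), and that the N-step and the X-step each minimize \(\Gamma\) exactly over their own block. It is a sketch under those assumptions, not a transcription of the authors' displayed equations.

\[
\begin{aligned}
Y_{k+1} &= Y_{k}+\mu_{k}(M-X_{k+1}-N_{k+1}) = \mu_{k}(Q_{X}-X_{k+1}) = \mu_{k}U_{k}(\Delta_{k}-\Lambda_{k})V_{k}^{\top},\\
\|Y_{k+1}\|_{F} &= \mu_{k}\Big\|\min\Big(\Delta_{k},\tfrac{1}{\mu_{k}}W\Big)\Big\|_{F} \le \|W\|_{F},
\end{aligned}
\]

where the minimum is taken entrywise on the diagonal, since \(\delta_i-(\delta_i-w_i/\mu_k)_{+}=\min(\delta_i,w_i/\mu_k)\). For \(\Gamma\), using \(M-X_{k}-N_{k}=(Y_{k}-Y_{k-1})/\mu_{k-1}\),

\[
\Gamma(X_{k+1},N_{k+1},Y_{k},\mu_{k}) \le \Gamma(X_{k},N_{k},Y_{k},\mu_{k}) = \Gamma(X_{k},N_{k},Y_{k-1},\mu_{k-1}) + \frac{\mu_{k-1}+\mu_{k}}{2\mu_{k-1}^{2}}\|Y_{k}-Y_{k-1}\|_{F}^{2},
\]

so, with \(\|Y_{k}-Y_{k-1}\|_{F}\le 2\|W\|_{F}\), the values of \(\Gamma\) stay bounded whenever \(\sum_{k}(\mu_{k-1}+\mu_{k})/(2\mu_{k-1}^{2})\) converges.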

Moreover, because

\(\{X_{k}\}\) is bounded. From \(N_{k+1}=M-X_{k}+\frac{1}{\mu _{k}}Y_{k}\), it follows that \(\{N_{k}\}\) is also bounded. Hence the sequence \(\{(X_{k}, N_{k}, Y_{k})\}\) has at least one accumulation point, and

We now prove the convergence of \(\{X_{k}\}\). Because

we have

This completes the proof of Theorem 2.1. \(\square \)
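The iteration analyzed in this proof can be exercised numerically with a minimal NumPy sketch. It assumes the completion model \(\min \|X\|_{W}\) s.t. \(X+N=M\), \(P_{\Omega}(N)=0\), so the N-step of the text is applied only on the unobserved entries; the X-step is the weighted singular value thresholding of Lemma 2.2; and an outer step re-linearizes the truncated Schatten p-norm with weights \(w_i=0\) for the r largest singular values and \(w_i=p(\sigma_i+\varepsilon)^{p-1}\) otherwise. The function names, \(\varepsilon=10^{-6}\), \(\mu_0=10^{-2}\), \(\rho=1.2\), \(\mu_{\max}=10^{6}\) and the iteration counts are hypothetical choices, not the authors' settings.

```python
import numpy as np


def weighted_svt(Q, w, mu):
    """Return U diag((delta - w/mu)_+) V^T, the X-step of Lemma 2.2.

    For nondecreasing weights w this is the exact minimizer of
    sum_i w_i * sigma_i(X) + (mu / 2) * ||X - Q||_F^2.
    """
    U, delta, Vt = np.linalg.svd(Q, full_matrices=False)
    lam = np.maximum(delta - w / mu, 0.0)
    return (U * lam) @ Vt


def tsp_weights(X, r, p, eps=1e-6):
    """Hypothetical linearization weights: 0 for the r largest singular values,
    p * (sigma_i + eps)^(p - 1) for the rest (nondecreasing when p <= 1)."""
    sigma = np.linalg.svd(X, compute_uv=False)
    w = p * (sigma + eps) ** (p - 1.0)
    w[:r] = 0.0
    return w


def admm_inner(M_obs, mask, w, X, mu=1e-2, rho=1.2, mu_max=1e6,
               iters=200, tol=1e-7):
    """ADMM for min ||X||_W s.t. X + N = M_obs, with N = 0 on observed entries."""
    Y = np.zeros_like(M_obs)
    scale = max(np.linalg.norm(M_obs), 1.0)
    for _ in range(iters):
        # N-step: free on unobserved entries, fixed to 0 on observed ones.
        N = np.where(mask, 0.0, M_obs - X + Y / mu)
        # X-step: weighted SVT of Q_X = M - N + Y / mu.
        X = weighted_svt(M_obs - N + Y / mu, w, mu)
        # Multiplier update with the current mu, then penalty update.
        R = M_obs - X - N
        Y = Y + mu * R
        mu = min(rho * mu, mu_max)
        if np.linalg.norm(R) <= tol * scale:
            break
    return X, Y


def complete_tsp(M, mask, r, p=0.5, outer_iters=5):
    """Two-step scheme: re-linearize the truncated Schatten-p penalty, then run ADMM."""
    M_obs = np.where(mask, M, 0.0)
    X = M_obs.copy()
    for _ in range(outer_iters):
        w = tsp_weights(X, r, p)
        X, Y = admm_inner(M_obs, mask, w, X)
    return X, Y, w
```

For example, with a random rank-2 matrix `M` and a boolean observation mask, `X, Y, w = complete_tsp(M, mask, r=2)` returns the estimate together with the last multiplier and weights, so the bound \(\|Y_{k}\|_{F}\le\|W\|_{F}\) and the residual \(\|M-X_{k+1}-N_{k+1}\|_{F}=\|Y_{k+1}-Y_{k}\|_{F}/\mu_{k}\), which shrinks as \(\mu_k\) grows, can be checked directly.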

About this article

Cite this article

Wen, C., Qian, W., Zhang, Q. et al. Algorithms of matrix recovery based on truncated Schatten p-norm. Int. J. Mach. Learn. & Cyber. 12, 1557–1570 (2021). https://doi.org/10.1007/s13042-020-01256-7

DOI: https://doi.org/10.1007/s13042-020-01256-7