Abstract

Model Agnostic Meta Learning (MAML) has become the most representative meta learning algorithm to solve few-shot learning problems. This paper mainly discusses MAML framework, focusing on the key problem of solving few-shot learning through meta learning. However, MAML is sensitive to the base model for the inner loop, and training instability occur during the training process, resulting in an increase of the training difficulty of the model in the process of training and verification process, causing degradation of model performance. In order to solve these problems, we propose a multi-stage loss optimization meta-learning algorithm. By discussing a learning mechanism for inner and outer loops, it improves the training stability and accelerates the convergence for the model. The generalization ability of MAML has been enhanced.

Similar content being viewed by others

1 Introduction

Machine learning model requires a large number of supervised samples to obtain a better learning performance. However, labeling the samples is time-consuming and laborious. The problem prompts us to discuss the few-shot learning [1]. And the advantage of the few-shot learning is that we only need a small number of labeled samples for learning, avoiding the problem of time and cost caused by collecting large number of labeled data.

The nature of few-shot learning is a hard problem without prior knowledge about the task. Knowledge transfer [2] enables a model to acquire prior knowledge from similar tasks, quickly adapting to new tasks. However, when the source domain is different from the target domain, the knowledge transfer will become a time-consuming and ultimately ineffective process. Meta-learning [3] is recently proposed to solve few-shot learning problems, which usually consists of two parts: the initialization of the base model and an updating strategy. Initialization parameters are learned and strategy is updated for the base model, which improve the model generalization in different tasks.

Meta-learning can also be called learn to learn [4]. More specific meta-learning includes two levels: (1) task-level, the base model needs to learn the knowledge of specific tasks; (2) meta-level, the meta model will learn Cross-task knowledge. In the past few years, to solve few-shot learning problems, many meta learning methods have been proposed. In contrast, Model Agnostic Meta Learning algorithm (MAML [5]) only learns the initialization parameters of the model. MAML proposes to learn initialization parameters for a base model. The base model is updated on the training set with a small number of gradient updates, and the base model will have a strong generalization in the test set. However, MAML also has some issues: (1) training instability because of gradient issues; (2) the computational is overhead due to the existence of the second order gradients.

Aiming at these problems, this paper proposes a Model Agnostic multi-stage loss optimization meta-learning framework (MOL-MAML). By designing a learning mechanism for inner and outer loops, it improves the stability of training and accelerates model convergence. Furthermore, in order to reduce the computation of second order gradients, we propose an adaptive training strategy for stepped training, which speeds up the training process and reduces the computation without reducing the performance of the model. And we validate the MOL-MAML on the regression task and the classification task respectively.

The main contributions of the paper are:

-

A novel meta learning framework for Model-Agnostic Multi-Stage Loss Optimization is established.

-

An adaptive training strategy for stepped training is proposed, speeding up the training process and reduces the computation without reducing the performance of the model.

-

The multi-stage loss optimization propose a new learning mechanism for inner and outer loops by changing the update mode and training strategy of MAML.

-

We provide both qualitative and quantitative results for framework on Few-shot learning to show the advantage for Omniglot and Mini-ImageNet datasets. The solutions for training instability problems in MAML are achieved, and the accuracy of the model is improved.

The structure of this paper is as follows: In Sect. 2, we introduce the related work of solving the few-shot learning problem of supervised learning via the meta-learning. In Sect. 3, the Model-Agnostic Meta Learning framework (MAML) is discussed, theoretical analysis show that the gradient descent in the MAML optimization depends on the computation of the second order gradients. Computational complexity is reduced by the adaptive staged training strategy as we proposed. And although, the strategy could alleviate the instability of the MAML during the training process. The problem of stability caused by gradient changes are still treated to further solve in the research. Focusing on the problems of unstable training and slow convergence of MAML, we propose a Model Agnostic multi-stage loss optimization meta-learning framework (MLO-MAML). In Sect. 4, we evaluate the proposed methods by experiment. Compared to other methods, MLO-MAML enhances the training stability and accelerates model’s convergence. In Sect. 5, we summarize the main work and draw the conclusions.

2 Related work

Few-shot learning has always been a hot issue in machine learning. In this paper, we mainly focus on solving few-shot learning problems of supervised learning via meta-learning methods [6]. Meta learning has three common approaches, which are metric-based, model-based, and optimization-based meta learning.

Metric-Based: The key idea of metric-based meta learning is to learn a distance function which is similar to the K-NN [7] and K-means. Koch, Zemel & Salakhutdinov [8] propose the Siamese neural network [9]. Siamese networks usually consist of two twin networks, sharing the same weights and architecture. The loss function is designed to learn the relationship between pairs of input samples. Relation Network (RN) [10] is similar to Siamese networks, but the difference is that RN consists of embedding and relation modules. Matching-networks [11] is the first metric-based algorithm using meta learning published by Google’s DeepMind. In Matching-networks, the author solve few-shot learning problems by matching target set to support set and uses the LSTM to make all data interact.

Model-Based: Model-based meta-learning can achieve fast-adapt depending on its own internal architecture or another meta-learner model. Some model-based approaches such as Neural Turing Machines [12] have the ability to store information from the external memory storage. Model-based meta-learning approaches mostly depend on internal memory such as RNN or LSTM [13], but the memory used in RNNs is unlikely to quickly encode large amounts of new information. MANN [14] is expected to encode fast new knowledge adapting to new tasks with only a few samples, so it fits well for meta-learning.

Optimization-Based: As the backpropagation of gradients is used wildly in machine learning. Meta learning based stochastic gradient descent has appeared in recent years. However, the gradient based optimization required a large number of training data with slow convergence. The most famous approach MAML [5], short for Model-Agnostic Meta-Learning is a general optimization algorithm, with two levels of backpropagation through neural network. Alex Nichol et al. analyzed a set of algorithms that only use first-order derivatives for meta-learning updates. On the new task, fast fine-tuning parameter initialization is achieved. The author introduces the Reptile algorithm, which trains the task by repeatedly sampling the task and shifts the initialization to the training weight of the task [15]. The basic idea of MAML is to learn a model with good initial parameters, learning quickly on new tasks with a few gradient steps. As an extension of MAML, Meta-SGD [16] learns the initialization parameters of the model and the learning rate of model updates. However, in the actual application process of Meta-SGD, due to the increase in the amounts of parameters, the slow training speed and the increase in computation cost make it unable to be widely used in few-shot learning.

Improvement based on the MAML framework is still important for meta learning algorithms. In this paper, we mainly discuss the framework of MAML and improve its inner loop. By comparing other meta learning algorithms on regression and classification tasks, the feasibility of the improvement is verified.

3 Approach

In this section, we discuss the Model-Agnostic Meta Learning algorithm, and then propose a step-wise adaptive training strategy to alleviate the second order gradients computation problem in MAML. Finally, in order to solve the problems of training instability and slow convergence for MAML, a multi-stage loss optimization meta-learning framework (MLO-MAML) is proposed.

3.1 Model-agnostic meta-learning (MAML)

Model Agnostic Meta Learning algorithm (MAML) is a meta learning framework for few-shot learning. The currently accepted view is that the base model will learn good initialization parameters after MAML is trained on a few-shot dataset. The base model uses the learned initialization parameters and fits well on few-shot learning tasks. Generally, we define the base model as \({f}_{\theta }\), and initialize a parameter \(\theta ={\theta }_{0}\). We sample a task \({T}_{i}\) from a task distribution \(p(T)\). \({T}_{i}\) is divided into support set \({S}_{b}\) and target set \({T}_{b}\). Gradient updates on the support set \({S}_{b}\) for N times to get the parameter \({\theta }_{N}\), and the loss is minimized on the target set \({T}_{b}\).

The base model parameters after \(i\) updates on the support set \({S}_{b}\) can be written as:

Here \(\alpha\) is the learning rate of the inner loop, and \({\theta }_{i}^{b}\) is the weight parameter that the base model updates \(i\) times on task \(b\). \({L}_{{S}_{b}}\left({f}_{{\theta }_{(i-1)}}\right)\) is the loss of updating \(i-1\) times in support set \(b\).

In the general meta-learning training, loss function for the inner and outer loop are as follows:

-

1.

The loss function used in the regression task is the mean squared error [17] (MSE):

$$L_{{T_{i} }} \left( {f_{\theta } } \right) = \mathop \sum \limits_{{x^{j} ,y^{j} \sim T_{i} }} ||f_{\theta } \left( {x^{j} } \right) - y^{j} ||^{2}$$(2) -

2.

The loss function used in the class task classification task is the cross-entropy loss [18]:

$$L_{{T_{i} }} \left( {f_{\theta } } \right) = \mathop \sum \limits_{{x^{j} ,y^{j} \sim T_{i} }} y^{j} logf_{\theta } (x^{j} ) + \left( {1 - y^{j} } \right){\text{log}}\left( {1 - f_{\theta } \left( {x^{j} } \right)} \right)$$(3)Here \({x}^{j}\), \({y}^{j}\) are sampling points on task \({T}_{i}\), and \({f}_{\theta }({x}^{j})\) is the prediction of the base model.

Assuming the batch-size of sampled task is B, we define the loss of the outer loop in MAML training as:

\({\theta }_{N}^{b}\) can be obtained from \({\theta }_{0}\). MAML optimizes the initialization parameter \({\theta }_{0}\), and obtain cross-task knowledge through the meta-training. This process is called the outer loop of MAML.

The update of the meta parameter \({\theta }_{0}\) is expressed as follows:

Here \(\beta\) is the learning rate of the outer loop, and \({L}_{{T}_{b}}\) represents the loss on the target set task \(b\).

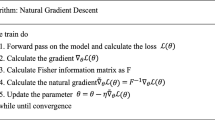

Therefore, Model Agnostic Meta Learning algorithm (MAML) is as follows

The training process of MAML can be regarded as a bi-level optimization consisting of an inner loop and an outer loop, also called meta train and meta test. First MAML randomly initializes \({\theta }_{0}\), and then starts the inner loop. In the process, the support set \({T}_{{s}_{b}}\) and weight \({\theta }_{i-1}\) are inputted to the basic model \({f}_{\theta }\) to obtain the output \({f}_{{\theta }_{i-1}^{b}}\). Then the loss \({L}_{{S}_{b}}\left({f}_{{\theta }_{i-1}^{b}}\right)\) is calculated to update the new weight \({\theta }_{i}\) after the entire inner loop process which is executed \(N\) times. In the next step, the prediction \({f}_{{\theta }_{N}^{b}\left({\theta }_{0}\right)}\) is calculated through applying the model \({f}_{{\theta }_{N}}\) obtained by the inner loop to the target set \({T}_{{T}_{b}}\). The loss \({L}_{{T}_{b}}\left({f}_{{\theta }_{N}^{b}\left({\theta }_{0}\right)}\right)\) is compared with the true label to update the meta parameter \({\theta }_{0}\).

However, MAML poses challenges in training. (1) In Eq. (5), Hessian-vector products (the second derivatives), which is computation costly. (2) As shown in Fig. 1, MAML only uses the final weights for outer loop after training for the inner loop. As a result, the gradient explosion/diminishment will happen resulting in instability for training.

3.2 Second order derivative cost and stepwise training strategy

Gradient descent in MAML optimization relies on second derivatives. Based on the previous considerations for saving computing cost, the stepwise scheme can be extended to First-Order MAML (FOMAML) as follows.

The inner loop has performed k inner gradient steps, \(k\ge 1\),and the initial parameter of the model is \({\theta }_{meta}\):

This process is an inner loop update, where \({\theta }_{0..k}\) is the model parameter after updating, and \({L}^{s}\) represents the loss of the model on the support set.

In the outer loop, the meta parameters can be updated by the target set of the task we sampled.

where the MAML gradient is:

In the First-Order MAML, we ignore the second derivative part \({\nabla }_{{\theta }_{i-1}}\left({\nabla }_{\theta }{L}^{S}\left({\theta }_{i-1}\right)\right)\), which can be simplified as follows.

FOMAML reduces the computation of MAML during the training, but the neglect of the second derivative will degrade the performance of MAML on actual tasks.

To solve these problems, we separate the training process as stepped training. Specifically, we use FOMAML in the first K epochs, and then switch on the second-order gradient in the last K epochs. In the early stage of training, since the gradient changes sharply, turning on the second-order gradient will cause problems such as gradient explosion/diminishment issues. However, in the later stage of training, the model gradually stabilizes. Turning on the second-order gradient will further improve the performance of the model. Therefore, we consider the training phase that ignores the second derivative as the pre-training process of meta-learning training. Thus, when the second-order gradient is used, the training process would become more stable in the later stage, accelerating the model’s convergence speed.

The K value of step training is determined by the rate of loss change \(\nabla L\) of the model training. In order to facilitate the calculation, the above-mentioned first-order gradient value \({g}_{FOMAML}\) is used as the estimation of the loss change rate \(\nabla L\). which is:

At the same time, a threshold \(\sigma\) is set. When \(\nabla L\le \sigma\), the second-order gradient is turned on.

Compared with using only first-order gradient, the training of the base model has better generalization. For using only second-order gradient, step training does not easily cause problems such as the gradient explosion/diminishment issues. The training process is more stable and the model converges faster. However, the stability problem caused by the gradient explosion/diminishment in the training process is determined by many factors. The stepwise training strategy only alleviates this problem, the stability cannot be guaranteed. Another solution will be proposed for this issue in the next section.

3.3 Multi-stage loss optimization

The problems of instability in training caused by the gradient explosion/diminishment issues are not solved. In this section, a multi-Stage loss optimization method is proposed, providing another solution for the problem of stability. In MAML, the gradient of backpropagation during the optimization of the outer loop depends on multiple updates of the inner loop. This update mode will generate gradient problems. When classifying images in MAML, a 4-layer standard convolutional neural network without skip-connections is used.

In MAML, the base model always completes the inner loop process and then minimizes the loss on the target set, which would cause the instability in training because of gradient explosion/diminishment. To be specific, the iteration time for the inner loop depends on the number of tasks, which will lead to a multiple increase in gradient updates for the whole inner loop. The outer loop is performed after the end of inner loop, so the multiple increase in gradient updates would cause the gradient problems. Instead, we improve this training strategy, the base model directly computes the loss on the target set after each inner loop update. Furthermore, we weight the loss at each step on the target set as follows:

Here \(\beta\) is the learning rate of the outer loop process. \({L}_{{T}_{b}}({f}_{{\theta }_{i}^{b}})\) represents the loss when the parameters of the base model are updated \(i\) times on the support set of task b and applied to the target set. \({w}_{i}\) represents the weight loss on the target set at step \(i\).

We propose multi-stage loss to optimize the gradient propagation process of MAML (MLO-MAML), that is, the base network model simultaneously obtains the gradient of the current update step and the previous update step. To solve the problem of training instability caused by gradient update mode, in which the gradient updates are performed for the outer loop after the gradients in the inner cycle are completely updated. Here, we change the update mode in training strategy. The base model directly computes the loss after each inner loop update. At the same time, a weighting process is performed for each step loss. All of the loss functions have the same weight at the beginning of the training, and they have the same importance. As the training progresses, the importance of the previous step will decrease and the importance of the subsequent steps will increase. This will ensure that, the last update step will receive more attention, and the final loss reaches the lowest loss.

The algorithm for the MLO-MAML is as follows:

MAML based on multi-stage loss optimization first randomly initializes \({\theta }_{0}\), and then starts the inner loop. In the process, the support set \({T}_{{s}_{b}}\) and the weight \({\theta }_{i-1}\) are inputted to the basic model \({f}_{\theta }\) to calculate the prediction \({f}_{{\theta }_{i-1}^{b}}\). We compare the obtained output with the true label to compute the loss \({L}_{{S}_{b}}\left({f}_{{\theta }_{i-1}^{b}}\right)\) and update to obtain a new weight \({\theta }_{i}\), and then immediately start the outer loop process. The loss \({L}_{{T}_{b}}({f}_{{\theta }_{i}^{b}})\) on the target set is computed, and the loss for each update is weighted. Finally, the gradient descent algorithm is used to update the outer loop parameter \({\theta }_{0}\), and the entire process is performed N times. By changing the update mode and training strategy of MAML, the gradient of each inner loop process can be received by the outer loop process, which improves the stability of training and speeds up the convergence speed.

We visualize the training process of the MLO-MAML by comparing the training process of MAML, as shown in the Fig. 2:

4 Experimental results

We mainly evaluate the effect of meta-learning on few-shot learning from two aspects: few-shot image classification and few-shot regression tasks. In addition, in order to verify the feasibility of stepwise training, this paper records the time required for each epoch in a few-shot classification task. Compared with the use of stepwise training and the second derivative, variations in training time and accuracy rate are discussed. The feasibility of the method is verified.

4.1 Experimental environment

The experiment was performed on 64 bit Ubuntu 16.04 LTS operation system, GPU is NVIDIA GeForce 2080TI, and PyTorch framework is used for evaluations. The information about the implementation of our software and hardware platforms are shown in Table 1.

4.2 Evaluation for regression

This paper will describe the basic principles of MAML with a simple regression problem. The main form of the regression task is to predict a sine function \(y=a\mathrm{sin}(x+b)\) with few-shot of sample points, where the \(a\) and \(b\) vary within [0.1,0.5] and [0, π] respectively.

Throughout the training and testing process, data points \(x\) are sampled uniformly from [-5.0, 5.0] and the mean-squared error loss function is used to measure the error between the prediction \(f(x)\) and the true value.

Here \({x}^{\left(j\right)}\), \({y}^{\left(j\right)}\) are sampling points on task \({T}_{i}\)

The base model used in the regression task is a FC neural network model which has 2 hidden layers with size of 16, as shown in the following Fig. 3:

When training with MAML and MLO-MAML, we set up a 5-way K-shot with K = 10 comparison experiment, where the inner loop learning rate \(\alpha\) is 0.01, the outer loop learning rate \(\beta\) is 0.1. Adam is used as the meta-optimizer.

We obtain results by fine-tuning MAML and MLO-MAML learned models and pre-trained models. During fine-tuning, to ensure the reliability of the experiment, we use the same K sampling points, and the number of fine-tuning gradient steps which is {0, 1, 10}. The experimental results are shown in the following figure:

The Fig. 4 verifies the advantages of the meta-learning algorithm in few-shot learning. It consists of three experimental parts: (1) model pretrained; (2) MAML; (3) MLO-MAML. Each part sets the number points sampled is 10, and the number of training times for updating and fine-tuning are set to {0,1,10}. The experimental curves include: (1) Training Points; (2) True Functions; (3) Function after different fine-tuning times. 100 samples (x1,y1), (x2,y2)….(x100,y100) are selected for testing, and predicted results are calculated using the three models. Predicted results \({y}_{mlo}\), \({y}_{maml}\), \({y}_{pre}\), are compared with true value y of the sample to compute the prediction accuracy. The matching is achieved if the predicted values equal to true values. The regression accuracy is obtained by calculating the ratio of the matching samples to all the 100 samples.

In the training process of regression experiments, the model is trained for 100 epoch. MAML converges in 55th–60th epoch, and MLO-MAML achieves the convergence in 30th–33th epoch, which proves that the MLO-MAML proposed in this paper has also greatly improved the convergence speed.

In the subsequent fine-tuning, the base model can initially adapt well with only updating 1 time. But after 10 steps, the accuracy of MLO-MAML can reach 90.1%, while the MAML only has 81.2%, and the pretrained model only has 64.2% when 10 steps is proceed. Quantitative results of MSE for MAML and MLO-MAML have been added in Table 2 in the revision.

Therefore, MAML would need more steps for fine-tuning to achieve the similar fitting accuracy with MLO-MAML. In contrast, MAML based on multi-stage loss optimization has faster fitting speed and stronger fitting ability than MAML.

4.3 Evaluation for classification

To verify the performance of MAML and MLO-MAML in few-shot classification problems, this paper performed 5/20-way 1/5-shot, 5-way 1/5-shot few-shot image classification experiments on the Omniglot and Mini-ImageNet datasets.

Our basic model of classification consists of 4 basic modules with the same architecture as shown in the Fig. 5, where the module is composed of a 3 × 3 convolution kernel with 64 filters, and a BN layer followed by a 2 × 2 max-pooling. The optimizer is Adam, the inner loop learning rate is 0.01, and the outer loop learning rate is 0.001. Among them, the task batch size in Omniglot is 16. In Mini-ImageNet, the task batch size for the 5-way 1-shot is 4, and the task batch size for the 5-way 5-shot is set to 2.

4.3.1 Experimental results on Omniglot [19]

The Omniglot dataset contains 1,623 different handwritten characters from 50 different letters (languages). Each character was drawn online by 20 different people via Amazon's Mechanical Turk.

In this section, we randomly select 1150 characters for the training set, 50 characters for the validation set, and the rest for the test set. In addition, all images in the dataset are resized in 28 × 28, and the data is augmented by randomly rotating (Fig. 6).

Figure 7 shows the accuracy and loss values of 100 epochs trained on this dataset for MAML and MLO-MAML respectively.

In Fig. 7, the dark line is MLO-MAML and the light line is MAML. As can be seen from the results, it is shown that MLO-MAML could be more smoothly, whose validation accuracy can reach the about 99.2% at the 62th ~ 75th epoch. While the MAML reaches the highest validation accuracy 97.7% at the 90th ~ 100th epoch. Figure 8 compares the validation accuracy of MLO-MAML with current small sample models. The test accuracy of MLO-MAML, MAML, Siamese Nets, Meta-SGD, and matching Nets are 99.3, 98.9, 97.0, 98.5, and 98.97% respectively. It is illustrated that MAML and other models have problems such as instability training and long convergence time. Compared with the current models, the training of model based on multi-stage loss optimization is more stable, converges faster, and improves the accuracy.

Table 3 shows the different accuracy of different methods for few-shot learning on Omniglot dataset, including Siamese nets, Matching net, Meta-SGD, MAML, and proposed MOL-MAML.

As shown in Table 3, compared with other few-shot learning methods, the multi-level loss optimization-based MAML proposed in this paper achieves an accuracy of 99.5% ± on the 5-way and 97.1%, 99.3% accuracy on the 20-way respectively. Compared with MAML, MLO-MAML improves the accuracy rate. More importantly, it can be seen from the result that MAML based on multi-stage loss optimization speeds up the model to reach the optimal state, and solves the training stability issues of MAML, discussed in Sect. 3 of this paper.

4.3.2 Experimental results on Mini-ImageNet

The Mini-ImageNet dataset is proposed by Vinyals et al. to test few-shot learning approaches. Compared to using ImageNet, it is less complex and requires less resources and infrastructure than running on a full ImageNet dataset. There are 100 categories, and each category has 600 samples of 84 × 84 color images. In this experiment, we use 64 classes as the training set, 12 classes as the validation set, and 24 classes as the test set. The Mini-ImageNet dataset is shown in Fig. 9:

Compared to the previous section, the base model used in Mini-Imagenet has been slightly adjusted, changing the number of convolution kernels from 64 to 32. The other links are the same as the Omniglot.

In this section, the few-shot image classification experiments for 5-way 1-shot and 5-way 5-shot are performed. Figure 9 shows the accuracy and loss for training 100 epochs on this dataset with MAML and MLO-MAML.

In Fig. 10, the dark line is MAML based on multi-stage loss optimization, and the light line is MAML. In the experiments, the accuracy of the validation set of the proposed MLO-MAML reaches 66% + . Compared with MAML, the accuracy is greatly improved. The validation accuracy of MLO-MAML can reach the about 64.3% at the 50th ~ 60th epoch. While the MAML reaches the highest validation accuracy 60.7% at the 90th ~ 100th epoch, and the test accuracy of MLO-MAML and MAML are 68.32% and 64.39%. In Fig. 11, we preform the comparison evaluations for MLO-MAML, MAML, Siamese Nets, Meta-SGD, and matching Nets on Mini-ImageNet. The test accuracy of MLO-MAML, MAML, Siamese Nets, Meta-SGD, and matching Nets are 68.32% and 64.39, 52.31, 64.03 and 55.31%. The Meta-SGD and Matching Net also have a fast convergence rate, but the high computational-complexity and the large number of parameters, lead to relatively higher time complexity and space complexity in the two models. Therefore, compared with other small sample models, the MLO-MAML proposed in this paper has advantages in convergence speed, complexity, stability and accuracy.

Table 4 shows the comparison accuracy of several methods for few-shot learning on Mini-ImageNet dataset, including metric learning, MAML, MLO-MAML.

Table 4 presents the performance of MAML based on multi-stage loss optimization on the Mini-ImageNet dataset, and 5-way 1-shot and 5-way 5-shot experiments are performed respectively. The accuracy of proposed method can achieve 52.15% and 68.32%, increased by 3.9% and 3.93% compared with MAML.

4.4 Stepwise training

To verify the feasibility of stepwise training, we compare the time consuming of each epoch using stepwise training and second-order gradient throughout. The experiment is mainly performed on the classification task. In this paper, the thresholds in the stepwise training strategy for the Mini-ImageNet and Omniglot are different. The threshold for Mini-ImageNet is set higher, because Mini-ImageNet converges more slowly, and the gradient change rate is larger. In this experiment, the threshold \(\sigma\) turning on the second-order gradient for Omniglot is set to 0.001, and 0.01 for Mini-ImageNet. When \(\nabla L\le \sigma\), the second-order gradient is turned on. The experiments compare the training time and model accuracy to verify the feasibility of stepwise training.

The experimental settings are the same as the classification task settings above, and experiments are performed on Omniglot and Mini-ImageNet. The results are shown as follows:

Figure 12 is the training time comparison chart of the stepwise training experiment. The Y-axis represents the time required for each epoch, and the X-axis represents the number of epochs trained. The light-colored line indicates that second-order gradients used throughout the process and red line is the stepwise training proposed in this paper. As can be seen from the figure, the stepwise training greatly speeds up the training speed of meta-learning and stabilizes the training process.

Table 5 compares the accuracy of stepwise training and normal training on Omniglot and Mini-ImageNet. As can be seen from the table, the accuracy of stepwise training in the case of ignoring the second-order gradient is basically similar to that of normal training.

Table 6 shows the average time when one epoch is executed for different models. It is illustrated that the time consuming of MLO-MAML for one epoch is reduced, and the epoch number for convergence is relatively smaller, so MLO-MAML shows the advantage in convergence speed, which executes less time for training.

This shows that the method we proposed in this paper can speed up the convergence of training and improve the stability of the mode without affecting the accuracy.

5 Conclusion

This paper mainly discusses the defects of Model Agnostic Meta Learning algorithm (MAML) to handle the few-shot learning problems. It has been shown that the MAML can lead to training instability caused by gradient update mode. As a solution, the learning strategy in the inner and outer loop is optimized and a method for multi-stage loss optimization meta-learning (MLO-MAML) is proposed for improving the stability of training. In addition, we propose a stepwise training strategy for First-Order MAML to solve the problem of computation problems. We evaluate the performance of the model through a few-shot regression task and a few-shot classification task. It is verified by experiments that the MLO-MAML has achieved improvement over the MAML algorithm. While improving the stability of the model, the accuracy of the model is improved and the convergence of MAML training is also accelerated.

References

Snell J, Swersky K, Zemel R (2017) Prototypical networks for few-shot learning[C]//Advances in Neural Information Processing Systems, pp. 4077–4087

Caruana R (1995) Learning many related tasks at the same time with backpropagation. In Advances in neural information processing systems, pp. 657–664

Vilalta R, Drissi Y (2002) A perspective view and survey of meta-learning. Artif Intell Rev 18(2):77–95

Thrun S, Pratt L (1998) Learning to learn: introduction and overview. In Learning to learn. Springer, Boston, pp. 3-17

Finn C, Abbeel P, Levine S (2017) Model-agnostic meta-learning for fast adaptation of deep networks. In Proceedings of the 34th International Conference on Machine Learning-Volume 70 pp. 1126–1135

Naik DK, Mammone RJ (1992) Meta-neural networks that learn by learning. In [Proceedings 1992] IJCNN International Joint Conference on Neural Networks (1) : 437–442

Boytsov L, Naidan B (2013) Learning to prune in metric and non-metric spaces. In Advances in Neural Information Processing Systems pp. 1574–1582

Koch G, Zemel R, Salakhutdinov R (2015) Siamese neural networks for one-shot image recognition. In ICML deep learning workshop 2

Bromley J, Guyon I, LeCun Y, Säckinger E, Shah R (1994) Signature verification using a “Siamese” time delay neural network. In Advances in neural information processing systems pp. 737–744

Sung F, Yang Y, Zhang L, Xiang T, Torr PH, Hospedales TM (2018) Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition pp. 1199–1208

Vinyals O, Blundell C, Lillicrap T, Wierstra D (2016) Matching networks for one shot learning. In Advances in neural information processing systems pp. 3630–3638

Graves A, Wayne G, Danihelka I (2014) Neural turing machines. arXiv preprint arXiv:1410.5401

Chen J, Qiu X, Liu P, Huang X (2018) Meta multi-task learning for sequence modeling. In Thirty-Second AAAI Conference on Artificial Intelligence

Santoro A, Bartunov S, Botvinick M, Wierstra D, Lillicrap T (2016) One-shot learning with memory-augmented neural networks. arXiv preprint arXiv:1605.06065

Nichol A, Achiam J, Schulman J (2018) On first-order meta-learning algorithms. arXiv preprint arXiv:1803.02999

Li Z, Zhou F, Chen F, Li H (2017) Meta-SGD: learning to learn quickly for few-shot learning. arXiv preprint arXiv:1707.09835

Xingjian SHI, Chen Z, Wang H, Yeung DY, Wong WK, Woo WC (2015) Convolutional LSTM network: a machine learning approach for precipitation nowcasting. In Advances in neural information processing systems pp. 802–810

De Boer PT, Kroese DP, Mannor S, Rubinstein RY (2005) A tutorial on the cross-entropy method. Ann Oper Res 134(1):19–67

Lake BM, Salakhutdinov R, Tenenbaum JB (2015) Human-level concept learning through probabilistic program induction. Science 350(6266):1332–1338

Acknowledgements

This work is supported by the Fundamental Research Funds for the Central Universities B200202205, the Key Research and Development Program of Jiangsu under Grants BE2017071, BE2017647 and BE2018004-04, BK20192004. National Nature Science Foundation of China under Grants (61501170, 41876097, 61401148, 61471157, 61671202), the Open Research Fund of State Key Laboratory of Bioelectronics, Southeast University under Grant 2019005, and the State Key Laboratory of Integrated Management of Pest Insects and Rodents under Grant IPM1914. Shenzhen Science and Technology Plan Project (JSGG20180507183020876).

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Yao, X., Zhu, J., Huo, G. et al. Model-agnostic multi-stage loss optimization meta learning. Int. J. Mach. Learn. & Cyber. 12, 2349–2363 (2021). https://doi.org/10.1007/s13042-021-01316-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13042-021-01316-6