Abstract

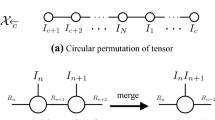

In recent years, tensor data have appeared more and more frequently in machine learning, and Tucker decomposition is a powerful tool for processing them. From the perspective of dimensionality reduction, however, Tucker decomposition without any regularization is only a tensor version of principal component analysis (PCA): it learns a linear subspace that minimizes the distance between the data and their projections onto that subspace. Many high-dimensional tensor data instead reside in low-dimensional submanifolds rather than low-dimensional linear subspaces, and the gap between submanifolds and linear subspaces must be closed to achieve superior performance. In this paper, we propose a new dimensionality reduction algorithm for tensor data based on manifold-regularized Tucker decomposition (MRTD for short), in which a manifold regularization term is added to the Tucker decomposition. Furthermore, unlike many similar algorithms that ignore the individual factor matrices of the Tucker decomposition by absorbing them into a single matrix, we propose an iterative solution to MRTD in which the matrices are computed from one another in turn. The matrices in a Tucker decomposition represent separate dimensionality reduction operations, one for each dimension (mode) of the tensor. MRTD is therefore a dimensionality reduction algorithm designed specifically for tensor data, not for vector data. Experimental results for MRTD and 5 other related state-of-the-art algorithms on 6 commonly used real-world datasets are presented, showing the better performance of MRTD.
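As a rough illustration of the PCA analogy above, the following sketch computes a plain, unregularized Tucker decomposition by truncated higher-order SVD in NumPy, with one factor matrix per mode. It is not the MRTD algorithm or its iterative solver. All function names (`unfold`, `mode_product`, `truncated_hosvd`, `reconstruct`, `laplacian_penalty`) are hypothetical, and the graph-Laplacian penalty is only one common form of manifold regularization, assumed here because the abstract does not state the exact regularizer.

```python
import numpy as np


def unfold(tensor, mode):
    """Mode-n unfolding: bring `mode` to the front and flatten the other axes."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)


def mode_product(tensor, matrix, mode):
    """Mode-n product: apply `matrix` (shape J x I_n) along axis `mode`."""
    moved = np.moveaxis(tensor, mode, -1)   # shape (..., I_n)
    result = moved @ matrix.T               # shape (..., J)
    return np.moveaxis(result, -1, mode)


def truncated_hosvd(tensor, ranks):
    """Unregularized Tucker decomposition via truncated higher-order SVD.

    Each factor holds the leading left singular vectors of one unfolding,
    i.e. a PCA-like linear subspace for that mode (without mean centering).
    """
    factors = []
    for mode, rank in enumerate(ranks):
        u, _, _ = np.linalg.svd(unfold(tensor, mode), full_matrices=False)
        factors.append(u[:, :rank])
    core = tensor
    for mode, u in enumerate(factors):
        core = mode_product(core, u.T, mode)  # project onto each subspace
    return core, factors


def reconstruct(core, factors):
    """Map the core tensor back to the original space."""
    out = core
    for mode, u in enumerate(factors):
        out = mode_product(out, u, mode)
    return out


def laplacian_penalty(embedding, weights):
    """Assumed regularizer: tr(Y^T L Y) with L = D - W for a symmetric graph W.

    Equals 0.5 * sum_ij W_ij * ||y_i - y_j||^2, so it is small when samples
    that are neighbors in the graph stay close in the reduced space.
    """
    laplacian = np.diag(weights.sum(axis=1)) - weights
    return np.trace(embedding.T @ laplacian @ embedding)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    data = rng.standard_normal((6, 5, 4))
    core, factors = truncated_hosvd(data, ranks=(3, 2, 4))
    error = np.linalg.norm(data - reconstruct(core, factors))
    print(core.shape, [u.shape for u in factors], error)
```

Each factor matrix reduces exactly one mode of the tensor, which is the sense in which the factor matrices act as separate dimensionality reduction operations. A manifold-regularized variant would trade the reconstruction error against a penalty such as `laplacian_penalty` evaluated on the reduced representations.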

This work was supported in part by the Natural Science Foundation of China through the Project “Research on Nonlinear Alignment Algorithm of Local Coordinates in Manifold Learning” under Grant 61773022.

Cite this article

Huang, H., Ma, Z. & Zhang, G. Dimensionality reduction of tensors based on manifold-regularized tucker decomposition and its iterative solution. Int. J. Mach. Learn. & Cyber. 13, 509–522 (2022). https://doi.org/10.1007/s13042-021-01422-5

DOI: https://doi.org/10.1007/s13042-021-01422-5