Abstract

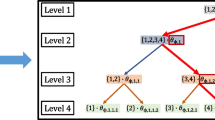

A model-independent sensitivity analysis for (deep) neural networks, bilateral sensitivity analysis (BiSA), is proposed to measure the relationship or dependency between neurons and layers. For any feed-forward neural network, including deep networks, we define the BiSA between a pair of layers as well as between any pair of neurons in different layers. This sensitivity quantifies the influence, or contribution, of any layer on any higher-level layer, and therefore provides a helpful tool for interpreting the learned model. The BiSA also measures the influence of any neuron on a neuron in a subsequent layer, which is critical for analyzing the relationship between neurons in different layers. The BiSA from any input to any output of the network then follows directly and assesses the strength of the connection between the inputs and the outputs. We apply BiSA to characterize well connectivity in oil fields, a very important and challenging problem in reservoir engineering. Given a network trained on water injection rate and liquid production rate data, the well connectivity can be efficiently discovered through BiSA. The empirical results verify the effectiveness of BiSA, and our comparison with existing methods demonstrates its robustness and superior performance. In addition, we investigate the effectiveness of BiSA for a feature selection task using a deep neural network. Experimental results on the MNIST data set show that BiSA performs satisfactorily on this task for a neural network with 1,536,640,000 parameters.
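The abstract does not state the BiSA formula. As a rough, hypothetical illustration of the general idea (how strongly a neuron in one layer influences a neuron in a later layer, and how input-to-output sensitivities can rank features), the sketch below uses a standard partial-derivative sensitivity: the mean absolute Jacobian entry over a set of samples, computed layer by layer with the chain rule. This is a stand-in technique, not the BiSA definition from the paper. The helper names `forward`, `layer_jacobian`, `sensitivity` and `rank_inputs`, the tanh hidden layers and the linear output layer are all assumptions made for illustration.

```python
import math
import random


def forward(weights, biases, x):
    """Return the activations of every layer (layer 0 is the input).
    Assumption: hidden layers use tanh and the last layer is linear."""
    acts = [list(x)]
    for k, (W, b) in enumerate(zip(weights, biases)):
        z = [sum(w * a for w, a in zip(row, acts[-1])) + bj for row, bj in zip(W, b)]
        last = k == len(weights) - 1
        acts.append(z if last else [math.tanh(v) for v in z])
    return acts


def layer_jacobian(weights, acts, l, m):
    """J[j][i] = d a_m[j] / d a_l[i] for one sample (l < m), via the chain rule."""
    n = len(acts[l])
    J = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for k in range(l, m):
        W = weights[k]
        last = k == len(weights) - 1
        # Derivative of layer k+1's activation: 1 for linear, 1 - tanh^2 otherwise.
        d = [1.0 if last else 1.0 - a * a for a in acts[k + 1]]
        J = [[d[i] * sum(W[i][p] * J[p][j] for p in range(len(J)))
              for j in range(n)] for i in range(len(W))]
    return J


def sensitivity(weights, biases, samples, l, m):
    """Hypothetical derivative-based sensitivity (not BiSA):
    S[i][j] = mean over samples of |d a_m[j] / d a_l[i]|; rows = layer l, cols = layer m."""
    S = None
    for x in samples:
        J = layer_jacobian(weights, forward(weights, biases, x), l, m)
        if S is None:
            S = [[0.0] * len(J) for _ in range(len(J[0]))]
        for i in range(len(J[0])):
            for j in range(len(J)):
                S[i][j] += abs(J[j][i]) / len(samples)
    return S


def rank_inputs(weights, biases, samples):
    """Rank input features by total input-to-output sensitivity, largest first."""
    S = sensitivity(weights, biases, samples, 0, len(weights))
    scores = [sum(row) for row in S]
    return sorted(range(len(scores)), key=lambda i: -scores[i]), scores


# Toy demo: a random 4-5-3 network in which input 3 has no outgoing weights.
random.seed(0)
sizes = [4, 5, 3]
weights = [[[random.gauss(0, 1) for _ in range(sizes[k])] for _ in range(sizes[k + 1])]
           for k in range(len(sizes) - 1)]
biases = [[0.0] * sizes[k + 1] for k in range(len(sizes) - 1)]
for row in weights[0]:
    row[3] = 0.0  # make input 3 irrelevant to every output
samples = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(50)]
order, scores = rank_inputs(weights, biases, samples)
print(order, [round(s, 3) for s in scores])
```

In this toy network the disconnected input receives a score of exactly zero and is ranked last. Under the assumption that a trained network's inputs are injector rates and its outputs are producer rates, the matrix returned by `sensitivity(weights, biases, samples, 0, len(weights))` could be read as an injector-by-producer connectivity table; calling it with an intermediate pair of layers gives a layer-to-layer table instead.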

Acknowledgements

This work was supported in part by the National Key Research and Development Program of China under Grant 2018AAA0100100; in part by the National Natural Science Foundation of China under Grants 62173345, 51722406, 61573018, 51874335 and ZR2018MF004; in part by the Major Scientific and Technological Projects of China National Petroleum Corporation (CNPC) under Grant ZD2019-183-008; in part by the Fundamental Research Funds for the Central Universities under Grants 20CX05002A, 20CX05012A and 18CX02097A; in part by the Joint Fund of the Science and Technology Department of Liaoning Province and the State Key Laboratory of Robotics under Grants 2021030195-JH3/103 and 2021-KF-22-07; and in part by the Shandong Provincial Natural Science Foundation under Grant JQ201808.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Zhang, H., Jiang, Y., Wang, J. et al. Bilateral sensitivity analysis: a better understanding of a neural network. Int. J. Mach. Learn. & Cyber. 13, 2135–2152 (2022). https://doi.org/10.1007/s13042-022-01511-z

DOI: https://doi.org/10.1007/s13042-022-01511-z