Abstract

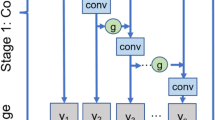

One of the dilemmas in forecasting is that, in some practical applications, data are collected at different frequencies. This paper treats mixed frequency data as a special kind of multi-source data, in which the data from each source are collected at a different sampling frequency. Based on this view, and drawing on the way neural networks process multi-source data, this paper proposes a temporal-attribute attention neural network (TAA-NN) model that works directly on raw mixed frequency data. The new method avoids the problems caused by frequency alignment, such as information loss and artificial assumptions about the data distribution. First, this paper proposes a new sliding window strategy for mixed frequency data that determines how much data from each source is fed into the model. Then, a group of convolutional neural networks (CNNs) with the same number of filters is used to extract or expand temporal features from the hidden states of each source (one sampling frequency), so that the mixed frequency data are fused at the feature layer. In addition, a temporal-attribute attention mechanism is proposed to mine essential information from the fused feature matrix along the temporal and attribute dimensions. Experiments on two simulated datasets and on real-world datasets demonstrate that TAA-NN outperforms the compared methods and provides a new solution for mixed frequency data forecasting.
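The three stages named in the abstract (per-source sliding windows, same-filter-count convolutions that bring every source to a common temporal length, and attention over the temporal and attribute axes of the fused matrix) can be illustrated with a minimal NumPy sketch. It is not the authors' TAA-NN implementation. The window rule (a source with `r` samples per low-frequency period contributes `window * r` observations), the non-overlapping strided convolution used only in the downsampling ("extract") direction, and the two softmax scorings are illustrative assumptions; all function names and the random weights are hypothetical, and the recurrent hidden-state layer and training are omitted.

```python
import numpy as np


def mixed_frequency_windows(series, ratios, window):
    """Cut aligned windows from sources sampled at different rates.

    Assumption: source k has ratios[k] samples per low-frequency period and
    len(series[k]) == T * ratios[k], so period t covers [t*r, (t+1)*r).
    Each window spans `window` periods, i.e. window * r samples of source k.
    """
    T = len(series[0]) // ratios[0]
    out = []
    for x, r in zip(series, ratios):
        assert len(x) == T * r, "every source must cover the same T periods"
        wins = [x[(t - window + 1) * r:(t + 1) * r] for t in range(window - 1, T)]
        out.append(np.stack(wins))  # shape (T - window + 1, window * r)
    return out


def strided_conv(x, kernels, stride):
    """1-D convolution with F filters whose kernel size equals the stride.

    x: (n,) window; kernels: (F, stride). Returns (n // stride, F): one row
    per low-frequency period, F features per row, for every source.
    """
    n = len(x) // stride
    patches = x[: n * stride].reshape(n, stride)  # non-overlapping patches
    return np.tanh(patches @ kernels.T)           # (n, F)


def fuse(windows_at_t, kernel_bank):
    """Map each source to (window, F) and concatenate along the attribute axis."""
    feats = [strided_conv(w, k, k.shape[1]) for w, k in zip(windows_at_t, kernel_bank)]
    return np.concatenate(feats, axis=1)          # (window, K * F)


def softmax(z):
    e = np.exp(z - z.max())                       # shift for numerical stability
    return e / e.sum()


def temporal_attribute_attention(H, w_time, w_attr):
    """H: (L, D). Weight time steps and attribute columns, return a D-vector."""
    alpha = softmax(H @ w_time)                   # (L,) weights over time steps
    beta = softmax(H.T @ w_attr)                  # (D,) weights over attributes
    context = (alpha @ H) * beta                  # time-pooled, attribute-reweighted
    return context, alpha, beta


rng = np.random.default_rng(0)
T, window, F = 12, 4, 3
ratios = [1, 3]                                   # e.g. quarterly target, monthly source
series = [rng.normal(size=T * r) for r in ratios]
wins = mixed_frequency_windows(series, ratios, window)
kernel_bank = [rng.normal(size=(F, r)) for r in ratios]  # hypothetical random filters
H = fuse([w[-1] for w in wins], kernel_bank)             # most recent window
context, alpha, beta = temporal_attribute_attention(
    H, rng.normal(size=H.shape[1]), rng.normal(size=H.shape[0]))
print(H.shape, context.shape)                     # prints (4, 6) (6,)
```

With a quarterly series and a monthly series (r = 3), each four-quarter window draws 4 and 12 observations respectively, and both are mapped to a 4 × 3 feature block before concatenation, so neither source has to be interpolated or aggregated to the other's frequency beforehand.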

Additional information

This work was jointly supported by the National Natural Science Foundation of China (62136002, 61876027 and 61751312) and the Natural Science Foundation of Chongqing (cstc2019jcyj-cxttX0002).

Cite this article

Wu, P., Yu, H., Hu, F. et al. A temporal-attribute attention neural network for mixed frequency data forecasting. Int. J. Mach. Learn. & Cyber. 13, 2519–2531 (2022). https://doi.org/10.1007/s13042-022-01541-7

DOI: https://doi.org/10.1007/s13042-022-01541-7