Abstract

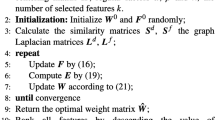

Multi-label feature selection is an active research topic in the processing of high-dimensional multi-label data. Some multi-label feature selection models rely on manifold graphs, but because their graph matrix is fixed, model performance suffers, and learning a better underlying graph matrix remains an open problem. To address this, a sparse multi-label feature selection method based on dynamic graph manifold learning (DMMFS) is proposed. In this method, a linear mapping takes the sample space to a pseudo-label space that carries the base manifold structure of the real labels. A dynamic graph matrix is then constructed using the Frobenius norm, and the weight matrix and the dynamic graph matrix are made to constrain each other through a feature manifold. Finally, DMMFS is compared experimentally with seven recent methods on eight multi-label benchmark data sets. The results indicate that DMMFS outperforms the compared methods.
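To make the general idea of manifold-regularized feature selection concrete, the following is a minimal sketch and not the DMMFS objective itself, which the abstract does not spell out. It assumes a simplified stand-in: a fixed k-nearest-neighbor graph built on the real labels (rather than a learned dynamic graph), a ridge penalty in place of a sparsity-inducing norm, and a closed-form solution of \(\min_W \Vert XW - Y\Vert_F^2 + \alpha\,\mathrm{tr}(W^\top X^\top L X W) + \beta \Vert W\Vert_F^2\). The function names and the parameters `alpha`, `beta`, and `k` are hypothetical.

```python
import numpy as np


def knn_graph_laplacian(Y, k=3):
    """Unnormalized Laplacian of a symmetric kNN graph over the rows of Y.

    Assumption: a fixed 0/1 kNN graph on the label vectors; DMMFS instead
    learns its graph matrix dynamically.
    """
    Y = np.asarray(Y, dtype=float)
    n = Y.shape[0]
    # Pairwise squared Euclidean distances between label vectors.
    sq = np.sum(Y ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (Y @ Y.T)
    np.fill_diagonal(d2, np.inf)  # a sample is never its own neighbor
    # Mark the k nearest neighbors of every sample.
    idx = np.argsort(d2, axis=1)[:, :k]
    S = np.zeros((n, n))
    S[np.repeat(np.arange(n), k), idx.ravel()] = 1.0
    S = np.maximum(S, S.T)  # symmetrize the adjacency matrix
    return np.diag(S.sum(axis=1)) - S


def rank_features(X, Y, alpha=1.0, beta=1.0, k=3):
    """Rank features by the row norms of a manifold-regularized linear map.

    Solves (X^T X + alpha X^T L X + beta I) W = X^T Y, the stationarity
    condition of the simplified objective named in the lead-in.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    L = knn_graph_laplacian(Y, k)
    d = X.shape[1]
    A = X.T @ X + alpha * (X.T @ L @ X) + beta * np.eye(d)
    W = np.linalg.solve(A, X.T @ Y)
    # A feature matters more when its row of W has a larger l2 norm.
    scores = np.linalg.norm(W, axis=1)
    return np.argsort(-scores), W
```

Calling `rank_features(X, Y)` with an `n × d` feature matrix and an `n × q` binary label matrix returns feature indices ordered from most to least important, together with the weight matrix. A method with a dynamic graph would alternate between updating `W` and updating the graph instead of solving once.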

References

Gui J, Sun ZN, Jis W et al (2016) Feature selection based on structured sparsity: a comprehensive study. IEEE Trans Neural Netw Learn Syst 28(7):1–18

Paniri M, Dowlatshahi MB, Nezamabadi-Pour H (2019) MLACO: a multi-label feature selection algorithm based on ant colony optimization. Knowl-Based Syst 192:105285

Kashef S, Nezamabadi-Pour H, Nikpour B (2018) Multi-label feature selection: a comprehensive review and guiding experiments. Wiley Interdiscip Rev Data Min Knowl Discov 8(2):12–40

Ding CC, Zhao M, Lin J et al (2019) Multi-objective iterative optimization algorithm based optimal wavelet filter selection for multi-fault diagnosis of rolling element bearings. ISA Trans 82:199–215

Labani M, Moradi P, Ahmadizar F et al (2018) A novel multivariate filter method for feature selection in text classification problems. Eng Appl Artif Intell 70:25–37

Yao C, Liu YF, Jiang B et al (2017) LLE score: a new filter-based unsupervised feature selection method based on nonlinear manifold embedding and its application to image recognition. IEEE Trans Image Process 26(11):5257–5269

Gonzalez J, Ortega J, Damas M et al (2019) A new multi-objective wrapper method for feature selection-accuracy and stability analysis for BCI. Neurocomputing 333:407–418

Swati J, Hongmei H, Karl J (2018) Information gain directed genetic algorithm wrapper feature selection for credit rating. Appl Soft Comput 69:541–553

Maldonado S, López J (2018) Dealing with high-dimensional class-imbalanced datasets: embedded feature selection for SVM classification. Appl Soft Comput 67:94–105

Kong YC, Yu TW (2018) A graph-embedded deep feedforward network for disease outcome classification and feature selection using gene expression data. Bioinformatics 34(21):3727–3737

Zhang Y, Ma YC (2022) Non-negative multi-label feature selection with dynamic graph constraints. Knowl-Based Syst 238:107924107924

Li XP, Member S, Wang YD et al (2020) A survey on sparse learning models for feature selection. IEEE Trans Cybern 99:1–19

Tang C, Liu XW, Zhu XZ et al (2020) Feature selective projection with low-rank embedding and dual laplacian regularization. IEEE Trans Knowl Data Eng 32(9):1747–1760

Tang C, Zheng X, Liu XW et al (2021) Cross-view locality preserved diversity and consensus learning for multi-view unsupervised feature selection. IEEE Trans Knowl Data Eng. https://doi.org/10.1109/TKDE.2020.3048678

Zhang Y, Ma YC, Yang XF (2022) Multi-label feature selection based on logistic regression and manifold learning. Appl Intell. https://doi.org/10.1007/s10489-021-03008-8

Lee J, Kim DW (2013) Feature selection for multi-label classification using multivariate mutual information. Pattern Recogn Lett 34(3):349–357

Lee J, Kim DW (2015) Fast multi-label feature selection based on information-theoretic feature ranking—ScienceDirect. Pattern Recogn 48(9):2761–2771

Lee J, Kim DW (2017) SCLS: multi-label feature selection based on scalable criterion for large label set. Pattern Recogn 66:342–352

Gao WF, Hu L, Zhang P (2018) Class-specific mutual information variation for feature selection. Pattern Recogn 2:328–339

Lee J, Kim DW (2018) Scalable multi-label learning based on feature and label dimensionality reduction. Complexity 23:1–15

Zhang P, Gao WF, Hu JC et al (2020) Multi-label feature selection based on high-order label correlation assumption. Entropy 22(7):797

Song XY, Li JX, Tang YF et al (2021) JKT: a joint graph convolutional network based deep knowledge tracing. Inf Sci 580:510–523

Song XY, Li JX, Lei Q et al (2022) Bi-CLKT: bi-graph contrastive learning based knowledge tracing. Knowl-Based Syst 241:108274

Hu XC, Shen YH, Pedrycz W et al (2021) Identification of fuzzy rule-based models with collaborative fuzzy clustering. IEEE Trans Cybern 2:1–14

Liu KY, Yang XB, Fujita H et al (2019) An efficient selector for multi-granularity attribute reduction. Inf Sci 505:457–472

Chen Y, Liu KY, Song JJ et al (2020) Attribute group for attribute reduction. Inf Sci 535:64–80

Jing YG, Li TR, Fujita H et al (2017) An incremental attribute reduction approach based on knowledge granularity with a multi-granulation view. Inf Sci 411:23–38

Kawano S (2013) Semi-supervised logistic discrimination via labeled data and unlabeled data from different sampling distributions. Stat Anal Data Min 6(6):472–481

Kawano S, Misumi T, Konishi S (2012) Semi-supervised logistic discrimination via graph-based regularization. Neural Process Lett 36(3):203–216

Jian L, Li JD, Shu K et al (2016) Multi-label informed feature selection. International Joint Conference on Artificial Intelligence. AAAI Press, 1627–1633

Huang R, Wu ZJ (2021) Multi-label feature selection via manifold regularization and dependence maximization. Pattern Recogn 120:108149

Gao WF, Li YH, Hu L (2021) Multi-label feature selection with constrained latent structure shared term. IEEE Trans Neural Netw Learn Syst 2:1–10

Mohapatra P, Chakravarty S, Dash PK (2016) Microarray medical data classification using kernel ridge regression and modified cat swarm optimization based gene selection system. Swarm Evol Comput 28:144–160

Fan YL, Liu JH, Weng W et al (2021) Multi-label feature selection with constraint regression and adaptive spectral graph. Knowl-Based Syst 212:106621

Tang C, Liu XW, Li MM et al (2018) Robust unsupervised feature selection via dual self-representation and manifold regularization. Knowl-Based Syst 145:109–120

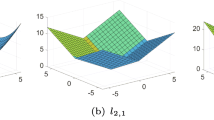

Nie FP, Huang H, Cai X et al (2010) Efficient and robust feature selection via joint \(L_{2,1}\)-norms minimization. International Conference on Neural Information Processing Systems. Curran Associates Inc. 1813–1821

Hashemi A, Dowlatshahi M, Nezamabadi-pour H (2020) Mfs-mcdm: multi-label feature selection using multi-criteria decision making. Knowl-Based Syst 206:106365

Lin Y, Hu Q, Liu J et al (2015) Multi-label feature selection based on maxdependency and min-redundancy. Neurocomputing 168:92–103

Zhang ML, Zhou ZH (2007) ML-KNN: a lazy learning approach to multi-label learning. Pattern Recogn 40(7):2038–2048

Dougherty J, Kohavi R, Sahami M et al (1995) Supervised and unsupervised discretization of continuous features. Mach Learn Proc 2:194–202

Dunn OJ (1961) Multiple comparisons among means. Publ Am Stat Assoc 56(293):52–64

Friedman M (1940) A comparison of alternative tests of significance for the problem of m rankings. Ann Math Stat 11(1):86–92

Acknowledgements

This work was supported by the Natural Science Foundation of China (61976130), the Key Research and Development Project of Shaanxi Province (2018KW-021), and the Natural Science Foundation of Shaanxi Province (2020JQ-923).

Ethics declarations

Conflict of interest

The authors declare that they have no conflicts of interest regarding this work, and no commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, Y., Ma, Y. Sparse multi-label feature selection via dynamic graph manifold regularization. Int. J. Mach. Learn. & Cyber. 14, 1021–1036 (2023). https://doi.org/10.1007/s13042-022-01679-4

DOI: https://doi.org/10.1007/s13042-022-01679-4