Abstract

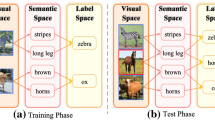

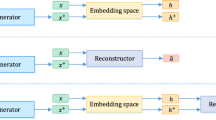

This paper develops a novel feature generation framework for zero-shot learning (ZSL) that recognizes both fine-grained seen and unseen classes by constructing embeddings from synthesized features. The underlying observation is that the original feature space fails to capture the discriminative information needed for unseen classes. We first introduce a contrastive visual-semantic embedding (CVSE) approach, which integrates contrastive learning with semantic embedding to obtain both visual and semantic discriminative information for feature generation. Specifically, we propose to enforce contrastive learning on both real seen-class samples and synthetic unseen-class samples under a contrastive semantic embedding-based feature generation framework. The synthesized unseen-class features, together with the synthesized seen-class features, are transformed into embedding features and used during classification to reduce ambiguities among semantics. We conduct experiments on four publicly available datasets (AWA1, AWA2, CUB, and aPaY), showing that our method can outperform the state of the art by a large margin on most of them.
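To make the idea of contrastive learning over a mix of real and synthesized features concrete, the following is a minimal sketch of a generic supervised contrastive loss in NumPy. It is not the CVSE objective itself, whose exact form is not given here; the function name `supervised_contrastive_loss`, the `temperature` value, and the toy two-dimensional features are all assumptions chosen for illustration.

```python
import numpy as np

def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Generic supervised contrastive loss (illustrative, not the paper's loss).

    Embeddings sharing a label are treated as positives, all others as
    negatives. Anchors without any positive are skipped; the batch is
    assumed to contain at least one anchor that has a positive.
    """
    embeddings = np.asarray(embeddings, dtype=float)
    labels = np.asarray(labels)
    # L2-normalize so that dot products are cosine similarities.
    z = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sim = z @ z.T / temperature
    self_mask = np.eye(len(labels), dtype=bool)
    sim = np.where(self_mask, -np.inf, sim)  # exclude self-pairs
    # Row-wise log-softmax over all other samples in the batch.
    row_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    pos_count = pos_mask.sum(axis=1)
    valid = pos_count > 0
    pos_log_prob = np.where(pos_mask, log_prob, 0.0).sum(axis=1)
    return float(-(pos_log_prob[valid] / pos_count[valid]).mean())

# Toy batch: stand-ins for real seen-class and synthesized unseen-class features.
rng = np.random.default_rng(0)
real_seen = rng.normal(loc=[5.0, 0.0], scale=0.1, size=(4, 2))
synthetic_unseen = rng.normal(loc=[0.0, 5.0], scale=0.1, size=(4, 2))
features = np.vstack([real_seen, synthetic_unseen])
class_ids = np.array([0, 0, 0, 0, 1, 1, 1, 1])
print(supervised_contrastive_loss(features, class_ids))
```

In this sketch the loss is small when same-class embeddings cluster together and grows when labels are inconsistent with the geometry, which is the property a contrastive embedding relies on to separate classes.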

Acknowledgements

This work was supported in part by the Fundamental Research Funds for the Central Universities (2021ZY86) and the Natural Science Foundation of China (NSFC) (61703046).

About this article

Cite this article

Wang, H., Zhang, T. & Zhang, X. Contrastive embedding-based feature generation for generalized zero-shot learning. Int. J. Mach. Learn. & Cyber. 14, 1669–1681 (2023). https://doi.org/10.1007/s13042-022-01719-z

DOI: https://doi.org/10.1007/s13042-022-01719-z