Abstract

How can a rational player manipulate a myopic best response player in a repeated two-player game? We show that in games with strategic substitutes or strategic complements the optimal control strategy is monotone in the initial action of the opponent, in time periods, and in the discount rate. As an interesting example outside this class of games we present a repeated “textbook-like” Cournot duopoly with nonnegative prices and show that the optimal control strategy involves a cycle.


Notes

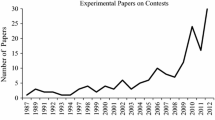

The game was repeated over 40 rounds. The participant played the cycle of quantities (108, 70, 54, 42). This cycle yields an average payoff of 1520, which is well above the Stackelberg leader payoff of 1458. In this game, the Stackelberg leader’s quantity is 54, the follower’s quantity is 27 (payoff 728), and the Cournot Nash equilibrium quantity is 36 (payoff 1296). The computer is programmed to play a myopic best response with some noise. The x-axis in Fig. 1 indicates the rounds of play, the y-axis the quantities. The lower time series depicts the computer’s sequence of actions. The upper time series shows the participant’s quantities. See Duersch et al. [15] for details of the game and the experiment.

In fact, the average payoff of the optimal cycle is 1522, only a minor improvement over the average payoff (1520) of the cycle played by the participant.
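The average payoff of the participant's cycle can be checked with a short computation. The sketch below is only illustrative: it assumes an inverse demand of max(109 − Q, 0) and a constant unit cost of 1, a parameterization that is not stated in these notes but reproduces the Cournot and Stackelberg payoffs quoted here. It also assumes that the computer best-responds without noise to the participant's previous quantity. All function names are hypothetical.

```python
def price(total_quantity):
    """Assumed inverse demand with a nonnegative price."""
    return max(109 - total_quantity, 0)


def payoff(own, other):
    """Per-period profit under an assumed constant unit cost of 1."""
    return (price(own + other) - 1) * own


def best_response(opponent_quantity):
    """Myopic best response to the opponent's last quantity (noise ignored)."""
    return max((108 - opponent_quantity) / 2, 0)


def average_cycle_payoff(cycle):
    """Average manipulator payoff when the cycle is repeated indefinitely."""
    total = 0.0
    for t, x in enumerate(cycle):
        previous = cycle[t - 1]  # index -1 wraps around to the end of the cycle
        total += payoff(x, best_response(previous))
    return total / len(cycle)


participant_cycle = (108, 70, 54, 42)
print(average_cycle_payoff(participant_cycle))  # 1520.0
print(payoff(54, best_response(54)))            # 1458.0, Stackelberg leader
print(payoff(36, 36))                           # 1296, Cournot Nash equilibrium
```

Under these assumptions the first period of the cycle yields a loss (the price is driven to zero), which is then more than recovered in the following three periods while the puppet produces little.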

In Sect. 4 we explain why we do not consider here multi-dimensional strategy sets.

Note that throughout the analysis we do not allow the manipulator to suitably choose the initial action of the puppet.

As a reviewer rightly points out, this would be problematic if the manipulator does not know the learning heuristic used by the puppet.

As a reviewer pointed out, we could have stated the model just in terms of assumptions on m and a continuous best-response function b. This might be even more realistic as the manipulator may observe the opponent’s best responses but not necessarily the opponent’s payoff function.

Amir ([1], Theorem 2 (ii)) does not state explicitly that the one-period value function is increasing and \(X_y\) is expanding. Yet, this property is required in the proof.

This finding that an optimal control strategy involves strictly dominated actions is not restricted to games for which monotone differences differ among players.

Since we look at cycles (of finite length), we can neglect discounting in the calculations below.

To save space, we write out only the objective functions for \(n = 1, 2, 3\).

Interestingly, the denominator in the linear factor in \(s_n\) is identical to the numerator of the linear factor in \(s_{n+1}\).

One reviewer suggested that if the puppet uses fictitious play rather than myopic best response, then it is much more difficult to manipulate with a cycle. Fictitious play is an uncoupled learning heuristic. Moreover, in our Cournot example, the Stackelberg outcome is unique. Thus, it follows from Schipper [40] that the payoff to the dynamic optimizer would be strictly above the Nash equilibrium payoff. So fictitious play can be exploited by a patient dynamic optimizer in our Cournot example, although the strategy may not be cyclic. At present, the form of the optimal manipulation strategy against a fictitious player is not clear to us and is left for future research.

A real-valued function f on a lattice X is supermodular on X if \(f(x'' \vee x') - f(x'') \ge f(x') - f(x'' \wedge x')\) for all \(x'', x' \in X\) (see [45], p. 43).

References

Amir R (1996a) Sensitivity analysis of multisector optimal economic dynamics. J Math Econ 25:123–141

Amir R (1996b) Cournot oligopoly and the theory of supermodular games. Games Econ Behav 15:132–148

Aoyagi M (1996) Evolution of beliefs and the Nash equilibrium of normal form games. J Econ Theory 70:444–469

Banerjee A, Weibull JW (1995) Evolutionary selection and rational behavior. In: Kirman A, Salmon M (eds) Learning and rationality in economics. Blackwell, Oxford, pp 343–363

Benhabib J, Nishimura K (1985) Competitive equilibrium cycles. J Econ Theory 35:284–306

Berge C (1963) Topological spaces, Dover edition, 1997. Dover Publications Inc, Mineola

Bertsekas DP (2005) Dynamic programming and optimal control, vol I & II, 3rd edn. Athena Scientific, Belmont

Boldrin M, Montrucchio L (1986) On the indeterminacy of capital accumulation paths. J Econ Theory 40:26–29

Bryant J (1983) A simple rational expectations Keynes-type coordination model. Q J Econ 98:525–528

Bulavsky VA, Kalashnikov VV (1996) Equilibria in generalized Cournot and Stackelberg markets. Z Angew Math Mech 76(S3):387–388

Camerer CF, Ho T-H, Chong J-K (2002) Sophisticated experience-weighted attraction learning and strategic teaching in repeated games. J Econ Theory 104:137–188

Chong J-K, Camerer CF, Ho T-H (2006) A learning-based model of repeated games with incomplete information. Games Econ Behav 55:340–371

Cournot A (1838) Researches into the mathematical principles of the theory of wealth. MacMillan, London

Dubey P, Haimanko O, Zapechelnyuk A (2006) Strategic substitutes and complements, and potential games. Games Econ Behav 54:77–94

Duersch P, Kolb A, Oechssler J, Schipper BC (2010) Rage against the machines: how subjects learn to play against computers. Econ Theory 43:407–430

Duersch P, Oechssler J, Schipper BC (2012) Unbeatable imitation. Games Econ Behav 76:88–96

Duersch P, Oechssler J, Schipper BC (2014) When is tit-for-tat unbeatable? Int J Game Theory 43:25–36

Dutta PK (1995) A folk theorem for stochastic games. J Econ Theory 66:1–32

Droste E, Hommes C, Tuinstra J (2002) Endogenous fluctuations under evolutionary pressure in Cournot competition. Games Econ Behav 40:232–269

Ellison G (1997) Learning from personal experience: one rational guy and the justification of myopia. Games Econ Behav 19:180–210

Fudenberg D, Kreps DM, Maskin ES (1990) Repeated games with long-run short-run players. Rev Econ Stud 57:555–573

Fudenberg D, Levine DK (1998) The theory of learning in games. The MIT Press, Cambridge

Fudenberg D, Levine DK (1994) Efficiency and observability with long-run and short-run players. J Econ Theory 62:103–135

Fudenberg D, Levine DK (1989) Reputation and equilibrium selection in games with a patient player. Econometrica 57:759–778

Güth W, Peleg B (2001) When will payoff maximization survive? An indirect evolutionary analysis. J Evol Econ 11:479–499

Hart S, Mas-Colell A (2013) Simple adaptive strategies: from regret-matching to uncoupled dynamics. World Scientific Publishing, Singapore

Hart S, Mas-Colell A (2006) Stochastic uncoupled dynamics and Nash equilibrium. Games Econ Behav 57:286–303

Hehenkamp B, Kaarbøe O (2006) Imitators and optimizers in a changing environment. J Econ Dyn Control 32:1357–1380

Heifetz A, Shannon C, Spiegel Y (2007) What to maximize if you must. J Econ Theory 133:31–57

Hyndman K, Ozbay EY, Schotter A, Ehrblatt WZ (2012) Convergence: an experimental study of teaching and learning in repeated games. J Eur Econ Assoc 10:573–604

Juang WT (2002) Rule evolution and equilibrium selection. Games Econ Behav 39:71–90

Kordonis I, Charalampidis AC, Papavassilopoulos GP (2018) Pretending in dynamic games: alternative outcomes and application to electricity markets. Dyn Games Appl 8:844–873

Kukushkin NS (2004) Best response dynamics in finite games with additive aggregation. Games Econ Behav 48:94–110

Milgrom P, Roberts J (1990) Rationalizability, learning, and equilibrium in games with strategic complementarities. Econometrica 58:1255–1277

Milgrom P, Shannon C (1994) Monotone comparative statics. Econometrica 62:157–180

Monderer D, Shapley LS (1996) Potential games. Games Econ Behav 14:124–143

Osborne M (2004) An introduction to game theory. Oxford University Press, Oxford

Puterman ML (1994) Markov decision processes. Discrete stochastic dynamic programming. Wiley, New York

Rand D (1978) Exotic phenomena in games and duopoly models. J Math Econ 5:173–184

Schipper BC (2017) Strategic teaching and learning in games. The University of California, Davis, Davis

Schipper BC (2009) Imitators and optimizers in Cournot oligopoly. J Econ Dyn Control 33:1981–1990

Stokey NL, Lucas RE, Prescott EC (1989) Recursive methods in economic dynamics. Harvard University Press, Cambridge

Terracol A, Vaksmann J (2009) Dumbing down rational players: learning and teaching in an experimental game. J Econ Behav Organ 70:54–71

Topkis D (1978) Minimizing a submodular function on a lattice. Oper Res 26:305–321

Topkis D (1998) Supermodularity and complementarity. Princeton University Press, Princeton

Van Huyck J, Battalio R, Beil R (1990) Tacit coordination games, strategic uncertainty and coordination failure. Am Econ Rev 80:234–248

Vives X (1999) Oligopoly pricing. Old ideas and new tools. Cambridge University Press, Cambridge

Walker JM, Gardner R, Ostrom E (1990) Rent dissipation in a limited access Common-Pool resource: experimental evidence. J Environ Econ Manag 19:203–211

Young P (2013) Strategic learning and its limits. Oxford University Press, Oxford

Acknowledgements

I thank Rabah Amir, the associate editor, and three anonymous reviewers as well as participants at the 2007 Stony Brook Game Theory Festival for helpful comments. I also thank Zhong Sichen for very able research assistance. This work developed from a joint experimental project with Dürsch et al. [15].


Additional information

Financial support by NSF CCF-1101226 is gratefully acknowledged.

A Proofs and Auxiliary Results


Proof of Lemma 1

If p is upper semicontinuous in y on Y, then by the Weierstrass Theorem an argmax exists. By the Theorem of the Maximum [6], the argmax correspondence is upper hemicontinuous and compact-valued in x. Since p is strictly quasiconcave, the argmax is unique. Hence the upper hemicontinuous best-response correspondence is a continuous best-response function. Since m is upper semicontinuous and b is continuous, we have that \({\hat{m}}\) is upper semicontinuous. \(\square \)

Proof of Lemma 2

Under the conditions of the Lemma we have by Lemma 1 that \({\hat{m}}\) is upper semicontinuous on \(X \times X\). By the Theorem of the Maximum [6], \(M_1\) is upper semicontinuous on X. If \(M_{n-1}\) is upper semicontinuous on X and \({\hat{m}}\) is upper semicontinuous on \(X \times X\), then since \(\delta \ge 0\), \({\hat{m}}(x', x) + \delta M_{n-1}(x')\) is upper semicontinuous in \(x'\) on X. Again, by the Theorem of the Maximum, \(M_n\) is upper semicontinuous on X. Thus by induction \(M_n\) is upper semicontinuous on X for any n.

Let L be an operator on the space of bounded upper semicontinuous functions on X defined by \(L M_{\infty } (x) = \sup _{x' \in X_{x}} \{{\hat{m}}(x', x) + \delta M_{\infty }(x') \}\). This function is upper semicontinuous by the Theorem of the Maximum. Hence L maps bounded upper semicontinuous functions to bounded upper semicontinuous functions. L is a contraction mapping by Blackwell’s sufficiency conditions [42]. Since the space of bounded upper semicontinuous functions is a complete subset of the complete metric space of bounded functions with the \(\sup \) distance, it follows from the Contraction Mapping Theorem that L has a unique fixed point \(M_{\infty }\) which is upper semicontinuous on X. \(\square \)
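The contraction property can be illustrated numerically. The following is a minimal sketch and not the construction used in the proof: it applies the operator L on a finite grid, takes \(X_x\) to be the whole grid for every x, and uses an assumed Cournot payoff (inverse demand max(109 − Q, 0), unit cost 1) for \({\hat{m}}\). Starting from \(M_0 \equiv 0\), successive iterates should approach each other in the sup distance at least at rate \(\delta \). All names are hypothetical.

```python
def m_hat(z, x):
    """Reduced payoff m(z, b(x)) for an assumed Cournot duopoly."""
    y = max((108 - x) / 2, 0)             # puppet's myopic best response to x
    return (max(109 - z - y, 0) - 1) * z  # nonnegative price, unit cost 1


def bellman(values, grid, delta):
    """One application of L: (LM)(x) = max_z { m_hat(z, x) + delta * M(z) }."""
    return [max(m_hat(z, x) + delta * values[j] for j, z in enumerate(grid))
            for x in grid]


def sup_distance(f, g):
    """Sup distance between two functions stored as lists over the grid."""
    return max(abs(a - b) for a, b in zip(f, g))


grid = list(range(0, 110, 2))  # coarse grid standing in for X
delta = 0.9
values = [0.0] * len(grid)     # M_0 = 0
distances = []
for _ in range(30):
    new_values = bellman(values, grid, delta)
    distances.append(sup_distance(new_values, values))
    values = new_values

# Each application of L shrinks the sup distance by at least the factor delta.
for previous, current in zip(distances, distances[1:]):
    assert current <= delta * previous + 1e-6
```

On a finite grid the maximum is always attained, so the sketch sidesteps the semicontinuity issues that the Lemma addresses; it only illustrates why the iterates converge to a unique fixed point.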

Proof of Lemma 3

We state the proof just for one case. The proofs of the other cases follow analogously.

- (i) If p has strongly decreasing differences in (y, x) on \(Y \times X\), then by Topkis [45] b is strictly decreasing in x on X. If m has strongly decreasing differences in (x, y) on \(X \times Y\), \({\hat{m}}(\cdot , \cdot ) = m(\cdot , b(\cdot ))\) must have strongly increasing differences on \(X \times X\).

- (ii) If p has decreasing differences in (y, x) on \(Y \times X\), then by Topkis [45] b is decreasing in x on X. Hence, if m has negative externalities, \({\hat{m}}(x', x) = m(x', b(x))\) must be increasing in x.
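Both parts of the argument can be checked on a simple example. The sketch below assumes a linear Cournot duopoly without price truncation, \(m(x, y) = (108 - x - y)x\) and \(p(y, x) = (108 - x - y)y\), so that \(b(x) = (108 - x)/2\) on \(X = [0, 108]\); these payoffs and all function names are illustrative assumptions. It verifies on a grid that m has decreasing differences while \({\hat{m}}\) has increasing differences and is increasing in x.

```python
from itertools import combinations


def m(x, y):
    """Assumed manipulator payoff: linear Cournot, no price truncation."""
    return (108 - x - y) * x


def b(x):
    """Best response for the assumed symmetric payoff p(y, x) = (108 - x - y) * y."""
    return (108 - x) / 2


def m_hat(z, x):
    """Reduced payoff of playing z when the puppet best-responds to x."""
    return m(z, b(x))


def has_increasing_differences(f, grid):
    """Check f(z2, x2) - f(z1, x2) >= f(z2, x1) - f(z1, x1) for z2 > z1, x2 > x1."""
    pairs = list(combinations(grid, 2))  # (low, high) pairs from a sorted grid
    return all(f(z2, x2) - f(z1, x2) >= f(z2, x1) - f(z1, x1)
               for z1, z2 in pairs for x1, x2 in pairs)


grid = list(range(0, 109, 12))
increasing_in_x = all(m_hat(z, x2) >= m_hat(z, x1)
                      for z in grid for x1, x2 in combinations(grid, 2))

print(has_increasing_differences(m_hat, grid))  # True
print(has_increasing_differences(m, grid))      # False
print(increasing_in_x)                          # True
```

In this example \({\hat{m}}(z, x) = (54 - z + x/2)z\), so the cross-partial derivative is 1/2 > 0 even though the cross-partial derivative of m is −1: composing with a decreasing best response flips the sign.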

Proof of Proposition 1 (ii)

The proofs of the first two lines in the table of Proposition 1 (ii) follow directly from the previous Lemmata and Amir ([1], Theorem 2 (i)). The last two lines require a proof.

Line 3 (resp. Line 4): If m has positive externalities, and both m and p have decreasing differences (resp. m has negative externalities, and both m and p have increasing differences), and \(X_y\) is contracting, then \({\bar{s}}_{n+1} \le {\bar{s}}_n\) and \(\underline{s}_{n+1} \le \underline{s}_n\).

We first show that in this case \(M_n(x)\) has decreasing differences in (n, x) on \(\mathbb {N} \times X\). We proceed by induction by showing that for \(x'' \ge x'\) and for all \(n \in \mathbb {N}\),

\[M_n(x'') - M_{n-1}(x'') \le M_n(x') - M_{n-1}(x'). \qquad (6)\]

For \(n = 1\), inequality (6) reduces to \(M_1(x'') \le M_1(x')\) since \(M_0 \equiv 0\). Since m has positive externalities and p has decreasing differences (resp. m has negative externalities and p has increasing differences), and \(X_y\) is contracting, we have by Lemma 4, line 3 (resp. line 4), that \(M_n\) is decreasing on X. Hence, the claim follows for \(n = 1\).

Next, suppose that inequality (6) holds for all \(n \in \{1, 2, \ldots , k - 1\}\). We have to show that it holds for \(n = k\). Consider the maximand in Eq. (4), i.e., \({\hat{m}}(z, x) + \delta M_{k - 1}(z)\).

Since both m and p have decreasing differences (resp. both m and p have increasing differences), we have by Lemma 3 (i), line 2 (resp. line 1), that \({\hat{m}}(z, x)\) has increasing differences in (z, x). \(M_n(z)\) has decreasing differences in (n, z) on \(\{1, 2, \ldots , k - 1\} \times X\) by the induction hypothesis. Hence \(M_n(z)\) has increasing differences in \((-n, z)\) on \(\{-(k - 1), \ldots , -2, -1\} \times X\). We conclude that the maximand is supermodular in \((z, x, -n)\) on \(X_y \times X \times \{-(k - 1), \ldots , -2, -1\}\) (see Footnote 16). By Topkis ([45], Theorem 2.7.6), \(M_n(x)\) has increasing differences in \((x, -n)\) on \(X \times \{-k, -(k - 1), \ldots , -2, -1\}\). Thus it has decreasing differences in (x, n) on \(X \times \{1, 2, \ldots , k\}\). This proves the claim that \(M_n(x)\) has decreasing differences in (n, x) on \(\mathbb {N} \times X\).

Finally, the dual result for decreasing differences to Topkis ([45], Theorem 2.8.3 (a)) implies that both \({\bar{s}}_{n+1} \le {\bar{s}}_n\) and \(\underline{s}_{n+1} \le \underline{s}_n\). This completes the proof of line 3 (resp. line 4) in Proposition 1 (ii). \(\square \)

Auxiliary Result to Proposition 1 (ii). Proposition 1 (ii) makes no mention of four other cases in which the monotone differences of m and p may differ. The following proposition shows that analogous results for those cases cannot be obtained.

Proposition 3

- (i) If [m has positive externalities and decreasing differences, and p has increasing differences] or [m has negative externalities and increasing differences, and p has decreasing differences], and \(X_y\) is expanding, then \(M_n(x)\) has neither increasing nor decreasing differences in (n, x) unless it is a valuation.

- (ii) If [m has positive externalities and increasing differences, and p has decreasing differences] or [m has negative externalities and decreasing differences, and p has increasing differences], and \(X_y\) is expanding, then \(M_n(x)\) has neither increasing nor decreasing differences in (n, x) unless it is a valuation.

Proof

We just prove here part (i). Part (ii) follows analogously.

Suppose to the contrary that \(M_n(x)\) has decreasing differences in (n, x). We want to show inductively that for \(x'' \ge x'\) we have for all \(n \in \mathbb {N}\) inequality (6). For \(n = 1\), inequality (6) reduces to \(M_1(x'') \le M_1(x')\) since \(M_0 \equiv 0\). Since either [m has positive externalities and p has increasing differences] or [m has negative externalities and p has decreasing differences], and \(X_y\) is expanding, we have by Lemma 4, line 3 (resp. line 4), that \(M_n\) is increasing on X. Hence, a contradiction unless \(M_1(x'') = M_1(x')\).

Suppose now to the contrary that \(M_n(x)\) has increasing differences in (n, x). We want to show inductively that for \(x'' \ge x'\) we have for all \(n \in \mathbb {N}\),

\[M_n(x'') - M_{n-1}(x'') \ge M_n(x') - M_{n-1}(x'). \qquad (7)\]

For \(n = 1\), inequality (7) reduces to \(M_1(x'') \ge M_1(x')\) since \(M_0 \equiv 0\). Since either [m has positive externalities and p has increasing differences] or [m has negative externalities and p has decreasing differences], and \(X_y\) is expanding, we have by Lemma 4, line 3 (resp. line 4), that \(M_n\) is increasing on X, which implies \(M_1(x'') \ge M_1(x')\).

Furthermore, suppose that inequality (7) holds for all \(n \in \{1, 2, \ldots , k-1\}\). We have to show that it holds for \(n = k\). Consider the maximand in Eq. (4), i.e. \({\hat{m}}(z, x) + \delta M_{k - 1}(z)\). Since [m has decreasing differences and p has increasing differences] or [m has increasing differences and p has decreasing differences], we have by Lemma 3 (i), line 3 or 4, that \({\hat{m}}(z, x)\) has decreasing differences in (z, x). Hence \({\hat{m}}(z, x)\) has increasing differences in \((z, -x)\). \(M_n(z)\) has increasing differences in (n, z) on \(\{1, 2, \ldots , k - 1\} \times X\) by the induction hypothesis. We conclude that the maximand is supermodular in \((z, -x, n)\) on \(X_y \times X \times \{1, 2, \ldots , k-1\}\). By Topkis ([45], Theorem 2.7.6), \(M_n(x)\) has increasing differences in \((-x, n)\) on \(X \times \{1, 2, \ldots , k-1\}\). Thus it has decreasing differences in (x, n) on \(X \times \{1, 2, \ldots , k\}\), a contradiction unless it is a valuation. \(\square \)

Proof of Proposition 1 (iii)

The proof is essentially analogous to the proof of Theorem 2 (ii) in Amir [1]. We explicitly state where we require that \({\hat{m}}\) is increasing on X and \(X_y\) is expanding.

We show by induction on n that \(M_n(x, \delta )\) has increasing differences in \((x, \delta ) \in X \times (0, 1)\). For \(n = 1\), the claim holds trivially since \(M_1\) is independent of \(\delta \).

Assume that \(M_{k - 1}(x, \delta )\) has increasing differences in \((x, \delta )\). We need to show that \(M_k(x, \delta )\) has increasing differences in \((x, \delta )\) as well. We rewrite Eq. (4) with explicit dependence on \(\delta \) and \(n = k\),

\[M_k(x, \delta ) = \max _{z \in X_{x}} \{{\hat{m}}(z, x) + \delta M_{k - 1}(z, \delta )\}. \qquad (8)\]

Since [both m and p have increasing differences] or [both m and p have decreasing differences], we have by Lemma 3 (i), line 1 or 2, that \({\hat{m}}(z, x)\) has increasing differences in (z, x). \(M_{k - 1}(z, \delta )\) has increasing differences in \((\delta , z)\) by the induction hypothesis. That is, for \(\delta '' \ge \delta '\) and \(z'' \ge z'\),

\[M_{k - 1}(z'', \delta '') - M_{k - 1}(z', \delta '') \ge M_{k - 1}(z'', \delta ') - M_{k - 1}(z', \delta '). \qquad (9)\]

Since [m has positive externalities and p has increasing differences] or [m has negative externalities and p has decreasing differences] and \(X_y\) is expanding, we have by Lemma 4, line 1 or 2, that \(M_{k-1}(z, \delta )\) is increasing in z on \(X_y\). Hence both the LHS and the RHS of inequality (9) are positive. Therefore, multiplying the LHS with \(\delta ''\) and the RHS with \(\delta '\) preserves the inequality. We conclude that \(\delta M_{k - 1}(z, \delta )\) has increasing differences in \((\delta , z)\). Hence the maximand in Eq. (8) is supermodular in \((\delta , z, x)\) on \((0, 1) \times X_y \times X\).

By Topkis ([45], Theorem 2.7.6), \(M_n(x, \delta )\) has increasing differences in \((\delta , x)\) on \(X \times (0, 1)\). Finally, Topkis ([45], Theorem 2.8.3 (a)) implies that \({\bar{s}}_n(\cdot , \delta '') \ge {\bar{s}}_n(\cdot , \delta ')\) and \(\underline{s}_n(\cdot , \delta '') \ge \underline{s}_n(\cdot , \delta ')\). This completes the proof of Proposition 1 (iii). \(\square \)

Auxiliary Results to Proposition 1 (iii). Proposition 1 (iii) is silent on a number of cases:

Proposition 4

Suppose that [m has positive externalities, m has decreasing differences, and p has increasing differences] or [m has negative externalities, m has increasing differences, and p has decreasing differences] and \(X_y\) is expanding. Then \(M_n(x, \delta )\) does not have increasing differences in \((\delta , x)\) on \((0, 1) \times X\) unless it is a valuation.

Proof

Suppose to the contrary that \(M_n(x, \delta )\) has increasing differences in \((\delta , x) \in (0, 1) \times X\). For \(n = 1\) the claim is trivial since \(M_1\) is independent of \(\delta \).

Assume that \(M_{k - 1}(x, \delta )\) has increasing differences in \((x, \delta )\). We need to show that \(M_k(x, \delta )\) has increasing differences in \((x, \delta )\) as well. Consider the maximand in Eq. (8). Since [m has decreasing differences and p has increasing differences] or [m has increasing differences and p has decreasing differences], we have by Lemma 3 (i), line 3 or 4, that \({\hat{m}}(z, x)\) has decreasing differences in (z, x). Hence, it has increasing differences in \((z, -x)\). \(M_{k - 1}(z, \delta )\) has increasing differences in \((\delta , z)\) by the induction hypothesis so that inequality (9) holds.

Since [m has positive externalities and p has increasing differences] or [m has negative externalities and p has decreasing differences] and \(X_y\) is expanding, we have by Lemma 4, line 1 or 2, that \(M_{k-1}(z, \delta )\) is increasing in z on \(X_y\). Hence both the LHS and the RHS of inequality (9) are positive. Therefore, multiplying the LHS with \(\delta ''\) and the RHS with \(\delta '\) preserves the inequality. We conclude that \(\delta M_{k - 1}(z, \delta )\) has increasing differences in \((\delta , z)\). Hence the maximand in Eq. (8) is supermodular in \((\delta , z, -x)\) on \((0, 1) \times X_y \times X\).

By Topkis ([45], Theorem 2.7.6), \(M_n(x, \delta )\) has increasing differences in \((\delta , -x)\) on \(X \times (0, 1)\). Hence it has decreasing differences in \((\delta , x)\), a contradiction unless it is a valuation. \(\square \)

Two other cases, namely

- (i) [m has positive externalities and both m and p have decreasing differences] or [m has negative externalities and both m and p have increasing differences] and \(X_y\) is contracting,

- (ii) [m has positive externalities, increasing differences, and p has decreasing differences] or [m has negative externalities, decreasing differences, and p has increasing differences] and \(X_y\) is contracting,

cannot be dealt with using the method used to prove Proposition 1 (iii) and Proposition 4. In both cases, Lemma 4 implies that \(M_n(x, \delta )\) is decreasing on X. Therefore the inequality analogous to (9) may be reversed when multiplying the LHS by \(\delta ''\) and the RHS by \(\delta '\).

Proof of Proposition 2 (i)

Note that the first-order condition for the maximization in Eq. (5) (analogously for Eq. (4)) is

Suppose that for some \(x'' > x'\), \(s(x'') = s(x')\). Then from Eq. (10) we conclude \(\frac{\partial {\hat{m}}(x'', s(x''))}{\partial z} = \frac{\partial {\hat{m}}(x', s(x'))}{\partial z}\), which contradicts that \({\hat{m}}\) has strongly decreasing differences in (x, z). Hence, \(s(x'') = s(x')\) is not possible, and then by Proposition 1 (i), \(s(x'') < s(x')\). This completes the proof of part (i). \(\square \)

Proof of Proposition 2 (ii)

The proof is essentially “dual” to the proof of Amir ([1], Theorem 3 (ii)).

By Proposition 1 (ii), \(s_{n + 1}(x) \ge s_n(x)\) for all \(x \in X\). Suppose that for some \(x_n \in X\), \(s_{n + 1}(x_n) = s_{n}(x_n)\). We will show that there exists \(x' \in X\) such that \(s_{n-1}(x') = s_{n-2}(x')\).

Plugging \(s_{n + 1}(x_n) = s_{n}(x_n)\) in the Euler equations corresponding to the problem given in Eq. (4) for \(n = 2, 3, \ldots \)

leads to

Since \({\hat{m}}\) has strongly increasing differences by Lemma 3 (i), we must have \(s_{n-1}(s_n(x_n)) = s_{n}(s_{n+1}(x_n))\). Hence \(s_{n-1}(s_n(x_n)) = s_n(s_{n}(x_n))\). Set \(x_{n-1} \equiv s_n(x_n)\). Thus \(s_{n-1}(x_{n-1}) = s_n(x_{n-1})\). Plugging into the Euler equations,

leads to

Since \({\hat{m}}\) has strongly increasing differences by Lemma 3 (i), the last equation implies that \(s_{n-1}(s_{n}(x_{n-1})) = s_{n-2}(s_{n-1}(x_{n-1})) = s_{n-2}(s_n(x_{n-1}))\). Hence there exists \(x' \in X\) such that \(s_{n-1}(x') = s_{n-2}(x')\).

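By induction we obtain the existence of \(x_2 \in X\) for which \(s_1(x_2) = s_2(x_2)\). The Euler equations for the one- and two-period problems at \(x_2\) are given by

\[\frac{\partial {\hat{m}}(s_1(x_2), x_2)}{\partial z} = 0,\]

\[\frac{\partial {\hat{m}}(s_2(x_2), x_2)}{\partial z} + \delta \frac{\partial {\hat{m}}(s_1(s_2(x_2)), s_2(x_2))}{\partial x} = 0.\]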

Since \(x_2 \in X\) is such that \(s_1(x_2) = s_2(x_2)\), the Euler equations imply \(\frac{\partial {\hat{m}}(s_1(s_2(x_2)), s_2(x_2))}{\partial x} = 0\).

Note that the conditions of line 3 or 4 in Proposition 2 (ii) imply by Lemma 3 (ii) that \(\frac{\partial {\hat{m}}(z, x)}{\partial x} < 0\), a contradiction. \(\square \)


Cite this article

Schipper, B.C. Dynamic Exploitation of Myopic Best Response. Dyn Games Appl 9, 1143–1167 (2019). https://doi.org/10.1007/s13235-018-0289-z


DOI: https://doi.org/10.1007/s13235-018-0289-z