Abstract

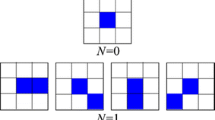

3D auto-correlation gradient features have so far shown only limited success on depth action data, whereas 2D auto-correlation gradient features have been successful in this domain. In this paper, we propose to compute three depth motion map sequences from each depth action video by accumulating only the motion information of the action. We then obtain three vectors of 3D auto-correlation gradient features by applying the space-time auto-correlation of gradients (STACOG) descriptor to the depth motion map sequences. The three vectors are concatenated and passed to an unsupervised classifier to recognize the action. Experimental evaluation on four public datasets (MSR-Action3D, DHA, UTD-MHAD, and MSR-Gesture3D) demonstrates the superiority of the proposed method over state-of-the-art methods.
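To make the first step concrete, the following is a minimal sketch of how three depth motion map sequences might be built from one depth video. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not say how the three sequences are defined, so the sketch assumes the front, side, and top projections that are common in the depth motion map literature, assumes depth values are already quantized to integer bins with zero as background, and accumulates absolute differences between consecutive frames. The function names and the `depth_bins` parameter are hypothetical, and the STACOG descriptor and the classifier are not implemented here.

```python
import numpy as np


def project_views(frame, depth_bins):
    """Project one depth frame (H, W) onto front, side, and top planes.

    Assumption: depth values are integer bin indices, 0 means background.
    """
    h, w = frame.shape
    front = frame.astype(np.float64)
    z = np.clip(frame.astype(np.int64), 0, depth_bins - 1)
    ys, xs = np.nonzero(frame > 0)          # foreground pixels only
    side = np.zeros((h, depth_bins))        # rows x depth occupancy
    top = np.zeros((depth_bins, w))         # depth x columns occupancy
    side[ys, z[ys, xs]] = 1.0
    top[z[ys, xs], xs] = 1.0
    return front, side, top


def dmm_sequence(view_frames):
    """Accumulate motion only: running sum of absolute frame differences.

    Input shape (T, A, B); output shape (T - 1, A, B). The last map equals
    a conventional single depth motion map for the whole clip.
    """
    motion = np.abs(np.diff(view_frames, axis=0))
    return np.cumsum(motion, axis=0)


def depth_motion_map_sequences(video, depth_bins=64):
    """Return [front, side, top] depth motion map sequences for a (T, H, W) video."""
    projected = [project_views(frame, depth_bins) for frame in video]
    return [
        dmm_sequence(np.stack([views[i] for views in projected]))
        for i in range(3)
    ]
```

In a full pipeline, each of the three returned sequences would be passed to a STACOG implementation, and the three resulting feature vectors would be joined (for example with `np.concatenate`) before classification. Accumulating frame differences rather than raw frames is what restricts the maps to motion: a static scene produces all-zero sequences.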

Cite this article

Bulbul, M.F., Islam, S., Azme, Z. et al. Enhancing the performance of 3D auto-correlation gradient features in depth action classification. Int J Multimed Info Retr 11, 61–76 (2022). https://doi.org/10.1007/s13735-021-00226-1

DOI: https://doi.org/10.1007/s13735-021-00226-1