Abstract

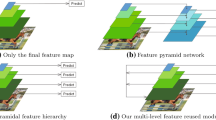

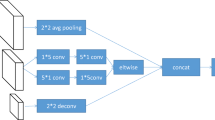

Many recent object detectors and classifiers achieve acceptable performance by using convolutional neural networks and other efficient architectures. However, most of them still suffer from overfitting, high computational cost, and low efficiency and accuracy in real-time scenarios. This paper proposes a new lightweight model for detecting and classifying objects in images. The model consists of a backbone for extracting in-depth features and a spatial feature pyramid network (SFPN) for accurately detecting and categorizing objects. The proposed backbone uses point-wise separable (PWS) and depth-wise separable convolutions, which are more efficient than standard convolution. The PWS convolution uses a residual shortcut link to reduce computation time. The proposed SFPN comprises concatenation, transformer encoder–decoder, and feature fusion modules, which allow it to process multi-scale features simultaneously, extract low-level characteristics, and build a pyramid of features that increases the effectiveness of the model. On three publicly accessible datasets, PASCAL VOC 2007, PASCAL VOC 2012, and MS-COCO, the proposed model outperforms the existing backbones and state-of-the-art detectors it is compared against. Our extensive experiments show mAP improvements of 2.4% and 2.5% on VOC 2007, 3.0% and 2.6% on VOC 2012, and 2.5% and 3.6% on MS-COCO for the small and large image sizes, respectively. On the MS-COCO dataset, our model achieves 39.4 and 33.1 FPS on a single GPU for the small (\(320 \times 320\)) and large (\(512 \times 512\)) image sizes, respectively, which shows that our method can run in real time.
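To make the efficiency claim concrete, the sketch below compares the weight count of a standard convolution with that of a generic depth-wise separable convolution (a depth-wise filter followed by a 1×1 point-wise convolution). It illustrates the general technique only and does not reproduce the paper's PWS block or its residual shortcut; the function names and the layer sizes (128 input channels, 256 output channels, 3×3 kernel) are assumptions chosen for illustration, and bias terms are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depth-wise k x k filter plus a 1 x 1 point-wise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


def reduction_ratio(c_in: int, c_out: int, k: int) -> float:
    """Separable / standard weight ratio; algebraically 1/c_out + 1/k**2."""
    return depthwise_separable_params(c_in, c_out, k) / standard_conv_params(c_in, c_out, k)


if __name__ == "__main__":
    # Hypothetical layer sizes, not taken from the paper.
    c_in, c_out, k = 128, 256, 3
    print("standard: ", standard_conv_params(c_in, c_out, k))
    print("separable:", depthwise_separable_params(c_in, c_out, k))
    print("ratio:    ", round(reduction_ratio(c_in, c_out, k), 4))
```

For these assumed sizes the separable form needs roughly 11.5% of the weights of the standard convolution. The same ratio applies to multiply-accumulate operations at a fixed output resolution, which is why such layers are common in lightweight backbones.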

Acknowledgements

This work was supported in part by the Scientific and Technological Research Council of Turkey (TUBITAK) through the 2232 Outstanding International Researchers Program under Project No. 118C301.

Cite this article

Junayed, M.S., Islam, M.B., Imani, H. et al. PDS-Net: A novel point and depth-wise separable convolution for real-time object detection. Int J Multimed Info Retr 11, 171–188 (2022). https://doi.org/10.1007/s13735-022-00229-6

DOI: https://doi.org/10.1007/s13735-022-00229-6