Abstract

In order to be effective, a learning process requires valid and suitable educational resources. However, measuring the quality of an educational resource is not an easy task for a teacher. Data on student performance can be used to measure how appropriate didactic resources are, but adequate metrics and statistics are also needed. This paper presents TEA, a Visual Learning Analytics tool for measuring the quality of a particular type of educational resource: test-based exercises. TEA is a teacher-oriented tool that helps teachers improve the quality of the learning material they have created by analyzing and visualizing the performance of the students. TEA evaluates not only the adequacy of individual items but also the appropriateness of a whole test, and it presents the results of the evaluation in a form that teachers and developers of educational material can easily interpret. The development of TEA required a thorough analysis and classification of metrics and statistics to identify those that are useful for measuring the quality of test-based exercises from student performance data. The tool provides visual representations of student performance that allow teachers to evaluate the appropriateness of the test-based exercises they have created. The experimentation carried out with TEA in higher education is also presented.


Introduction

Advances in the psychology of learning, especially in our understanding of higher-order cognitive processes, have led to a steady evolution in understanding the teaching-learning process. Instead of viewing the learner as an agent reacting to the stimuli generated by the teacher, the learner is considered to be an active participant in the teaching-learning process (Weinstein and Mayer 1986). Professor Jim Greer is one of the pioneers in the application of Artificial Intelligence in education to construct teaching-learning systems adapted to the needs of the students. From this learner-centred perspective, in a joint effort, the GaLan research group of the University of the Basque Country UPV/EHU and the ARIES laboratory of the University of Saskatchewan developed a cognitive theory of instruction, the CLAI (Cognitive Learning from Automated Instruction) Model, under the supervision of Professor Isabel Fernández de Castro and Professor Jim Greer (Arruarte et al. 1996). Professor Jim Greer's special interest in the psychological aspects of learning greatly influenced the design of the CLAI Model. According to the way in which Professor Jim Greer understood research, any research work should also have a practical side and include the development of real systems. This led to a model that relates the psychological aspects of learning to concepts that manage real technology-based learning systems.

For learning to be effective, it is necessary to use good-quality educational resources during the learning process. Quality is associated with the suitability of a resource for the teaching-learning practice (Clements et al. 2014). This paper focuses on the quality of one particular type of educational resource: test-based exercises. The main objective of this work is to evaluate the quality of test-based exercises from the performance of the students using Learning Analytics techniques. Although lecturers carefully design and prepare their assessment material in order to properly measure and infer the knowledge level of the students, they often find that students struggle with items they were expected to pass, or that some items fail to discriminate the students who master the contents of the course from those who do not. These assessment items can fail for various reasons, from inappropriate difficulty levels to ambiguous or hard-to-understand problem statements. Analyzing the outcomes of the students on the tests and questions will allow the following research question to be answered: “How can we know whether or not an item or a test is good enough to be considered suitable for assessing learning?”

The paper is structured as follows. The "From CLAI Model to Learning Analytics for Evaluating Test-type Exercises" section describes the journey from the development of a pragmatic cognitive theory of instruction to the application of Learning Analytics with the mentoring and inspiration of Professor Jim Greer. The "Visual Learning Analytics" section introduces the area of Visual Learning Analytics. The "Can the Quality of Test-based Exercises Be Inferred From the Performance of the Students?" section presents metrics and statistics that might be used to measure the quality of test-based exercises using the performance data of the students. The "TEA – A Visual Learning Analytics Tool for Analyzing the Quality of Test-based Exercises" section introduces TEA, a system that uses Visual Learning Analytics techniques to provide teachers and instructors with information about the validity of the test-based exercises they have created. The "Experimentation" section presents the evaluation carried out to validate TEA. Finally, the concluding section provides some conclusions and future research lines.

From CLAI Model to Learning Analytics for Evaluating Test-type Exercises

The CLAI Model, a cognitive theory of instruction, was created from a learner-centred perspective, considering psychological aspects of learning and relating them to concepts that manage real technology-based learning systems (Arruarte et al. 1996). The CLAI Model integrates issues about human learning, cognitive processes and learning strategies with aspects of teaching processes. The CLAI Model relies on a three-level framework that relates cognitive processes, instructional events and instructional actions. Cognitive processes are the psychological processes or mental activities that have to occur within the student in order to achieve learning about some particular content. Instructional events occur in learning situations, and each provides the external conditions of learning. External conditions refer to the various ways in which the instructional events outside the learner activate and support the internal cognitive processes of learning. Finally, instructional actions are instances of instructional activities that the system uses to provide instruction about both specific contents of the teaching domain and learning strategies.

CLAI is a practical model that was conceived with the clear intention of being applied in real systems. The CLAI model can be integrated inside a Technology Supported Learning System (TSLS), specifically in the pedagogic component of the system. In collaboration with Professor Jim Greer, the IRIS-D Shell was developed (Arruarte et al. 1997, 2003). IRIS-D is a shell for building Intelligent Tutoring Systems that incorporates the CLAI Model. Inspired by Professor Jim Greer, IRIS-D pays special attention to the student model and the pedagogic component to get more adaptive learning systems.

The authors share the opinion of Rigney (1978), who states that “cognitive processes are always performed by the learners but the initiation of their use may come from the learner’s self-instruction or from the instructional agent”. It is desirable to construct learning environments in which the learner can initiate the learning process, but if an instructional system is going to be responsible for initiating the cognitive processes, the system will need to carry out different instructional actions in order to invoke in the learner the appropriate cognitive processes for learning the presented information.

Most of the instructional actions identified in the CLAI Model can be initiated either by the instructional system or by the learner. All the instructional actions collected in the CLAI Model are listed in Appendix A of Arruarte et al. (1997). Each instructional action has three associated attributes:

- INITIATED-BY: indicates whether the instructional action can be initiated by the instructional agent, by the learner, or by either of them.
- INSTRUCTIONAL-EVENT-REFINED: the instructional event(s) that can be refined from the instructional action.
- COGNITIVE-PROCESS-TRIGGERED: the cognitive processes that are eventually triggered by the instructional action.

For example, the authors use the following notation to represent the Test instructional action:

Test

- INITIATED-BY: Instructor, Learner.
- INSTRUCTIONAL-EVENT-REFINED: Eliciting performance, Retention and transfer, Enhancing retention and transfer.
- COGNITIVE-PROCESS-TRIGGERED: Responding with a performance, Generalizing performance, Providing cues that are used in recall.

This representation indicates: with the first slot, that the action can be initiated either by the instructor or the learner; with the second slot, that it is an action that can be used to refine one or more of the instructional events Eliciting performance, Retention and transfer, and Enhancing retention and transfer; and finally, with the third slot, that using this action may trigger one of the cognitive processes Responding with a performance, Generalizing performance, and Providing cues that are used in recall.

Although many things have changed since the 1990s, when the CLAI model was defined, others remain unchanged. Even today, for any technology supported learning system, it is still absolutely necessary to decide what kind of instructional actions the system will use. In addition, the technology supported learning system has to be populated with the set of instances of instructional actions, properly represented and stored. Brooks et al. (2006) argue for using “computational agents to represent the e-learning delivery tool (a pedagogical agent), as well as the learning object repository (a repository agent). The agents essentially act as brokers of content, negotiating contextually sensitive interactions between the various parties in a learning situation”.

To represent the domain module of a TSLS, the authors of this paper rely on an educational ontology, called Learning Domain Ontology, which describes the topics to be mastered, along with the pedagogical relationships between the topics, whilst the Learning Object Base includes the didactic resources that can be used to learn each domain topic (Larrañaga et al. 2014; Conde et al. 2016, 2019). Professor Greer also acted as a counselor in the early stages of these research works (Larrañaga 2002).

For learning to be effective, it is necessary, without any doubt, to use good-quality educational resources during the learning process. Quality is associated with the suitability of a resource for the teaching-learning practice (Clements et al. 2014). This paper focuses on the quality of one particular type of educational resource: test-based exercises. The authors will try to answer the following research question: “How can we know whether or not an item or a test is good enough to be considered suitable for learning?”

Test-based resources have been widely used as assessment tools to measure the achievements of the learners during the learning process. Properly calibrating the set of items is one of the biggest problems that many e-learning systems have faced (Conejo et al. 2016). The purpose of this research work is quite different: besides using test-based resources to analyze the progress of the students during their learning process, we want to evaluate the quality of the questions included in those systems. This work attempts to determine which metrics and representations can allow instructors to identify test-based resources that are not appropriate or valid. A wide range of metrics and statistics has commonly been used to evaluate tests and items. Classical Test Theory (Novick 1966) and/or Item Response Theory (Hambleton et al. 1991; Baker 2001) are used to grade the different items of evidence obtained from the results of the students (Conejo et al. 2019). In those theories, metrics such as item difficulty and item discrimination measure various aspects of test-type exercises and questions, always with the aim of measuring the quality of these questions and exercises.

Teachers prepare their assessment resources assuming that all the included exercises are adequate. However, this is not always the case, and sometimes the answers obtained for a question might lead teachers to wonder whether that question meets the objective for which it was developed. Providing teachers with a mechanism to identify, intuitively, which questions or collections of questions do not behave as expected would greatly facilitate their work. The use of metrics and statistics can help to address this problem. Learning Analytics techniques can also be helpful; this is another field to which Professor Jim Greer contributed significantly (Greer et al. 2016), and he in fact became Senior Strategist in Learning Analytics at the University of Saskatchewan. Learning Analytics in general, and Visual Learning Analytics in particular, aim at improving learning processes by providing tools (e.g., visualizations) that allow users to better understand what is happening in the learning process. The analysis of the performance of the students may allow questions or tests that are not behaving as expected to be identified. This way, teachers would realise that some assessment items should be inspected, improved or even replaced. However, not all teachers are experts in statistical analysis. Therefore, a tool that automates this analysis, presenting the results graphically or even using alerting mechanisms to highlight suspicious questions or tests, would make their work considerably easier.

Visual Learning Analytics

Learning Analytics can bring many benefits to the learning process. As Greer and colleagues pointed out, “Learning Analytics can drive improvements in teaching practices, instructional and curricular design, and academic program delivery” (Greer et al. 2016). Among those benefits, it is worth mentioning, for example, monitoring the student’s activity and progress to provide better feedback, measuring the impact of the student’s involvement with the course material, helping students track their own progress, or using student performance data to better redesign resources and curricula. Data are the main “food” of data analysis; without data, nothing can be done. Data come from different sources, although in learning analytics the most common source is ICT platforms such as Moodle or MOOCs.

Once data are gathered, they must be processed. Different technologies can be used to perform learning data analysis: classical statistics, advanced visualization or representation techniques, educational data mining, machine learning, language processing, or social media analysis techniques. The technique chosen always depends on the goal. For example, Mendez and colleagues (Mendez et al. 2014) apply different statistical analysis and visual techniques to historic student performance data to provide insights into the difficulty of the course. The first research question they addressed in the paper was: “Which data-based indicators can support curricular designers or program coordinators to decide which courses should be redesigned or revised due to their difficulty level?” That research question can easily be particularized to the main goal pursued in our work: to identify which data-based indicators (statistics, metrics and visualizations) can support curricular designers (teachers) in deciding which course elements (test-based exercises and test questions) should be redesigned or revised to increase their quality.

There are researchers who focus their attention on the use of more complex techniques to improve the creation of learning material. For example, Virvou et al. (2020) propose the combination of machine learning and learning analytics techniques to measure student engagement, quantify the learning experience, predict student performance or create material and educational courses. As Pelánek (2020) pointed out, “while some research papers typically focus on optimizing the predictive performance of learning analytics techniques, from a practical perspective, it makes sense to sacrifice some predictive accuracy when we can use a simpler model”. For teachers who are not experts in statistics, simple models might be easier to understand provided that they include appropriate visualization mechanisms to interpret the results.

Visual analytics is “the science of analytical reasoning facilitated by interactive visual interfaces” (Thomas and Cook 2006). Some works address the gap at the intersection of learning analytics and visual analytics. For example, in the work of Greer and colleagues (Brooks et al. 2013), both statistical and visual techniques are used to understand the patterns of interaction that learners have with video lectures. Paul and colleagues (Paul et al. 2020) combine both techniques to evaluate the quality of learning based on 19,249 grades received from 511 courses. Vieira et al. (2018) present a systematic literature review at the intersection of these two areas, in which they define visual learning analytics as “the use of computational tools and methods for understanding educational phenomena through interactive visualization techniques”. However, visualizations are often difficult to interpret. Learning dashboards, defined as “a single display that aggregated different indicators about learner(s), learning process(es) and/or learning context(s) into one or multiple visualizations”, are a common way to visualize and provide information to the different actors involved in the teaching/learning processes, namely teachers, students, administrators and researchers (Schwendimann et al. 2017). Although teachers and students are the direct actors in the teaching/learning process, the benefits of visual learning analytics also extend to organizations (Charleer et al. 2018) and researchers (Leitner et al. 2017).

Mitchell and Ryder (2013) present examples of key indicators and considerations for developing and implementing learning dashboards. Most dashboards are deployed to help teachers gain a better overview of the course activity, reflect on their teaching practice, and find students at risk or isolated students (Verbert et al. 2013). Graphs, dials, gauges, scatter plots, pie charts, node-link diagrams and concept maps are different types of visualizations provided in learning dashboards (Baker 2007; Vieira et al. 2018), but traditional statistical visualization techniques, such as bar plots and scatter plots, are still the most commonly used in learning analytics contexts (Vieira et al. 2018). The potential of dashboards in a variety of learning settings has already been demonstrated, and current research focuses more on the use of multimodal data acquisition techniques (Verbert et al. 2020). For example, Noroozi et al. (2019) address the acquisition and deployment of multimodal (cognitive, social and/or emotional) data in collaborative settings.

Vieira et al. (2018) also pointed out that educators, having access to effective visualizations of educational data, could potentially use them to improve the instructional materials. Some effort has already been made with the purpose of measuring and visualizing the quality of educational materials used. For example, in the case of videos, Chen and colleagues (Chen et al. 2016) identified those video segments that generate numerous clickstreams or peaks.

In our case, the final aim is to provide a mechanism to analyze and visualize the performance of students on test-based exercises that helps teachers identify the questions or tests that must be improved or even replaced.

Can the Quality of Test-based Exercises Be Inferred From the Performance of the Students?

In this section, metrics and statistics that might be used to measure the quality of test-based exercises using the performance data of students, i.e., their answers to the questions, are presented and discussed. As in Ross et al. (2006), it is assumed that students have only one attempt to answer each question and that all the students have already answered. Peña (2014) presented these statistics and how their values can be interpreted to identify problems or unexpected scenarios. That work was used as the starting point to determine which statistics and metrics might be used to analyze the quality of test-based exercises using the responses of the students. In fact, some platforms such as Moodle also use some of these metrics and statistics, although the way they present that information may be of little use to teachers who lack expertise in statistics.

To determine the appropriateness of exercises being evaluated, four main aspects have been considered:

- Are the results of the exercises consistent?
- Can we infer undesired situations from the results?
- Does the exercise meet with the student’s level of knowledge?
- Is the exercise able to determine the student’s knowledge level?

Next, the statistics and metrics that might answer these questions are presented.

Are the Results of the Exercises Consistent?

The Coefficient of Internal Consistency (CIC) measures the reliability or internal consistency of a test. In learning scenarios, CIC examines how accurately the items, i.e., the questions of a test, measure the students’ knowledge level by considering whether or not the different items produce similar scores. The statistic used to calculate this measure is Cronbach’s alpha.
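Cronbach’s alpha compares the sum of the item variances with the variance of the total test scores: α = k/(k − 1) · (1 − Σ σ²ᵢ / σ²_T), where k is the number of items. What follows is a minimal Python sketch. It assumes a complete matrix of numeric item scores, with one row per student and one column per item, and every student answering every item, as assumed above. The function name is illustrative and is not part of TEA.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a students x items matrix of item scores."""
    k = len(scores[0])  # number of items in the test
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    # Variance of each item (column) across students.
    item_vars = [pvariance([row[j] for row in scores]) for j in range(k)]
    # Variance of the students' total test scores.
    total_var = pvariance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    # Population variances are used throughout; an n/(n-1) factor would cancel.
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Three items that always agree give a perfectly consistent test.
print(cronbach_alpha([[1, 1, 1], [0, 0, 0], [1, 1, 1], [0, 0, 0]]))
```

Values close to 1 indicate that the items produce similar scores. Values near 0 indicate that the items do not measure the same thing.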

The True Weight metric estimates to what extent an item explains the variance of the grades the students obtained in the whole test, i.e., how much each item influences the grade of the test. Ideally, all the items should have the same or similar true weights. If the weight of an item is remarkably lower than the weight of the rest of the items, the item fails to evaluate what the others are evaluating.
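The text does not give a formula for True Weight. The sketch below therefore assumes one plausible reading: each item's share of the total-score variance, cov(Xⱼ, T) / Var(T). Because Var(T) equals the sum of these covariances, the shares add up to 1, and a balanced test of k items would give shares close to 1/k. The function `variance_shares` is hypothetical.

```python
def variance_shares(scores):
    """Share of the total-score variance attributable to each item.

    Hypothetical stand-in for True Weight: share_j = cov(X_j, T) / var(T),
    where X_j is the column of item j and T the students' total scores.
    """
    n = len(scores)
    k = len(scores[0])
    totals = [sum(row) for row in scores]
    mean_t = sum(totals) / n
    var_t = sum((t - mean_t) ** 2 for t in totals) / n
    if var_t == 0:
        raise ValueError("total scores have zero variance")
    shares = []
    for j in range(k):
        col = [row[j] for row in scores]
        mean_j = sum(col) / n
        cov = sum((x - mean_j) * (t - mean_t) for x, t in zip(col, totals)) / n
        shares.append(cov / var_t)
    # Because var(T) = sum_j cov(X_j, T), the shares add up to 1.
    return shares


shares = variance_shares([[1, 1, 0], [1, 1, 1], [0, 0, 0], [0, 0, 1]])
print([round(s, 2) for s in shares])  # → [0.4, 0.4, 0.2]
```

In this made-up example, the third item weighs noticeably less than the balanced share of 1/3. Under this reading, that is the kind of imbalance the text describes.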

Can We Infer Undesired Situations From the Results?

Statistical measures provide the means to summarize the performance of the students on each test or exercise run. Centrality measures such as the arithmetic mean allow the central value of the data to be calculated. This measure is frequently used to detect abnormal situations: very high means indicate very easy tests, and very low means serve to detect very difficult or wrong items. The median provides similar information, although it is less sensitive to outliers and extreme values.

While centrality measures summarize the average outcomes, dispersion measures provide additional clues to detect when a test cannot distinguish good students from average students. The standard deviation of the scores measures the amount of variation or dispersion of the students’ grades around the average grade. A very low standard deviation suggests that the students’ scores are too close together and, therefore, that the test cannot distinguish good students from bad students. In those cases, the test should be revised.

The skewness can be used to measure the asymmetry of the grade distribution. A value below −1 suggests a high negative skew, i.e., a tail towards the lower grade values. This suggests that the test discriminates or classifies students with lower knowledge levels, but fails to discriminate those with higher knowledge levels. A value above 1, on the other hand, indicates a test that fails to classify students with lower knowledge levels while it discriminates those with higher knowledge levels.

The kurtosis of the grades describes how the grades of the students concentrate around the mean grade. The bigger the value, the more closely the grades cluster around the mean. This suggests that the test discriminates students with extreme (low and high) knowledge levels, but fails to discriminate or classify intermediate knowledge levels.
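The following Python sketch computes these summary statistics for a list of test grades. It uses population moments and reports excess kurtosis, which is 0 for a normal distribution. Both choices are assumptions; other tools may apply sample-size corrections. The ±1 skewness thresholds are the ones given in the text.

```python
from statistics import fmean, median, pstdev


def grade_summary(grades):
    """Centrality, dispersion and shape statistics of a list of test grades."""
    n = len(grades)
    mean = fmean(grades)
    sd = pstdev(grades)  # population standard deviation
    if sd == 0:
        raise ValueError("all grades are equal; shape statistics are undefined")
    m3 = sum((g - mean) ** 3 for g in grades) / n
    m4 = sum((g - mean) ** 4 for g in grades) / n
    return {
        "mean": mean,
        "median": median(grades),
        "sd": sd,
        "skewness": m3 / sd ** 3,             # population (moment) skewness
        "excess_kurtosis": m4 / sd ** 4 - 3,  # 0 for a normal distribution
    }


summary = grade_summary([10, 10, 10, 9, 2])
if summary["skewness"] < -1:
    print("long tail of low grades: weak at separating strong students")
elif summary["skewness"] > 1:
    print("long tail of high grades: weak at separating weak students")
```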

Does the Exercise Meet With the Student’s Level of Knowledge?

The difficulty of the tests should be appropriate for the learning scenario in which tests are being used. While some tests might be appropriate for university degrees, they might be too difficult for secondary education. However, how can this adequacy be measured?

The Ease Index determines how easy passing the test has been. This metric is usually computed as the average score obtained in the test. Very high ease index values might show that the knowledge level required is much lower than expected, while low values indicate that the students need higher knowledge levels than expected to pass the test.

Considering the items of the test, the Item Difficulty corresponds to the percentage of students who answered the item correctly. The higher the value, the easier the item was for the students who did the test.
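Both measures above are simple averages. In this sketch the ease index is expressed as a percentage of the maximum test score, an assumption made so that tests marked on different scales can be compared. Item difficulty follows the definition in the text: the percentage of correct answers.

```python
from statistics import fmean


def ease_index(test_scores, max_score):
    """Average test score, expressed as a percentage of the maximum score."""
    return 100 * fmean(test_scores) / max_score


def item_difficulty(item_correct):
    """Percentage of students answering an item correctly (1 = correct, 0 = wrong)."""
    return 100 * sum(item_correct) / len(item_correct)


print(ease_index([5, 10, 0, 5], max_score=10))  # → 50.0
print(item_difficulty([1, 1, 0, 1]))            # → 75.0
```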

Is the Exercise Able to Detect the Students’ Knowledge Level?

The main goal of tests is to infer the knowledge level of the students on a topic, and tests that fail in that purpose are not valid. The results students get when answering exercises are expected to be related to their knowledge. However, students might sometimes guess the correct answer without knowing it, especially in test-based exams. The Error Ratio (ER) statistic, which is directly related to CIC, measures what percentage of the standard deviation might correspond to random guessing. Therefore, the smaller the error ratio, the better, as high ER values indicate a high level of random guessing.

In a similar way, the Standard Error (SE) statistic can be used to estimate the luck or guess factor in the test. This measure allows the confidence interval of the grades to be defined. The lower the standard error, the higher the confidence that the grade is accurate.
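The text does not give formulas for ER and SE. The sketch below assumes ER = 100 · √(1 − α) and SE = SD · √(1 − α), which, to our knowledge, is how Moodle's quiz statistics report defines them. The 95% band relies on a normal approximation, which is also an assumption.

```python
from math import sqrt


def error_ratio(alpha):
    """Percentage of the grade standard deviation attributed to random error."""
    if not 0 <= alpha <= 1:
        raise ValueError("alpha is assumed to lie in [0, 1]")
    return 100 * sqrt(1 - alpha)


def standard_error(alpha, sd):
    """Standard error of measurement: the part of the SD that is due to chance."""
    return error_ratio(alpha) * sd / 100


def grade_interval(grade, se, z=1.96):
    """Approximate 95% band around an observed grade (normality assumed)."""
    return grade - z * se, grade + z * se


se = standard_error(alpha=0.75, sd=2.0)
print(error_ratio(0.75), se)  # → 50.0 1.0
print(grade_interval(6.0, se))
```

As α approaches 1, both ER and SE shrink towards zero. This matches the statement that a lower standard error means higher confidence in the grade.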

The Random Guess Score (RGS) measures the expected score a student would obtain by guessing an item at random. It depends on the type of item; for example, for multiple-choice questions (including true/false and matching pairs), it can be computed as the average score of all possible options. The higher this value, the more likely a student is to pass the test by chance.
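The following is a minimal sketch of the "average score of all possible options" rule for single-answer items. It assumes the student picks exactly one option uniformly at random and that each option has a known score. The function names are hypothetical.

```python
from statistics import fmean


def random_guess_score(option_scores):
    """Expected score of picking one option uniformly at random."""
    return fmean(option_scores)


def guess_percentage(items, max_score):
    """Expected percentage of the maximum score obtained by guessing every item."""
    return 100 * sum(random_guess_score(opts) for opts in items) / max_score


print(random_guess_score([1, 0, 0, 0]))  # four-option question → 0.25
print(random_guess_score([1, 0]))        # true/false question  → 0.5
print(guess_percentage([[1, 0, 0, 0], [1, 0]], max_score=2))  # → 37.5
```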

The Discrimination Index statistic is used to determine whether an item is good at separating stronger students from weaker ones. Items that separate them appropriately must be correlated with the overall grades of the students. The higher the discrimination index, the better. This statistic can be strongly influenced by the ease of the items, as items that are too easy or too difficult fail to classify students according to their knowledge level.
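A minimal sketch of the discrimination index as a Pearson correlation between item scores and overall performance. One assumption here is a design choice: the item is correlated with the rest of the test (total minus the item itself), so that the item does not correlate with its own contribution. Returning 0.0 for a constant item is another assumed convention.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either variable is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0  # undefined correlation: treat as no discrimination
    return sxy / sqrt(sxx * syy)


def discrimination_index(scores, j):
    """Correlation between item j and the rest of the test (item j excluded)."""
    item = [row[j] for row in scores]
    rest = [sum(row) - row[j] for row in scores]
    return pearson(item, rest)


scores = [[1, 1, 1], [1, 1, 0], [0, 0, 1], [0, 0, 0]]
print(round(discrimination_index(scores, 0), 3))  # → 0.707
```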

The Discrimination Efficiency estimates to what extent the discrimination index is good regarding the difficulty of the item. A very simple or a very difficult question cannot be used to distinguish between students with different knowledge levels, as most students will score similarly in this item. A low discrimination efficiency value shows that the item being analyzed is not able to discriminate between students with different knowledge levels and, consequently, it is not a good item.
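The sketch below assumes one definition of discrimination efficiency: the observed item-rest covariance divided by the largest covariance the same scores could reach. That maximum is obtained by pairing the item scores and the rest-of-test scores in the same sorted order, by the rearrangement inequality; re-pairing does not change either mean. This is our reading, similar in spirit to Moodle's quiz statistics, and not necessarily TEA's exact computation.

```python
def discrimination_efficiency(scores, j):
    """Observed item-rest covariance divided by the largest attainable one.

    The maximum is reached when item scores and rest scores are paired in the
    same sorted order (rearrangement inequality); both means stay the same.
    """
    item = [row[j] for row in scores]
    rest = [sum(row) - row[j] for row in scores]
    n = len(item)
    mx, my = sum(item) / n, sum(rest) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(item, rest))
    max_cov = sum((x - mx) * (y - my) for x, y in zip(sorted(item), sorted(rest)))
    if max_cov == 0:
        return 0.0  # constant item or rest scores: nothing can be discriminated
    return cov / max_cov


scores = [[1, 1, 1], [1, 1, 0], [0, 0, 1], [0, 0, 0]]
print(discrimination_efficiency(scores, 0))  # → 1.0
```

Normalizing by the attainable maximum takes the item's difficulty into account. A very easy or very hard item has a small maximum covariance, so its raw discrimination index is read relative to what it could achieve.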

The Item Discrimination Index statistic measures the discriminatory power of an item. To this end, it analyzes the performance of the top students compared to the performance of the students with the lowest knowledge levels. The item discrimination index of an item that is answered correctly or incorrectly by all the students is zero.
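The upper-versus-lower comparison can be sketched as the proportion of correct answers in the top group minus that in the bottom group. The group size is an assumption: 27% of the students at each extreme is a common convention, but the text does not fix it. An item answered correctly (or incorrectly) by everyone yields zero, as stated above.

```python
def upper_lower_index(item_correct, totals, fraction=0.27):
    """Proportion correct in the top group minus proportion correct in the bottom group.

    item_correct[i] is 1 if student i answered the item correctly, else 0;
    totals[i] is student i's overall score used to rank the students.
    """
    n = len(totals)
    g = max(1, round(n * fraction))  # size of each extreme group
    order = sorted(range(n), key=lambda i: totals[i])  # weakest first; ties keep input order
    lower, upper = order[:g], order[-g:]
    p_upper = sum(item_correct[i] for i in upper) / g
    p_lower = sum(item_correct[i] for i in lower) / g
    return p_upper - p_lower


totals = [10, 8, 3, 1]
print(upper_lower_index([1, 1, 0, 0], totals))  # only strong students correct → 1.0
print(upper_lower_index([1, 1, 1, 1], totals))  # everyone correct → 0.0
```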

TEA – A Visual Learning Analytics Tool for Analyzing the Quality of Test-based Exercises

TEA (test ariketen aztertzailea, Basque for "test exercise analyzer") is a tool that uses Visual Learning Analytics to help instructors determine the appropriateness of a particular type of educational resource, specifically test-based exercises. TEA uses two main visualization resources: Visual Learning Analytics mechanisms and Alerts.

Visual Learning Analytics to Analyze Test-based Exercises in TEA

TEA provides mechanisms to analyze the quality of the didactic resources at two granularity levels: the performance of the students on each item of the test, and the performance of the students on the whole test. It must be pointed out that some of the metrics and statistics reviewed in the "Can the Quality of Test-based Exercises Be Inferred From the Performance of the Students?" section are valid only at the item level (for example, CIC, true weight, error ratio and standard error), others only at the test level (for example, standard deviation, skewness or kurtosis), and some, such as the mean, at both levels.

Given that statistical values may be difficult to interpret for some users, TEA incorporates visualization mechanisms that provide information in an alternative way. In particular, TEA employs bar charts (vertical columns that show numerical comparisons between categories), circular charts (concentric circles that show numerical comparisons between categories) and boxplots (graphical representations of groups of numerical data through their quartiles).

Regarding how a teacher can use TEA during the teaching-learning process, it can be used either to supervise the quality of the resources throughout a course or, once the course is completed, to conduct a retrospective analysis of the resources used. To carry out a retrospective analysis, TEA requires additional information such as the final marks of the students, which can be considered their ground-truth knowledge level. For example, Fig. 1 shows boxplots of the score distribution obtained by the students in the tests. A boxplot is displayed for each test, and the line that crosses each box indicates the median of that test. The colour saturation shows how the scores obtained in the test correlate with the final marks of the students: the more saturated the colour, the higher the correlation. A high correlation means that the students’ results in the test are consistent with their final marks, while a low correlation means that they are not. For example, the 4th test-based exercise (the fourth boxplot from the left) achieved a remarkably lower median score than most of the other tests, which suggests a problem in that test. However, this test-based exercise has a high correlation with the final grades of the students. In fact, that test corresponded to an extra attempt offered to the students to improve their grades, so only lower-performing students sat it, and their performance was not as good as that of a typical group of students.
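The numbers behind such a boxplot view can be sketched as follows: the median of each test and the correlation between test scores and final marks, computed only over the students who sat the test. Several details are assumptions, not TEA's documented behaviour: Pearson is used as the correlation coefficient, the saturation mapping (the correlation clipped to [0, 1]) is hypothetical, and the student names and scores are made up.

```python
from math import sqrt
from statistics import median


def pearson(xs, ys):
    """Pearson correlation; 0.0 when either list is constant (or too short)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return 0.0 if sxx == 0 or syy == 0 else sxy / sqrt(sxx * syy)


def boxplot_summary(test_scores, final_marks):
    """Median and final-mark correlation of each test, plus a colour saturation.

    test_scores maps test -> {student: score}; only students who sat the test
    and have a final mark are used. Saturation is a hypothetical mapping of
    the correlation onto [0, 1] (negative correlations get no colour).
    """
    summary = {}
    for test, by_student in test_scores.items():
        students = [s for s in by_student if s in final_marks]
        if not students:
            continue
        xs = [by_student[s] for s in students]
        ys = [final_marks[s] for s in students]
        r = pearson(xs, ys)
        summary[test] = {"median": median(xs), "correlation": r, "saturation": max(0.0, r)}
    return summary


final = {"ana": 9, "ben": 7, "carla": 4, "dani": 2}
tests = {
    "test 1": {"ana": 8, "ben": 7, "carla": 5, "dani": 3},
    "extra attempt": {"carla": 3, "dani": 1},  # only weaker students sat it
}
for name, row in boxplot_summary(tests, final).items():
    print(name, row)
```

As in the Fig. 1 example, the extra attempt has a low median but a strong correlation with final marks. This is why the two signals are read together rather than flagging low medians alone.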

In addition, TEA uses other approaches to identify test-based exercises that should be checked. In a test in which all the questions (items) are appropriate, their True Weights should be balanced. Whenever a test presents unbalanced True Weights, there might be problems in that test.

Regarding the quality of the items, TEA also provides users with diverse visualizations that allow them to identify items that do not behave as expected. These visualizations present information such as the distribution of the answers or the item discrimination index (see Fig. 2). Items with a low discrimination index are not valid for measuring the knowledge level of the students. For example, the fifth item (drawn in light green) presents very low discrimination index and discrimination efficiency values. This means that the item fails to discriminate good students, those who master the concepts related to the item, from those who lack that knowledge.

Bearing in mind that not all teachers are proficient in statistics (in fact, many teachers may have trouble understanding what some metrics and visualizations, such as boxplots, mean), TEA includes a guide describing each metric and plot. This guide also includes examples of troublesome tests and items, as well as examples of appropriate tests and items. This way, teachers get some clues that make it easier for them to start using TEA to analyze their didactic resources.

Test-type Exercise Quality Alerts in TEA

TEA also incorporates an alert mechanism that makes it easier for users to identify which tests or items are suspected of being inadequate. To this end, TEA marks with the alert symbol (!) those tests or items whose metric values are inappropriate (see Fig. 3). This way, the user can quickly identify those that are most likely to be troublesome and thus save a lot of time in the review process. Figure 3 shows a snapshot of the left panel of TEA, which allows the user to choose which questions (items) are shown in the analysis and also highlights suspicious questions (e.g., those with unbalanced True Weight values); in the figure, Question 5 is highlighted. Similarly, TEA highlights tests that are not performing as expected, making troublesome tests easier to identify.

TEA allows users to set the threshold values for the metrics according to their preferences, since some teachers might be more demanding than others or might expect the students to achieve higher scores.
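A rule-based alert check with user-adjustable thresholds might look like the following sketch. The metric keys, the two rules and the default values are hypothetical; they only illustrate how user-supplied thresholds can override defaults before items are flagged with the (!) symbol.

```python
# Hypothetical default thresholds; values supplied by the user take precedence.
DEFAULT_THRESHOLDS = {
    "min_discrimination": 0.2,    # flag items that discriminate poorly
    "max_weight_deviation": 0.5,  # allowed relative deviation from the mean True Weight
}


def item_alerts(items, thresholds=None):
    """Return {question: [reasons]} for the items that should be flagged with (!).

    `items` maps a question label to a dict with the hypothetical keys
    "discrimination" and "true_weight".
    """
    if not items:
        return {}
    t = {**DEFAULT_THRESHOLDS, **(thresholds or {})}  # user values override defaults
    mean_weight = sum(m["true_weight"] for m in items.values()) / len(items)
    alerts = {}
    for question, m in items.items():
        reasons = []
        if m["discrimination"] < t["min_discrimination"]:
            reasons.append("low discrimination")
        if abs(m["true_weight"] - mean_weight) > t["max_weight_deviation"] * mean_weight:
            reasons.append("unbalanced True Weight")
        if reasons:
            alerts[question] = reasons
    return alerts
```

A more demanding teacher could pass `{"min_discrimination": 0.4}`, which flags additional items without changing the other rule.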

Experimentation

TEA has been tested in a real learning scenario in order to validate its adequacy for measuring the quality of test-based exercises, by answering the four research questions presented in "Can the Quality of Test-based Exercises Be Inferred From the Performance of the Students?" section.

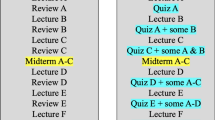

Experimental Setting

TEA was used to perform a retrospective analysis of the “Fundamentals of Computer Science” module of the Degree in Automotive Engineering and the Bachelor's Degrees in Industrial and Mechanical Engineering at the University of the Basque Country (UPV/EHU). In 2018, the module underwent a thorough redesign, which entailed, among other improvements, changes in the evaluation protocol. The module comprises both theory lessons and computer sessions in which the students put into practice what they have learnt in the theory lessons. In each of the 10 computer sessions, the 50 students enrolled in the module had to take a test comprising 5 items. The 3 instructors used the Moodle Learning Management System to deliver the instruction, share the didactic material and conduct the tests. Whenever a student takes a test, Moodle records their answers. Students were allowed only one attempt per test, and any empty answer was considered wrong.
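The scoring rule of this setting (a single attempt, empty answers counted as wrong) can be expressed as a 0/1 score matrix, which is the usual input for item metrics such as those discussed above. The response format below (a dict of answer lists, with `None` marking an empty answer) and the helper `score_matrix` are hypothetical stand-ins, not Moodle's actual export format.

```python
def score_matrix(responses, answer_key):
    """Build {student: [1/0 per item]} for one test.

    responses: {student_id: [chosen option or None, ...]} (hypothetical format);
    None marks an empty answer, which is scored as wrong.
    answer_key: the correct option for each item, in order.
    """
    matrix = {}
    for student, answers in responses.items():
        if len(answers) != len(answer_key):
            raise ValueError(f"{student}: expected {len(answer_key)} answers")
        matrix[student] = [
            1 if answer is not None and answer == key else 0
            for answer, key in zip(answers, answer_key)
        ]
    return matrix
```

Summing each student's row gives the test score on the 5-item scale used in this module.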

Evaluation Results

At the end of the academic year, the instructors of the module used TEA to analyze whether the tests conducted were appropriate, i.e., whether they accurately measured the knowledge level of the students or should be improved or replaced in the following academic years. The instructors used TEA to analyze both the tests and the items, based on the answers of the students recorded in Moodle. In addition, they provided TEA with the final grades of the students, which were used as ground-truth knowledge levels. TEA used this information to correlate the outcomes of the tests with the “real” knowledge level of the students. After using TEA, the instructors were asked to answer the four research questions presented above.

Are the results of the test consistent?

Most of the tests presented adequate values for the Coefficient of Internal Consistency (CIC), which measures how consistent the answers of the students to the test items are. In addition, most of the tests presented fairly homogeneous True Weights across their items. However, inconsistencies, i.e., low CIC values and unbalanced True Weights of the items, were observed in three of the tests. As an example, Fig. 4 shows the True Weights of the five items of one of these tests in a speedometer graph, where the differences between their True Weights can be observed. As stated in "Can the Quality of Test-based Exercises Be Inferred From the Performance of the Students?" section, markedly different True Weights suggest that a test is suspicious, so the instructors marked these tests for further, more thorough analysis.
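The formula for the CIC is not restated in this section. As an illustration only, the sketch below assumes it behaves like Cronbach's alpha, the standard internal-consistency coefficient of classical test theory, which for items scored 0/1 coincides with KR-20; the function name is hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(matrix):
    """Cronbach's alpha for a test.

    matrix: one list of item scores per student (all lists of length k >= 2).
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    if not matrix:
        raise ValueError("no students")
    k = len(matrix[0])
    if k < 2:
        raise ValueError("need at least two items")
    columns = list(zip(*matrix))               # scores of each item across students
    totals = [sum(row) for row in matrix]      # each student's total score
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("total scores have no variance")
    item_var = sum(pvariance(col) for col in columns)
    return k / (k - 1) * (1 - item_var / total_var)
```

Consistently answered items (students who solve a harder item also solve the easier ones) give a high value, e.g., 0.75 for a small 4-student, 3-item Guttman pattern, whereas items that vary independently of one another drive the value towards 0.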

Can we infer undesired situations from the results?

To answer this question, the instructors analyzed the distributions of the grades in the tests (see Fig. 1). The boxplot visualization allowed the instructors to identify the tests whose results were remarkably worse than those obtained in the other tests. In fact, the tests with the worst results were the same ones that had been identified as suspicious above because of their low CIC and unbalanced True Weight values. In addition, since the instructors provided the overall marks of the students, that information was used to enrich the boxplot visualization, with colour saturation representing the correlation between the students' outcomes in the tests and their overall marks. Analyzing this boxplot visualization, the instructors observed that one of the suspicious tests corresponded to an extra attempt they had offered to students who wanted to improve their marks. Only a few students sat that test, and they were the students with lower knowledge levels, so no conclusion could be drawn. However, in the other two suspicious tests, all the students performed worse even though nothing abnormal had occurred in the context of those tests. Therefore, the instructors decided to modify those tests for the next academic year.

Does the exercise meet with the student’s level of knowledge?

The distribution of the students’ marks in most of the tests showed that good students could obtain top grades, whereas students with lower knowledge levels achieved worse marks. This was confirmed by the metrics TEA uses for this purpose, i.e., the Ease Index and Item Difficulty. The only exceptions were, again, the suspicious tests, in which the results were inconsistent with the knowledge level of the students.
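A minimal sketch of these two metrics follows, assuming the Ease Index is the proportion of students who answered an item correctly (the classical facility value) and treating Item Difficulty as its complement. Both definitions, the labelling bands and the function names are assumptions for illustration rather than TEA's documented formulas.

```python
def ease_indices(matrix):
    """Ease Index per item: share of students who answered it correctly (0..1).

    matrix: one list of 1/0 item scores per student.
    """
    if not matrix:
        raise ValueError("no students")
    n = len(matrix)
    return [sum(column) / n for column in zip(*matrix)]


def item_difficulties(matrix):
    """Item Difficulty taken here as the complement of the Ease Index (an assumption)."""
    return [1 - e for e in ease_indices(matrix)]


def label(ease, hard_below=0.3, easy_above=0.85):
    """Hypothetical bands for reading an Ease Index value."""
    if ease < hard_below:
        return "too hard"
    if ease > easy_above:
        return "too easy"
    return "adequate"
```

In a well-matched test most items would fall in the middle band, so that good students can still reach top grades while weaker students score lower.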

Is the exercise able to determine the student’s knowledge level?

The visualizations allowed the instructors to analyze whether the evaluated tests were able to determine the knowledge level of the students. Considering the boxplot visualizations along with the correlation, CIC and True Weight values, the instructors were able to identify those tests that failed to measure the students’ knowledge in an accurate way.

In addition, the instructors analyzed the results of each test item, using the visualizations corresponding to metrics such as the item discrimination index or discrimination efficiency. In this analysis, they identified troublesome items, some of which had statements that might be hard to understand even for top students, and decided to replace or modify them for the following academic years.

In summary, the instructors found TEA very useful for analyzing the quality of the tests they had created and for identifying which tests and items should be improved.

Conclusions and Future Research Lines

Test-based exercises are one of the most common forms of evaluation used in education, especially in Technology Supported Learning Systems. Effective learning requires the use of valid and high-quality educational resources. Therefore, mechanisms to assess their quality are essential. Instructors want to know whether the test-based exercises they have created are good enough to be considered suitable for evaluating the knowledge of students.

In this article, a collection of metrics and statistics that allow teachers to evaluate the quality of test-type exercises has been presented. These metrics and statistics are classified according to four different aspects: (1) Are the results of the exercise consistent?, (2) Can teachers infer undesired situations from the results?, (3) Does the exercise meet with the student’s level of knowledge?, and (4) Is the exercise able to determine the student’s knowledge level?

In addition, the metrics and statistics have been implemented in a Visual Learning Analytics tool, called TEA, that allows teachers to analyze and visualize the students’ performance on test-based exercises in a graphical way. TEA provides indicators that help teachers either confirm the validity of the exercises they have created or improve their quality when the exercises do not meet their expectations.

TEA has been tested on a real scenario in which the instructors used the tool to evaluate the test-based exercises they defined for the “Fundamentals of Computer Science” module. TEA allowed them to identify which tests were inappropriate and should be reviewed.

Although TEA has so far been evaluated in only a single module, with satisfactory results, the tool is ready for wider use. In fact, in the current academic year, instructors of other modules will use TEA to evaluate their material. Moreover, TEA does not use any technique that is exclusive to a particular domain. Therefore, the tool should be applicable to any domain and be beneficial for any teacher interested in ensuring that test-based exercises and the items they comprise are fair for measuring the knowledge level of the students.

So far, TEA has only been used to evaluate manually created exercises, but it could also be used in the same way to evaluate automatically generated test exercises, for example, test-based exercises generated using Natural Language Processing techniques. The evaluation of the students is the final objective pursued in the majority of works related to the automatic generation of exercises (Kurdi et al. 2019). Therefore, taking into account the performance of the students, and not only the opinion of experts (Amedei et al. 2018), would add a new perspective to the measurement of the quality of automatically generated questions.

In addition, TEA could be extended to support more types of exercises (fill in the gaps, matching pairs, etc.) and even open-response exercises. Supporting open-response exercises would require additional techniques, for example, those from the Natural Language Processing area.

TEA provides an alert mechanism that highlights the most suspicious exercises and questions. To this end, it relies on a set of rules and predefined threshold values, which can be set by each user. However, machine learning techniques might be used to infer those rules, and even the threshold values, from data. For this purpose, TEA should include means to annotate the exercises and questions as correct or wrong after the review in order to generate a dataset (in which the student data is anonymized). This dataset could then be exploited using machine learning techniques to infer, and even personalize, the rules and values used by the alert module.
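As one hypothetical illustration of inferring a threshold from annotated reviews, the sketch below fits a one-feature decision stump: given a metric value for each reviewed item and the teacher's verdict on whether the item was problematic, it picks the cut-off for the rule "flag if value < cut-off" that best reproduces those verdicts. This is a deliberately simple stand-in for the machine learning techniques mentioned above, not a component of TEA, and `learn_threshold` is a hypothetical name.

```python
def learn_threshold(values, is_problematic):
    """Fit the cut-off c of the rule "flag if value < c" to annotated items.

    values: one metric value per reviewed item (e.g., a discrimination index).
    is_problematic: the teacher's verdict for each item (True = problematic).
    Returns the candidate cut-off with the highest accuracy (smallest on ties).
    """
    if not values or len(values) != len(is_problematic):
        raise ValueError("need one annotation per value")
    u = sorted(set(values))
    # Candidate cut-offs: flag nothing, midpoints between neighbours, flag everything.
    candidates = [u[0]] + [(a + b) / 2 for a, b in zip(u, u[1:])] + [u[-1] + 1]

    def accuracy(c):
        return sum((v < c) == bad for v, bad in zip(values, is_problematic)) / len(values)

    return max(candidates, key=accuracy)  # max() keeps the first (smallest) best candidate
```

For discrimination indices `[0.05, 0.1, 0.15, 0.3, 0.4, 0.5]` where only the first two items were annotated as problematic, the learned cut-off is 0.125; a personalized threshold would simply be learned from each teacher's own annotations.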

Finally, integrating TEA into a learning tool, for example an LMS, would make it possible to carry out the complete process of generating, analyzing the quality of, and improving test-based exercises within a single learning system, greatly facilitating the work of the teacher.

Change history

07 March 2021

A Correction to this paper has been published: https://doi.org/10.1007/s40593-021-00245-3

Notes

Basque language translation of “Test Exercises Examiner”.

References

Amedei, J., Piwek, P., & Willis, A. (2018). Evaluation Methodologies in Automatic Question Generation 2013–2018. In Proceedings of the 11th International Conference on Natural Language Generation (pp. 307–317).

Arruarte, A., Fernández, I., Ferrero, B., & Greer, J. (1997). The IRIS Shell: How to Build ITSs from Pedagogical and Design Requisites. Journal of Artificial Intelligence in Education, 8, 341–381.

Arruarte, A., Fernández, I., & Greer, J. (1996). The CLAI Model. A Cognitive Theory to Guide ITS Development. Journal of Artificial Intelligence in Education, 7(3/4), 277–313.

Arruarte, A., Ferrero, B., Fernández-Castro, I., Urretavizcaya, M., Álvarez, A., & Greer, J. (2003). The IRIS Authoring Tool. In T. Murray, S. Ainsworth & S. Blessing (Eds.), Authoring Tools for Advanced Technology Learning Environments (pp. 233–267). Dordrecht: Kluwer Academic Publishers.

Baker, B. M. (2007). A conceptual framework for making knowledge actionable through capital formation, D.M. dissertation, University of Maryland, College Park, USA.

Baker, F. B. (2001). The basics of item response theory. ERIC.

Brooks, C., Bateman, S., McCalla, G., & Greer, J. (2006). Applying the Agent Metaphor to Learning Content Management Systems and Learning Object Repositories. In M. Ikeda, K. Ashley & T.-W. Chan (Eds.), ITS 2006, LNCS 4053 (pp. 808–810). Berlin Heidelberg: Springer-Verlag.

Brooks, C., Thompson, C., & Greer, J. (2013). Visualizing lecture capture usage: A learning analytics case study. WAVe 2013 workshop at LAK13, April 8, 2013, Leuven, Belgium.

Charleer, S., Vande Moere, A., Klerkx, J., Verbert, K., & De Laet, T. (2018). Learning analytics dashboards to support adviser-student dialogue. IEEE Transactions on Learning Technologies (pp. 1–1). https://doi.org/10.1109/TLT.2017.2720670.

Chen, Q., Chen, Y., Liu, D., Shi, C., Wu, Y., & Qu, H. (2016). PeakVizor: Visual Analytics of Peaks in Video Clickstreams from Massive Open Online Courses. IEEE Transactions on Visualization and Computer Graphics, 22(10), 2315–2330.

Clements, K., Pawlowski, J., & Manouselis, N. (2014). Why open educational resources repositories fail – review of quality assurance approaches. In International Association of Technology, Education and Development (IATED 2014).

Conde, A., Larrañaga, M., Arruarte, A., & Elorriaga, J. A. (2019). A combined approach for eliciting relationships for educational ontologies using general-purpose knowledge bases. IEEE Access, 7, 48339–48355.

Conde, A., Larrañaga, M., Arruarte, A., Elorriaga, J. A., & Roth, D. (2016). LiTeWi: A combined term extraction and entity linking method for eliciting educational Ontologies from textbooks. Journal of the Association for Information Science and Technology, 67(2), 380–399.

Conejo, R., Barros, B., & Bertoa, M. F. (2019). Automated assessment of complex programming tasks using SIETTE. IEEE Transactions on Learning Technologies, 12(4), 470–484.

Conejo, R., Guzmán, E., & Trella, M. (2016). The SIETTE automatic assessment environment. International Journal of Artificial Intelligence in Education, 26, 270–292.

Greer, J., Molinaro, M., Ochoa, X., & McKay, T. (2016). Learning Analytics for Curriculum and Program Quality Improvement. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge (pp. 494–495).

Hambleton, R. K., Swaminathan, H., & Rogers, H. J. (1991). Fundamentals of item response theory, vol. 2. Thousand Oaks: Sage.

Kurdi, G., Leo, J., Parsia, B., Sattler, U., & Al-Emari, S. (2019). A Systematic Review of Automatic Question Generation for Educational Purposes. International Journal of Artificial Intelligence in Education. https://doi.org/10.1007/s40593-019-00186-y.

Larrañaga, M. (2002). Enhancing ITS building process with semi-automatic domain acquisition using ontologies and NLP techniques. Proceedings of the Young Researchers Track. Intelligent Tutoring Systems 2002.

Larrañaga, M., Conde, A., Calvo, I., Elorriaga, J. A., & Arruarte, A. (2014). Automatic generation of the domain module from electronic textbooks: Method and validation. IEEE Transactions on Knowledge and Data Engineering, 26(1), 69–82.

Leitner, P., Khalil, M., & Ebner, M. (2017). Learning analytics in higher education—A literature review. In A. Peña-Ayala (Ed.), Learning Analytics: Fundaments, Applications, and Trends (vol. 94, pp. 1–23). Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-52977-6_1.

Mendez, G., Ochoa, X., Chiluiza, K., & de Wever, B. (2014). Curricular Design Analysis: A Data-Driven Perspective. Journal of Learning Analytics, 1(3), 84–119. https://doi.org/10.18608/jla.2014.13.6.

Mitchell, J., & Ryder, A. (2013). Developing and using dashboard indicators in student affairs assessment. New Directions for Student Services, 142, 71–81.

Noroozi, O., Alikhani, I., Järvelä, S., Kirschner, P. A., Juuso, I., & Seppänen, T. (2019). Multimodal data to design visual learning analytics for understanding regulation of learning. Computers in Human Behavior, 100, 298–304. https://doi.org/10.1016/j.chb.2018.12.019.

Novick, M. R. (1966). The axioms and principal results of classical test theory. Journal of Mathematical Psychology, 3(1), 1–18.

Paul, R., Gaftandzhieva, S., Kausar, S., Hussain, S., Doneva, R., & Baruah, A. K. (2020). Exploring student academic performance using data mining tools. International Journal of Emerging Technologies in Learning (iJET), 15(8), 195–208.

Pelánek, R. (2020). Learning Analytics Challenges: Trade-offs, Methodology, Scalability. In Proceedings of the 10th International Conference on Learning Analytics & Knowledge (pp. 554–558). New York City: ACM.

Peña, D. (2014). Fundamentos de estadística. Alianza editorial.

Rigney, J. W. (1978). Learning Strategies: A Theoretical Perspective. In H. F. O'Neil (Ed.), Learning Strategies (pp. 165–205). New York: Academic Press.

Ross, S., Jordan, S., & Butcher, P. (2006). Online instantaneous and targeted feedback for remote learners. In Innovative assessment in higher education (p. 123).

Schwendimann, B. A., Rodríguez-Triana, M. J., Vozniuk, A., Prieto, L. P., Boroujeni, M. S., Holzer, A., Gillet, D., & Dillenbourg, P. (2017). Perceiving Learning at a Glance: A Systematic Literature Review of Learning Dashboard Research. IEEE Transactions on Learning Technologies, 10(1), 30–41.

Thomas, J. J., & Cook, K. A. (2006). A Visual Analytics Agenda. IEEE Computer Graphics and Applications, 26(1), 10–13.

Verbert, K., Duval, E., Klerkx, J., Govaerts, S., & Santos, J. L. (2013). Learning analytics dashboard applications. American Behavioral Scientist, 1–10, https://doi.org/10.1177/0002764213479363.

Verbert, K., Ochoa, X., De Croon, R., Dourado, R. A., & De Laet, T. (2020). Learning analytics dashboards: the past, the present and the future. In Proceedings of the 10th International Conference on Learning Analytics & Knowledge (pp. 35–40). New York City: ACM.

Vieira, C., Parsons, P., & Byrd, V. (2018). Visual Learning Analytics of Educational Data: A Systematic Literature Review and Research Agenda. Computers & Education, 122, 119–135.

Virvou, M., Alepis, E., Tsihrintzis, G. A., & Jain, L. C. (2020). Machine Learning Paradigms. Advances in Learning Analytics. Berlin: Springer.

Weinstein, C. E., & Mayer, R. E. (1986). The teaching of Learning Strategies. In M. C. Wittrock (Ed.), Handbook of Research on Teaching (pp. 315–327). New York: Macmillan Publishing Company.

Acknowledgements

This work was supported by the Basque Government through ADIAN, under Grant IT980-16, and by MCIU/AEI/FEDER, UE, under Grant RTI2018-096846-B-C21.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article was revised: The last part of the Conclusion section (see below), which appeared in the proofs reviewed by the author, did not appear in the published article. “In honor of Jim. We acknowledge the help and inspiration of Professor Greer during the time we were fortunate to share with him. The academic and friendship relation between Professor Jim Greer and several of the members of the Ga-Lan group began in the early 1990s. In those years Jim acted as mentor to some of us, PhD students, and instilled in us a formal, yet pragmatic, way of researching. In 1993, he welcomed us warmly at the ARIES laboratory in the cold Saskatoon winter. Then, we got to know his dedication to the students who worked with him, the closeness with which he treated those students, how open-minded he was with their ideas and how he improved them with his great experience and insights. Thereafter, informal meetings with Jim during conferences were a good reason to attend them. Jim’s influence prompted us to work on learning systems adapted to the needs of the students. Like him, we believe in the importance of student models for this adaptation to be possible. We also share other lines of research such as learning analytics, the focus of this article. Eskerrik asko, Jim! (Thanks, Jim).”

About this article

Cite this article

Arruarte, J., Larrañaga, M., Arruarte, A. et al. Measuring the Quality of Test-based Exercises Based on the Performance of Students. Int J Artif Intell Educ 31, 585–602 (2021). https://doi.org/10.1007/s40593-020-00208-0

DOI: https://doi.org/10.1007/s40593-020-00208-0