Abstract

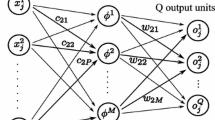

Conventional supervised learning in neural networks is carried out by unconstrained minimization of a suitably defined cost function. This approach has certain drawbacks, which can be overcome by incorporating additional knowledge into the training formalism. In this paper, two types of such additional knowledge are examined: network-specific knowledge (associated with the neural network irrespective of the problem whose solution is sought) and problem-specific knowledge (which helps to solve a specific learning task). A constrained optimization framework is introduced for incorporating these types of knowledge into the learning formalism. We present three examples in which additional knowledge, incorporated through this constrained optimization framework, improves the learning behaviour of neural networks. The two network-specific examples are designed to improve convergence and learning speed in the broad class of feedforward networks, while the third, problem-specific example concerns the efficient factorization of 2-D polynomials using suitably constructed sigma-pi networks.
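The abstract does not state the update rule itself. As a minimal sketch, assuming the framework is realized as a per-epoch constrained step, one can choose the weight change dw that (a) produces a prescribed first-order decrease δQ of the cost E, (b) has a fixed length δP, and (c) otherwise maximizes the first-order increase of a hypothetical secondary objective Φ that encodes the additional knowledge. Solving this with Lagrange multipliers gives a closed form. The function and parameter names (`constrained_step`, `grad_E`, `grad_Phi`, `dP`, `xi`) and the choice δQ = -ξ δP |∇E| are illustrative assumptions, not taken from the paper.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length weight vectors."""
    return sum(x * y for x, y in zip(a, b))


def constrained_step(grad_E: Vector, grad_Phi: Vector, dP: float, xi: float = 0.5) -> Vector:
    """One constrained weight update (illustrative sketch, not the paper's code).

    Finds dw maximizing grad_Phi . dw subject to
      grad_E . dw = dQ    (prescribed first-order change of the cost E)
      dw . dw    = dP**2  (fixed step length)
    with the assumed choice dQ = -xi * dP * |grad_E| and 0 < xi < 1.
    Closed form: dw = (dQ / I_GG) grad_E + (grad_Phi - (I_GF / I_GG) grad_E) / (2 lam2),
    lam2 = 0.5 * sqrt((I_FF I_GG - I_GF**2) / (I_GG dP**2 - dQ**2)).
    """
    if not 0.0 < xi < 1.0:
        raise ValueError("xi must lie strictly between 0 and 1")
    I_GG = dot(grad_E, grad_E)
    if I_GG == 0.0:
        # Zero gradient: no descent direction is defined, so take no step.
        return [0.0] * len(grad_E)
    I_GF = dot(grad_E, grad_Phi)
    I_FF = dot(grad_Phi, grad_Phi)
    dQ = -xi * dP * math.sqrt(I_GG)

    # Component along grad_E that delivers exactly the requested change dQ.
    descent = [(dQ / I_GG) * g for g in grad_E]

    # Squared norm of the part of grad_Phi orthogonal to grad_E, times I_GG.
    disc = I_FF * I_GG - I_GF ** 2
    if disc <= 1e-12 * I_FF * I_GG:
        # grad_Phi (nearly) parallel to grad_E, or zero: nothing left to
        # optimize, so fall back to a plain gradient step.
        return descent

    # Multiplier of the step-length constraint; the square root is real
    # because xi < 1 guarantees I_GG * dP**2 > dQ**2.
    lam2 = 0.5 * math.sqrt(disc / (I_GG * dP ** 2 - dQ ** 2))
    return [d + (f - (I_GF / I_GG) * g) / (2.0 * lam2)
            for d, f, g in zip(descent, grad_Phi, grad_E)]


if __name__ == "__main__":
    G = [1.0, 0.0]   # hypothetical gradient of the cost E
    F = [0.0, 1.0]   # hypothetical gradient of the knowledge term Phi
    dw = constrained_step(G, F, dP=1.0, xi=0.5)
    print(dw)                       # approximately [-0.5, 0.866]
    print(dot(G, dw), dot(dw, dw))  # approximately -0.5 and 1.0
```

Both constraints hold exactly for every non-degenerate step, so the cost still decreases at a controlled first-order rate while the remaining freedom in dw is spent on the knowledge term Φ. In practice δP would be a small step size, and the network-specific or problem-specific knowledge would enter only through how Φ (and hence `grad_Phi`) is defined.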




About this article

Cite this article

Perantonis, S.J., Ampazis, N. & Virvilis, V. A Learning Framework for Neural Networks Using Constrained Optimization Methods. Annals of Operations Research 99, 385–401 (2000). https://doi.org/10.1023/A:1019240304484

