Abstract

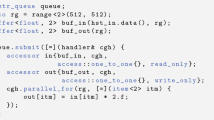

This paper presents a novel scheme for maintaining accurate information about distributed data in message-passing programs. We describe algorithms based on static single assignment (SSA) form that build an intermediate representation of a sequential program while targeting code generation for distributed memory machines under the single program multiple data (SPMD) programming model. This SSA-based intermediate representation supports a variety of optimizations performed by our automatic parallelizing compiler, PARADIGM, which generates message-passing programs for distributed memory machines. We concentrate on the semantics and implementation of this SSA form for message-passing programs and give some examples of the kinds of optimizations it enables. We describe in detail why various kinds of merge functions are needed to maintain the single assignment property of distributed data. We give algorithms for the placement and semantics of these merge functions and show how their requirements differ substantially from the conventional case because of distributed data and arbitrary array addressing functions. The scheme has been incorporated into our compiler framework, which uses uniform methods to compile, parallelize, and optimize a sequential program irrespective of the subscripts used in array addressing functions. Experimental results for a number of benchmarks on an IBM SP-2 show a significant improvement in total runtime owing to some of the optimizations enabled by the SSA-based intermediate representation. On 16 processors, we observed reductions of roughly 10–25% in total runtime for our SSA-based schemes compared to non-SSA-based schemes.
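As background for the single assignment property and merge functions mentioned above, the following is a minimal sketch of the textbook scalar case only: every assignment gets a fresh name, and a φ merge is placed where two control-flow paths join. It is not the paper's algorithm for distributed arrays; the helper names `rename_block` and `ssa_diamond` and the tuple-based statement format are assumptions made for illustration.

```python
def rename_block(stmts, env, counters):
    """Rename one straight-line block into single-assignment form.

    stmts    : list of (target, [operand, ...]); operands are variable
               names or constant strings (hypothetical toy format).
    env      : maps each variable to its current SSA name on entry.
    counters : shared per-variable version counters (mutated in place).
    """
    env = dict(env)  # do not disturb the caller's view of the entry state
    out = []
    for target, operands in stmts:
        # Uses refer to the latest version; constants pass through unchanged.
        uses = [env.get(op, op) for op in operands]
        counters[target] = counters.get(target, 0) + 1
        new_name = f"{target}{counters[target]}"
        env[target] = new_name
        out.append((new_name, uses))
    return out, env


def ssa_diamond(entry, then_blk, else_blk):
    """Convert an if/else diamond and place phi merges at the join point."""
    counters = {}
    entry_out, env0 = rename_block(entry, {}, counters)
    then_out, env_t = rename_block(then_blk, env0, counters)
    else_out, env_e = rename_block(else_blk, env0, counters)

    merges = []
    for var in sorted(set(env_t) | set(env_e)):
        a, b = env_t.get(var), env_e.get(var)
        if a is None or b is None:
            continue  # reaches the join on one path only; ignored in this sketch
        if a != b:
            # Different versions reach the join, so a merge is required.
            counters[var] += 1
            merges.append((f"{var}{counters[var]}", ("phi", a, b)))
    return entry_out, then_out, else_out, merges


# x = 0; if (...) x = x + 1 else x = 2; <join>
entry_ssa, then_ssa, else_ssa, merges = ssa_diamond(
    entry=[("x", ["0"])],
    then_blk=[("x", ["x", "1"])],
    else_blk=[("x", ["2"])],
)
print(entry_ssa, then_ssa, else_ssa, merges)
```

In this scalar setting a single φ at the join is enough. The abstract's point is that distributed data and arbitrary array subscripts call for additional kinds of merge functions, which this sketch does not attempt to model.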

Cite this article

Chakrabarti, D.R., Banerjee, P. Static Single Assignment Form for Message-Passing Programs. International Journal of Parallel Programming 29, 139–184 (2001). https://doi.org/10.1023/A:1007633018973

DOI: https://doi.org/10.1023/A:1007633018973