Abstract

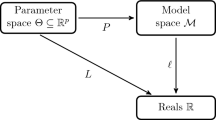

We consider a discrete-time, finite-state Markov reward process that depends on a set of parameters. We start with a brief review of (stochastic) gradient descent methods that tune the parameters in order to optimize the average reward, using a single (possibly simulated) sample path of the process of interest. The resulting algorithms can be implemented online, and have the property that the gradient of the average reward converges to zero with probability 1. On the other hand, the updates can have a high variance, resulting in slow convergence. We address this issue and propose two approaches to reduce the variance. These approaches rely on approximate gradient formulas, which introduce an additional bias into the update direction. We derive bounds for the resulting bias terms and characterize the asymptotic behavior of the resulting algorithms. For one of the approaches considered, the magnitude of the bias term exhibits an interesting dependence on the time it takes for the rewards to reach steady state. We also apply the methodology to Markov reward processes with a reward-free termination state and an expected total reward criterion. We use a call admission control problem to illustrate the performance of the proposed algorithms.
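To make the idea of an approximate gradient formula and its bias concrete, the following is a minimal sketch and not the paper's algorithm. It assumes a hypothetical two-state chain in which only the probability of leaving state 0 depends on a scalar parameter `theta`, and it compares the exact gradient of the average reward with a discounted approximation that is common in the policy-gradient literature. The abstract does not say whether this approximation matches either of the two proposed approaches. All names (`B`, `G`, `transition_matrix`, `discounted_gradient`, and so on) are illustrative assumptions, and everything is computed exactly from the model rather than from a simulated sample path.

```python
import math

B = 0.1          # assumed fixed probability of leaving state 1
G = [0.0, 1.0]   # assumed reward earned in states 0 and 1


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def transition_matrix(theta):
    # Hypothetical model: state 0 moves to state 1 with probability sigmoid(theta).
    a = sigmoid(theta)
    return [[1.0 - a, a], [B, 1.0 - B]]


def transition_gradient(theta):
    # Derivative of each transition probability with respect to theta.
    a = sigmoid(theta)
    da = a * (1.0 - a)
    return [[-da, da], [0.0, 0.0]]


def mat_vec(P, v):
    return [sum(p * x for p, x in zip(row, v)) for row in P]


def stationary(P, iters=2000):
    # Power iteration on the row vector pi <- pi P.
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi


def differential_reward(P, g, lam, iters=2000):
    # h = sum_t P^t (g - lam): solves the Poisson equation up to a constant.
    h = [0.0] * len(g)
    for _ in range(iters):
        Ph = mat_vec(P, h)
        h = [g[i] - lam + Ph[i] for i in range(len(g))]
    return h


def discounted_reward(P, g, beta, iters=5000):
    # J_beta = sum_t beta^t P^t g, computed by fixed-point iteration (beta < 1).
    J = [0.0] * len(g)
    for _ in range(iters):
        PJ = mat_vec(P, J)
        J = [g[i] + beta * PJ[i] for i in range(len(g))]
    return J


def gradient_from_values(theta, values):
    # sum_i pi_i sum_j dP_ij * values_j (the reward does not depend on theta here).
    P = transition_matrix(theta)
    dP = transition_gradient(theta)
    pi = stationary(P)
    n = len(P)
    return sum(pi[i] * sum(dP[i][j] * values[j] for j in range(n)) for i in range(n))


def exact_gradient(theta):
    P = transition_matrix(theta)
    pi = stationary(P)
    lam = sum(p * g for p, g in zip(pi, G))  # average reward
    return gradient_from_values(theta, differential_reward(P, G, lam))


def discounted_gradient(theta, beta):
    P = transition_matrix(theta)
    return gradient_from_values(theta, discounted_reward(P, G, beta))


for beta in (0.5, 0.9, 0.99):
    bias = abs(exact_gradient(0.0) - discounted_gradient(0.0, beta))
    print(f"beta={beta}: bias={bias:.6f}")
```

In this toy chain the gap between the two gradients shrinks as `beta` approaches 1, and for a fixed `beta` the relative gap grows when the chain mixes more slowly (for example, with a smaller `B`). A sample-path estimator built on the discounted formula would typically pay for a larger `beta` with higher variance, which is the kind of bias and variance trade-off the abstract describes.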

Marbach, P., Tsitsiklis, J.N. Approximate Gradient Methods in Policy-Space Optimization of Markov Reward Processes. Discrete Event Dynamic Systems 13, 111–148 (2003). https://doi.org/10.1023/A:1022145020786

DOI: https://doi.org/10.1023/A:1022145020786