Abstract

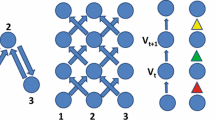

In this paper we develop novel algorithmic ideas for building a natural language parser grounded in the hypothesis of incrementality. Although the incrementality assumption is widely accepted as a model of the human parser and is supported experimentally from a cognitive perspective, it has never been exploited for building automatic parsers of unconstrained real text. The essence of the hypothesis is that words are processed from left to right and that the syntactic structure is kept fully connected at every step.
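
To make the two constraints concrete, here is a minimal Python sketch of a left-to-right loop that keeps a single connected tree after every word. It is our own simplification, not the authors' algorithm: each new word hangs, possibly through a short chain of labels taken from the hypothetical `paths` argument, under some non-word node on the right frontier of the current tree. Attaching only there guarantees that the tree stays connected and that the words keep their input order. The start symbol `S`, the greedy choice, and the `score` function are placeholders; in the approach described in this abstract, a learned predictor of the correctness of partial structures would play the role of `score`.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Node:
    label: str
    children: List["Node"] = field(default_factory=list)
    is_word: bool = False


def copy_tree(node: Node) -> Node:
    return Node(node.label, [copy_tree(c) for c in node.children], node.is_word)


def right_frontier(root: Node) -> List[Node]:
    """Path from the root down through each node's rightmost child."""
    path = [root]
    while path[-1].children:
        path.append(path[-1].children[-1])
    return path


def leaves(node: Node) -> List[str]:
    """Words of the tree, read left to right."""
    if node.is_word:
        return [node.label]
    return [w for c in node.children for w in leaves(c)]


def candidates(tree: Node, word: str, paths: List[List[str]]) -> List[Node]:
    """Every tree obtained by hanging `word` (through one of the hypothetical
    label chains in `paths`) as the new rightmost child of a non-word node on
    the right frontier. Each result is still one connected tree."""
    out = []
    n_sites = sum(1 for n in right_frontier(tree) if not n.is_word)
    for site_index in range(n_sites):
        for path in paths:
            new = copy_tree(tree)
            sites = [n for n in right_frontier(new) if not n.is_word]
            sub = Node(word, is_word=True)
            for label in reversed(path):  # build path[0] -> ... -> word
                sub = Node(label, [sub])
            sites[site_index].children.append(sub)
            out.append(new)
    return out


def parse(words: List[str], paths: List[List[str]],
          score: Callable[[Node], float]) -> Node:
    tree = Node("S")  # hypothetical start symbol
    for word in words:  # strictly left to right
        # Greedy (an assumption): keep only the best-scoring connected tree.
        tree = max(candidates(tree, word, paths), key=score)
    return tree
```

For example, `parse(["the", "dog", "barks"], [[], ["NP"]], score=some_scorer)` returns a single tree whose leaves read `the dog barks`, whatever scorer is plugged in; only the shape of the tree depends on the scores.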

Our proposal relies on a machine learning technique for predicting the correctness of the partial syntactic structures built during parsing. A recursive neural network architecture computes these predictions after being trained on examples drawn from a corpus of parsed sentences, the Penn Treebank. Our results indicate that the approach is viable, and they lay the groundwork for a new generation of natural language processing algorithms that more closely model human parsing. These algorithms may prove very useful in developing efficient parsers.
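
The following sketch shows, under stated assumptions, how a recursive neural network can map a (partial) parse tree to a single number. Each node's state is computed bottom-up from its label and the states of its children, and a sigmoid unit on the root state yields a value in (0, 1) that can be read as a predicted probability of correctness. The class name `RecursiveNet`, the tanh and sigmoid activations, the one-matrix-per-child-position scheme with bounded arity, the hidden size, the part-of-speech labels at the leaves, and the random weights are all illustrative assumptions. Training (for example with backpropagation through structure) is omitted, so the scores carry no meaning until the weights are learned.

```python
import math
import random
from typing import List, Tuple

# A tree is (label, [children]); leaves carry part-of-speech tags (assumption).
Tree = Tuple[str, list]


class RecursiveNet:
    def __init__(self, labels: List[str], hidden: int = 4,
                 max_arity: int = 3, seed: int = 0):
        rng = random.Random(seed)
        self.index = {lab: i for i, lab in enumerate(labels)}
        self.hidden = hidden
        self.max_arity = max_arity

        def mat(rows: int, cols: int) -> List[List[float]]:
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        self.W = mat(hidden, len(labels))  # one-hot label -> state
        self.U = [mat(hidden, hidden) for _ in range(max_arity)]  # per child position
        self.b = [0.0] * hidden
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
        self.b_out = 0.0

    def encode(self, tree: Tree) -> List[float]:
        """State h = tanh(W x_label + sum_k U_k h_child_k + b), computed bottom-up.
        Absent child positions contribute nothing (a zero state)."""
        label, children = tree
        if len(children) > self.max_arity:
            raise ValueError("node has more children than max_arity")
        pre = list(self.b)
        col = self.index[label]
        for i in range(self.hidden):
            pre[i] += self.W[i][col]  # W times a one-hot vector selects column `col`
        for k, child in enumerate(children):
            h = self.encode(child)  # children are encoded before their parent
            for i in range(self.hidden):
                pre[i] += sum(self.U[k][i][m] * h[m] for m in range(self.hidden))
        return [math.tanh(v) for v in pre]

    def score(self, tree: Tree) -> float:
        """Sigmoid output computed from the root state; always in (0, 1)."""
        h = self.encode(tree)
        z = self.b_out + sum(w * v for w, v in zip(self.w_out, h))
        return 1.0 / (1.0 + math.exp(-z))
```

Comparing `score` across the alternative trees built for the same sentence prefix is one way to choose among them. Whether to use absolute scores or a normalized ranking over the candidates is a design choice this sketch leaves open.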




Cite this article

Costa, F., Frasconi, P., Lombardo, V. et al. Towards Incremental Parsing of Natural Language Using Recursive Neural Networks. Applied Intelligence 19, 9–25 (2003). https://doi.org/10.1023/A:1023860521975
