Abstract

This paper considers the problem of source separation in the case of noisy instantaneous mixtures. In a previous work [1], sources were modeled by a mixture of Gaussians, leading to a hierarchical Bayesian model in which the labels of the mixture are treated as i.i.d. hidden variables. We extend this model to incorporate a Markovian structure for the labels. This extension matters for many practical applications, including unsupervised classification and segmentation, pattern recognition, and speech signal processing.
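The following generative sketch makes the hierarchical model concrete. It is a minimal illustration in Python (standard library only) and rests on several assumptions: each source has its own first-order Markov label chain with a transition matrix, each label selects a scalar Gaussian component, and every sensor adds white Gaussian noise of variance noise_var. The function names and the dictionary layout of the source parameters are hypothetical choices for this example and do not come from the paper.

```python
import random

def sample_markov_labels(trans, init, T, rng):
    """Draw labels z(0..T-1) from a first-order Markov chain (hypothetical helper)."""
    K = len(init)
    z = [rng.choices(range(K), weights=init)[0]]
    for _ in range(1, T):
        # The next label depends only on the current one through trans[z[-1]].
        z.append(rng.choices(range(K), weights=trans[z[-1]])[0])
    return z

def simulate_mixture(A, sources, noise_var, T, seed=0):
    """Simulate x(t) = A s(t) + e(t) with a Markov mixture-of-Gaussians source prior.

    A: mixing matrix as a list of rows (m sensors x n sources).
    sources: one dict per source with keys 'init', 'trans', 'means', 'vars'
             (an assumed parameter layout for this sketch).
    Returns (labels, S, X): labels[j][t], sources S[j][t], observations X[t][i].
    """
    rng = random.Random(seed)
    # Hidden labels: one Markov chain per source.
    labels = [sample_markov_labels(p["trans"], p["init"], T, rng) for p in sources]
    # Sources: Gaussian draws conditioned on their labels.
    S = [[rng.gauss(p["means"][k], p["vars"][k] ** 0.5) for k in z]
         for p, z in zip(sources, labels)]
    # Observations: instantaneous linear mixture plus white Gaussian noise.
    X = []
    for t in range(T):
        x_t = [sum(A[i][j] * S[j][t] for j in range(len(sources)))
               + rng.gauss(0.0, noise_var ** 0.5)
               for i in range(len(A))]
        X.append(x_t)
    return labels, S, X
```

For example, two sources sharing a "sticky" transition matrix [[0.95, 0.05], [0.05, 0.95]] produce long runs of identical labels, as expected of segment-like signals. Setting every row of a source's transition matrix equal to its initial distribution makes the labels i.i.d., which recovers the earlier model of [1] as a special case.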

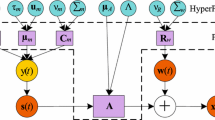

In order to estimate the mixing matrix and the a priori model parameters, we treat the observations as incomplete data. The missing data are the sources and the labels: the sources are missing data for the observations, and the labels are in turn missing data for the (themselves missing) sources. This hierarchical model leads to specific restoration-maximization algorithms. The restoration step can be carried out in three different ways: (i) the complete-data likelihood is replaced by its conditional expectation, which leads to the EM (expectation-maximization) algorithm [2]; (ii) the missing data are replaced by their maximum a posteriori estimates, which leads to the JMAP (joint maximum a posteriori) algorithm [3]; (iii) the missing data are sampled from their a posteriori distributions, which leads to the SEM (stochastic EM) algorithm [4]. A Gibbs sampling scheme is implemented to generate the missing data. We also introduce a relaxation strategy into these algorithms to reduce the computational cost, which is driven by the number of joint label configurations (K^n for n sources with K Gaussian components each) and therefore grows exponentially with the number of sources.
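The three restoration options can be illustrated on a deliberately simplified problem: a single scalar source observed directly, with i.i.d. labels, so that only the labels are missing and no mixing matrix, Markov chain, Gibbs sampler, or relaxation is involved. The Python sketch below (standard library only) is an illustration under those assumptions, not the paper's algorithm. For each sample it restores the label as posterior probabilities (EM-style conditional expectation), as a one-hot posterior mode (JMAP-style), or as a one-hot posterior sample (SEM-style), and then re-estimates weights, means, and variances by weighted maximum likelihood. The function names and the variance floor v_min are hypothetical choices for this example.

```python
import math
import random

def label_posterior(x, w, m, v):
    """Posterior probabilities p(z = k | x) for a scalar Gaussian mixture."""
    logp = []
    for wk, mk, vk in zip(w, m, v):
        if wk <= 0.0:
            logp.append(-math.inf)  # empty component: zero posterior mass
        else:
            logp.append(math.log(wk) - 0.5 * math.log(2.0 * math.pi * vk)
                        - 0.5 * (x - mk) ** 2 / vk)
    top = max(logp)  # log-sum-exp shift to avoid underflow
    p = [math.exp(l - top) for l in logp]
    total = sum(p)
    return [pk / total for pk in p]

def restore(x, w, m, v, mode, rng):
    """Restoration step for the label of one sample x."""
    post = label_posterior(x, w, m, v)
    K = len(post)
    if mode == "em":      # conditional expectation: soft assignment
        return post
    if mode == "jmap":    # posterior mode: hard assignment
        k = max(range(K), key=post.__getitem__)
    elif mode == "sem":   # draw from the posterior: stochastic assignment
        k = rng.choices(range(K), weights=post)[0]
    else:
        raise ValueError(f"unknown mode: {mode}")
    return [1.0 if j == k else 0.0 for j in range(K)]

def restoration_maximization(xs, w, m, v, mode, n_iter=50, seed=0, v_min=1e-6):
    """Alternate label restoration and ML re-estimation of (w, m, v)."""
    rng = random.Random(seed)
    w, m, v = list(w), list(m), list(v)
    K = len(w)
    for _ in range(n_iter):
        R = [restore(x, w, m, v, mode, rng) for x in xs]  # restoration
        n = [sum(r[k] for r in R) for k in range(K)]      # maximization
        for k in range(K):
            if n[k] <= 0.0:
                continue  # no sample assigned: keep the previous m[k], v[k]
            m[k] = sum(r[k] * x for r, x in zip(R, xs)) / n[k]
            var = sum(r[k] * (x - m[k]) ** 2 for r, x in zip(R, xs)) / n[k]
            v[k] = max(var, v_min)  # floor keeps the likelihood bounded
        w = [nk / len(xs) for nk in n]
    return w, m, v
```

With well-separated clusters all three variants typically reach similar estimates. They differ in how the restoration step handles uncertainty: EM keeps soft assignments, JMAP commits to the most probable label, and SEM injects sampling noise, which can help it move away from poor initializations.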


References

H. Snoussi and A. Mohammad-Djafari, “Bayesian Source Separation with Mixture of Gaussians Prior for Sources and Gaussian Prior for Mixture Coefficients,” in Bayesian Inference and Maximum Entropy Methods, A. Mohammad-Djafari (Ed.), Gif-sur-Yvette, France, July 2000, pp. 388-406, Proc. of MaxEnt, Amer. Inst. Physics.

A.P. Dempster, N.M. Laird, and D.B. Rubin, “Maximum Likelihood from Incomplete Data via the EM Algorithm,” J. R. Statist. Soc. B, vol. 39, 1977, pp. 1-38.

W. Qian and D.M. Titterington, “Bayesian Image Restoration: An Application to Edge-Preserving Surface Recovery,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 15, no. 7, 1993, pp. 748-752.

G. Celeux and J. Diebolt, “The SEM Algorithm: A Probabilistic Teacher Algorithm Derived from the EM Algorithm for the Mixture Problem,” Comput. Statist. Quart., vol. 2, 1985, pp. 73-82.

A. Cichocki and R. Unbehauen, “Robust Neural Networks with On-Line Learning for Blind Identification and Blind Separation of Sources,” IEEE Trans. on Circuits and Systems, vol. 43, no. 11, 1996, pp. 894-906.

S.J. Roberts, “Independent Component Analysis: Source Assessment and Separation, a Bayesian Approach,” IEE Proceedings-Vision, Image, and Signal Processing, vol. 145, no. 3, 1998.

T. Lee, M. Lewicki, and T. Sejnowski, “Unsupervised Classification with Non-Gaussian Mixture Models Using ICA,” Advances in Neural Information Processing Systems, 1999, (in press).

T. Lee, M. Lewicki, and T. Sejnowski, “Independent Component Analysis Using an Extended Infomax Algorithm for Mixed Sub-Gaussian and Super-Gaussian Sources,” Neural Computation, vol. 11, no. 2, 1999, pp. 409-433.

T. Lee, M. Girolami, A. Bell, and T. Sejnowski, “A Unifying Information-Theoretic Framework for Independent Component Analysis,” Int. Journal of Computers and Mathematics with Applications Computation, 1999, (in press).

I. Ziskind and M. Wax, “Maximum Likelihood Localization of Multiple Sources by Alternating Projection,” IEEE Trans. Acoust., Speech, Signal Processing, vol. ASSP-36, no. 10, 1988, pp. 1553-1560.

M. Wax, “Detection and Localization of Multiple Sources via the Stochastic Signals Model,” IEEE Trans. Signal Processing, vol. 39, no. 11, 1991, pp. 2450-2456.

J.-F. Cardoso, “Infomax and Maximum Likelihood for Source Separation,” IEEE Signal Processing Letters, vol. 4, no. 4, 1997, pp. 112-114.

J.-L. Lacoume, “A Survey of Source Separation,” in Proc. First International Conference on Independent Component Analysis and Blind Source Separation ICA'99, Aussois, France, Jan. 11–15, 1999, pp. 1-6.

E. Oja, “Nonlinear PCA Criterion and Maximum Likelihood in Independent Component Analysis,” in Proc. First International Conference on Independent Component Analysis and Blind Source Separation ICA'99, Aussois, France, Jan. 11–15, 1999, pp. 143-148.

R.B. MacLeod and D.W. Tufts, “Fast Maximum Likelihood Estimation for Independent Component Analysis,” in Proc. First International Conference on Independent Component Analysis and Blind Source Separation ICA'99, Aussois, France, Jan. 11–15, 1999, pp. 319-324.

O. Bermond and J.-F. Cardoso, “Approximate Likelihood for Noisy Mixtures,” in Proc. First International Conference on Independent Component Analysis and Blind Source Separation ICA'99, Aussois, France, Jan. 11–15, 1999, pp. 325-330.

P. Comon, C. Jutten, and J. Herault, “Blind Separation of Sources, Part II: Problems Statement,” Signal Processing, vol. 24, no. 1, 1991, pp. 11-20.

C. Jutten and J. Herault, “Blind Separation of Sources, Part I: An Adaptive Algorithm Based on Neuromimetic Architecture,” Signal Processing, vol. 24, no. 1, 1991, pp. 1-10.

E. Moreau and B. Stoll, “An Iterative Block Procedure for the Optimization of Constrained Contrast Functions,” in Proc. First International Conference on Independent Component Analysis and Blind Source Separation ICA'99, Aussois, France, Jan. 11–15, 1999, pp. 59-64.

P. Comon and O. Grellier, “Non-linear Inversion of Underdetermined Mixtures,” in Proc. First International Conference on Independent Component Analysis and Blind Source Separation ICA'99, Aussois, France, Jan. 11–15, 1999, pp. 461-465.

J.-F. Cardoso and B. Laheld, “Equivariant Adaptive Source Separation,” IEEE Trans. on Sig. Proc., vol. 44, no. 12, 1996, pp. 3017-3030.

A. Belouchrani, K. Abed-Meraim, J.-F. Cardoso, and E. Moulines, “A Blind Source Separation Technique Based on Second-Order Statistics,” IEEE Trans. on Sig. Proc., vol. 45, no. 2, 1997, pp. 434-444.

S.-I. Amari and J.-F. Cardoso, “Blind Source Separation—Semiparametric Statistical Approach,” IEEE Trans. on Sig. Proc., vol. 45, no. 11, 1997, pp. 2692-2700.

J.-F. Cardoso, “Blind Signal Separation: Statistical Principles,” Proceedings of the IEEE, vol. 86, no. 10, Oct. 1998, pp. 2009-2025, Special Issue on Blind Identification and Estimation, R.-W. Liu and L. Tong (Eds.).

J.J. Rajan and P.J.W. Rayner, “Decomposition and the Discrete Karhunen-Loève Transformation Using a Bayesian Approach,” IEE Proceedings-Vision, Image, and Signal Processing, vol. 144, no. 2, 1997, pp. 116-123.

K. Knuth, “Bayesian Source Separation and Localization,” in SPIE'98 Proceedings: Bayesian Inference for Inverse Problems, A. Mohammad-Djafari (Ed.), San Diego, CA, July 1998, pp. 147-158.

K.H. Knuth and H.G. Vaughan Jr., “Convergent Bayesian Formulations of Blind Source Separation and Electromagnetic Source Estimation,” in Maximum Entropy and Bayesian Methods, Munich 1998, W. von der Linden, V. Dose, R. Fischer, and R. Preuss (Eds.), Dordrecht, Kluwer, 1999, pp. 217-226.

S.E. Lee and S.J. Press, “Robustness of Bayesian Factor Analysis Estimates,” Communications in Statistics: Theory and Methods, vol. 27, no. 8, 1998.

K. Knuth, “A Bayesian Approach to Source Separation,” in Proceedings of the First International Workshop on Independent Component Analysis and Signal Separation: ICA'99, J.-F. Cardoso, C. Jutten, and P. Loubaton (Eds.), Aussois, France, 1999, pp. 283-288.

T. Lee, M. Lewicki, M. Girolami, and T. Sejnowski, “Blind Source Separation of More Sources than Mixtures Using Overcomplete Representations,” IEEE Signal Processing Letters, 1999 (in press).

A. Mohammad-Djafari, “A Bayesian Approach to Source Separation,” in Bayesian Inference and Maximum Entropy Methods, J. Rychert, G. Erickson, and C. Smith (Eds.), Boise, ID, July 1999, MaxEnt Workshops, Amer. Inst. Physics.

O. Bermond, Méthodes statistiques pour la séparation de sources, Ph.D. thesis, Ecole Nationale Supérieure des Télécommunications, 2000.

H. Attias, “Blind Separation of Noisy Mixture: An EM Algorithm for Independent Factor Analysis,” Neural Computation, vol. 11, 1999, pp. 803-851.

R.J. Hathaway, “A Constrained EM Algorithm for Univariate Normal Mixtures,” J. Statist. Comput. Simul., vol. 23, 1986, pp. 211-230.

A. Ridolfi and J. Idier, “Penalized Maximum Likelihood Estimation for Univariate Normal Mixture Distributions,” in Actes 17e coll. GRETSI, Vannes, France, Sept. 1999, pp. 259-262.

H. Snoussi and A. Mohammad-Djafari, “Penalized Maximum Likelihood for Multivariate Gaussian Mixture,” in Bayesian Inference and Maximum Entropy Methods, MaxEnt Workshops, Aug. 2001, to appear in Amer. Inst. Physics.

A. Belouchrani, “Séparation Autodidacte de Sources: Algorithmes, Performances et Application à des Signaux Expérimentaux,” Ph.D. thesis, Ecole Nationale Supérieure des Télécommunications, 1995.

Z. Ghahramani and M. Jordan, “Factorial Hidden Markov Models,” Machine Learning, vol. 29, 1997, pp. 245-273.

J.-F. Cardoso and B. Laheld, “Equivariant Adaptive Source Separation,” IEEE Trans. Signal Processing, vol. 44, 1996, pp. 3017-3030.

J.W. Brewer, “Kronecker Products and Matrix Calculus in System Theory,” IEEE Trans. Circ. Syst., vol. CS-25, no. 9, 1978, pp. 772-781.

L.R. Rabiner and B.H. Juang, “An Introduction to Hidden Markov Models,” IEEE ASSP Mag., 1986, pp. 4-16.

E. Moreau and O. Macchi, “High-Order Contrasts for Self-Adaptive Source Separation,” Int. J. Adaptive Control Signal Process., vol. 10, 1996, pp. 19-46.


Cite this article

Snoussi, H., Mohammad-Djafari, A. Bayesian Unsupervised Learning for Source Separation with Mixture of Gaussians Prior. The Journal of VLSI Signal Processing-Systems for Signal, Image, and Video Technology 37, 263–279 (2004). https://doi.org/10.1023/B:VLSI.0000027490.49527.47
