Abstract

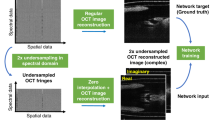

The rapidly evolving field of optoacoustic (photoacoustic) imaging and tomography is driven by a constant need for better imaging performance in terms of resolution, speed, sensitivity, depth and contrast. In practice, data acquisition strategies commonly involve sub-optimal sampling of the tomographic data, resulting in inevitable performance trade-offs and diminished image quality. We propose a new framework for efficient recovery of image quality from sparse optoacoustic data based on a deep convolutional neural network and demonstrate its performance with whole body mouse imaging in vivo. To generate accurate high-resolution reference images for optimal training, a full-view tomographic scanner capable of attaining superior cross-sectional image quality from living mice was devised. When provided with images reconstructed from substantially undersampled data or limited-view scans, the trained network was capable of enhancing the visibility of arbitrarily oriented structures and restoring the expected image quality. Notably, the network also eliminated some reconstruction artefacts present in reference images rendered from densely sampled data. No comparable gains were achieved when the training was performed with synthetic or phantom data, underlining the importance of training with high-quality in vivo images acquired by full-view scanners. The new method can benefit numerous optoacoustic imaging applications by mitigating common image artefacts, enhancing anatomical contrast and image quantification capacities, accelerating data acquisition and image reconstruction approaches, while also facilitating the development of practical and affordable imaging systems. The suggested approach operates solely on image-domain data and thus can be seamlessly applied to artefactual images reconstructed with other modalities.
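The two degraded acquisition scenarios named above, sparse sampling and limited-view scanning, can be pictured as two ways of discarding detector channels before reconstruction. The following is only a minimal sketch under stated assumptions, not the authors' pipeline: the 512-channel full-ring array, the function names and the toy signal values are all hypothetical, and the 32 and 128 channel counts are borrowed from the Supplementary Video descriptions.

```python
from typing import List, Sequence


def sparse_channels(n_total: int, n_keep: int) -> List[int]:
    """Indices of n_keep channels spread evenly over a ring of n_total channels."""
    if not 0 < n_keep <= n_total:
        raise ValueError("n_keep must be between 1 and n_total")
    return [(i * n_total) // n_keep for i in range(n_keep)]


def limited_view_channels(n_total: int, n_keep: int, start: int = 0) -> List[int]:
    """Indices of n_keep adjacent channels, i.e. one contiguous arc of the ring."""
    if not 0 < n_keep <= n_total:
        raise ValueError("n_keep must be between 1 and n_total")
    return [(start + i) % n_total for i in range(n_keep)]


def subsample(signals: Sequence[Sequence[float]], keep: Sequence[int]) -> List[List[float]]:
    """Keep only the selected rows of a (channels x time samples) signal array."""
    return [list(signals[c]) for c in keep]


# Toy data (assumption): 512 channels with 4 time samples each.
# Each row holds its own channel index so the selection is easy to inspect.
full = [[float(c)] * 4 for c in range(512)]

sparse = subsample(full, sparse_channels(512, 32))        # every 16th channel
arc = subsample(full, limited_view_channels(512, 128))    # one quarter of the ring
```

In a workflow of this kind, images reconstructed from `sparse` or `arc` would serve as network inputs, and the image reconstructed from `full` would serve as the training reference.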

Data availability

The datasets used for the current study were generated and analysed in our laboratory and are downloadable at https://doi.org/10.6084/m9.figshare.9250784. The code to reproduce the results of the paper is available at https://github.com/ndavoudi/sparse_artefact_unet.

Acknowledgements

This work was partially supported by the European Research Council under grant agreement ERC-2015-CoG-682379.

Author information

Contributions

N.D., X.L.D.-B. and D.R. conceived the study. N.D. and X.L.D.-B. carried out the experiments. N.D. implemented the image reconstruction and processing algorithms and analysed the data. D.R. and X.L.D.-B. supervised the study and data analysis. All authors discussed the results and contributed to writing the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Information

Supplementary Figs. 1–10 and description of the Supplementary Videos.

Supplementary Video 1

Breathing mouse reconstruction with 32 channels.

Supplementary Video 2

Breathing mouse reconstruction with 128 channels.

Supplementary Video 3

Fly-through reconstruction with 32 channels.

Supplementary Video 4

Fly-through reconstruction with 128 channels.

About this article

Cite this article

Davoudi, N., Deán-Ben, X.L. & Razansky, D. Deep learning optoacoustic tomography with sparse data. Nat Mach Intell 1, 453–460 (2019). https://doi.org/10.1038/s42256-019-0095-3

DOI: https://doi.org/10.1038/s42256-019-0095-3
