Abstract

As artificial intelligence (AI) transitions from research to deployment, creating the appropriate datasets and data pipelines to develop and evaluate AI models is increasingly the biggest challenge. Publicly available automated AI model builders can now achieve top performance in many applications. In contrast, the design and sculpting of the data used to develop AI often rely on bespoke manual work, and these steps critically affect the trustworthiness of the model. This Perspective discusses key considerations for each stage of the data-for-AI pipeline, from data design through data sculpting (for example, cleaning, valuation and annotation) to data evaluation, with the aim of making AI more reliable. We highlight technical advances that help to make the data-for-AI pipeline more scalable and rigorous. Furthermore, we discuss how recent data regulations and policies can affect AI.
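Data valuation, one of the data-sculpting steps named above, assigns each training point a score reflecting how much it contributes to model performance. One well-known formulation is Data Shapley (Ghorbani & Zou, 2019), which scores a point by its average marginal contribution over orderings of the training set. The sketch below is a minimal illustration, not the authors' implementation: it uses plain Monte Carlo permutation sampling (without the truncation heuristics of the original paper), and the toy 1-D dataset, the 1-nearest-neighbour classifier, validation accuracy as the utility, and the function names `utility` and `monte_carlo_shapley` are all hypothetical choices made for illustration.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical toy setting: each point is (feature, label) on a 1-D axis.
Point = Tuple[float, int]


def utility(train: Sequence[Point], val: Sequence[Point]) -> float:
    """Validation accuracy of a 1-nearest-neighbour classifier fit on `train`.

    An empty training set is assigned utility 0.0 (an assumption of this sketch).
    """
    if not train:
        return 0.0
    correct = 0
    for x, y in val:
        # Predict the label of the closest training point.
        _, predicted = min(train, key=lambda p: abs(p[0] - x))
        correct += int(predicted == y)
    return correct / len(val)


def monte_carlo_shapley(train: Sequence[Point], val: Sequence[Point],
                        num_permutations: int = 200, seed: int = 0) -> List[float]:
    """Estimate each training point's Shapley value by averaging its marginal
    contribution to `utility` over randomly sampled orderings of `train`."""
    rng = random.Random(seed)
    n = len(train)
    totals = [0.0] * n
    for _ in range(num_permutations):
        order = list(range(n))
        rng.shuffle(order)
        prefix: List[Point] = []
        previous = utility(prefix, val)
        for i in order:
            prefix.append(train[i])
            current = utility(prefix, val)
            totals[i] += current - previous  # marginal contribution of point i
            previous = current
    return [t / num_permutations for t in totals]


# Hypothetical toy data; the last training point carries a deliberately wrong label.
train_set = [(0.0, 0), (0.3, 0), (1.0, 1), (1.3, 1), (0.15, 1)]
val_set = [(0.05, 0), (0.25, 0), (1.1, 1), (1.2, 1)]
values = monte_carlo_shapley(train_set, val_set)
for point, value in zip(train_set, values):
    print(point, round(value, 3))
```

Because the marginal contributions within each ordering telescope, the estimates sum to the utility of the full training set minus that of the empty set (here 1.0). Points with low or negative estimates are natural candidates for inspection during cleaning, for example for label errors. Exact Shapley values would require averaging over all n! orderings, which is why sampling or other approximations are used in practice.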

Change history

21 September 2022

A Correction to this paper has been published: https://doi.org/10.1038/s42256-022-00548-7

Acknowledgements

We thank T. Hastie, R. Daneshjou, K. Vodrahalli and A. Ghorbani for discussions. J.Z. is supported by an NSF CAREER grant.

Ethics declarations

Competing interests

M.Z. is a co-founder of Databricks. The other authors declare no competing interests.

Peer review

Nature Machine Intelligence thanks Emmanuel Kahembwe and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information

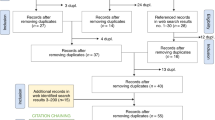

Details of the image classification experiments shown in Fig. 1c.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Liang, W., Tadesse, G.A., Ho, D. et al. Advances, challenges and opportunities in creating data for trustworthy AI. Nat Mach Intell 4, 669–677 (2022). https://doi.org/10.1038/s42256-022-00516-1

DOI: https://doi.org/10.1038/s42256-022-00516-1
