Abstract

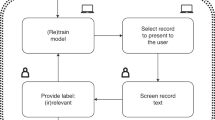

As machine learning models become larger, and are increasingly trained on large and uncurated datasets in weakly supervised mode, it becomes important to establish mechanisms for inspecting, interacting with and revising models. These are necessary to mitigate shortcut learning effects and to guarantee that the model’s learned knowledge is aligned with human knowledge. Recently, several explanatory interactive machine learning methods have been developed for this purpose, but each has different motivations and methodological details. In this work, we provide a unification of various explanatory interactive machine learning methods into a single typology by establishing a common set of basic modules. We discuss benchmarks and other measures for evaluating the overall abilities of explanatory interactive machine learning methods. With this extensive toolbox, we systematically and quantitatively compare several explanatory interactive machine learning methods. In our evaluations, all methods are shown to improve machine learning models in terms of accuracy and explainability. However, we found remarkable differences in individual benchmark tasks, which reveal valuable application-relevant aspects for the integration of these benchmarks in the development of future methods.

Data availability

All datasets are publicly available. We use two benchmark datasets to which we add a confounder (shortcut), that is, we modify the original dataset, and one scientific dataset in which the confounder is not added artificially but is inherently present in the images. The MNIST dataset is available at http://yann.lecun.com/exdb/mnist/ and the code to generate its decoy version at https://github.com/dtak/rrr/blob/master/experiments/Decoy%20MNIST.ipynb. The FMNIST dataset is available at https://github.com/zalandoresearch/fashion-mnist and the code to generate its decoy version at https://github.com/ml-research/A-Typology-to-Explore-the-Mitigation-of-Shortcut-Behavior/blob/main/data_store/rawdata/load_decoy_mnist.py. The scientific ISIC dataset and its segmentation masks to highlight the confounders are both available at https://isic-archive.com/api/v1/.
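To illustrate what adding a confounder to a benchmark image can look like, the following is a minimal sketch and not the code from the repositories linked above. It assumes a decoy in the form of a small square patch placed in a random corner of a greyscale image; the function name `add_decoy`, the patch size and the intensity formula `255 - 25 * label` are hypothetical choices made for this example only. During training the patch intensity depends on the label, so it acts as a shortcut; at test time it is drawn at random, so a model relying on it should fail.

```python
import numpy as np


def add_decoy(image, label, rng, train=True, patch=4, num_classes=10):
    """Return a copy of a 2D uint8 image with a square patch in a random corner.

    Illustrative assumption: in training mode the patch intensity is a fixed
    function of the label (the shortcut); in test mode it is derived from a
    randomly drawn class, so the shortcut carries no information.
    """
    out = image.copy()
    height, width = out.shape
    # Pick the class that determines the patch intensity.
    source = int(label) if train else int(rng.integers(num_classes))
    shade = 255 - 25 * source  # hypothetical label-to-intensity mapping
    # Choose one of the four corners uniformly at random.
    top = int(rng.integers(2)) * (height - patch)
    left = int(rng.integers(2)) * (width - patch)
    out[top:top + patch, left:left + patch] = shade
    return out


rng = np.random.default_rng(0)
blank = np.zeros((28, 28), dtype=np.uint8)
decoyed = add_decoy(blank, label=3, rng=rng, train=True)
```

Keeping the test-time intensity independent of the label is what makes the benchmark diagnostic: accuracy on the decoyed test set drops if the model has learned the patch rather than the object.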

Code availability

All the code (refs. 37–41) to reproduce the figures and results of this article can be found at https://github.com/ml-research/A-Typology-to-Explore-the-Mitigation-of-Shortcut-Behavior (archived at https://doi.org/10.5281/zenodo.6781501). The CD algorithm is implemented at https://github.com/csinva/hierarchical-dnn-interpretations. Furthermore, other implementations of the evaluated XIL algorithms can be found in the following repositories: RRR at https://github.com/dtak/rrr, CDEP at https://github.com/laura-rieger/deep-explanation-penalization and CE at https://github.com/stefanoteso/calimocho.

References

Trust; Definition and Meaning of trust. Random House Unabridged Dictionary (2022); https://www.dictionary.com/browse/trust

Obermeyer, Z., Powers, B., Vogeli, C. & Mullainathan, S. Dissecting racial bias in an algorithm used to manage the health of populations. Science 366, 447–453 (2019).

Holzinger, A. The next frontier: AI we can really trust. In Machine Learning and Principles and Practice of Knowledge Discovery in Databases—Proc. International Workshops of ECML PKDD 2021 (eds Kamp, M. et al.) 427–440 (Springer, 2021).

Geirhos, R. et al. Shortcut learning in deep neural networks. Nat. Mach. Intell. 2, 665–673 (2020).

Brown, T. et al. Language models are few-shot learners. In Adv. Neural Inf. Process. Syst. Vol. 33 (eds Larochelle, H. et al.) 1877–1901 (Curran Associates, Inc., 2020).

Ramesh, A., Dhariwal, P., Nichol, A., Chu, C. & Chen, M. Hierarchical text-conditional image generation with CLIP latents. Preprint at arXiv https://arxiv.org/abs/2204.06125 (2022).

Bender, E. M., Gebru, T., McMillan-Major, A. & Shmitchell, S. On the dangers of stochastic parrots: can language models be too big? In Conference on Fairness, Accountability, and Transparency (FAccT) (eds Elish, M. C. et al.) 610–623 (Association for Computing Machinery, 2021).

Angerschmid, A., Zhou, J., Theuermann, K., Chen, F. & Holzinger, A. Fairness and explanation in AI-informed decision making. Mach. Learn. Knowl. Extr. 4, 556–579 (2022).

Belinkov, Y. & Glass, J. Analysis methods in neural language processing: a survey. Trans. Assoc. Comput. Linguist. 7, 49–72 (2019).

Atanasova, P., Simonsen, J. G., Lioma, C. & Augenstein, I. A diagnostic study of explainability techniques for text classification. In Proc. Conference on Empirical Methods in Natural Language Processing (EMNLP) (eds Webber, B. et al.) 3256–3274 (Association for Computational Linguistics, 2020).

Lapuschkin, S. et al. Unmasking Clever Hans predictors and assessing what machines really learn. Nat. Commun. 10, 1096 (2019).

Teso, S. & Kersting, K. Explanatory interactive machine learning. In Proc. AAAI/ACM Conference on AI, Ethics, and Society (AIES) (eds Conitzer, V. et al.) 239–245 (Association for Computing Machinery, 2019).

Schramowski, P. et al. Making deep neural networks right for the right scientific reasons by interacting with their explanations. Nat. Mach. Intell. 2, 476–486 (2020).

Popordanoska, T., Kumar, M. & Teso, S. Machine guides, human supervises: interactive learning with global explanations. Preprint at arXiv https://arxiv.org/abs/2009.09723 (2020).

Ross, A. S., Hughes, M. C. & Doshi-Velez, F. Right for the right reasons: training differentiable models by constraining their explanations. In Proc. 26th International Joint Conference on Artificial Intelligence (IJCAI) (ed Sierra, C.) 2662–2670 (AAAI Press, 2017).

Shao, X., Skryagin, A., Schramowski, P., Stammer, W. & Kersting, K. Right for better reasons: training differentiable models by constraining their influence function. In Proc. 35th Conference on Artificial Intelligence (AAAI) (eds Honavar, V. & Spaan, M.) 9533–9540 (AAAI, 2021).

Rieger, L., Singh, C., Murdoch, W. & Yu, B. Interpretations are useful: penalizing explanations to align neural networks with prior knowledge. In Proc. International Conference on Machine Learning (ICML) (eds Daumé, H. & Singh, A.) 8116–8126 (PMLR, 2020).

Selvaraju, R. R. et al. Taking a HINT: leveraging explanations to make vision and language models more grounded. In Proc. IEEE/CVF International Conference on Computer Vision (ICCV) (ed O'Conner, L.) 2591–2600 (The Institute of Electrical and Electronics Engineers, Inc., 2019).

Teso, S., Alkan, Ö., Stammer, W. & Daly, E. Leveraging explanations in interactive machine learning: an overview. Preprint at arXiv https://arxiv.org/abs/2207.14526 (2022).

Hechtlinger, Y. Interpretation of prediction models using the input gradient. Preprint at arXiv https://arxiv.org/abs/1611.07634v1 (2016).

Selvaraju, R. R. et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. In Proc. IEEE International Conference on Computer Vision (ICCV) (ed O'Conner, L.) 618–626 (The Institute of Electrical and Electronics Engineers, Inc., 2017).

Ribeiro, M. T., Singh, S. & Guestrin, C. ‘Why should I trust you?’: explaining the predictions of any classifier. In Proc. 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Demonstrations (eds Bansal, M. & Rush, A. M.) 97–101 (Association for Computing Machinery, 2016).

Stammer, W., Schramowski, P. & Kersting, K. Right for the right concept: revising neuro-symbolic concepts by interacting with their explanations. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (ed O'Conner, L.) 3618–3628 (The Institute of Electrical and Electronics Engineers, Inc., 2021).

Zhong, Y. & Ettinger, G. Enlightening deep neural networks with knowledge of confounding factors. In Proc. IEEE International Conference on Computer Vision Workshops (ICCVW) (ed O'Conner, L.) 1077–1086 (The Institute of Electrical and Electronics Engineers, Inc., 2017).

Adebayo, J., Gilmer, J., Muelly, M., Goodfellow, I., Hardt, M. & Kim, B. Sanity checks for saliency maps. Adv. Neural Inf. Process. Syst. 9505–9515 (2018).

Krishna, S. et al. The disagreement problem in explainable machine learning: a practitioner’s perspective. Preprint at arXiv https://arxiv.org/abs/2202.01602v3 (2022).

Tan, A. H., Carpenter, G. A. & Grossberg, S. Intelligence through interaction: towards a unified theory for learning. In Advances in Neural Networks: International Symposium on Neural Networks (ISNN) (eds Derong, L. et al.) 1094–1103 (Springer, 2007).

Dafoe, A. et al. Cooperative AI: machines must learn to find common ground. Nature 593, 33–36 (2021).

Lang, O. et al. Training a GAN to explain a classifier in StyleSpace. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (ed O'Conner, L.) 673–682 (The Institute of Electrical and Electronics Engineers, Inc., 2021).

Anders, C. J. et al. Analyzing ImageNet with spectral relevance analysis: towards ImageNet un-Hans’ed. Preprint at arXiv https://arxiv.org/abs/1912.11425v1 (2019).

Doshi-Velez, F. & Kim, B. Towards a rigorous science of interpretable machine learning. Preprint at arXiv https://arxiv.org/abs/1702.08608 (2017).

Slany, E., Ott, Y., Scheele, S., Paulus, J. & Schmid, U. CAIPI in practice: towards explainable interactive medical image classification. In AIAI Workshops (eds Maglogiannis, L. I. et al.) 389–400 (Springer, 2022).

Kingma, D. P. & Ba, J. Adam: a method for stochastic optimization. In Proc. Third International Conference on Learning Representations (ICLR) (eds Bengio, Y. & LeCun, Y.) (2015).

Codella, N. et al. Skin lesion analysis toward melanoma detection: a challenge at the 2017 International Symposium on Biomedical Imaging (ISBI), hosted by the International Skin Imaging Collaboration (ISIC). In 15th International Symposium on Biomedical Imaging (ISBI) (eds Egan, G. & Salvado, O.) 32–36 (The Institute of Electrical and Electronics Engineers, Inc., 2017).

Combalia, M. et al. BCN20000: dermoscopic lesions in the wild. Preprint at arXiv https://arxiv.org/abs/1908.02288 (2019).

Tschandl, P., Rosendahl, C. & Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 5, 180161 (2018).

Friedrich, F., Stammer, W., Schramowski, P. & Kersting, K. A typology to explore the mitigation of shortcut behavior. GitHub https://github.com/ml-research/A-Typology-to-Explore-the-Mitigation-of-Shortcut-Behavior (2022).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proc. International Conference on Learning Representations (ICLR) (eds Bengio, Y. & LeCun, Y.) 1–14 (2015).

Deng, J. et al. Imagenet: a large-scale hierarchical image database. In Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 248–255 (The Institute of Electrical and Electronics Engineers, Inc., 2009).

Paszke, A. et al. PyTorch: an imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 32, 8024–8035 (2019).

Xiao, H., Rasul, K. & Vollgraf, R. Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. Preprint at arXiv https://arxiv.org/abs/1708.07747 (2017).

Acknowledgements

We thank L. Meister for preliminary results and insights on this research. This work benefited from the Hessian Ministry of Science and the Arts (HMWK) projects ‘The Third Wave of Artificial Intelligence—3AI’, hessian.AI (F.F., W.S., P.S., K.K.) and ‘The Adaptive Mind’ (K.K.), the ICT-48 Network of AI Research Excellence Centre ‘TAILOR’ (EU Horizon 2020, GA No 952215) (K.K.), the Hessian research priority program LOEWE within the project WhiteBox (K.K.), and from the German Center for Artificial Intelligence (DFKI) project ‘SAINT’ (P.S., K.K.).

Author information

Contributions

F.F., W.S. and P.S. designed the experiments. F.F. conducted the experiments. F.F., W.S., P.S. and K.K. interpreted the data and drafted the manuscript. K.K. directed the research and gave initial input. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Machine Intelligence thanks Mengnan Du and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Friedrich, F., Stammer, W., Schramowski, P. et al. A typology for exploring the mitigation of shortcut behaviour. Nat Mach Intell 5, 319–330 (2023). https://doi.org/10.1038/s42256-023-00612-w
