Paper:

Trial of Utilization of an Environmental Map Generated by a High-Precision 3D Scanner for a Mobile Robot

Rikuto Sekine, Tetsuo Tomizawa, and Susumu Tarao

Department of Mechanical Engineering, National Institute of Technology, Tokyo College

1220-2, Kunugida-machi, Hachioji, Tokyo 193-0997, Japan

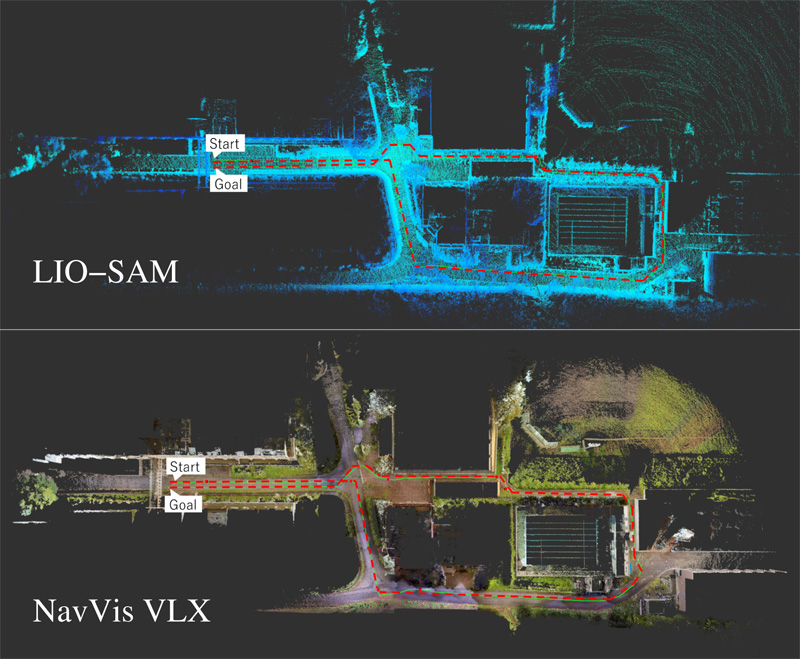

In recent years, high-precision 3D environmental maps have attracted the attention of researchers in various fields and have been put to practical use. For the autonomous movement of mobile robots, it is common to create an environmental map in advance and use it for localization. In this study, to investigate the usefulness of 3D environmental maps, we scanned physical environments using two different simultaneous localization and mapping (SLAM) approaches: one based on a wearable 3D scanner and the other on a 3D LiDAR mounted on a robot. We used the scan data to create 3D environmental maps consisting of 3D point clouds. Wearable 3D scanners can generate high-density, high-precision 3D point-cloud maps, and applying such maps to autonomous navigation is expected to improve the accuracy of self-localization. Navigation experiments were conducted with a robot that used the maps obtained from each of the two approaches. Autonomous navigation was achieved in this manner, and the performance of the robot with each type of map was assessed by requiring it to halt at specific landmarks set along the route. The high-density colored environmental map generated from the wearable 3D scanner’s data enabled the robot to perform autonomous navigation easily and with a high degree of accuracy, showing potential for use in digital twin applications.

3D maps using two different approaches

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
