Paper:

Deformable Map Matching to Handle Uncertain Loop-Less Maps

Kanji Tanaka

University of Fukui

3-9-1 Bunkyo, Fukui-shi, Fukui 910-8507, Japan

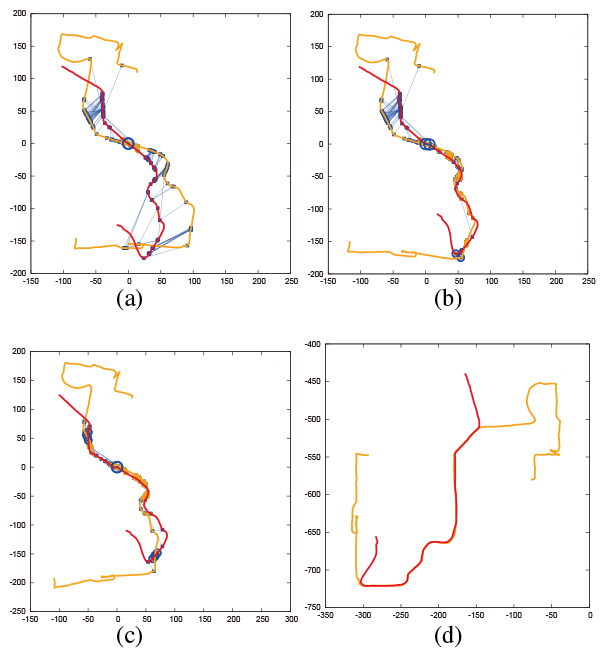

In the classical context of map-relative localization, map matching (MM) is typically defined as the task of finding a rigid transformation (i.e., a 3-DOF rotation and translation on the 2D plane of motion) that aligns two maps, a query map and a reference map, each built by a mobile robot. This definition is valid for loop-rich trajectories, which allow a mapper robot to close many loops and for which precise maps can therefore be assumed. The same cannot be said of the newly emerging vision-only autonomous navigation systems, which typically operate on loop-less trajectories (e.g., straight paths). In this paper, we address this limitation by merging the two maps. Our study is motivated by the observation that even when neither the query map nor the reference map contains a loop, many loops can often be obtained in the merged map. We add two new aspects to MM: (1) map retrieval with compact and discriminative binary features powered by a deep convolutional neural network (DCNN), which efficiently generates a small number of good initial alignment hypotheses; and (2) map merging, which jointly deforms the two maps to minimize the differences in shape between them. A preemption scheme is introduced to avoid excessive evaluation of useless MM hypotheses. For experimental investigation, we created a novel collection of uncertain loop-less maps using the recently published North Campus Long-Term (NCLT) dataset and its ground-truth GPS data. The results obtained with this map collection confirm that our approach improves on previous MM approaches.
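As a point of reference for the classical definition above, the following is a minimal sketch of estimating a 3-DOF rigid transformation between two 2D point sets. It is not the method proposed in this paper: it assumes that point-to-point correspondences between the query and reference maps are already known, and it uses the standard closed-form least-squares solution. The function names `estimate_rigid_2d` and `apply_rigid_2d` are hypothetical and chosen only for illustration.

```python
import math


def apply_rigid_2d(point, theta, tx, ty):
    """Rotate a 2D point by theta (radians), then translate by (tx, ty)."""
    x, y = point
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y + tx, s * x + c * y + ty)


def estimate_rigid_2d(query, reference):
    """Least-squares (theta, tx, ty) that maps query points onto reference points.

    Assumption for illustration: query[i] corresponds to reference[i].
    """
    n = len(query)
    if n != len(reference) or n < 2:
        raise ValueError("need at least two paired points")

    # Centroids of both point sets.
    qcx = sum(p[0] for p in query) / n
    qcy = sum(p[1] for p in query) / n
    rcx = sum(p[0] for p in reference) / n
    rcy = sum(p[1] for p in reference) / n

    # Accumulate dot and cross products of the centered point pairs.
    dot = 0.0
    cross = 0.0
    for (qx, qy), (rx, ry) in zip(query, reference):
        ax, ay = qx - qcx, qy - qcy
        bx, by = rx - rcx, ry - rcy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    # Optimal rotation angle, then the translation that aligns the centroids.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    tx = rcx - (c * qcx - s * qcy)
    ty = rcy - (s * qcx + c * qcy)
    return theta, tx, ty
```

A single rigid transformation of this kind cannot absorb the accumulated drift of a loop-less map, which is the limitation that motivates the deformable formulation described in the abstract.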

Loop closure detection in loop-less maps

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
