Paper:

Near-Field Touch Interface Using Time-of-Flight Camera

Lixing Zhang*,** and Takafumi Matsumaru**

*Department of Automation, Shanghai Jiao Tong University

800 Dongchuan Rd., Minhang District, Shanghai 200240, China

**Graduate School of Information, Production and Systems, Waseda University

2-7 Hibikino, Wakamatsu-ku, Kitakyushu, Fukuoka 808-0135, Japan

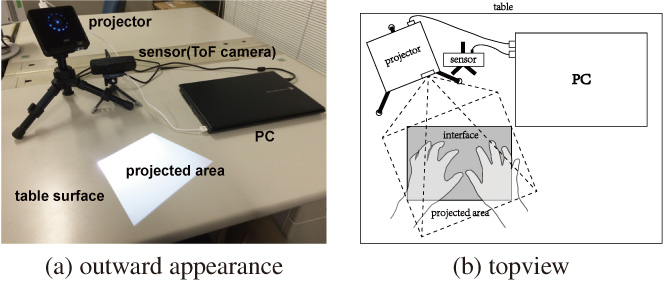

Near-field touch interface system (NFTIS)

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.