Paper:

Mobile Robot Navigation Utilizing the WEB Based Aerial Images Without Prior Teaching Run

Satoshi Muramatsu*, Tetsuo Tomizawa**, Shunsuke Kudoh***, and Takashi Suehiro***

*Tokai University

4-1-1 Kitakaname, Hiratsuka, Kanagawa 259-1292, Japan

**National Defense Academy of Japan

1-10-20 Hashirimizu, Yokosuka, Kanagawa 239-8686, Japan

***The University of Electro-Communications

1-5-1 Choufugaoka, Choufu, Tokyo 182-8585, Japan

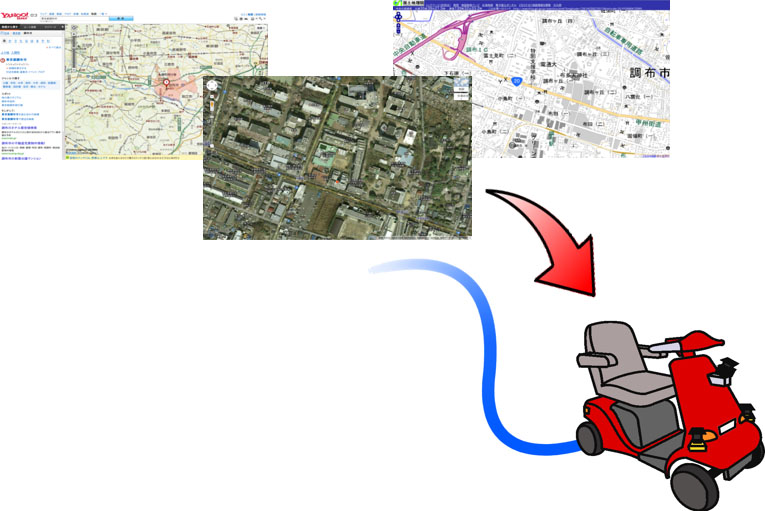

In order to realize tasks such as goods conveyance by robot, localization of the robot position is a fundamental technology component. Map matching is one of the localization techniques. In map matching, the map data for localization is usually created by operating the robot and measuring the environment beforehand (a teaching run). This operation requires a lot of time and work. In recent years, owing to improved Internet services, aerial image data can easily be obtained from Google Maps and similar services. Therefore, we utilize aerial images as map data for mobile robot localization and navigation without a teaching run. In this paper, we propose a robot localization and navigation technique using aerial images. We verified the proposed technique through localization and autonomous running experiments.
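As a rough illustration of map matching in general (a generic sketch, not the authors' method), localization against a map derived from an aerial image can be pictured as scoring candidate robot poses. This sketch assumes the aerial image has already been converted into a binary grid whose occupied cells mark edges such as building outlines or road boundaries, and that the robot supplies a 2D laser scan in its own frame. Each candidate pose (x, y, theta) is scored by how many transformed scan points land on occupied cells, and the best-scoring pose is returned. The function names `transform_scan`, `match_score`, and `localize`, the grid convention, and the exhaustive candidate search are all hypothetical choices made for this sketch.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical conventions for this sketch only:
# grid[row][col] == 1 marks an edge/obstacle cell assumed to be extracted from an aerial image.
Grid = List[List[int]]
Point = Tuple[float, float]          # (x, y)
Pose = Tuple[float, float, float]    # (x, y, theta) in map coordinates


def transform_scan(scan: Sequence[Point], pose: Pose) -> List[Point]:
    """Rotate and translate scan points from the robot frame into the map frame."""
    x, y, th = pose
    c, s = math.cos(th), math.sin(th)
    return [(x + c * px - s * py, y + s * px + c * py) for px, py in scan]


def match_score(grid: Grid, scan: Sequence[Point], pose: Pose, resolution: float) -> int:
    """Count how many transformed scan points fall on occupied grid cells."""
    rows, cols = len(grid), len(grid[0])
    score = 0
    for mx, my in transform_scan(scan, pose):
        col = int(math.floor(mx / resolution))  # x maps to column
        row = int(math.floor(my / resolution))  # y maps to row
        if 0 <= row < rows and 0 <= col < cols and grid[row][col] == 1:
            score += 1
    return score


def localize(grid: Grid, scan: Sequence[Point], candidates: Sequence[Pose],
             resolution: float) -> Tuple[Pose, int]:
    """Exhaustively evaluate candidate poses and return the best one with its score."""
    best = max(candidates, key=lambda p: match_score(grid, scan, p, resolution))
    return best, match_score(grid, scan, best, resolution)


if __name__ == "__main__":
    # Toy map: a straight wall occupying column 5 of a 10 x 10 grid (1 m cells).
    grid = [[1 if c == 5 else 0 for c in range(10)] for _ in range(10)]
    # Three scan hits 3.5 m ahead of the robot.
    scan = [(3.5, -1.0), (3.5, 0.0), (3.5, 1.0)]
    candidates = [(1.0, 5.0, 0.0), (2.0, 5.0, 0.0), (3.0, 5.0, 0.0), (2.0, 5.0, math.pi)]
    print(localize(grid, scan, candidates, 1.0))
```

A practical system would more likely replace the exhaustive search with a particle filter or a coarse-to-fine search and use a likelihood instead of a raw hit count; those refinements are outside this sketch.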

Mobile robot navigation without prior teaching run

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.